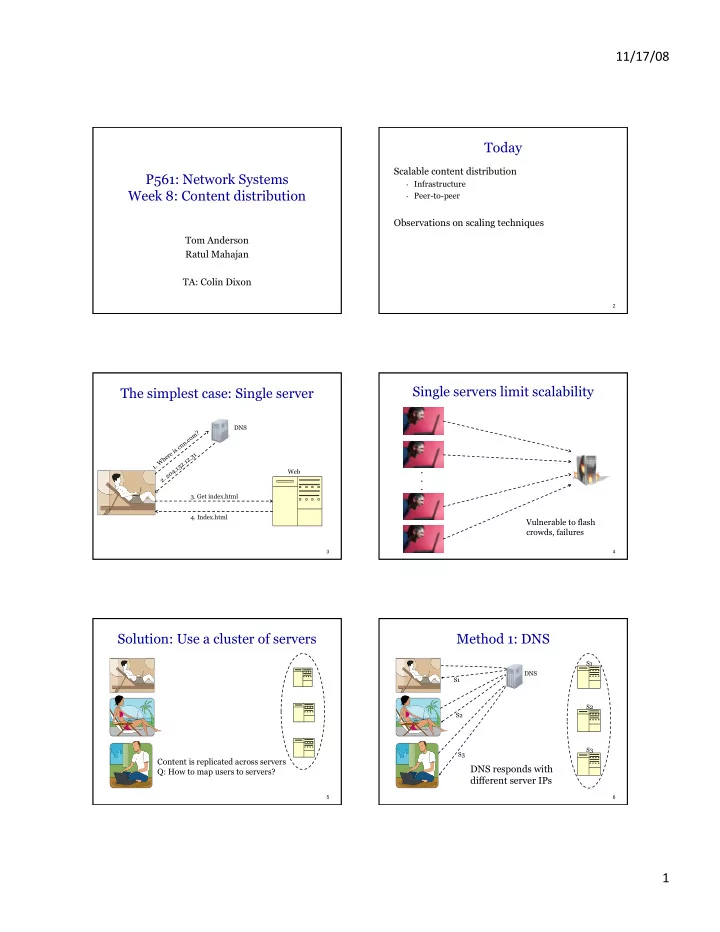

11/17/08

P561: Network Systems
Week 8: Content distribution
Tom Anderson, Ratul Mahajan
TA: Colin Dixon

Today

Scalable content distribution
• Infrastructure
• Peer-to-peer
Observations on scaling techniques

The simplest case: Single server

[Figure: the client asks DNS "Where is cnn.com?" (step 1) and receives the server's IP address (step 2); it then sends "Get index.html" to the Web server (step 3) and receives index.html (step 4).]

Single servers limit scalability

Vulnerable to flash crowds, failures

Solution: Use a cluster of servers

[Figure: servers S1, S2, S3.]
Content is replicated across servers
Q: How to map users to servers?

Method 1: DNS

[Figure: DNS in front of servers S1, S2, S3.]
DNS responds with different server IPs
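A minimal sketch of Method 1, assuming a toy authoritative server that simply rotates through the replica addresses. The class name `RoundRobinDNS`, the hostname and the 192.0.2.x addresses are hypothetical and chosen only for illustration.

```python
from itertools import cycle


class RoundRobinDNS:
    """Toy authoritative server: each query for a name gets the next replica IP."""

    def __init__(self, records):
        # records: hostname -> list of replica IPs (S1, S2, S3 on the slide)
        self._rotors = {name: cycle(ips) for name, ips in records.items()}

    def resolve(self, name):
        return next(self._rotors[name])


# Hypothetical replica addresses for one site.
dns = RoundRobinDNS({"www.example.com": ["192.0.2.1", "192.0.2.2", "192.0.2.3"]})
answers = [dns.resolve("www.example.com") for _ in range(4)]
print(answers)
```

The sketch never consults server load or health: successive queries are spread over the replicas, but the mapping is blind to what happens after the answer is handed out.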

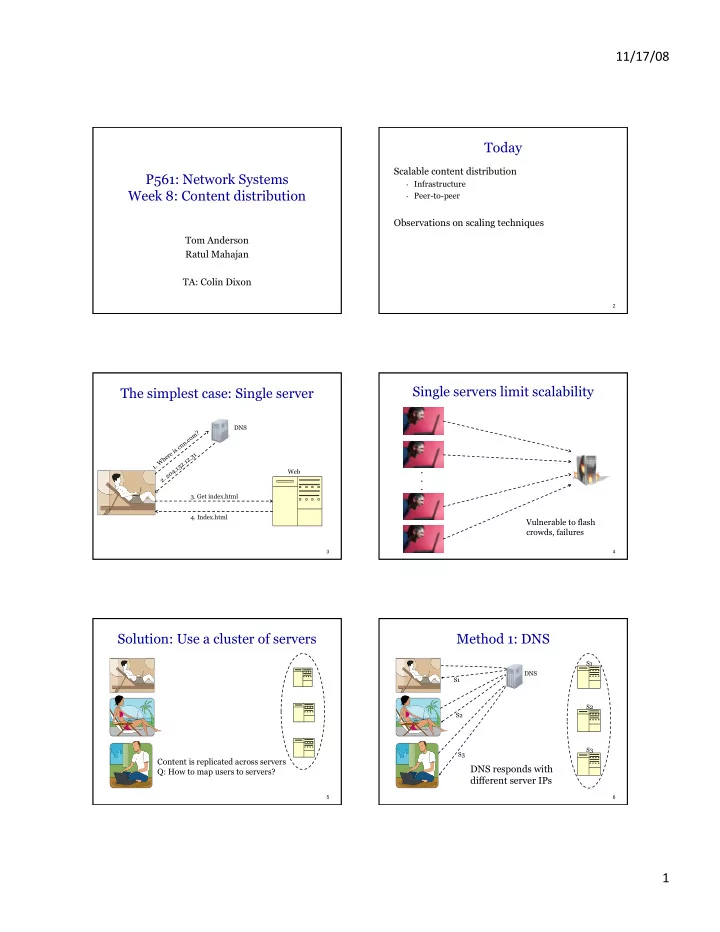

Implications of using DNS

Names do not mean the same thing everywhere
Coarse granularity of load-balancing
• Because DNS servers do not typically communicate with content servers
• Hard to account for heterogeneity among content servers or requests
Hard to deal with server failures
Based on a topological assumption that is true often (today) but not always
• End hosts are near resolvers
Relatively easy to accomplish

Method 2: Load balancer

[Figure: a load balancer in front of the servers.]
Load balancer maps incoming connections to different servers

Implications of using load balancers

Can achieve a finer degree of load balancing
Another piece of equipment to worry about
• May hold state critical to the transaction
• Typically replicated for redundancy
• Fully distributed, software solutions are also available (e.g., Windows NLB) but they serve limited topologies

Single location limits performance

Solution: Geo-replication

Mapping users to servers

1. Use DNS to map a user to a nearby data center
   − Anycast is another option (used by DNS)
2. Use a load balancer to map the request to lightly loaded servers inside the data center
In some cases, application-level redirection can also occur
• E.g., based on where the user profile is stored
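The two-step mapping can be sketched as follows. This is an illustration under stated assumptions, not how any particular deployment works: "nearby" is approximated by the lowest measured latency, "lightly loaded" by the fewest active connections, and the data-center names and numbers are made up.

```python
def pick_data_center(latency_ms):
    """Step 1 (DNS): choose the data center with the lowest latency to the user."""
    return min(latency_ms, key=latency_ms.get)


def pick_server(active_connections):
    """Step 2 (load balancer): choose the server with the fewest active connections."""
    return min(active_connections, key=active_connections.get)


# Hypothetical measurements for one user.
latency_ms = {"us-west": 18.0, "us-east": 74.0, "europe": 150.0}
servers = {
    "us-west": {"s1": 42, "s2": 7, "s3": 19},
    "us-east": {"s1": 3, "s2": 5},
    "europe": {"s1": 11},
}

dc = pick_data_center(latency_ms)
server = pick_server(servers[dc])
print(dc, server)
```

Note that the load balancer only chooses among servers in the data center DNS already picked, so a lightly loaded server in a distant data center is never considered.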

Question

Did anyone change their mind after reading other blog entries?

Problem

It can be too expensive to set up multiple data centers across the globe
• Content providers may lack expertise
• Need to provision for peak load
Unanticipated need for scaling (e.g., flash crowds)
Solution: 3rd party Content Distribution Networks (CDNs)
• We'll talk about Akamai (some slides courtesy Bruce Maggs)

Akamai

Goal(?): build a high-performance global CDN that is robust to server and network hotspots
Overview:
• Deploy a network of Web caches
• Users fetch the top-level page (index.html) from the origin server (cnn.com)
• The embedded URLs are Akamaized
• The page owner retains control over what gets served through Akamai
• Use DNS to select a nearby Web cache
• Return different server based on client location

Akamaizing Web pages

    <html>
    <head>
    <title>Welcome to xyz.com!</title>
    </head>
    <body>
    <img src="
    <img src="
    <h1>Welcome to our Web site!</h1>
    <a href="page2.html">Click here to enter</a>
    </body>
    </html>

Akamai DNS Resolution

[Figure: 16-step resolution path from the end user (browser's cache, OS) through the local name server to the root (InterNIC) for .com/.net, xyz.com's nameserver, the Akamai high-level DNS servers (akamai.net, g.akamai.net) and the Akamai low-level DNS servers (a212.g.akamai.net). Addresses shown in the figure: 10.10.123.5, 15.15.125.6, 20.20.123.55, 30.30.123.5.]

DNS Time-To-Live

Time To Live:
• Root: 1 day
• HLDNS: 30 min.
• LLDNS: 30 sec.
TTL of DNS responses gets shorter further down the hierarchy
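To illustrate why shorter TTLs further down the hierarchy let the final server choice change quickly, here is a small sketch of a resolver cache loaded with the three TTLs from the slide (1 day, 30 min, 30 sec). The `TTLCache` class, the entry names and the explicit `now` clock are hypothetical simplifications, not a description of a real resolver.

```python
class TTLCache:
    """Toy resolver cache: an entry is usable until its TTL has elapsed."""

    def __init__(self):
        self._entries = {}  # name -> (answer, expiry time in seconds)

    def put(self, name, answer, ttl_s, now):
        self._entries[name] = (answer, now + ttl_s)

    def get(self, name, now):
        entry = self._entries.get(name)
        if entry is None or now >= entry[1]:
            return None  # missing or expired: that level must be queried again
        return entry[0]


cache = TTLCache()
cache.put("root referral", "HLDNS", ttl_s=24 * 3600, now=0)
cache.put("HLDNS referral", "LLDNS", ttl_s=30 * 60, now=0)
cache.put("LLDNS answer", "edge server IP", ttl_s=30, now=0)

# One minute later only the low-level answer has expired.
names = ("root referral", "HLDNS referral", "LLDNS answer")
stale = [name for name in names if cache.get(name, now=60) is None]
print(stale)
```

In this sketch, a client that comes back after a minute re-asks only the low-level server, so the choice of edge server can be revised often while the upper levels are rarely contacted.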

DNS maps

Map creation is based on measurements of:
− Internet congestion
− System loads
− User demands
− Server status
Maps are constantly recalculated:
− Every few minutes for HLDNS
− Every few seconds for LLDNS

Measured Akamai performance (Cambridge)

[Figure: measured performance, Cambridge client.]
[The measured performance of content distribution networks, 2000]

Measured Akamai performance (Boulder)

[Figure: measured performance, Boulder client.]

Key takeaways

Pretty good overall
• Not optimal but successfully avoids very bad choices
This is often enough in many systems; finding the absolute optimal is a lot harder
Performance varies with client location

Aside: Re-using Akamai maps

Can the Akamai maps be used for other purposes?
• By Akamai itself
  • E.g., to load content from origin to edge servers
• By others

Aside: Drafting behind Akamai (1/3) [SIGCOMM 2006]

[Figure: a source reaching a destination through one of several peers (Peer 1, ..., Peer).]
Goal: avoid any congestion near the source by routing through one of the peers

Aside: Drafting behind Akamai (2/3)

[Figure: source, destination, peers (Peer 1, ..., Peer), a DNS server, and Replicas 1 to 3.]
Solution: Route through peers close to replicas suggested by Akamai

Aside: Drafting behind Akamai (3/3)

[Figure: two panels, Taiwan-UK and UK-Taiwan, with the breakdowns 80% Taiwan, 15% Japan, 5% U.S. and 75% U.K., 25% U.S.]

Why does Akamai help even though the top-level page is fetched directly from the origin server?

[Figure: share of a page served by Akamai.]

Trends impacting Web cacheability (and Akamai-like systems)

Dynamic content
Personalization
Security
Interactive features
Content providers want user data
New tools for structuring Web applications
Most content is multimedia

Peer-to-peer content distribution

When you cannot afford a CDN
• For free or low-value (or illegal) content
Last week:
• Napster, Gnutella
• Do not scale
Today:
• BitTorrent (some slides courtesy Nikitas Liogkas)
• CoralCDN (some slides courtesy Mike Freedman)

BitTorrent overview

Key ideas beyond what we have seen so far:
• Break a file into pieces so that it can be downloaded in parallel
• Users interested in a file band together to increase its availability
• "Fair exchange" incents users to give-n-take rather than just take
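The first idea, breaking a file into pieces that can be fetched and checked independently, can be sketched as follows. The 4-byte piece size is chosen only to keep the example small, the helper names are hypothetical, and SHA-1 is used because it is the per-piece hash listed for the metadata file.

```python
import hashlib


def split_into_pieces(data, piece_size):
    """Cut the file into fixed-size pieces (the last one may be shorter)."""
    return [data[i:i + piece_size] for i in range(0, len(data), piece_size)]


def piece_hashes(pieces):
    """Per-piece SHA-1 digests, as they would be published with the file."""
    return [hashlib.sha1(p).digest() for p in pieces]


def verify_piece(index, piece, expected_hashes):
    """Check one downloaded piece against its published hash."""
    return hashlib.sha1(piece).digest() == expected_hashes[index]


data = b"hello torrent!"
pieces = split_into_pieces(data, piece_size=4)
hashes = piece_hashes(pieces)

# Pieces may arrive in any order from different peers; each is checked alone.
received = {}
for index in (3, 0, 2, 1):
    if verify_piece(index, pieces[index], hashes):
        received[index] = pieces[index]

rebuilt = b"".join(received[i] for i in range(len(pieces)))
print(rebuilt == data, verify_piece(0, b"evil", hashes))
```

Because every piece carries its own hash, a corrupted or forged piece can be rejected without discarding the rest of the download.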

BitTorrent terminology

Swarm: group of nodes interested in the same file
Tracker: a node that tracks swarm's membership
Seed: a peer that has the entire file
Leecher: a peer with incomplete file

Joining a torrent

[Figure: a new leecher (1) gets the metadata file from a website, (2) joins via the tracker, (3) receives the peer list, and (4) sends data requests to a seed/leecher.]
Metadata file contains
1. The file size
2. The piece size
3. SHA-1 hash of pieces
4. Tracker's URL

Downloading data

[Figure: a seed and leechers A to D exchanging pieces; a leecher advertises "I have!" for a received piece.]
● Download pieces in parallel
● Verify them using hashes
● Advertise received pieces to the entire peer list
● Look for the rarest pieces

Uploading data (unchoking)

[Figure: a seed and leechers A to C.]
● Periodically calculate data-receiving rates
● Upload to (unchoke) the fastest k downloaders
  • Split upload capacity equally
● Optimistic unchoking
  ▪ periodically select a peer at random and upload to it
  ▪ continuously look for the fastest partners

Incentives and fairness in BitTorrent

Embedded in choke/unchoke mechanism
• Tit-for-tat
Not perfect, i.e., several ways to "free-ride"
• Download only from seeds; no need to upload
• Connect to many peers and pick strategically
• Multiple identities
Good enough in practice?
• Need some (how much?) altruism for the system to function well?

BitTyrant [NSDI 2006]

Can do better with some intelligence
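The unchoking rule from the "Uploading data" slide can be sketched as below. This is a simplified illustration, not the client's actual algorithm: "fastest" is read as the peers from which we currently receive data fastest, the optimistic peer is assumed to share the upload capacity equally with the regular ones, and the function names, rates and `k` are hypothetical.

```python
import random


def choose_unchoked(receive_rate, k, rng=random):
    """Return (regular, optimistic): the k fastest peers plus one random other peer."""
    ranked = sorted(receive_rate, key=receive_rate.get, reverse=True)
    regular = ranked[:k]
    others = ranked[k:]
    # Optimistic unchoke: give one randomly chosen choked peer a chance.
    optimistic = rng.choice(others) if others else None
    return regular, optimistic


def upload_share(regular, optimistic, capacity):
    """Split upload capacity equally among all unchoked peers (an assumption)."""
    unchoked = regular + ([optimistic] if optimistic is not None else [])
    if not unchoked:
        return {}
    return {peer: capacity / len(unchoked) for peer in unchoked}


# Hypothetical receive rates in KB/s, measured over the last period.
rates = {"A": 120.0, "B": 30.0, "C": 300.0, "D": 75.0, "E": 0.0}
regular, optimistic = choose_unchoked(rates, k=3, rng=random.Random(0))
shares = upload_share(regular, optimistic, capacity=400.0)
print(regular, optimistic, shares)
```

Re-running the selection every period is what makes the scheme tit-for-tat: a peer that stops sending data drops out of the top k, while the random slot lets new or slow peers prove themselves.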

CoralCDN

Goals and usage model are similar to Akamai
• Minimize load on origin server
• Modified URLs and DNS redirections
It is p2p but end users are not necessarily peers
• CDN nodes are distinct from end users
Another perspective: It presents a possible (open) way to build an Akamai-like system

CoralCDN overview

[Figure: browsers, an origin server, and Coral nodes, each running httpprx and dnssrv.]
Implements an open CDN to which anyone can contribute
CDN only fetches once from origin server

CoralCDN components

[Figure: a browser's resolver looks up www.x.com.nyud.net; dnssrv performs DNS redirection and returns a proxy (216.165.108.10), preferably one near the client; httpprx performs cooperative Web caching, fetching data from nearby proxies or from the origin server.]

How to find close proxies and cached content?

DHTs can do that but a straightforward use has significant limitations
How to map users to nearby proxies?
• DNS servers measure paths to clients
How to transfer data from a nearby proxy?
• Clustering and fetch from the closest cluster
How to prevent hotspots?
• Rate-limiting and multi-inserts
Key enabler: DSHT (Coral)

DSHT: Hierarchy

Thresholds: None, < 60 ms, < 20 ms
A node has the same Id at each level

DSHT: Routing

Thresholds: None, < 60 ms, < 20 ms
Continues only if the key is not found at the closest cluster
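The routing rule, search the closest cluster first and continue to a wider one only if the key is not found, can be sketched as below. Each cluster is modelled as a plain dictionary rather than a real DHT, and the keys, proxy names and function name are hypothetical.

```python
def hierarchical_lookup(key, clusters):
    """clusters: list of (threshold label, table), ordered from closest to global."""
    for label, table in clusters:
        if key in table:
            return label, table[key]  # found: do not go to a wider cluster
    return None, None


# Hypothetical contents of the three levels seen by one node.
clusters = [
    ("< 20 ms", {"url-a": "proxy-1"}),
    ("< 60 ms", {"url-a": "proxy-7", "url-b": "proxy-4"}),
    ("none", {"url-a": "proxy-9", "url-b": "proxy-8", "url-c": "proxy-2"}),
]

for key in ("url-a", "url-b", "url-c", "url-z"):
    print(key, hierarchical_lookup(key, clusters))
```

A key cached nearby is answered by the nearby cluster even though more distant copies exist, which keeps both the lookup and the later data transfer local when possible.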
