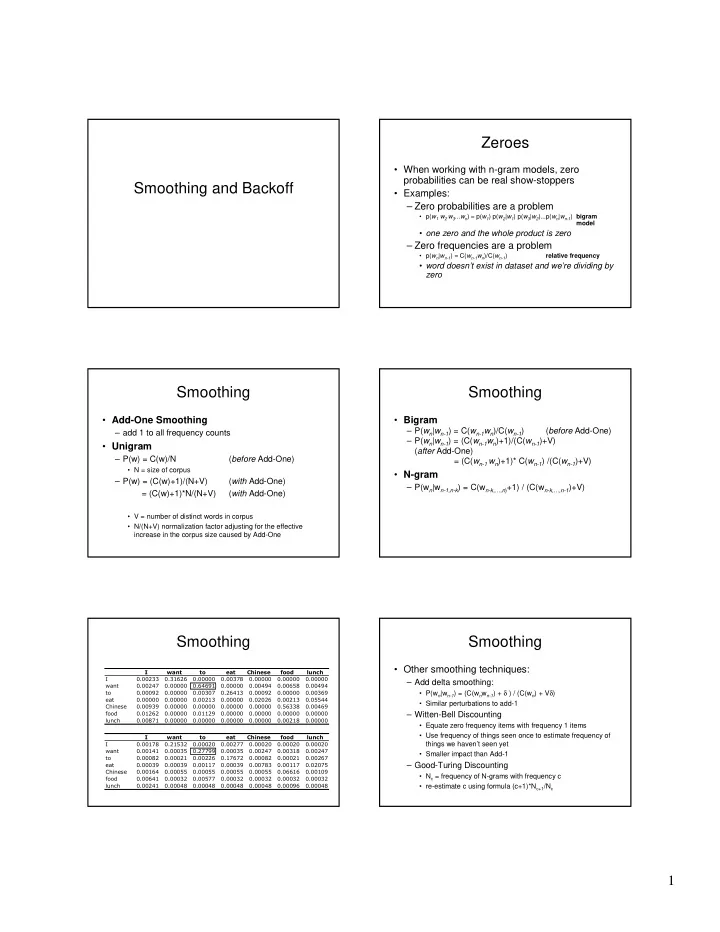

Zeroes • When working with n-gram models, zero probabilities can be real show-stoppers Smoothing and Backoff • Examples: – Zero probabilities are a problem • p( w 1 w 2 w 3 ...w n ) ≈ p( w 1 ) p( w 2 | w 1 ) p( w 3 | w 2 )...p( w n | w n-1 ) bigram model • one zero and the whole product is zero – Zero frequencies are a problem relative frequency • p( w n | w n-1 ) = C( w n-1 w n )/C( w n-1 ) • word doesn’t exist in dataset and we’re dividing by zero Smoothing Smoothing • Add-One Smoothing • Bigram – P( w n | w n-1 ) = C( w n-1 w n )/C( w n-1 ) ( before Add-One) – add 1 to all frequency counts – P( w n | w n-1 ) = (C( w n-1 w n )+1)/(C( w n-1 )+V) • Unigram ( after Add-One) – P(w) = C(w)/N ( before Add-One) = (C( w n-1 w n )+1)* C( w n-1 ) /(C( w n-1 )+V) • N = size of corpus • N-gram – P(w) = (C(w)+1)/(N+V) ( with Add-One) – P(w n |w n-1,n-k ) = C(w n-k,…,n ) +1) / (C(w n-k,…,n-1 )+V) = (C(w)+1)*N/(N+V) ( with Add-One) • V = number of distinct words in corpus • N/(N+V) normalization factor adjusting for the effective increase in the corpus size caused by Add-One Smoothing Smoothing • Other smoothing techniques: � ���� �� ��� ������� ���� ����� � ������� ������� ������� ������� ������� ������� ������� – Add delta smoothing: ���� ������� ������� ������� ������� ������� ������� ������� �� ������� ������� ������� ������� ������� ������� ������� • P(w n |w n-1 ) = (C(w n w n-1 ) + δ ) / (C(w n ) + V δ ) ��� ������� ������� ������� ������� ������� ������� ������� • Similar perturbations to add-1 ������� ������� ������� ������� ������� ������� ������� ������� ���� ������� ������� ������� ������� ������� ������� ������� – Witten-Bell Discounting ����� ������� ������� ������� ������� ������� ������� ������� • Equate zero frequency items with frequency 1 items • Use frequency of things seen once to estimate frequency of � ���� �� ��� ������� ���� ����� � ������� ������� ������� ������� ������� ������� ������� things we haven’t seen yet ���� ������� ������� ������� ������� ������� ������� ������� • Smaller impact than Add-1 �� ������� ������� ������� ������� ������� ������� ������� ��� ������� ������� ������� ������� ������� ������� ������� – Good-Turing Discounting ������� ������� ������� ������� ������� ������� ������� ������� • N c = frequency of N-grams with frequency c ���� ������� ������� ������� ������� ������� ������� ������� ����� ������� ������� ������� ������� ������� ������� ������� • re-estimate c using formula (c+1)*N c+1 /N c 1

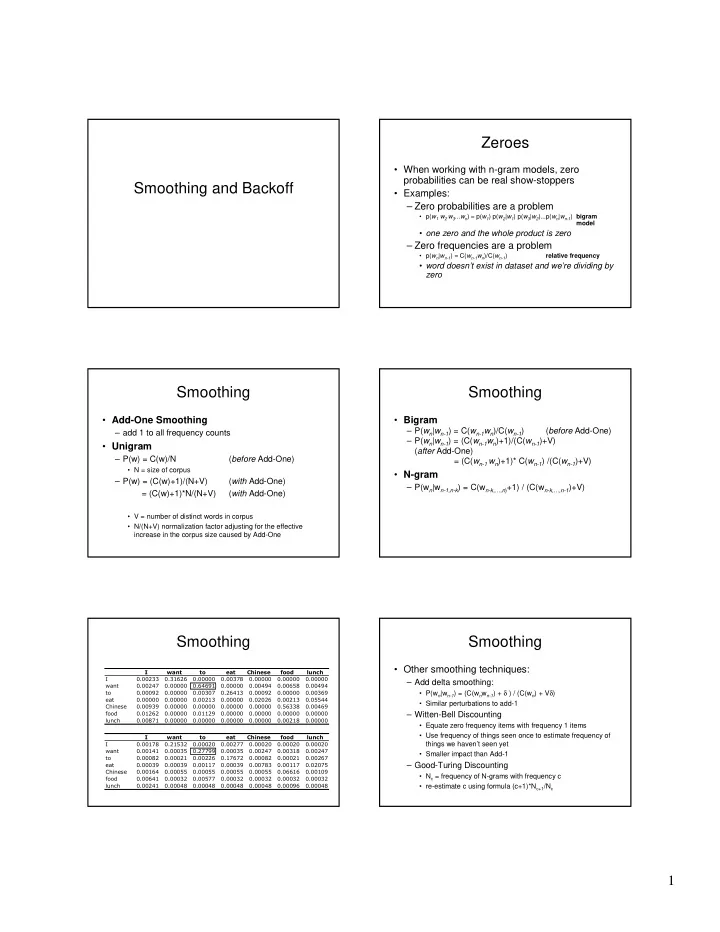

Good Turing Good Turing • Good Turing gives a smoothed count c * based • Basic concept: probability of events with counts > 1 is decreased (discounted) and on the set of N c for all c: probability of events with counts = 0 is increased N c +1 c* = ( c +1) -------- • Essentially we save some of the N c probability mass from seen events and • Example: revised count for bigrams that never make it available to unseen events occurred ( c 0 ) = c 1 * • Allows us to estimate the probability of zero-count events # of bigrams that occurred once ------------------------------------------- # bigrams that never occurred Good Turing Good Turing ������� • Bigram counts from 22 million AP newswire (Church & Gale 1991): ������� � �� ������� ������� ������ � � � � � � � � � � � �� � Good Turing Applying Good Turing • So we have these new counts • How do we get this number? • What do we do with them? – For bigrams, total vocabulary = – Apply them to our probability calculations! (unigram vocabulary) 2 – Thus, 74,671,100,000 = V 2 – (seen bigrams) 2

Uniform Good Turing Uniform Good Turing • Uniform application: – (Examples use bigrams) – To calculate the probability of any bigram, we use: • P ( w n |w n-1 ) = C(w n-1 w n ) / C(w n-1 ) – Apply the revised c * values to our probabilities – Thus revised c * substituted for C(w n-1 w n ) – Thus, if C(she drove) = 6, then c * = 5.19 * / C(w n-1 ) • P ( w n |w n-1 ) = c n – If C(she)=192, then • Revised P(drove|she) = 5.19/192 = .02703 (revised from .03125) Uniform Good Turing Applying Good Turing • Is a uniform application of Good Turing the right thing to do? • Can we assume that C(any unseen bigram) = C(any other unseen)? • Church and Gale 91 show a method for calculating the P(unseen bigram) from the P(unseen) and P(bigram) – What’s the probability of some unknown bigram? – Works only if the unigrams for both words – For example, if C(gave she) = 0, then c * =.000027 exist – If C(gave) = 154, then • P(gave she) = .000027/154 = .000000175 Unigram-sensitive Good Turing Good Turing • How it works (for unseen bigrams): • Katz 1987 showed that Good Turing for large counts reliable – Calculate the joint probability P(w n )P(w n+1 ) • Based on his work, smoothing in practice not – Group bigrams into bins based on similar joint applied to large c ’s. probability scores • Proposed some threshold k (he recommended • Predetermined set of ranges and thresholds 5) where c * = c for c > k . – Do Good Turing estimation on each of the • Still smooth for c <= k bins • May also want to treat n-grams with low counts • In other words, smooth (normalize the probability mass) across each of the bins separately (especially 1) as zeroes. 3

Backoff Backoff • Assumes additional sources of knowledge: • Preference rule: – If we don’t have a value for a particular • P ^ (w n |w n-2 w n-1 ) = trigram probability, P(w n |w n-1 w n-2 ) P(w n |w n-2 w n-1 ) if C(w n-2 w n-1 w n ) ≠ 0, else 1. – We can estimate the probability by using the α 1 P(w n |w n-1 ) if C(w n-1 w n ) ≠ 0, else 2. bigram probability: P(w n |w n-1 ) α 2 P(w n ) 3. – If we don’t have a value for this bigram, we α values are used to normalize probability • can look at the unigram probability: P(w n ). mass so that it still sums to 1, and to “smooth” – If we do have the trigram probability the lower order probabilities that are used P(w n |w n-1 w n-2 ), we use it. See J&M § 6.4 for details of how to calculate α • – We only “backoff” to the lower-order if no values (and M&S § 6.3.2 for additional evidence for the higher order. discussion) Interpolation Interpolation • Rather than choosing between different models • Generally, here’s what’s done: (trigram, bigram, unigram), as in backoff – Split data into training, held-out, and test • Interpolate the models when computing a – Train model on training set trigram – Use held-out to test different λ values and pick the • Proposed first by Jelinek and Mercer (1980) ones that works best • P ^ ( w n | w n-2 w n-1 ) = – Test the model on the test data λ 1 P( w n | w n-2 w n-1 ) + • Held-out: used to smooth model, and to ensure λ 2 P( w n | w n-2 ) + X model is not over-training (over-specifying) λ 3 P( w n ) • Cardinal sin: testing on training data X • Where Σ λ i = 1 i 4

Recommend

More recommend