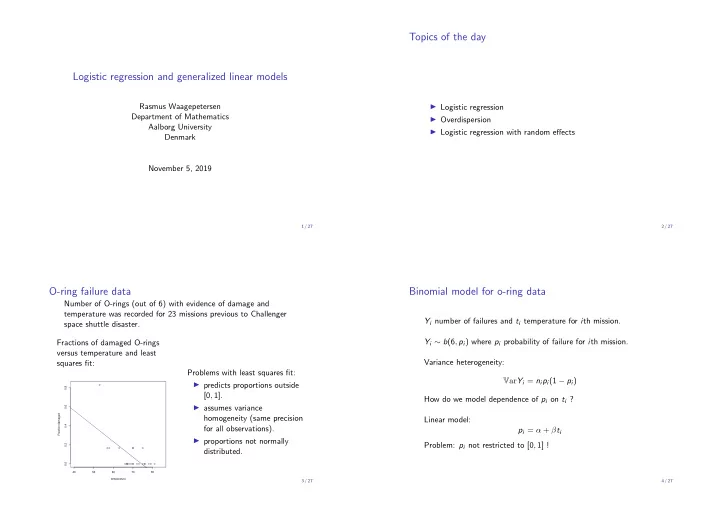

Logistic regression and generalized linear models

Rasmus Waagepetersen
Department of Mathematics
Aalborg University
Denmark
November 5, 2019

Topics of the day

◮ Logistic regression
◮ Overdispersion
◮ Logistic regression with random effects

O-ring failure data

The number of O-rings (out of 6) with evidence of damage, together with the temperature, was recorded for the 23 missions preceding the Challenger space shuttle disaster.

[Figure: fraction of damaged O-rings (0.0 to 0.8) versus temperature (40 to 80), with least squares fit.]

Problems with the least squares fit:

◮ it predicts proportions outside $[0, 1]$;
◮ it assumes variance homogeneity (the same precision for all observations);
◮ proportions are not normally distributed.

Binomial model for O-ring data

Let $Y_i$ be the number of failures and $t_i$ the temperature for the $i$th mission, and assume $Y_i \sim b(6, p_i)$, where $p_i$ is the probability of failure for the $i$th mission.

Variance heterogeneity: $\mathrm{Var}\, Y_i = n_i p_i (1 - p_i)$, with $n_i = 6$ here.

How do we model the dependence of $p_i$ on $t_i$? Linear model:

$$p_i = \alpha + \beta t_i$$

Problem: $p_i$ is not restricted to $[0, 1]$!
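The variance formula $\mathrm{Var}\, Y_i = n_i p_i (1 - p_i)$ can be checked numerically. The R sketch below evaluates it for $n = 6$ at a few failure probabilities and compares one value with a simulation. The helper name `binom_var` is hypothetical, and the probabilities are arbitrary illustration values, not estimates from the O-ring data.

```r
# Variance of Y ~ b(n, p); the helper name binom_var is illustrative only
binom_var <- function(n, p) n * p * (1 - p)

# Arbitrary failure probabilities: the variance changes with p, so
# observations with different p_i have different precision
p <- c(0.05, 0.2, 0.5, 0.8, 0.95)
v <- binom_var(6, p)
print(data.frame(p = p, variance = v))

# Monte Carlo check of the formula for p = 0.2 (theoretical value 0.96)
set.seed(1)
var_sim <- var(rbinom(1e5, size = 6, prob = 0.2))
print(var_sim)
```

The variance is largest at $p = 0.5$ and shrinks towards the ends of $[0, 1]$, which is exactly the heterogeneity that an ordinary least squares fit ignores.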

Logistic regression

Consider the logit transformation:

$$\eta = \mathrm{logit}(p) = \log\frac{p}{1 - p}$$

Note: logit is a one-to-one function from $]0, 1[$ onto $\mathbb{R}$. Hence we may apply a linear model to $\eta$ and transform back:

$$\eta = \alpha + \beta t \iff p = \frac{\exp(\alpha + \beta t)}{\exp(\alpha + \beta t) + 1}$$

Note: $p$ is guaranteed to be in $]0, 1[$.

Plots of logit, inverse logit, and probit

[Figure: left, logit(p) versus p; right, invlogit(eta) versus eta for the logistic and probit curves.]

Probit transformation: $p_i = \Phi(\eta_i)$, where $\Phi$ is the cumulative distribution function of a standard normal variable $U$, i.e. $\Phi(u) = P(U \le u)$.

The regression parameter for logistic regression is roughly 1.8 times the regression parameter for probit regression, since $\Phi$ is steeper than the inverse logit.

Logistic regression and odds

The odds for a failure in the $i$th mission is

$$o_i = \frac{p_i}{1 - p_i} = \exp(\eta_i)$$

and the odds ratio is

$$\frac{o_i}{o_j} = \exp(\eta_i - \eta_j) = \exp(\beta(t_i - t_j)).$$

Example: to double the odds we need

$$2 = \exp(\beta(t_i - t_j)) \iff t_i - t_j = \log(2)/\beta.$$

Estimation

Likelihood function for simple logistic regression $\mathrm{logit}(p_i) = \alpha + \beta x_i$ (omitting the binomial coefficients, which do not depend on the parameters):

$$L(\alpha, \beta) = \prod_i p_i^{y_i} (1 - p_i)^{n_i - y_i}, \qquad p_i = \frac{\exp(\alpha + \beta x_i)}{1 + \exp(\alpha + \beta x_i)}$$

The MLE $(\hat\alpha, \hat\beta)$ is found by iterative maximization (Newton-Raphson).

More generally we may have multiple explanatory variables:

$$\mathrm{logit}(p_i) = \beta_1 x_{1i} + \dots + \beta_p x_{pi}$$
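The transformations and the odds-doubling formula can be written out directly in R. This is a sketch: `logit` and `inv_logit` are hypothetical helper names (base R's `qlogis()` and `plogis()` compute the same functions), and the coefficient values are taken from the O-ring `glm` output shown in these slides (intercept 11.66299, temperature coefficient $-0.21623$).

```r
# Logit and inverse logit written out from the formulas above;
# base R's qlogis()/plogis() compute the same functions
logit <- function(p) log(p / (1 - p))
inv_logit <- function(eta) 1 / (1 + exp(-eta))  # equals exp(eta) / (exp(eta) + 1)

p <- c(0.1, 0.5, 0.9)
stopifnot(isTRUE(all.equal(inv_logit(logit(p)), p)))  # transform and back

# Probit uses the standard normal cdf instead: p = pnorm(eta)
eta <- c(-2, 0, 2)
print(cbind(eta, logistic = inv_logit(eta), probit = pnorm(eta)))

# Doubling the odds: t_i - t_j = log(2) / beta,
# using the coefficients from the O-ring glm output
alpha_hat <- 11.66299
beta_temp <- -0.21623
delta_t <- log(2) / beta_temp
print(delta_t)

# The odds ratio for a temperature change of delta_t is 2 at any baseline
odds <- function(t) exp(alpha_hat + beta_temp * t)
print(odds(60 + delta_t) / odds(60))
```

With $\hat\beta = -0.21623$ the formula gives $\log(2)/\hat\beta \approx -3.2$: the estimated odds of O-ring damage double for every drop of about 3.2 degrees in temperature. Writing the inverse logit as $1/(1 + \exp(-\eta))$ avoids the `Inf/Inf` that $\exp(\eta)/(\exp(\eta) + 1)$ produces for very large $\eta$.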

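The Newton-Raphson maximization of $L$ can be sketched in a few lines. The helper `newton_logistic` and the simulated data (40 observations with $n_i = 6$ and made-up true coefficients) are hypothetical. The result is compared with `glm`, run with a tightened convergence tolerance.

```r
# Newton-Raphson for binomial logistic regression, logit(p_i) = x_i' beta.
# newton_logistic is a hypothetical helper written for illustration.
newton_logistic <- function(X, y, n, tol = 1e-8, max_iter = 50) {
  beta <- rep(0, ncol(X))                 # start at beta = 0 (all p_i = 1/2)
  for (iter in seq_len(max_iter)) {
    p <- plogis(drop(X %*% beta))
    score <- crossprod(X, y - n * p)       # gradient of the log-likelihood
    w <- n * p * (1 - p)
    info <- crossprod(X, X * w)            # information matrix X' W X
    step <- drop(solve(info, score))
    beta <- beta + step
    if (max(abs(step)) < tol) break
  }
  beta
}

# Simulated data resembling the O-ring setup (all values hypothetical)
set.seed(2019)
m <- 40
temp <- runif(m, 50, 80)
n <- rep(6, m)
y <- rbinom(m, size = n, prob = plogis(10 - 0.15 * temp))

beta_nr <- newton_logistic(cbind(1, temp), y, n)
fit <- glm(cbind(y, n - y) ~ temp, family = binomial,
           control = glm.control(epsilon = 1e-12, maxit = 50))
print(rbind(newton = beta_nr, glm = coef(fit)))
```

For the canonical logit link the observed and expected information coincide, so this Newton-Raphson iteration is the same as the iteratively reweighted least squares algorithm used by `glm`. That is why the two sets of estimates agree.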
Deviance

Predicted observation for the current model: $\hat y_i = n_i \hat p_i$, where $\mathrm{logit}\, \hat p_i = \hat\beta_1 x_{1i} + \dots + \hat\beta_p x_{pi}$.

Saturated model: no restrictions on $p_i$, so $\hat p_i^{\mathrm{sat}} = y_i / n_i$ and $\hat y_i^{\mathrm{sat}} = y_i$ (perfect fit).

The residual deviance $D$ is $-2$ times the log of the ratio between $L(\hat\beta_1, \dots, \hat\beta_p)$ and the likelihood $L^{\mathrm{sat}}$ for the saturated model (with the convention $0 \log 0 = 0$):

$$D = 2 \sum_{i=1}^{n} \left[ y_i \log(y_i / \hat y_i) + (n_i - y_i) \log\big((n_i - y_i)/(n_i - \hat y_i)\big) \right]$$

If the $n_i$ are not too small, $D \approx \chi^2(n - p)$, where $p$ is the number of parameters in the current model. If this is the case, $D$ may be used for goodness-of-fit assessment.

The null deviance is $-2$ times the log of the ratio between the maximum likelihood for the model with only an intercept and $L^{\mathrm{sat}}$.

Pearson's $X^2$:

$$X^2 = \sum_{i=1}^{n} \frac{(y_i - n_i \hat p_i)^2}{n_i \hat p_i (1 - \hat p_i)}$$

is an asymptotically equivalent alternative to $D$.

Logistic regression in R

    > out=glm(cbind(damage,6-damage)~temp,family=binomial(logit))
    > summary(out)
    ...
    Coefficients:
                Estimate Std. Error z value Pr(>|z|)
    (Intercept) 11.66299    3.29626   3.538 0.000403 ***
    temp        -0.21623    0.05318  -4.066 4.78e-05 ***
    ...
        Null deviance: 38.898  on 22  degrees of freedom
    Residual deviance: 16.912  on 21  degrees of freedom
    ...

Here $n_i = 6$, so the residual deviance is approximately $\chi^2(21)$. The residual deviance (16.912) is not large compared with the number of degrees of freedom (21), so there is no indication of lack of fit.

Generalized linear models

Suppose $Z$ is a random variable with expectation $\mathrm{E} Z = \mu \in M$, where $M \subset \mathbb{R}$. Idea: use an invertible link function $g: M \to \mathbb{R}$ and apply linear modelling to $\eta = g(\mu)$.

Binomial data: $Z = Y/n$, $Y \sim b(n, p)$, and $\mu = p \in M = ]0, 1[$; $g$ is e.g. the logit or probit.

Poisson data: $Z \sim \mathrm{pois}(\lambda)$ and $\mu = \lambda > 0$; $g$ is e.g. the log.

Many other possibilities exist (McCullagh and Nelder, Faraway, Dobson), e.g. the gamma and inverse Gaussian distributions for positive continuous data.

For binomial and Poisson data, $\mathrm{Var}\, Z = V(\mu)$ is determined by $\mu$: $V(\mu) = \mu(1 - \mu)/n$ and $V(\mu) = \mu$, respectively.
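The deviance and Pearson formulas can be coded directly and checked against what `glm` reports. In this sketch the helpers `xlogx_ratio`, `binomial_deviance` and `pearson_x2` are hypothetical names, and the data are simulated (23 observations with $n_i = 6$ and made-up coefficients), not the O-ring data. The last line evaluates the $\chi^2(21)$ tail probability for the O-ring residual deviance 16.912 from the output above.

```r
# Residual deviance D and Pearson's X^2 written out from the formulas above.
# xlogx_ratio implements a * log(a / b) with the convention 0 * log(0) = 0.
xlogx_ratio <- function(a, b) ifelse(a == 0, 0, a * log(a / b))

binomial_deviance <- function(y, n, p_hat) {
  y_hat <- n * p_hat
  2 * sum(xlogx_ratio(y, y_hat) + xlogx_ratio(n - y, n - y_hat))
}

pearson_x2 <- function(y, n, p_hat) {
  sum((y - n * p_hat)^2 / (n * p_hat * (1 - p_hat)))
}

# Hypothetical simulated data with n_i = 6 trials per observation
set.seed(2019)
m <- 23
temp <- runif(m, 50, 80)
n <- rep(6, m)
y <- rbinom(m, size = n, prob = plogis(10 - 0.15 * temp))
fit <- glm(cbind(y, n - y) ~ temp, family = binomial)
p_hat <- fitted(fit)                       # fitted probabilities p_i

D <- binomial_deviance(y, n, p_hat)
X2 <- pearson_x2(y, n, p_hat)
print(c(D = D, deviance_glm = deviance(fit), X2 = X2))

# Goodness of fit for the O-ring output: residual deviance 16.912 on 21 df
print(pchisq(16.912, df = 21, lower.tail = FALSE))
```

The hand-coded $D$ matches the residual deviance that `glm` reports, and $X^2$ matches the sum of squared Pearson residuals. The tail probability for the O-ring fit is above 0.5, in line with the remark that its residual deviance is not large.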

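In R the ingredients of a GLM (link $g$, its inverse, and variance function $V(\mu)$) are bundled in family objects, which `glm` uses internally. The sketch below inspects a few of them. Note that the binomial `variance` component is $\mu(1 - \mu)$ per trial. With a `cbind(y, n - y)` response, the factor $1/n$ enters through the prior weights.

```r
# GLM family objects in base R bundle the link g, its inverse, and V(mu)
fam_logit  <- binomial(link = "logit")
fam_probit <- binomial(link = "probit")
fam_pois   <- poisson(link = "log")
fam_gamma  <- Gamma(link = "log")

mu <- c(0.1, 0.5, 0.9)
eta <- fam_logit$linkfun(mu)          # eta = g(mu) = log(mu / (1 - mu))
print(fam_logit$linkinv(eta))         # back to mu
print(fam_probit$linkfun(mu))         # probit link: qnorm(mu)

# Variance functions: binomial mu(1 - mu) per trial, Poisson mu, gamma mu^2
print(fam_logit$variance(mu))
print(fam_pois$variance(c(1, 4)))
print(fam_gamma$variance(c(1, 4)))
```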
Overdispersion

In some applications we see larger variability in the data than predicted by the variance formulas for the binomial distribution. This is sometimes revealed by a large residual deviance or $X^2$ relative to the degrees of freedom.

The reason may be either a systematic deficiency of the model (misspecified mean structure) or overdispersion, i.e. the variance of the observations is larger than the model predicts.

Overdispersion may be caused e.g. by genetic variation between subjects, variation between batches in laboratory experiments, or variation in environment in agricultural trials.

There are various ways to handle overdispersion; we will focus on a model-based approach: generalized linear mixed models.

Wheezing data

The wheezing (Ohio) data has variables resp (binary indicator of wheezing status), id, age (of child) and smoke (binary: mother smoker or not).

[Figure: aggregated data, wheeze proportion (0.00 to 0.20) versus age (-2.0 to 1.0); black = smoke, red = no smoke.]

Let $Y_{ij}$ denote the wheezing status of the $i$th child at the $j$th age. Assuming $Y_{ij} \sim b(1, p_{ij})$, we try the logistic regression

$$\mathrm{logit}(p_{ij}) = \beta_0 + \beta_1 \mathrm{age}_j + \beta_2 \mathrm{smoke}_i$$

Assuming independence between observations from the same child, and letting $Y_{i\cdot}$ be the sum of the observations from the $i$th child,

$$\begin{aligned} \mathrm{Var}\, Y_{i\cdot} &= \mathrm{Var}(Y_{i1} + Y_{i2} + Y_{i3} + Y_{i4}) = \mathrm{Var}\, Y_{i1} + \mathrm{Var}\, Y_{i2} + \mathrm{Var}\, Y_{i3} + \mathrm{Var}\, Y_{i4} \\ &= p_{i1}(1 - p_{i1}) + p_{i2}(1 - p_{i2}) + p_{i3}(1 - p_{i3}) + p_{i4}(1 - p_{i4}) \end{aligned}$$

Note: the variance of $Y_{i\cdot}$ is the same for all children with the same value of smoke.

We can calculate the above theoretical variance from the fitted model and compare it with the empirical variances:

Smoke = 0: theoretical 0.58, empirical 1.22.
Smoke = 1: theoretical 0.48, empirical 0.975.

In both groups the empirical variance is about twice the theoretical one, a sign of overdispersion.

Issue: observations from the same child are correlated. If we know the first observation is non-wheeze, then the three remaining observations are very likely non-wheeze too. The correlation can be due to genetics or the environment (more or less polluted) of the child.

We model these effects explicitly using a random effect:

$$\mathrm{logit}(p_{ij}) = \beta_0 + \beta_1 \mathrm{age}_j + \beta_2 \mathrm{smoke}_i + U_i$$

where the $U_i$ are $N(0, \tau^2)$ and independent among children.

Such a model can be fitted by the R procedure glmer, whose syntax is very closely related to that of lmer and glm.
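The theoretical variance $\sum_j p_{ij}(1 - p_{ij})$ can be evaluated at the `glm` estimates reported for the Ohio data. This sketch assumes that age is coded $-2, -1, 0, 1$, as on the axis of the plot. The helper `theoretical_var` is a hypothetical name. Under this coding the sketch gives roughly 0.48 for smoke = 0 and 0.58 for smoke = 1. Because the smoke coefficient is positive and every $p_{ij}$ is below 1/2, where $p(1 - p)$ increases in $p$, the larger theoretical variance belongs to the smoke = 1 group.

```r
# Theoretical Var(Y_i.) under independence, sum_j p_ij (1 - p_ij),
# evaluated at the glm estimates reported for the Ohio data.
# Assumption: age is coded -2, -1, 0, 1 as on the axis of the plot.
b0 <- -1.88373
b_age <- -0.11341
b_smoke <- 0.27214
age <- c(-2, -1, 0, 1)

theoretical_var <- function(smoke) {
  p <- plogis(b0 + b_age * age + b_smoke * smoke)   # p_i1, ..., p_i4
  sum(p * (1 - p))
}

print(c(smoke0 = theoretical_var(0), smoke1 = theoretical_var(1)))
```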

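To see how a child-level random effect produces overdispersion of the totals $Y_{i\cdot}$, the sketch below simulates from $\mathrm{logit}(p_{ij}) = \beta_0 + \beta_1 \mathrm{age}_j + U_i$. It uses made-up parameter values, not the Ohio estimates. It then compares the empirical variance of the totals with the independence formula, evaluated at the empirical marginal probabilities.

```r
# Simulate binary responses with a random intercept per child.
# All parameter values are hypothetical.
set.seed(42)
n_child <- 5000
age <- c(-2, -1, 0, 1)
beta0 <- -3
beta1 <- -0.2
tau <- 2                                   # standard deviation of U_i

u <- rnorm(n_child, mean = 0, sd = tau)    # U_i ~ N(0, tau^2)
eta <- outer(u, beta0 + beta1 * age, "+")  # n_child x 4 matrix of eta_ij
y <- matrix(rbinom(length(eta), size = 1, prob = plogis(eta)),
            nrow = n_child)

totals <- rowSums(y)                       # Y_i. for each child
p_marg <- colMeans(y)                      # marginal wheeze rate at each age
indep_var <- sum(p_marg * (1 - p_marg))    # variance if Y_ij were independent
empirical_var <- var(totals)

print(c(independence = indep_var, empirical = empirical_var))
```

Conditionally on $U_i$ the four observations are independent. However, children with a large $U_i$ tend to wheeze at every age, which inflates the variance of the totals beyond the independence formula.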
Logistic regression

    > fit=glm(resp~age+smoke,family=binomial,data=ohio)
    Coefficients:
                Estimate Std. Error z value Pr(>|z|)
    (Intercept) -1.88373    0.08384 -22.467   <2e-16 ***
    age         -0.11341    0.05408  -2.097   0.0360 *
    smoke        0.27214    0.12347   2.204   0.0275 *
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
        Null deviance: 1829.1  on 2147  degrees of freedom
    Residual deviance: 1819.9  on 2145  degrees of freedom

According to the above results, age and smoke are both significant at the 5% level.

The $\chi^2$ distribution of the residual deviance is not trustworthy here, since $n_i = 1$.

We can increase $n_i$ by aggregating over the 8 categories of age × smoke, but then the variability between children is hidden.

Mixed model analysis

    > fiter=glmer(resp~age+smoke+(1|id),family=binomial,data=ohio)
    > summary(fiter)
    Random effects:
     Groups Name        Variance Std.Dev.
     id     (Intercept) 5.491    2.343
    Number of obs: 2148, groups: id, 537
    Fixed effects:
                Estimate Std. Error z value Pr(>|z|)
    (Intercept) -3.37396    0.27496 -12.271   <2e-16 ***
    age         -0.17677    0.06797  -2.601   0.0093 **
    smoke        0.41478    0.28705   1.445   0.1485

Now only age is significant at the 5% level. Note the large variance 5.491 for the $U_i$.

Interpretation of variance of random effects

The variance 5.491 corresponds to the standard deviation 2.343. This means that an approximate 95% probability interval for $U_i$ (plus or minus 2 standard deviations) is $[-4.686, 4.686]$.

A large part of the variation is explained by the $U_i$ relative to the fixed effects: a spread of $\pm 4.686$ on the logit scale dwarfs the estimated effects of age ($-0.177$ per unit) and smoke ($0.415$).
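The interval for $U_i$ can be translated to the probability scale using the reported `glmer` estimates. This sketch assumes, as for the plot, that age = 0 is the centre of the age coding, and it looks at a child of a non-smoking mother. It also shows the exact normal 95% interval ($\pm 1.96$ standard deviations) next to the $\pm 2$ standard deviation rule used above.

```r
# Interpreting the random-intercept standard deviation from the glmer output
tau_hat <- 2.343
b0 <- -3.37396          # fixed intercept (age = 0, smoke = 0)
b_age <- -0.17677
b_smoke <- 0.41478

# Interval used on the slide: +/- 2 standard deviations
u_2sd <- c(-2, 2) * tau_hat
# Exact normal 95% interval, for comparison: +/- 1.96 standard deviations
u_95 <- qnorm(c(0.025, 0.975)) * tau_hat
print(rbind(u_2sd, u_95))

# Conditional wheeze probability for a child with U_i at the interval ends
# (age = 0, mother non-smoker)
p_ends <- plogis(b0 + u_2sd)
print(p_ends)

# Fixed effects on the logit scale: whole age range (-2 to 1) and smoke
print(c(age_range = abs(b_age) * 3, smoke = b_smoke))
```

Two such children can therefore have conditional wheeze probabilities ranging from well below 0.001 to nearly 0.8, whereas the fixed effects shift the logit by only about 0.4 to 0.5.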
