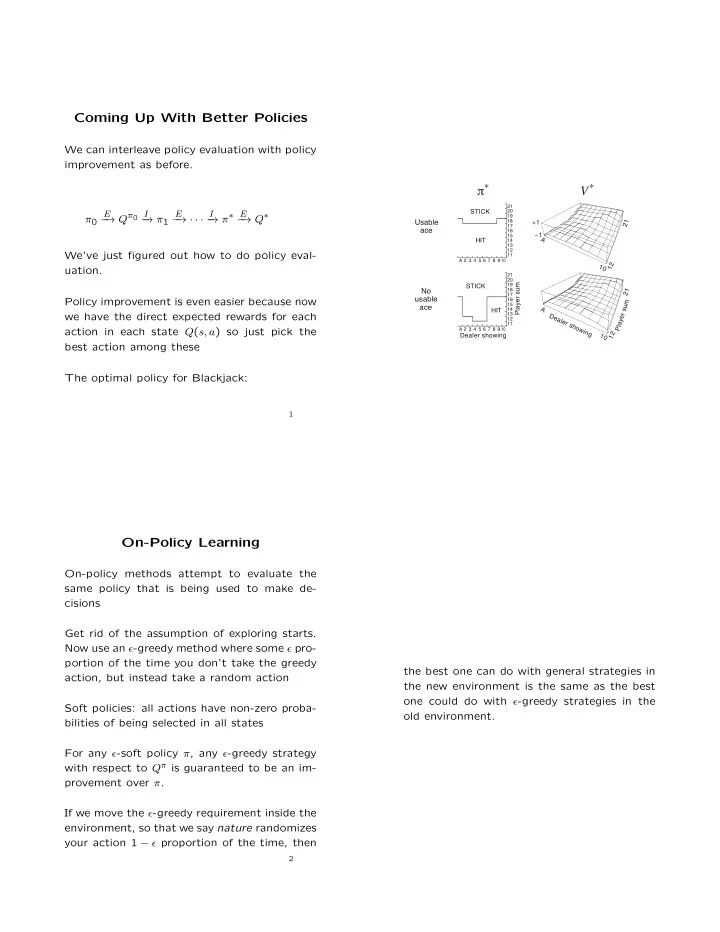

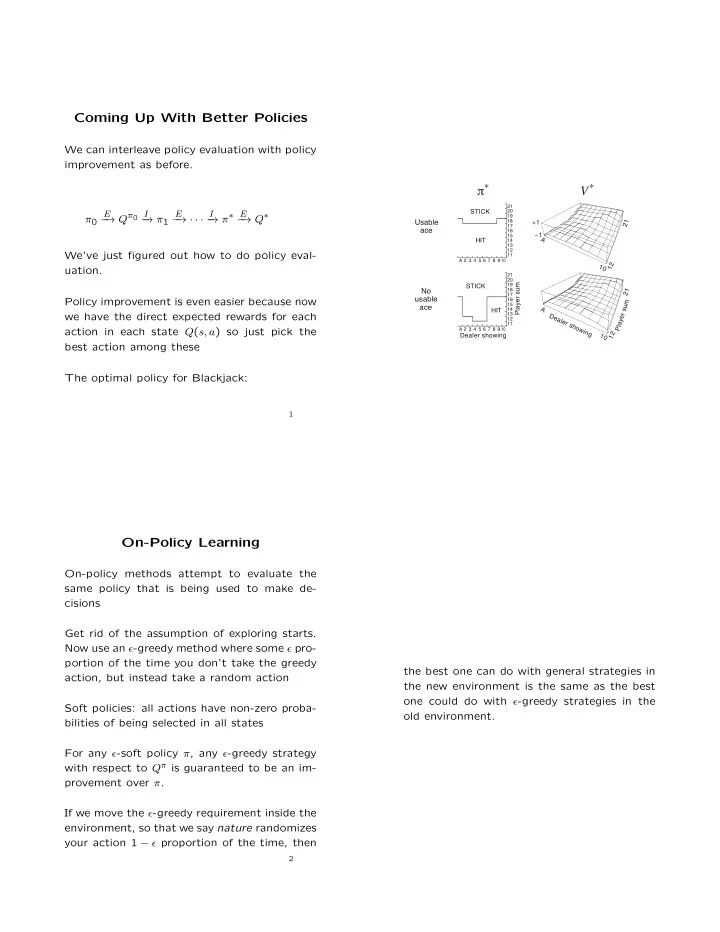

Coming Up With Better Policies We can interleave policy evaluation with policy improvement as before. π * V * 21 STICK 20 E ! Q ⇡ 0 I E ! · · · I ! ⇡ ⇤ E ! Q ⇤ 19 ⇡ 0 ! ⇡ 1 � � � � � 21 Usable 18 + 1 17 ace 16 − 1 15 A HIT 14 13 12 We’ve just figured out how to do policy eval- 11 A 2 3 4 5 6 7 8 9 10 12 1 0 uation. 21 20 Player sum STICK 19 No 18 21 17 usable Policy improvement is even easier because now 16 m 15 ace u 14 A HIT s we have the direct expected rewards for each 13 Dealer showing r e 12 y a 11 l P A 2 3 4 5 6 7 8 9 action in each state Q ( s, a ) so just pick the 10 12 Dealer showing 1 0 best action among these The optimal policy for Blackjack: 1 On-Policy Learning On-policy methods attempt to evaluate the same policy that is being used to make de- cisions Get rid of the assumption of exploring starts. Now use an ✏ -greedy method where some ✏ pro- portion of the time you don’t take the greedy the best one can do with general strategies in action, but instead take a random action the new environment is the same as the best one could do with ✏ -greedy strategies in the Soft policies: all actions have non-zero proba- old environment. bilities of being selected in all states For any ✏ -soft policy ⇡ , any ✏ -greedy strategy with respect to Q ⇡ is guaranteed to be an im- provement over ⇡ . If we move the ✏ -greedy requirement inside the environment, so that we say nature randomizes your action 1 � ✏ proportion of the time, then 2

Temporal-Di ff erence Learning What is MC estimation doing? Adaptive Dynamic Programming V ( s t ) (1 � ↵ t ) V ( s t ) + ↵ t R t Simple idea – take actions in the environment where R t is the return received following being (follow some strategy like ✏ -greedy with re- in state s t . spect to your current belief about what the value function is) and update your transition Suppose we switch to a constant step-size ↵ and reward models according to observations. (this is a trick often used in nonstationary en- Then update your value function by doing full vironments) dynamic programming on your current believed model. TD methods basically bootstrap o ff of exist- ing estimates instead of waiting for the whole In some sense this does as well as possible, reward sequence R to materialize subject to the agent’s ability to learn the tran- sition model. But it is highly impractical for V ( s t ) (1 � ↵ ) V ( s t ) + ↵ [ r t +1 + � V ( s t +1 )] anything with a big state space (Backgammon (based on actual observed reward and new state) has 10 50 states) This target uses the current value as an es- timate of V whereas the Monte Carlo target 3 4 Q-Learning: A Model-Free Approach Even without a model of the environment, you can learn e ff ectively. Q-learning is conceptually similar to TD-learning, but uses the Q function uses the sample reward as an estimate of the instead of the value function expected reward 1. In state s , choose some action a using pol- If we actually want to converge to the opti- icy derived from current Q (for example, mal policy, the decision-making policy must ✏ -greedy), resulting in state s 0 with reward be GLIE (greedy in the limit of infinite explo- r . ration) – that is, it must become more and more likely to take the greedy action, so that 2. Update: we don’t end up with faulty estimates (this Q ( s 0 , a 0 )) Q ( s, a ) (1 � ↵ ) Q ( s, a )+ ↵ ( r + � max problem can be exacerbated by the fact that a 0 we’re bootstrapping) You don’t need a model for either learning or action selection! As environments become more complex, using a model can help more (anecdotally) 5

Suppose our linear function predicts V ✓ ( s ) and Generalization in Reinforcement we actually would “like” it to have predicted Learning something else, say v . Define the error as E ( s ) = ( V ✓ ( s ) � v ) 2 / 2. Then the update rule So far, we’ve thought of Q functions and utility is: functions as being represented by tables ✓ i ✓ i � ↵@ E ( s ) @✓ i Question: can we parameterize the state space = ✓ i + ↵ ( v � V ✓ ( s )) @ V ✓ ( s ) so that we can learn (for example) a linear @✓ i function of the parameterization? If we look at the TD-learning updates in this framework, we see that we essentially replace V ✓ ( s ) = ✓ 1 f 1 ( s ) + ✓ 2 f 2 ( s ) + · · · + ✓ n f n ( s ) what we’d “like” it to be with the learned backup (sum of the reward and the value func- tion of the next state: Monte Carlo methods: We obtain sample of ✓ i ✓ i + ↵ [ R ( s ) + � V ✓ ( s 0 ) � V ✓ ( s )] @ V ✓ ( s ) V ( s ) and then learn the ✓ ’s to minimize squared @✓ i error. This can be shown to converge to the closest In general, often makes more sense to use an function to the true function when linear func- online procedure, like the Widrow-Ho ff rule: tion approximators are used, but it’s not clear 6 how good a linear function will be at approxi- mating non-linear functions in general, and all bets on convergence are o ff when we move to non-linear spaces. The power of function approximation: allows you to generalize to values of states you haven’t yet seen! In backgammon, Tesauro constructed a player as good as the best humans although it only examined one out of every 10 44 possible states. Caveat: this is one of the few successes that has been achieved with function approximation and RL. Most of the time it’s hard to get a good parameterization and get it to work.

Recommend

More recommend