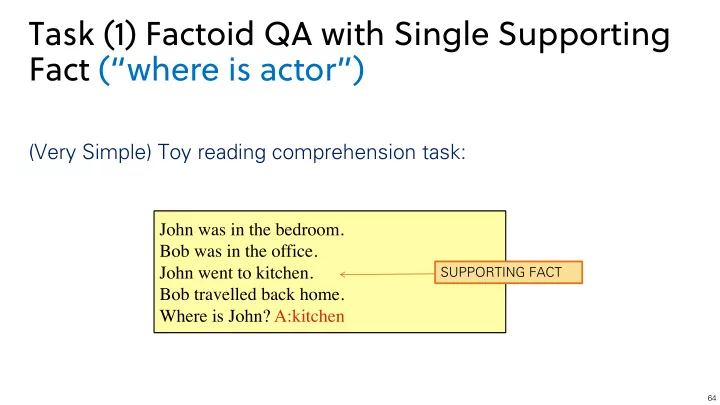

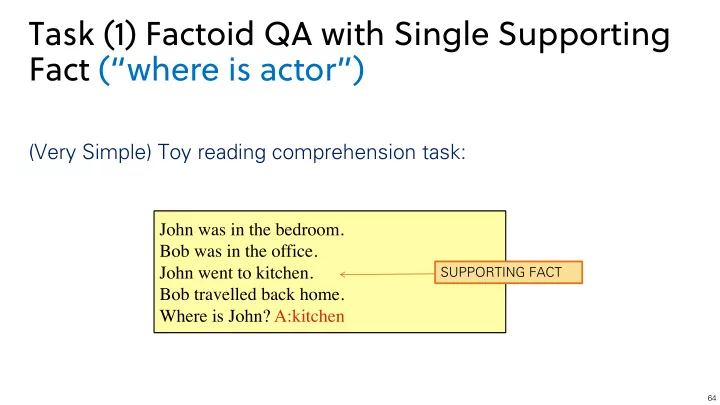

Task (1) Factoid QA with Single Supporting Fact (“where is actor”)
(Very simple) toy reading comprehension task:
John was in the bedroom.
Bob was in the office.
John went to kitchen. (SUPPORTING FACT)
Bob travelled back home.
Where is John? A: kitchen


Memory Networks (Fully Supervised)
Context:
John was in the bathroom.
Bob was in the office.
John went to kitchen. (Supporting Fact)
Bob travelled back home.
Question, Answer Pair: Where is John? A: kitchen
Step 1
• Store the representations of facts in the memory
• Free to choose what representations you store
• Individual words, window of words, or full sentences
• Bag-of-words, CNN, RNN, or LSTM

Memory Networks (Fully Supervised)
Memories:
$m_i = f(\text{John was in the bathroom.})$
$m_{i+1} = f(\text{Bob was in the office.})$
$m_{i+2} = f(\text{John went to the kitchen.})$
$m_{i+3} = f(\text{Bob travelled back home.})$
Where is John? A: kitchen
Step 1
• Store the representations of facts in the memory
• Free to choose what representations you store
• Individual words, window of words, or full sentences
• Bag-of-words, CNN, RNN, or LSTM
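Step 1 can be sketched with the simplest of the listed choices, a bag-of-words over full sentences. This is only an illustration: the helper `tokenize`, the `Counter`-based representation, and the punctuation handling are assumptions made here, not details given on the slides.

```python
from collections import Counter

def tokenize(sentence):
    """Lowercase, drop sentence-final punctuation, split on whitespace."""
    return sentence.lower().replace(".", "").replace("?", "").split()

def f(sentence):
    """Bag-of-words representation: maps each word to its count."""
    return Counter(tokenize(sentence))

story = [
    "John was in the bathroom.",
    "Bob was in the office.",
    "John went to the kitchen.",
    "Bob travelled back home.",
]

# One memory slot per sentence, stored in arrival order.
memory = [f(sentence) for sentence in story]
```

Swapping `f` for a CNN, RNN, or LSTM encoder would change only the representation stored in each slot, not the structure of the memory.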

Memory Networks (Fully Supervised)
Memories: $m_i, \dots, m_{i+3}$ (unchanged)
Question: $x = f(\text{Where is John?})$
Step 2
• Represent the question using a similar function.


Memory Networks (Fully Supervised)
Memories: $m_i, \dots, m_{i+3}$ (unchanged)
Question: $x = f(\text{Where is John?})$
Step 3
• Define a scoring function $S$ and score the memories with the question
• The scoring function should give a high score to the relevant memories:
$S(\text{Where is John?}, \text{John went to the kitchen.}) > S(\text{Where is John?}, \text{Bob travelled back home.})$
• Example choices: $q^\top U^\top U d$, $G_w(q, d)$
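The bilinear example choice $q^\top U^\top U d$ can be sketched as a dot product between the embedded question and the embedded memory. The embedding size, the random initialization, and the helper names below are assumptions for illustration; with untrained embeddings the scores carry no meaning until $U$ is learned.

```python
import random
from collections import Counter

def bow(sentence):
    """Bag-of-words counts for a sentence."""
    return Counter(sentence.lower().replace(".", "").replace("?", "").split())

def make_embeddings(vocab, dim=8, seed=0):
    """Hypothetical random initialization: one column of U per word."""
    rng = random.Random(seed)
    return {w: [rng.gauss(0.0, 0.1) for _ in range(dim)] for w in sorted(vocab)}

def embed(counts, U):
    """Compute U times the bag-of-words vector as a count-weighted sum of columns."""
    dim = len(next(iter(U.values())))
    vec = [0.0] * dim
    for word, count in counts.items():
        for k, u in enumerate(U[word]):
            vec[k] += count * u
    return vec

def score(q, d, U):
    """S(q, d) = (U q) . (U d), which equals q^T U^T U d."""
    uq, ud = embed(q, U), embed(d, U)
    return sum(a * b for a, b in zip(uq, ud))

question = bow("Where is John?")
fact = bow("John went to the kitchen.")
U = make_embeddings(set(question) | set(fact))
s = score(question, fact, U)
```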

Memory Networks (Fully Supervised)
Memories: $m_i, \dots, m_{i+3}$ (unchanged)
Question: $x = f(\text{Where is John?})$
Step 4
• Define another parametric function which maps the current question and the relevant memories to the final response
• In the first experiments, this was another scoring function which scored all possible responses against the given input and memories

Memory Networks (Fully Supervised)
Memories: $m_i, \dots, m_{i+3}$ (unchanged)
Question: $x = f(\text{Where is John?})$
Inference
• Given the question, pick the memory which scores the highest
• Use the selected memory and the question to generate the answer
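The first inference step is an argmax over memory slots. The sketch below uses a word-overlap count as a hypothetical stand-in for the learned scoring function, and breaks ties toward the most recent memory; both are assumptions made here so that the toy example resolves, not part of the slides.

```python
def tokens(sentence):
    """Lowercase, drop sentence-final punctuation, split on whitespace."""
    return sentence.lower().replace(".", "").replace("?", "").split()

def overlap_score(question, fact):
    """Hypothetical stand-in for the learned S: number of shared words."""
    return len(set(tokens(question)) & set(tokens(fact)))

def select_memory(question, memories, score=overlap_score):
    """Index of the highest-scoring memory; ties go to the later slot."""
    return max(range(len(memories)), key=lambda i: (score(question, memories[i]), i))

memories = [
    "John was in the bathroom.",
    "Bob was in the office.",
    "John went to kitchen.",
    "Bob travelled back home.",
]
best = select_memory("Where is John?", memories)
```

The selected memory and the question would then be passed to the response function from Step 4 to produce the answer.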


Memory Networks (Fully Supervised)
Memories: $m_i, \dots, m_{i+3}$ (unchanged)
Question: $x = f(\text{Where is John?})$
Training
• It involves training the memory representations and the scoring functions to generate the answer
• We do so by minimizing the following loss:
$$L = \sum_{\bar{f} \neq m_{o_1}} \max\big(0,\ \gamma - S_o(x, m_{o_1}) + S_o(x, \bar{f})\big) + \sum_{\bar{r} \neq r} \max\big(0,\ \gamma - S_r([x, m_{o_1}], r) + S_r([x, m_{o_1}], \bar{r})\big)$$
• $S_o$: scoring function for memories
• $S_r$: scoring function for responses
We had access to the true supporting fact during training; that’s what we mean by “Fully Supervised”.
This was the case when we have a single supporting fact!
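The loss is a sum of two margin ranking terms, one over memories and one over responses. A minimal sketch, assuming the scores have already been computed by $S_o$ and $S_r$; the function names and the default margin `gamma=0.1` are illustrative choices, not values from the slides.

```python
def margin_ranking_loss(scores, true_index, gamma=0.1):
    """Sum over wrong candidates of max(0, gamma - s_true + s_wrong)."""
    true_score = scores[true_index]
    return sum(
        max(0.0, gamma - true_score + s)
        for i, s in enumerate(scores)
        if i != true_index
    )

def memnn_loss(memory_scores, o1, response_scores, r, gamma=0.1):
    """Single-supporting-fact loss.

    memory_scores[i]   = S_o(x, m_i), with o1 the index of the true supporting fact.
    response_scores[k] = S_r([x, m_o1], r_k), with r the index of the true response.
    """
    return (margin_ranking_loss(memory_scores, o1, gamma)
            + margin_ranking_loss(response_scores, r, gamma))
```

The loss is zero only when the true supporting fact and the true response each beat every alternative by at least the margin.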


(2) Factoid QA with Two Supporting Facts (“where is actor+object”)
A harder (toy) task is to answer questions where two supporting statements have to be chained to answer the question:
John is in the playground. (SUPPORTING FACT 2)
Bob is in the office.
John picked up the football. (SUPPORTING FACT 1)
Bob went to the kitchen.
Where is the football? A: playground
Where was Bob before the kitchen? A: office
To answer the first question, “Where is the football?”, both “John picked up the football” and “John is in the playground” are supporting facts.

Memory Networks (Fully Supervised)
John is in the playground. (Supporting Fact 2)
Bob is in the office.
John picked up the football. (Supporting Fact 1)
Bob went to the kitchen.
Where is the football? A: playground
The current loss function will not work!
$$L = \sum_{\bar{f} \neq m_{o_1}} \max\big(0,\ \gamma - S_o(x, m_{o_1}) + S_o(x, \bar{f})\big) + \sum_{\bar{r} \neq r} \max\big(0,\ \gamma - S_r([x, m_{o_1}], r) + S_r([x, m_{o_1}], \bar{r})\big)$$
But the cool thing is that we can iterate!


Memory Network Models (implemented models)
[Figure: a controller module receives the input and holds an internal state vector (initially the query q). It repeatedly addresses and reads from a memory module of memory vectors m, then produces the output. Training uses supervision (direct or reward-based). Figure: Saina Sukhbaatar]

The First MemNN Implementation
• I (input): converts the input to bag-of-word-embeddings $x$.
• G (generalization): stores $x$ in the next available slot $m_N$.
• O (output): loops over all memories $k = 1$ or $2$ times:
  • 1st loop max: finds the best match $m_i$ with $x$.
  • 2nd loop max: finds the best match $m_j$ with $(x, m_i)$.
  • The output $o$ is represented with $(x, m_i, m_j)$.
• R (response): ranks all words in the dictionary given $o$ and returns the best single word. (OR: use a full RNN here)
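The two loops of the O module can be sketched on the football story. This is a toy under stated assumptions: a stopword-filtered word overlap stands in for the learned matching function, the stopword list is hand-picked for this story, and the second loop excludes the slot already chosen (an assumption needed for the overlap stand-in, since the chosen slot would otherwise match itself best).

```python
# Hypothetical stopword list, chosen by hand for this toy story.
STOPWORDS = {"where", "is", "the", "in", "to", "up", "was"}

def content_words(sentence):
    """Lowercased words of a sentence with punctuation and stopwords removed."""
    words = sentence.lower().replace(".", "").replace("?", "").split()
    return {w for w in words if w not in STOPWORDS}

def overlap(query_words, fact):
    """Stand-in matching score: number of shared content words."""
    return len(query_words & content_words(fact))

def two_hop(question, memories):
    """Return (i, j): best match with x, then best match with (x, m_i)."""
    x = content_words(question)
    i = max(range(len(memories)), key=lambda k: overlap(x, memories[k]))
    x_mi = x | content_words(memories[i])          # query extended with m_i
    rest = [k for k in range(len(memories)) if k != i]
    j = max(rest, key=lambda k: overlap(x_mi, memories[k]))
    return i, j

memories = [
    "John is in the playground.",
    "Bob is in the office.",
    "John picked up the football.",
    "Bob went to the kitchen.",
]
i, j = two_hop("Where is the football?", memories)
```

Here the first hop lands on "John picked up the football." and the second, now matching on "john", lands on "John is in the playground.", which is the chain the R module needs to answer "playground".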

Matching function
• For a given Q, we want a good match to the relevant memory slot(s) containing the answer, e.g.: Match(Where is the football?, John picked up the football)
• We use a $q^\top U^\top U d$ embedding model with word embedding features:
  − LHS features: Q:Where Q:is Q:the Q:football Q:?
  − RHS features: D:John D:picked D:up D:the D:football QDMatch:the QDMatch:football
(QDMatch:football is a feature to say there’s a Q&A word match, which can help.)
The parameters U are trained with a margin ranking loss: supporting facts should score higher than non-supporting facts.
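The LHS and RHS feature lists above can be generated mechanically from tokenized text. A small sketch, assuming pre-tokenized input and exact, case-sensitive string matching for the QDMatch features; the function names are hypothetical.

```python
def lhs_features(question_tokens):
    """Question-side features: one Q:<word> feature per question token."""
    return ["Q:" + t for t in question_tokens]

def rhs_features(question_tokens, doc_tokens):
    """Memory-side features: D:<word> per token, plus QDMatch:<word>
    for every memory token that also appears in the question."""
    question_words = set(question_tokens)
    feats = ["D:" + t for t in doc_tokens]
    feats += ["QDMatch:" + t for t in doc_tokens if t in question_words]
    return feats

q = ["Where", "is", "the", "football", "?"]
d = ["John", "picked", "up", "the", "football"]
lhs = lhs_features(q)
rhs = rhs_features(q, d)
```

Each feature string would index one column of $U$, so the QDMatch features get their own learned embeddings separate from the plain D: features.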
