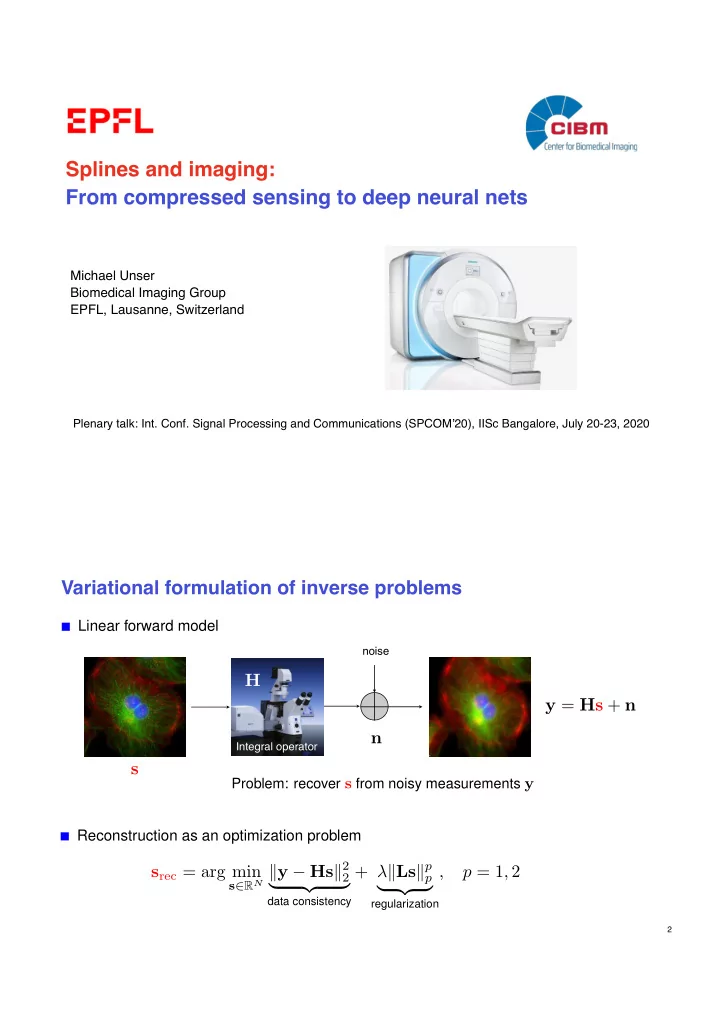

Splines and imaging: From compressed sensing to deep neural nets

Michael Unser, Biomedical Imaging Group, EPFL, Lausanne, Switzerland

Plenary talk: Int. Conf. Signal Processing and Communications (SPCOM’20), IISc Bangalore, July 20-23, 2020

Variational formulation of inverse problems

Linear forward model: $y = Hs + n$, where $H$ is an integral operator and $n$ is noise.

Problem: recover $s$ from the noisy measurements $y$.

Reconstruction as an optimization problem:

$$s_{\rm rec} = \arg\min_{s \in \mathbb{R}^N} \underbrace{\|y - Hs\|_2^2}_{\text{data consistency}} + \lambda \underbrace{\|Ls\|_p^p}_{\text{regularization}}, \qquad p = 1, 2$$
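As a minimal sketch of the variational formulation above, the following evaluates the two terms of the cost for $p = 1$ and $p = 2$. The white Gaussian noise model, the identity system matrix, and the finite-difference matrix standing in for $L$ are illustrative assumptions, not choices stated on the slide; all function names are hypothetical.

```python
import numpy as np

def forward(H, s, noise_std, rng):
    """Simulate y = H s + n, assuming white Gaussian noise (illustrative choice)."""
    return H @ s + noise_std * rng.standard_normal(H.shape[0])

def finite_difference(N):
    """(N-1) x N first-order difference matrix, a discrete stand-in for the gradient."""
    return np.diff(np.eye(N), axis=0)

def cost(s, y, H, L, lam, p):
    """Data consistency ||y - Hs||_2^2 plus regularization lam * ||Ls||_p^p."""
    data_term = np.sum((y - H @ s) ** 2)
    reg_term = lam * np.sum(np.abs(L @ s) ** p)
    return data_term + reg_term

# Usage: a piecewise-constant signal observed through an identity system (assumed).
rng = np.random.default_rng(0)
N = 8
s_true = np.repeat([0.0, 1.0], N // 2)
H = np.eye(N)
L = finite_difference(N)
y = forward(H, s_true, noise_std=0.1, rng=rng)
print(cost(s_true, y, H, L, lam=0.5, p=1), cost(s_true, y, H, L, lam=0.5, p=2))
```

The minimization itself is left out here; for $p = 2$ it has a closed form, while $p = 1$ generally calls for an iterative solver.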

Linear inverse problems (20th century theory)

Dealing with ill-posed problems: Tikhonov regularization (Andrey N. Tikhonov, 1906-1993)

$R(s) = \|Ls\|_2^2$: regularization (or smoothness) functional

$L$: regularization operator (i.e., gradient)

$$\min_s R(s) \quad \text{subject to} \quad \|y - Hs\|_2^2 \le \sigma^2$$

Equivalent variational problem:

$$s^\star = \arg\min_s \underbrace{\|y - Hs\|_2^2}_{\text{data consistency}} + \lambda \underbrace{\|Ls\|_2^2}_{\text{regularization}}$$

Formal linear solution:

$$s = (H^T H + \lambda L^T L)^{-1} H^T y = R_\lambda \cdot y$$

Interpretation: “filtered” backprojection

Learning as a (linear) inverse problem, but an infinite-dimensional one …

Given the data points $(x_m, y_m) \in \mathbb{R}^{N+1}$, $m = 1, \dots, M$, find $f : \mathbb{R}^N \to \mathbb{R}$ s.t. $f(x_m) \approx y_m$ for $m = 1, \dots, M$.

Introduce smoothness or regularization constraint (Poggio-Girosi 1990):

$$R(f) = \|f\|_{\mathcal{H}}^2 = \|Lf\|_{L_2}^2 = \int_{\mathbb{R}^N} |Lf(x)|^2 \, {\rm d}x \quad \text{: regularization functional}$$

$$\min_{f \in \mathcal{H}} R(f) \quad \text{subject to} \quad \sum_{m=1}^{M} |y_m - f(x_m)|^2 \le \sigma^2$$

Regularized least-squares fit (theory of RKHS) ⇒ kernel estimator (Wahba 1990; Schölkopf 2001):

$$f_{\rm RKHS} = \arg\min_{f \in \mathcal{H}} \left( \sum_{m=1}^{M} |y_m - f(x_m)|^2 + \lambda \|f\|_{\mathcal{H}}^2 \right)$$
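The formal linear solution of the Tikhonov problem, $s = (H^T H + \lambda L^T L)^{-1} H^T y$, can be sketched for small dense matrices as follows. This is an illustration under assumptions: the function name is hypothetical, and the linear solve only works when $H^T H + \lambda L^T L$ is invertible (that is, when the null spaces of $H$ and $L$ intersect trivially). Large-scale imaging problems would typically use an iterative solver rather than forming the matrix.

```python
import numpy as np

def tikhonov_solve(H, L, y, lam):
    """Return s = (H^T H + lam * L^T L)^{-1} H^T y using a linear solve.

    Assumes the normal matrix is invertible; no explicit inverse is formed.
    """
    normal_matrix = H.T @ H + lam * (L.T @ L)
    return np.linalg.solve(normal_matrix, H.T @ y)

# Usage: random system (assumed) with identity regularization operator.
rng = np.random.default_rng(0)
H = rng.standard_normal((6, 4))
L = np.eye(4)
y = rng.standard_normal(6)
s = tikhonov_solve(H, L, y, lam=0.1)

# The gradient of the quadratic cost vanishes at the solution (up to round-off).
gradient = H.T @ (H @ s - y) + 0.1 * (L.T @ L) @ s
print(np.max(np.abs(gradient)))
```

The map $y \mapsto s$ is linear in $y$, which is what the notation $R_\lambda \cdot y$ on the slide expresses.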

OUTLINE

■ Introduction ✔
  ■ Image reconstruction as an inverse problem
  ■ Learning as an inverse problem
■ Continuous-domain theory of sparsity
  ■ Splines and operators
  ■ gTV regularization: representer theorem for CS
■ From compressed sensing to deep neural networks
  ■ Unrolling forward/backward iterations: FBPConv
■ Deep neural networks vs. deep splines
  ■ Continuous piecewise linear (CPWL) functions / splines
  ■ New representer theorem for deep neural networks

Part I: Continuous-domain theory of sparsity

$L_1$ splines (Fisher-Jerome 1975)

gTV optimality of splines for inverse problems (U.-Fageot-Ward, SIAM Review 2017)

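Splines are analog, but intrinsically sparse

${\rm L}\{\cdot\}$: differential operator (translation-invariant)

$\delta$: Dirac distribution

Definition. The function $s : \mathbb{R}^d \to \mathbb{R}$ (possibly of slow growth) is a nonuniform ${\rm L}$-spline with knots $\{x_k\}_{k \in S}$

$$\Leftrightarrow \quad {\rm L} s = \sum_{k \in S} a_k \, \delta(\cdot - x_k) = w \quad \text{: spline's innovation}$$

[Figure: example for ${\rm L} = \frac{\rm d}{{\rm d}x}$, with impulses of weight $a_k$ at the knots $x_k$, $x_{k+1}$.]

Spline theory: (Schultz-Varga, 1967)

Spline synthesis: example

${\rm L} = {\rm D} = \frac{\rm d}{{\rm d}x}$

Null space: $\mathcal{N}_{\rm D} = {\rm span}\{p_1\}$, $p_1(x) = 1$

$\rho_{\rm D}(x) = {\rm D}^{-1}\{\delta\}(x) = \mathbb{1}_+(x)$: Heaviside function

$$w_\delta(x) = \sum_k a_k \, \delta(x - x_k)$$

$$s(x) = b_1 p_1(x) + \sum_k a_k \, \mathbb{1}_+(x - x_k)$$

[Figure: innovation $w_\delta$ (impulse of weight $a_1$ at $x_1$) and the resulting piecewise-constant spline $s$ with offset $b_1$.]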
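The synthesis formula for ${\rm L} = {\rm D}$ can be sketched numerically: the spline is the constant $b_1$ plus one Heaviside step of height $a_k$ per knot. The function names and the convention $\mathbb{1}_+(0) = 1$ are assumptions made for this illustration.

```python
import numpy as np

def heaviside_plus(x):
    """One-sided indicator 1_+(x): 1 for x >= 0, 0 otherwise (value at 0 is a convention)."""
    return (np.asarray(x, dtype=float) >= 0).astype(float)

def synthesize_d_spline(x, knots, a, b1):
    """Evaluate s(x) = b1 * p1(x) + sum_k a_k * 1_+(x - x_k), with p1(x) = 1."""
    x = np.asarray(x, dtype=float)
    knots = np.asarray(knots, dtype=float)
    # steps[i, k] = 1_+(x_i - x_k): which knots lie at or to the left of x_i.
    steps = heaviside_plus(x[:, None] - knots[None, :])
    return b1 + steps @ np.asarray(a, dtype=float)

# Usage: two knots; the spline jumps by a_k when crossing x_k.
x = np.array([0.0, 1.5, 3.0])
print(synthesize_d_spline(x, knots=[1.0, 2.0], a=[2.0, -3.0], b1=0.5))
```

Only the knot locations, the weights $a_k$, and the single null-space coefficient $b_1$ are stored, which is the sense in which the continuous-domain object is sparse.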

Spline synthesis: generalization

${\rm L}$: spline-admissible operator (LSI)

Finite-dimensional null space: $\mathcal{N}_{\rm L} = {\rm span}\{p_n\}_{n=1}^{N_0}$

Green's function of ${\rm L}$: $\rho_{\rm L}(x) = {\rm L}^{-1}\{\delta\}(x)$

Spline's innovation: $w_\delta(x) = \sum_k a_k \, \delta(x - x_k)$

$$\Rightarrow \quad s(x) = \sum_k a_k \, \rho_{\rm L}(x - x_k) + \sum_{n=1}^{N_0} b_n p_n(x)$$

Requires specification of boundary conditions

Proper continuous counterpart of $\ell_1(\mathbb{Z}^d)$

$\mathcal{S}(\mathbb{R}^d)$: Schwartz's space of smooth and rapidly decaying test functions on $\mathbb{R}^d$

$\mathcal{S}'(\mathbb{R}^d)$: Schwartz's space of tempered distributions

Space of real-valued bounded Radon measures on $\mathbb{R}^d$:

$$\mathcal{M}(\mathbb{R}^d) = \big(C_0(\mathbb{R}^d)\big)' = \Big\{ w \in \mathcal{S}'(\mathbb{R}^d) : \|w\|_{\mathcal{M}} = \sup_{\varphi \in \mathcal{S}(\mathbb{R}^d):\, \|\varphi\|_\infty = 1} \langle w, \varphi \rangle < \infty \Big\},$$

where $w : \varphi \mapsto \langle w, \varphi \rangle = \int_{\mathbb{R}^d} \varphi(r) \, w(r) \, {\rm d}r$

Basic inclusions:

$\forall f \in L_1(\mathbb{R}^d) : \|f\|_{\mathcal{M}} = \|f\|_{L_1(\mathbb{R}^d)} \;\Rightarrow\; L_1(\mathbb{R}^d) \subseteq \mathcal{M}(\mathbb{R}^d)$

$\delta(\cdot - x_0) \in \mathcal{M}(\mathbb{R}^d)$ with $\|\delta(\cdot - x_0)\|_{\mathcal{M}} = 1$ for any $x_0 \in \mathbb{R}^d$
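The general synthesis formula and the $\mathcal{M}$-norm of the innovation can be sketched together. As an illustrative assumption (not taken from the slides), the example uses ${\rm L} = {\rm D}^2$, whose causal Green's function is $\rho(x) = \max(x, 0)$ and whose null space is spanned by $1$ and $x$. For distinct knots, the total-variation norm of $w_\delta = \sum_k a_k \delta(\cdot - x_k)$ equals $\sum_k |a_k|$, consistent with $\|\delta(\cdot - x_0)\|_{\mathcal{M}} = 1$. All function names are hypothetical.

```python
import numpy as np

def green_d2(x):
    """Causal Green's function of L = D^2 (assumed example): rho(x) = max(x, 0)."""
    return np.maximum(np.asarray(x, dtype=float), 0.0)

# Basis of the null space of D^2: p_1(x) = 1 and p_2(x) = x.
LINEAR_NULL_BASIS = (lambda x: np.ones_like(x), lambda x: x)

def synthesize_spline(x, knots, a, b, green, null_basis):
    """Evaluate s(x) = sum_k a_k * rho_L(x - x_k) + sum_n b_n * p_n(x)."""
    x = np.asarray(x, dtype=float)
    knots = np.asarray(knots, dtype=float)
    s = green(x[:, None] - knots[None, :]) @ np.asarray(a, dtype=float)
    for b_n, p_n in zip(b, null_basis):
        s = s + b_n * p_n(x)
    return s

def innovation_m_norm(a):
    """M-norm of sum_k a_k * delta(. - x_k), assuming distinct knots: sum_k |a_k|."""
    return float(np.sum(np.abs(a)))

# Usage: a piecewise-linear spline with two knots.
x = np.array([-1.0, 0.5, 2.0])
a = [1.0, -2.0]
print(synthesize_spline(x, [0.0, 1.0], a, [1.0, 0.5], green_d2, LINEAR_NULL_BASIS))
print(innovation_m_norm(a))
```

Passing the Green's function and the null-space basis as arguments mirrors the slide's structure: changing the operator ${\rm L}$ only changes these two ingredients.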
