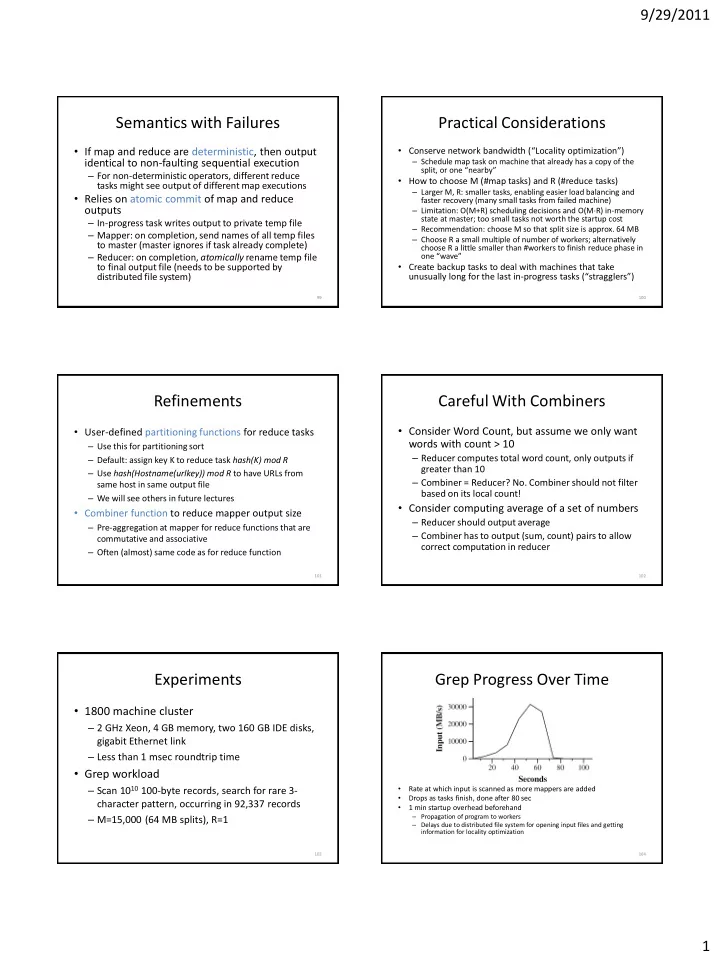

9/29/2011

Semantics with Failures
• If map and reduce are deterministic, then output is identical to non-faulting sequential execution
  – For non-deterministic operators, different reduce tasks might see output of different map executions
• Relies on atomic commit of map and reduce outputs
  – In-progress task writes output to private temp file
  – Mapper: on completion, send names of all temp files to master (master ignores if task already complete)
  – Reducer: on completion, atomically rename temp file to final output file (needs to be supported by distributed file system)

Practical Considerations
• Conserve network bandwidth ("Locality optimization")
  – Schedule map task on machine that already has a copy of the split, or one "nearby"
• How to choose M (#map tasks) and R (#reduce tasks)
  – Larger M, R: smaller tasks, enabling easier load balancing and faster recovery (many small tasks from failed machine)
  – Limitation: O(M+R) scheduling decisions and O(M·R) in-memory state at master; tasks that are too small are not worth the startup cost
  – Recommendation: choose M so that split size is approx. 64 MB
  – Choose R a small multiple of number of workers; alternatively choose R a little smaller than #workers to finish reduce phase in one "wave"
• Create backup tasks to deal with machines that take unusually long for the last in-progress tasks ("stragglers")

Refinements
• User-defined partitioning functions for reduce tasks
  – Use this for partitioning sort
  – Default: assign key K to reduce task hash(K) mod R
  – Use hash(Hostname(urlkey)) mod R to have URLs from same host in same output file
  – We will see others in future lectures
• Combiner function to reduce mapper output size
  – Pre-aggregation at mapper for reduce functions that are commutative and associative
  – Often (almost) the same code as the reduce function

Careful With Combiners
• Consider Word Count, but assume we only want words with count > 10
  – Reducer computes total word count, only outputs if greater than 10
  – Combiner = Reducer? No. Combiner should not filter based on its local count!
• Consider computing average of a set of numbers
  – Reducer should output average
  – Combiner has to output (sum, count) pairs to allow correct computation in reducer

Experiments
• 1800-machine cluster
  – 2 GHz Xeon, 4 GB memory, two 160 GB IDE disks, gigabit Ethernet link
  – Less than 1 msec roundtrip time
• Grep workload
  – Scan 10^10 100-byte records, search for rare 3-character pattern, occurring in 92,337 records
  – M=15,000 (64 MB splits), R=1

Grep Progress Over Time
• Rate at which input is scanned rises as more mappers are added
• Drops as tasks finish, done after 80 sec
• 1 min startup overhead beforehand
  – Propagation of program to workers
  – Delays due to distributed file system for opening input files and getting information for locality optimization
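A minimal Python sketch of the two partitioning rules on the Refinements slide, assuming string keys. The helper names (`stable_hash`, `default_partition`, `host_partition`) are hypothetical, and CRC-32 stands in for whatever hash a real implementation uses. What matters is that every worker computes the same value for the same key, which Python's per-process salted `hash()` for strings does not guarantee.

```python
import zlib
from urllib.parse import urlparse


def stable_hash(s: str) -> int:
    """Deterministic string hash.

    Built-in hash() on str is salted per process (PYTHONHASHSEED), so two
    workers could disagree on a key's partition; CRC-32 is the same everywhere.
    """
    return zlib.crc32(s.encode("utf-8"))


def default_partition(key: str, num_reducers: int) -> int:
    """Default scheme: reduce task = hash(K) mod R."""
    return stable_hash(key) % num_reducers


def host_partition(url_key: str, num_reducers: int) -> int:
    """hash(Hostname(urlkey)) mod R: all URLs of one host go to one reducer."""
    host = urlparse(url_key).hostname or ""  # None if the key has no scheme/host
    return stable_hash(host) % num_reducers
```

With R = 4, `http://example.com/a` and `http://example.com/b/c` always land in the same reduce task (and thus the same output file) under `host_partition`, whereas `default_partition` hashes the whole URL and may separate them.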
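The two pitfalls on the Careful With Combiners slide can be checked with a small, single-process Python sketch; the function names and toy data are illustrative assumptions, not part of any MapReduce API. For the average, the combiner forwards partial `(sum, count)` pairs and only the reducer divides. For the filtered word count, a combiner that reuses the reducer's threshold drops a word whose global count would pass the filter.

```python
from collections.abc import Iterable
from typing import Optional

# --- Average: the combiner emits partial (sum, count) pairs ---------------


def avg_combine(pairs: Iterable[tuple[float, int]]) -> tuple[float, int]:
    """Merge (sum, count) pairs; safe to apply any number of times because
    addition is commutative and associative."""
    total, count = 0.0, 0
    for s, c in pairs:
        total += s
        count += c
    return total, count


def avg_reduce(pairs: Iterable[tuple[float, int]]) -> float:
    """Reducer: merge all partial pairs, then divide exactly once."""
    total, count = avg_combine(pairs)
    return total / count


mapper1 = [1.0, 2.0, 3.0]  # local average 2.0
mapper2 = [10.0]           # local average 10.0
partials = [avg_combine((x, 1) for x in m) for m in (mapper1, mapper2)]
correct = avg_reduce(partials)  # (6 + 10) / (3 + 1) = 4.0
# Averaging the local averages ignores how many values each mapper saw:
wrong = (sum(mapper1) / len(mapper1) + sum(mapper2) / len(mapper2)) / 2  # 6.0

# --- Filtered word count: the combiner must not filter --------------------
THRESHOLD = 10  # output only words with total count > 10


def wc_reduce(word: str, counts: Iterable[int]) -> Optional[tuple[str, int]]:
    """Reducer: total count, emitted only if it exceeds THRESHOLD."""
    total = sum(counts)
    return (word, total) if total > THRESHOLD else None


def good_combine(counts: Iterable[int]) -> list[int]:
    """Correct combiner: pre-sum the local counts, never filter."""
    return [sum(counts)]


def bad_combine(counts: Iterable[int]) -> list[int]:
    """Wrong combiner (reducer reused as-is): filters on the LOCAL count."""
    total = sum(counts)
    return [total] if total > THRESHOLD else []


# "the" occurs 6 times on each of two mappers, 12 times in total.
local = [[1] * 6, [1] * 6]
good = wc_reduce("the", [c for m in local for c in good_combine(m)])  # ("the", 12)
bad = wc_reduce("the", [c for m in local for c in bad_combine(m)])    # None: lost
```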
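The commit protocol on the Semantics with Failures slide can be sketched locally in Python, assuming a POSIX file system where `os.replace()` (a `rename`) is atomic within one directory; here it stands in for the distributed file system's atomic rename. `Master`, `map_done`, and `commit_reduce_output` are hypothetical names, and the `part-NNNNN` output naming is an illustrative choice.

```python
import os
import tempfile


class Master:
    """Hypothetical master bookkeeping for map-task completion.

    The first completion report for a task wins; later reports (e.g. from
    a backup copy or a re-executed task) are ignored.
    """

    def __init__(self) -> None:
        self.completed: dict[int, list[str]] = {}

    def map_done(self, task_id: int, temp_files: list[str]) -> bool:
        if task_id in self.completed:
            return False  # task already complete: ignore this report
        self.completed[task_id] = temp_files
        return True


def commit_reduce_output(out_dir: str, partition: int, records: list[str]) -> str:
    """Write to a private temp file, then atomically rename it into place.

    Readers see either no output file or the complete one, never a
    partially written file.
    """
    final_path = os.path.join(out_dir, f"part-{partition:05d}")
    # Private temp file in the same directory, so the rename stays
    # within one file system (required for atomicity).
    fd, tmp_path = tempfile.mkstemp(dir=out_dir, prefix=f".tmp-{partition}-")
    with os.fdopen(fd, "w") as f:
        f.write("\n".join(records) + "\n")
        f.flush()
        os.fsync(f.fileno())  # make the data durable before committing
    os.replace(tmp_path, final_path)  # the atomic commit point
    return final_path
```

Because a deterministic reducer produces the same bytes in every execution, it does not matter which of two racing copies (for example, a backup task) performs the last rename.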

Sort
• Sort 10^10 100-byte records (~1 TB of data)
• Fewer than 50 lines of user code
• M=15,000 (64 MB splits), R=4000
• Use key distribution information for intelligent partitioning
• Entire computation takes 891 sec
  – 1283 sec without backup task optimization (few slow machines delay completion)
  – 933 sec if 200 out of 1746 workers are killed several minutes into computation

MapReduce at Google (2004)
• Machine learning algorithms, clustering
• Data extraction for reports of popular queries
• Extraction of page properties, e.g., geographical location
• Graph computations
• Google indexing system for Web search (>20 TB of data)
  – Sequence of 5-10 MapReduce operations
  – Smaller, simpler code: from 3800 LOC to 700 LOC for one computation phase
  – Easier to change code
  – Easier to operate, because MapReduce library takes care of failures
  – Easy to improve performance by adding more machines

Summary
• Programming model that hides details of parallelization, fault tolerance, locality optimization, and load balancing
• Simple model, but fits many common problems
  – User writes Map and Reduce function
  – Can also provide combine and partition functions
• Implementation on cluster scales to 1000s of machines
• Open source implementation, Hadoop, is available

MapReduce relies heavily on the underlying distributed file system. Let's take a closer look to see how it works.

The Distributed File System
• Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung. The Google File System. 19th ACM Symposium on Operating Systems Principles, Lake George, NY, October 2003

Motivation
• Abstraction of a single global file system greatly simplifies programming in MapReduce
• MapReduce job just reads from a file and writes output back to a file (or multiple files)
• Frees programmer from worrying about messy details
  – How many chunks to create and where to store them
  – Replicating chunks and dealing with failures
  – Coordinating concurrent file access at low level
  – Keeping track of the chunks
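The split-size recommendation accounts for the M = 15,000 used in both the Grep and Sort runs, since both read 10^10 records of 100 bytes. Taking 1 MB as 2^20 bytes (with 10^6 bytes the result is 15,625, still roughly 15,000), the arithmetic, together with the reduce-task ratio and the O(M·R) master state it implies for the Sort run with 1746 workers, is:

```latex
\begin{aligned}
M &\approx \frac{10^{10} \times 100\ \text{B}}{64 \times 2^{20}\ \text{B}}
   = \frac{10^{12}}{67{,}108{,}864} \approx 14{,}901 \approx 15{,}000 \\
\frac{R}{\#\text{workers}} &= \frac{4000}{1746} \approx 2.3 \\
M \cdot R &= 15{,}000 \times 4000 = 6 \times 10^{7}\ \text{(map task, reduce task) pairs}
\end{aligned}
```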
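The Sort slide's "use key distribution information for intelligent partitioning" can be illustrated with a range partitioner. This is one common way to do it, assumed here since the slide does not give the scheme: R − 1 split points are taken at evenly spaced quantiles of a key sample, so each reduce task receives a contiguous key range of similar size, and concatenating the sorted outputs of reduce tasks 0 to R − 1 yields a globally sorted result. The function names are hypothetical.

```python
import bisect


def choose_split_points(sample: list[str], num_reducers: int) -> list[str]:
    """Pick R-1 boundary keys at evenly spaced quantiles of a sorted sample."""
    s = sorted(sample)
    return [s[(i * len(s)) // num_reducers] for i in range(1, num_reducers)]


def range_partition(key: str, split_points: list[str]) -> int:
    """Reduce task i receives keys k with split_points[i-1] <= k < split_points[i].

    bisect_right counts how many split points are <= key, which is exactly
    the index of the key's range.
    """
    return bisect.bisect_right(split_points, key)
```

For example, a sample `["a", ..., "h"]` with R = 4 gives split points `["c", "e", "g"]`; key `"d"` then goes to reduce task 1 and `"z"` to task 3. Unlike `hash(K) mod R`, this keeps the output files ordered relative to each other, at the cost of needing a representative sample of the keys.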

Google File System (GFS)
• GFS in 2003: 1000s of storage nodes, 300 TB disk space, heavily accessed by 100s of clients
• Goals: performance, scalability, reliability, availability
• Differences compared to other file systems
  – Frequent component failures
  – Huge files (multi-GB or even TB common)
  – Workload properties
• Design system to make important operations efficient

Data and Workload Properties
• Modest number of large files
  – Few million files, most 100 MB+
  – Manage multi-GB files efficiently
• Reads: large streaming (1 MB+) or small random (few KBs)
• Many large sequential append writes, few small writes at arbitrary positions
• Concurrent append operations
  – E.g., Producer-consumer queues or many-way merging
• High sustained bandwidth more important than low latency
  – Bulk data processing

File System Interface
• Like typical file system interface
  – Files organized in directories
  – Operations: create, delete, open, close, read, write
• Special operations
  – Snapshot: creates copy of file or directory tree at low cost
  – Record append: concurrent append guaranteeing atomicity of each individual client's append

Architecture Overview
• 1 master, multiple chunkservers, many clients
  – All are commodity Linux machines
• Files divided into fixed-size chunks
  – Stored on chunkservers' local disks as Linux files
  – Replicated on multiple chunkservers
• Master maintains all file system metadata: namespace, access control info, mapping from files to chunks, chunk locations

Why a Single Master?
• Simplifies design
• Master can make decisions with global knowledge
• Potential problems:
  – Can become bottleneck
    • Avoid file reads and writes through master
  – Single point of failure
    • Ensure quick recovery

High-Level Functionality
• Master controls system-wide activities like chunk lease management, garbage collection, chunk migration
• Master communicates with chunkservers through HeartBeat messages to give instructions and collect state
• Clients get metadata from master, but access files directly through chunkservers
• No GFS-level file caching
  – Little benefit for streaming access or large working set
  – No cache coherence issues
  – On chunkserver, standard Linux file caching is sufficient
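A simplified Python model of the metadata path from the Architecture Overview and High-Level Functionality slides. Assumptions: the 64 MB chunk size comes from the GFS paper rather than these slides; `Master`, `handle_heartbeat`, `lookup`, and `locate` are hypothetical names; and each heartbeat here carries a chunkserver's complete chunk list, which is a simplification. The point it illustrates is that the client computes the chunk index itself, asks the master only for metadata, and then fetches the data from a chunkserver.

```python
from dataclasses import dataclass, field

CHUNK_SIZE = 64 * 2**20  # 64 MB, the chunk size reported in the GFS paper


@dataclass
class Master:
    """Hypothetical in-memory master metadata; no file data lives here."""
    file_chunks: dict[str, list[int]] = field(default_factory=dict)     # path -> chunk handles
    chunk_locations: dict[int, set[str]] = field(default_factory=dict)  # handle -> chunkservers

    def handle_heartbeat(self, server: str, handles: list[int]) -> None:
        """Rebuild this server's entries from its report (simplified: the
        report is assumed to list every chunk the server holds)."""
        for locs in self.chunk_locations.values():
            locs.discard(server)
        for h in handles:
            self.chunk_locations.setdefault(h, set()).add(server)

    def lookup(self, path: str, chunk_index: int) -> tuple[int, set[str]]:
        """Return the chunk handle and a copy of its replica locations."""
        handle = self.file_chunks[path][chunk_index]
        return handle, set(self.chunk_locations.get(handle, set()))


def locate(master: Master, path: str, offset: int) -> tuple[int, int, set[str]]:
    """Client side: byte offset -> (chunk handle, offset in chunk, replicas).

    Only this metadata request goes to the master; the client would then
    read the bytes directly from one of the returned chunkservers.
    """
    chunk_index, chunk_offset = divmod(offset, CHUNK_SIZE)
    handle, replicas = master.lookup(path, chunk_index)
    return handle, chunk_offset, replicas
```

For instance, offset `CHUNK_SIZE + 5` in a file maps to the file's second chunk, at offset 5 within that chunk.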
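Record append, listed on the File System Interface slide and motivated by producer-consumer queues on the Data and Workload Properties slide, can be modeled in a single process: the file system chooses the offset and returns it, and each record is written as one contiguous unit. This Python sketch uses a lock to serialize appends; `AppendOnlyChunk` and its methods are hypothetical, and real GFS additionally handles chunk boundaries and replication, with at-least-once semantics when a client retries, none of which is modeled here.

```python
import threading


class AppendOnlyChunk:
    """Toy, single-process model of record-append semantics.

    The storage side (not the client) picks the offset, and each record is
    written as one contiguous unit, so concurrent appends never interleave.
    """

    def __init__(self) -> None:
        self._data = bytearray()
        self._lock = threading.Lock()

    def record_append(self, record: bytes) -> int:
        with self._lock:  # serialize appends: one whole record at a time
            offset = len(self._data)
            self._data += record
        return offset  # offset chosen here and reported back to the caller

    def read(self, offset: int, length: int) -> bytes:
        with self._lock:
            return bytes(self._data[offset:offset + length])
```

Many producer threads can call `record_append` concurrently without agreeing on offsets among themselves; each gets back the offset where its record landed, which a consumer can later use to read it.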
