Robust and Practical Depth Map Fusion for Time-of-Flight Cameras

Robust and Practical Depth Map Fusion for Time-of-Flight Cameras Markus Ylimäki 1 , Juho Kannala 2 , Janne Heikkilä 1 1 Center for Machine Vision Research, University of Oulu, Oulu, Finland 2 Department of Computer Science, Aalto University, Espoo, Finland

Introduction
- Depth map fusion is an essential part of every depth map based 3-D reconstruction software
- We introduce a method which merges a sequence of depth maps into a single point cloud
- Starting with a point cloud projected from a single depth map, the measurements from other depth maps are either added to the cloud or used to refine existing points
- The refinement gives more weight to the less uncertain measurement
- Uncertainty is based on empirical, depth-dependent variances
12 June 2017, Markus Ylimäki, Center for Machine Vision Research, University of Oulu

Motivation
- One major issue in time-of-flight cameras (like Kinect V2) is the multipath interference (MPI) problem
- MPI occurs when the depth sensor receives multiple scattered or reflected signals from the same direction
- It causes a positive bias in the measurements
- It occurs especially in concave corners
[Figure panels: Mesh from a single depth map; Mesh from the output of the proposed method]

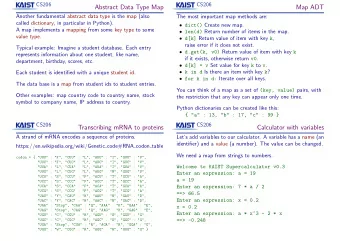

Background
- The release of Microsoft Kinect has increased interest in real-time reconstruction
- Many impressive results have been achieved, especially with voxel-based approaches
  • Usually require video input (short baseline)
  • Memory consuming
- Kyöstilä et al. (SCIA 2013) fused wide-baseline depth maps into a point cloud, taking the measurement accuracy into account
  • Designed for Kinect V1 (structured light)
  • Does not contain any outlier filtering

Contributions
We propose three extensions to Kyöstilä’s method:
1. Depth map pre-filtering
  • Reduces the number of outliers
2. Improvement of uncertainty covariance
  • Makes the method more accurate
3. Filtering of the final point cloud
  • Reduces the number of badly registered and MPI points

Proposed method
Input:
- Depth maps
- RGB images and camera poses
Pipeline:
1. Depth map pre-filtering
2. Depth map fusion with improved uncertainty ellipsoids
3. Point cloud post-filtering
Output:
- Point cloud

Depth map pre-filtering
- Usually, the distance from an outlier or inaccurate point to its nearest neighboring point is well above the average
- Filtering removes a measured point if its distance to the nth nearest neighbor, $d_{\text{measured}}$, exceeds a threshold set relative to the reference distance $d_{\text{reference}}$ (the slide quotes $\approx 0.577 \cdot d_{\text{reference}}$ and the value 0.3)
- The reference is the average distance from a point to its nth nearest neighbor in 3-D space at a certain depth (n=4 in our experiments)
[Figure: Average distance among all backprojected depths]
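The pre-filtering rule can be illustrated with a small brute-force sketch. It is not the authors' implementation: the names `prefilter`, `reference_fn` and `factor` are hypothetical, the depth-dependent reference distance is passed in as a function, and `factor` stands in for the slide's threshold, whose exact form is not recoverable here.

```python
import math

def nth_neighbor_distance(points, index, n):
    """Distance from points[index] to its n-th nearest neighbor (brute force)."""
    p = points[index]
    dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != index)
    return dists[n - 1]

def prefilter(points, reference_fn, n=4, factor=1.0):
    """Keep points whose n-th neighbor distance is at most factor * reference.

    reference_fn(z) returns the assumed average n-th neighbor distance at
    depth z; both it and `factor` are illustrative assumptions.
    Requires at least n + 1 points.
    """
    kept = []
    for i, p in enumerate(points):
        d_measured = nth_neighbor_distance(points, i, n)
        d_reference = reference_fn(p[2])  # depth is the z coordinate
        if d_measured <= factor * d_reference:
            kept.append(p)
    return kept
```

For example, a flat 5 x 5 grid of points spaced 0.01 apart at depth 1.0 survives with `reference_fn=lambda z: 0.01 * z` and `factor=2.5`, while a lone point half a unit behind the grid is dropped. A real pipeline would likely replace the quadratic neighbor search with a spatial index.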

Depth map fusion
- The first depth map of the sequence is backprojected into 3-D space
- For every pixel in every other depth map:
  1. Backproject it into 3-D space
  2. If it is near an existing point on the same projection line, refine the existing point with the new measurement
  3. Otherwise, add the new measurement to the point cloud
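The add-or-refine loop can be sketched as follows. This is a simplified illustration under stated assumptions: measurements are already backprojected to 3-D, "same projection line" is approximated by a small angle between the rays from the camera center, and the refinement is a plain average rather than the uncertainty-weighted update. The function names and both thresholds (`max_angle`, `max_depth_gap`) are hypothetical.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _norm(v):
    return math.sqrt(sum(x * x for x in v))

def on_same_ray(center, p, q, max_angle):
    """True if p and q are seen from `center` along nearly the same ray."""
    u, v = _sub(p, center), _sub(q, center)
    cos_angle = sum(a * b for a, b in zip(u, v)) / (_norm(u) * _norm(v))
    return math.acos(max(-1.0, min(1.0, cos_angle))) <= max_angle

def fuse(cloud, center, measurements, max_angle=0.002, max_depth_gap=0.05):
    """Merge backprojected measurements from one camera into `cloud` in place."""
    for p in measurements:
        match = None
        for i, q in enumerate(cloud):
            gap = abs(_norm(_sub(p, center)) - _norm(_sub(q, center)))
            if on_same_ray(center, p, q, max_angle) and gap <= max_depth_gap:
                match = i
                break
        if match is None:
            cloud.append(p)  # area not yet covered: add the new point
        else:
            q = cloud[match]  # refine: unweighted average as a placeholder
            cloud[match] = tuple((a + b) / 2 for a, b in zip(p, q))
    return cloud
```

With `cloud = [(0.0, 0.0, 1.0)]` and a camera at the origin, a measurement at `(0.0, 0.0, 1.02)` refines the existing point, while one at `(0.5, 0.0, 1.0)` is added as a new point.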

Depth map fusion (2)
- The refinement gives more weight to the more certain point
- Initially each point has an uncertainty $C$:
  $$C = \begin{bmatrix} \lambda_1 (\beta_x z / \sqrt{12})^2 & 0 & 0 \\ 0 & \lambda_1 (\beta_y z / \sqrt{12})^2 & 0 \\ 0 & 0 & \lambda_2 (\alpha_2 z^2 + \alpha_1 z + \alpha_0)^2 \end{bmatrix}$$
- The uncertainty covariance corresponds to an ellipsoid in 3-D
[Figure: uncertainty ellipsoid C of a backprojected depth measurement, with labels Image plane, Image plane measurement, Backprojected depth, Camera center, Optical axis; panels: Kyöstilä’s method, Our method]
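To make "more weight to the more certain point" concrete, here is a minimal sketch of inverse-variance weighting with a diagonal covariance. It assumes both points are expressed in the same axis-aligned frame, so it deliberately ignores the orientation of the ellipsoids that the method's covariance improvement addresses. The reading of the symbols (per-pixel angular sizes `beta_x`, `beta_y`, depth-noise polynomial `alpha`, scale factors `lam1`, `lam2`) and all numeric values used with it are assumptions for illustration.

```python
import math

def initial_variances(z, beta_x, beta_y, alpha, lam1=1.0, lam2=1.0):
    """Diagonal of the initial covariance at depth z.

    Lateral terms: lam1 * (beta * z / sqrt(12))**2.
    Depth term: lam2 * (alpha[2] * z**2 + alpha[1] * z + alpha[0])**2.
    """
    var_x = lam1 * (beta_x * z / math.sqrt(12)) ** 2
    var_y = lam1 * (beta_y * z / math.sqrt(12)) ** 2
    var_z = lam2 * (alpha[2] * z ** 2 + alpha[1] * z + alpha[0]) ** 2
    return (var_x, var_y, var_z)

def refine(p, var_p, q, var_q):
    """Fuse two points axis by axis, weighting each by its inverse variance."""
    fused, fused_var = [], []
    for a, va, b, vb in zip(p, var_p, q, var_q):
        w_a, w_b = 1.0 / va, 1.0 / vb
        fused.append((w_a * a + w_b * b) / (w_a + w_b))
        fused_var.append(1.0 / (w_a + w_b))  # fused estimate is more certain
    return tuple(fused), tuple(fused_var)
```

Fusing depths 1.0 (variance 1.0) and 2.0 (variance 3.0) with `refine` gives 1.25 with variance 0.75: the result lies closer to the more certain measurement, and its variance is smaller than either input.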

Post-filtering
- If the existing point and the new measurement are too far from each other, they are not merged together
- But the points may still violate the visibility of each other

Post-filtering (2)
- We record both the visibility violation count and the count of merges
- If there is a visibility violation, we increment the visibility violation count of
  • the new measurement, if the existing point has already been merged at least once, OR
  • the point whose normal creates a bigger angle with its line of sight
- At the end, the points whose visibility violation count > count of merges are removed from the cloud
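The bookkeeping behind this rule can be sketched as below. The sketch assumes one reading of the slide: the new measurement is penalized when the existing point has at least one merge, and otherwise the point whose normal is more oblique to its line of sight is penalized. Detecting the visibility violation itself is out of scope here, and `CloudPoint`, `record_violation` and `postfilter` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class CloudPoint:
    position: tuple
    normal_angle: float  # angle between normal and line of sight, in radians
    merges: int = 0
    violations: int = 0

def record_violation(existing, new):
    """Charge a detected visibility violation to one of the two points."""
    if existing.merges >= 1:
        new.violations += 1       # existing point is already supported
    elif existing.normal_angle > new.normal_angle:
        existing.violations += 1  # existing point is seen more obliquely
    else:
        new.violations += 1

def postfilter(cloud):
    """Drop points whose violation count exceeds their merge count."""
    return [p for p in cloud if p.violations <= p.merges]
```

A point that has been merged twice and never charged with a violation is kept, while an unmerged point with a single violation is removed.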

Experiments
- Three data sets
- Experiments illustrate the significance of each extension
CCorner
- Simple concave corner
- Ground truth available
- Accurate camera poses
Office1 and Office2
- Complex office environments
- No ground truth
- Camera poses acquired by solving a generic structure from motion problem

Experiments (2)
- Pre-filtering
[Figure panels: Kyöstilä’s method; Kyöstilä’s method with pre-filtered depth maps]

Experiments (3)
- Re-aligned uncertainty covariances and post-filtering
  • Reduces the MPI points
  • Reduces badly registered points
[Figure panels: Kyöstilä’s method with pre-filtered depth maps; Proposed method]

Experiments (4)
- Quantitative analyses: completeness
- Legend:
  • [7]: Kyöstilä’s method
  • PRF: Pre-filtering
  • RAC: Re-aligned covariances
  • POF: Post-filtering
[Figure: bar chart "Completeness at 20mm accuracy"; y-axis: Jaccard index; bars: [7], PRF, PRF + RAC, PRF + RAC + POF]

Experiments (5)
- Accuracy (CCorner dataset)
- Pre- and post-filtering bring only moderate improvement because of the simplicity of the dataset
  • Few outliers
  • No registration errors
[Figure label: Leftover errors]

Summary
- We propose three extensions to an existing iterative depth map merging method which takes the quality of points into account:
  1. Depth map pre-filtering
  2. Re-aligned uncertainty covariances
  3. Post-filtering
- The experiments show that the proposed method outperforms the existing method in both robustness and accuracy without loss of completeness of the models

Thank you for your attention!
