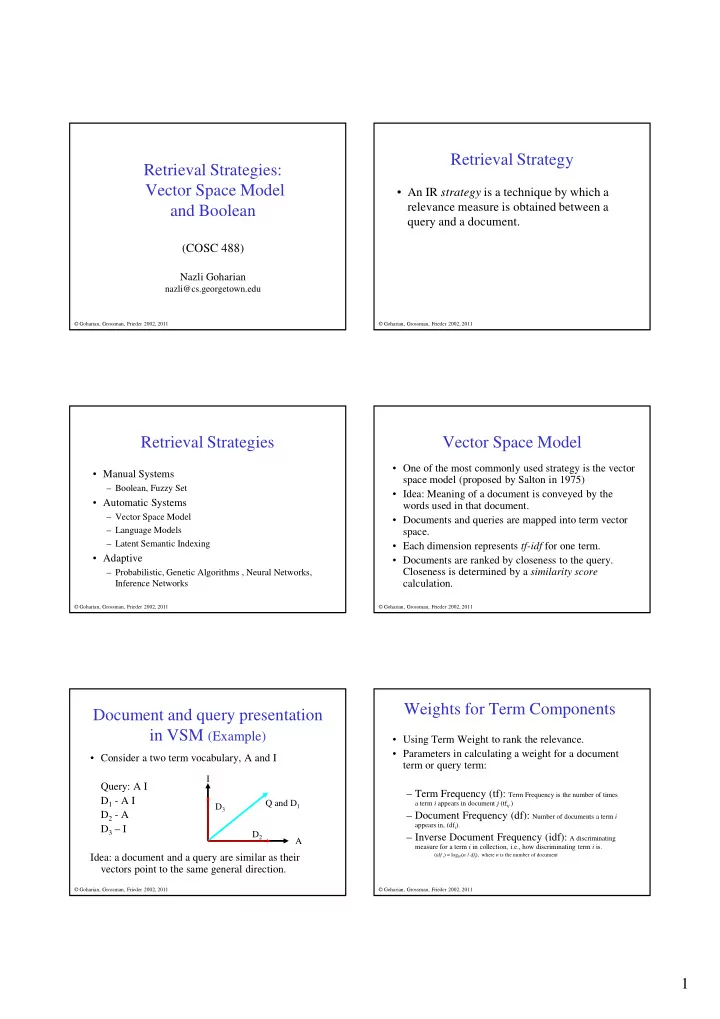

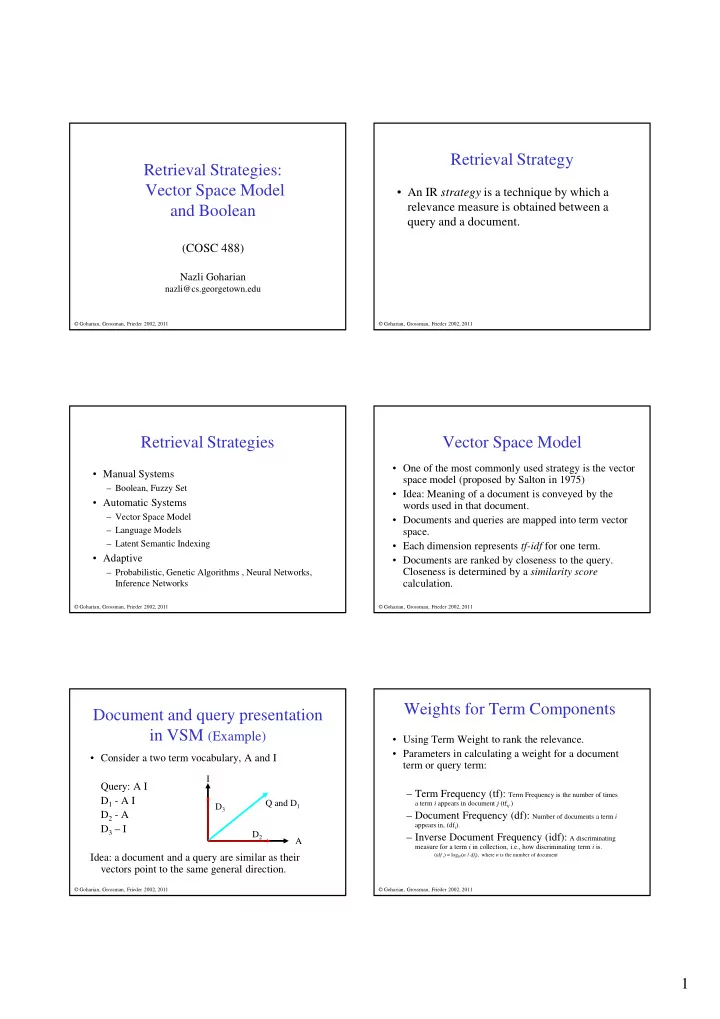

Retrieval Strategies: Vector Space Model and Boolean (COSC 488)

Nazli Goharian
nazli@cs.georgetown.edu

Goharian, Grossman, Frieder 2002, 2011

Retrieval Strategy

• An IR strategy is a technique by which a relevance measure is obtained between a query and a document.

Retrieval Strategies

• Manual Systems
  – Boolean, Fuzzy Set
• Automatic Systems
  – Vector Space Model
  – Language Models
  – Latent Semantic Indexing
• Adaptive
  – Probabilistic, Genetic Algorithms, Neural Networks, Inference Networks

Vector Space Model

• One of the most commonly used strategies is the vector space model (proposed by Salton in 1975).
• Idea: the meaning of a document is conveyed by the words used in that document.
• Documents and queries are mapped into term vector space.
• Each dimension represents tf-idf for one term.
• Documents are ranked by closeness to the query. Closeness is determined by a similarity score calculation.

Document and query presentation in VSM (Example)

• Consider a two-term vocabulary, A and I.
  – Query: A I
  – D1: A I
  – D2: A
  – D3: I

[Figure: two-dimensional term space with axes A and I; Q and D1 share one vector, D2 lies along the A axis, and D3 lies along the I axis.]

Idea: a document and a query are similar when their vectors point in the same general direction.

Weights for Term Components

• Term weights are used to rank documents by relevance.
• Parameters in calculating a weight for a document term or query term:
  – Term Frequency (tf): the number of times a term i appears in document j ($tf_{ij}$).
  – Document Frequency (df): the number of documents a term i appears in ($df_i$).
  – Inverse Document Frequency (idf): a measure of how discriminating term i is in the collection: $idf_i = \log_{10}(n / df_i)$, where n is the number of documents.
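As a minimal sketch of the tf, df, and idf definitions above, the following Python computes them for the two-term example (vocabulary A and I). The function names and the dictionary layout are illustrative assumptions, not part of the course material.

```python
import math
from collections import Counter

def document_frequencies(docs):
    """df: number of documents each term appears in."""
    df = Counter()
    for terms in docs.values():
        df.update(set(terms))  # count each term once per document
    return df

def inverse_document_frequencies(docs):
    """idf_i = log10(n / df_i), with n the number of documents."""
    n = len(docs)
    return {term: math.log10(n / count)
            for term, count in document_frequencies(docs).items()}

# The two-term example: D1 contains A and I, D2 only A, D3 only I.
docs = {"D1": ["A", "I"], "D2": ["A"], "D3": ["I"]}

tf = {doc_id: Counter(terms) for doc_id, terms in docs.items()}  # tf per document
idf = inverse_document_frequencies(docs)

print(idf)  # both terms occur in 2 of 3 documents, so idf = log10(3/2), about 0.176
```

A term that occurs in every document gets idf = log10(1) = 0, so it contributes nothing to a tf-idf weight.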

Weights for Term Components

• The classic approach is to use tf x idf.
• Incorporate idf in the query and the document, one or the other, or neither.
• Scale the idf with a log.
• Scale the tf: (log tf + 1) or (tf / sum of tf of all terms in that document).
• Augment the weight with some constant (e.g., w = (w)(0.5)).

Weights for Term Components

• Many variations of term weighting exist as a result of improving on basic tf-idf.
• A good one:

$$w_{ij} = \frac{(\log tf_{ij} + 1.0) \cdot idf_j}{\sqrt{\sum_{k=1}^{t} \left[ (\log tf_{ik} + 1.0) \cdot idf_k \right]^2}}$$

  Here, as in the similarity formulas that follow, i indexes the document, j the term, and t is the number of distinct terms.
• Some efforts suggest using different weighting for document terms and query terms.

Similarity Measures

• The Similarity Coefficient (SC) identifies the similarity between query Q and document $D_i$.
• Inner Product (dot product)
• Cosine
• Pivoted Cosine

Similarity Measures (Inner Product)

• Inner Product (dot product):

$$SC(Q, D_i) = \sum_{j=1}^{t} w_{qj} \times d_{ij}$$

• Problem: longer documents will score very high because they have more chances to match query words.

Similarity Measures (Cosine)

$$SC(Q, D_i) = \frac{\sum_{j=1}^{t} w_{qj} \times d_{ij}}{\sqrt{\sum_{j=1}^{t} d_{ij}^2 \, \sum_{j=1}^{t} w_{qj}^2}}$$

• Assumption: document length has no impact on relevance.
• Normalizes the weight by considering document length.
• Problem: longer documents are somewhat penalized, even though they may have more components that are indeed relevant [Singhal, 1997 - TREC].

[Figure: probability of relevance and probability of retrieval versus document length, with the pivot and slope marked.]
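The contrast between the inner product and the cosine measure can be sketched in a few lines of Python. This is an illustration only: the sparse-dictionary representation, the function names, and the weights are made up for the example and do not come from the slides.

```python
import math

def inner_product(q, d):
    """SC(Q, D) = sum over terms of w_q * d, for sparse {term: weight} vectors."""
    return sum(w * d.get(term, 0.0) for term, w in q.items())

def cosine(q, d):
    """Inner product divided by the product of the two vector lengths."""
    q_len = math.sqrt(sum(w * w for w in q.values()))
    d_len = math.sqrt(sum(w * w for w in d.values()))
    if q_len == 0.0 or d_len == 0.0:
        return 0.0
    return inner_product(q, d) / (q_len * d_len)

# Hypothetical weights: long_doc is short_doc with every weight doubled,
# as if the same text had been repeated twice.
query = {"gold": 0.5, "truck": 0.5}
short_doc = {"gold": 1.0, "fire": 1.0}
long_doc = {term: 2.0 * w for term, w in short_doc.items()}

print(inner_product(query, short_doc), inner_product(query, long_doc))
print(cosine(query, short_doc), cosine(query, long_doc))
```

The inner product doubles for the longer document, while the cosine score is unchanged because both vectors point in the same direction.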

Pivoted Cosine Normalization

• Compare the likelihood of retrieval and the likelihood of relevance in a collection to identify the pivot and, from it, the new correction factor.

$$SC(Q, D_i) = \frac{\sum_{j=1}^{t} w_{qj} \, d_{ij}}{(1.0 - s) + s \cdot \dfrac{\sqrt{\sum_{j=1}^{t} d_{ij}^2}}{avgn}}$$

  – avgn: average document normalization factor over the entire collection
  – s: can be obtained empirically

Pivoted Cosine Normalization

• Pivoted cosine normalization worked well for short and moderately long documents.
• Extremely long documents are favored.

Pivoted Unique Normalization

$$SC(Q, D_i) = \frac{\sum_{j=1}^{t} w_{qj} \, d_{ij}}{(1.0 - s)\,p + s \cdot |d_i|}$$

  – $d_{ij} = \dfrac{(1 + \log(tf)) \cdot idf}{1 + \log(atf)}$, where atf is the average tf
  – $|d_i|$: number of unique terms in a document
  – p: average number of unique terms per document over the entire collection
  – s: can be obtained empirically

VSM Example

• Q: “gold silver truck”
• D1: “Shipment of gold damaged in a fire”
• D2: “Delivery of silver arrived in a silver truck”
• D3: “Shipment of gold arrived in a truck”

| Id | Term | df | idf |
|----|----------|----|-------|
| 1 | a | 3 | 0 |
| 2 | arrived | 2 | 0.176 |
| 3 | damaged | 1 | 0.477 |
| 4 | delivery | 1 | 0.477 |
| 5 | fire | 1 | 0.477 |
| 6 | gold | 2 | 0.176 |
| 7 | in | 3 | 0 |
| 8 | of | 3 | 0 |
| 9 | silver | 1 | 0.477 |
| 10 | shipment | 2 | 0.176 |
| 11 | truck | 2 | 0.176 |

VSM Example (dot product)

| doc | t1 | t2 | t3 | t4 | t5 | t6 | t7 | t8 | t9 | t10 | t11 |
|-----|----|------|------|------|------|------|----|----|------|------|------|
| D1 | 0 | 0 | .477 | 0 | .477 | .176 | 0 | 0 | 0 | .176 | 0 |
| D2 | 0 | .176 | 0 | .477 | 0 | 0 | 0 | 0 | .954 | 0 | .176 |
| D3 | 0 | .176 | 0 | 0 | 0 | .176 | 0 | 0 | 0 | .176 | .176 |
| Q | 0 | 0 | 0 | 0 | 0 | .176 | 0 | 0 | .477 | 0 | .176 |

• Computing SC using the inner product:
• SC(Q, D1) = (0)(0) + (0)(0) + (0)(0.477) + (0)(0) + (0)(0.477) + (0.176)(0.176) + (0)(0) + (0)(0) + (0.477)(0) + (0)(0.176) + (0.176)(0) ≈ 0.031

Algorithm for Vector Space

• Assume: t.idf gives the idf of any term t.
• q.tf gives the tf of any query term.

    Begin
        Score[] ← 0
        For each term t in Query Q
            Obtain posting list l
            For each entry p in l
                Score[p.docid] = Score[p.docid] + (p.tf * t.idf)(q.tf * t.idf)

• Now we have a Score array that is unsorted.
• Sort the Score array and display the top x results.
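The scoring loop above can be sketched in Python and run on the three example documents. The whitespace tokenizer, the dictionary-based inverted index, and the function names are assumptions made for this illustration; the slides do not prescribe a data structure.

```python
import math
from collections import Counter, defaultdict

def build_index(docs):
    """Inverted index: term -> {doc_id: tf}. Tokenizes on whitespace, lowercased."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for term, tf in Counter(text.lower().split()).items():
            index[term][doc_id] = tf
    return index

def rank(query, index, n_docs, top_x=10):
    """Accumulate (p.tf * t.idf) * (q.tf * t.idf) per document, then sort."""
    scores = defaultdict(float)
    for term, q_tf in Counter(query.lower().split()).items():
        postings = index.get(term, {})
        if not postings:
            continue  # query term does not occur in the collection
        idf = math.log10(n_docs / len(postings))  # df = length of the posting list
        for doc_id, tf in postings.items():
            scores[doc_id] += (tf * idf) * (q_tf * idf)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_x]

docs = {
    "D1": "Shipment of gold damaged in a fire",
    "D2": "Delivery of silver arrived in a silver truck",
    "D3": "Shipment of gold arrived in a truck",
}
index = build_index(docs)
ranking = rank("gold silver truck", index, len(docs))
for doc_id, score in ranking:
    print(doc_id, round(score, 3))
```

With unrounded idf values this ranks D2 first (about 0.486), then D3 (about 0.062), then D1 (about 0.031), which agrees with the hand computation of SC(Q, D1). Only the posting lists of the query terms are touched, so the other terms in the collection cost nothing at query time.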

Summary: Vector Space Model

• Pros
  – Fairly cheap to compute
  – Yields decent effectiveness
  – Very popular
• Cons
  – No theoretical foundation
  – Weights in the vectors are arbitrary
  – Assumes term independence

Boolean Retrieval

• For many years, most commercial systems were only Boolean.
• Most old library systems and Lexis/Nexis have a long history of Boolean retrieval.
• Users who are experts at a complex query language can find what they are looking for.

    (t1 AND t2) OR (t3 AND t7) WITHIN 2 Sentences (t4 AND t5) NOT (t9 OR t10)

• Considers each document as a bag of words.

Boolean Retrieval

• Expression :=
  – term
  – ( expr )
  – NOT expr (not recommended)
  – expr AND expr
  – expr OR expr
• (cost OR price) AND paper AND NOT article

Boolean Example

| doc | t1 | t2 | t3 | t4 | t5 | t6 | t7 | t8 | t9 | t10 | t11 |
|-----|----|----|----|----|----|----|----|----|----|-----|-----|
| D1 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 |
| D2 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 1 |
| D3 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 1 |
| D4 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 |

Q: t1 AND t2 AND NOT t4

0110 AND 0110 AND 1011 = 0010, that is, D3. (Each bit string is a term's column read from D1 to D4; the third string is the complement of the t4 column.)

Processing Boolean Queries

• The doc-term matrix is too sparse, so an inverted index is used instead.
• Query optimization in Boolean retrieval: the order in which posting lists are accessed!

Processing Boolean Query

t1 AND t2

• Algorithm:
  – Find t1 in the index (lexicon)
  – Retrieve its posting list
  – Find t2 in the index (lexicon)
  – Retrieve its posting list
  – Intersect (merge) the posting lists
  – The matching DocIDs are added to the result list
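The intersect (merge) step can be sketched as a two-pointer walk over sorted posting lists. The posting lists below are read off the Boolean Example matrix, with document IDs 1 to 4 standing for D1 to D4; the function names and the `and_not` helper are assumptions for this illustration.

```python
def intersect(p1, p2):
    """Merge two posting lists sorted by DocID, keeping IDs present in both."""
    result = []
    i = j = 0
    while i < len(p1) and j < len(p2):
        if p1[i] == p2[j]:
            result.append(p1[i])
            i += 1
            j += 1
        elif p1[i] < p2[j]:
            i += 1  # advance whichever list has the smaller DocID
        else:
            j += 1
    return result

def and_not(p1, p2):
    """Keep DocIDs from p1 that do not appear in p2 (x AND NOT y)."""
    excluded = set(p2)
    return [doc_id for doc_id in p1 if doc_id not in excluded]

# Posting lists for the terms used in Q: t1 AND t2 AND NOT t4.
index = {"t1": [2, 3], "t2": [2, 3], "t4": [2]}

result = and_not(intersect(index["t1"], index["t2"]), index["t4"])
print(result)  # [3], i.e. D3
```

Handling NOT as a difference against an existing result avoids materializing the complement of a posting list, which could be nearly the whole collection.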

Processing Boolean Query

t1 AND t2 AND t3

• What is the best order to process this?
• Process in order of increasing document frequency, i.e., smaller posting lists first!
• Thus, if t1 and t2 have smaller posting lists than t3, process as: (t1 AND t2) AND t3

Intersection of Posting Lists

Algorithm:

  – Sort the query terms by document frequency.
  – Merge the smallest posting list with the next smallest posting list and create the result set.
  – Merge the next smallest posting list with the result set and update the result set.
  – Continue until no more terms are left.

Processing Boolean Query

(t1 OR t2) AND (t3 OR t4) AND (t5 OR t6)

• Using document frequency, estimate the size of the disjuncts.
• Order the conjuncts so that the smaller disjuncts are processed first.

Boolean Retrieval

• AND returns too few documents (low recall).
• OR returns too many documents (low precision).
• NOT eliminates many good documents (low recall).
• Proximity information is not supported.
• Term weight is not incorporated.

Extended (Weighted) Boolean Retrieval

• Extended Boolean supports term weight and proximity information.
• Example of incorporating term weight:
• Ranking by term frequency (Sony Search Engine):
  – x AND y: $tf_x \times tf_y$
  – x OR y: $tf_x + tf_y$
  – NOT x: 0 if $tf_x > 0$, 1 if $tf_x = 0$
• The user may assign term weights:

    cost and +paper

Summary of Boolean Retrieval

• Pro
  – Can use very restrictive search
  – Makes experienced users happy
• Con
  – Simple queries do not work well.
  – Complex query language, confusing to end users
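The ordering heuristic for conjunctive queries (smallest posting lists first) can be sketched as follows. The index contents are hypothetical, and Python sets stand in for the sorted-list merge to keep the example short; both are assumptions of this sketch rather than anything the slides specify.

```python
def processing_order(terms, index):
    """Sort query terms by document frequency (posting list length), ascending."""
    return sorted(terms, key=lambda term: len(index.get(term, [])))

def intersect_all(terms, index):
    """Evaluate t1 AND t2 AND ... starting from the smallest posting lists."""
    if not terms:
        return []
    ordered = processing_order(terms, index)
    result = set(index.get(ordered[0], []))
    for term in ordered[1:]:
        if not result:
            break  # an empty intermediate result cannot grow again
        result &= set(index.get(term, []))
    return sorted(result)

# Hypothetical posting lists: t1 is rare, t3 is very common.
index = {
    "t1": [2, 5],
    "t2": [1, 2, 5, 8],
    "t3": [1, 2, 3, 4, 5, 6, 7, 8],
}

print(processing_order(["t3", "t1", "t2"], index))  # ['t1', 't2', 't3']
print(intersect_all(["t3", "t1", "t2"], index))     # [2, 5]
```

Because the intermediate result can never be larger than the smallest list processed so far, starting with rare terms keeps every later merge small.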
