
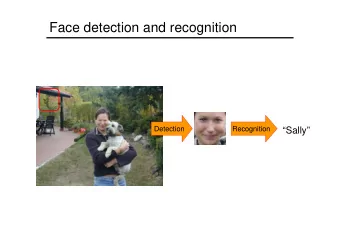


Depth, Human Pose, and Camera Pose
Jamie Shotton

• Depth sensing camera
• Tracks 20 body joints in real time
• Recognises your face and voice
[Image: Kinect Adventures]

What the Kinect Sees
[Figure: depth image (camera view), side view, top view]

Structured Light
[Figure: optic centres of the IR laser and of the camera, separated by a baseline; objects at depths d1 and d2; imaging plane; axes x, y, z]
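The diagram is a triangulation setup: because the IR laser and the camera sit a baseline apart, the position where a projected spot lands on the imaging plane shifts with the depth of the surface it hits. A minimal sketch of the textbook pinhole relation Z = f·b / disparity follows. `depth_from_disparity` is a hypothetical helper, the focal length and baseline are made-up illustration values rather than Kinect calibration, and a real sensor's pipeline is more involved than this single formula.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Pinhole triangulation: depth Z = f * b / disparity.

    disparity_px: shift of the projected pattern on the imaging plane (pixels)
    focal_px:     camera focal length in pixels (hypothetical value below)
    baseline_m:   distance between the laser and camera optic centres (metres)
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the camera")
    return focal_px * baseline_m / disparity_px


# Hypothetical calibration values, for illustration only.
FOCAL_PX = 580.0
BASELINE_M = 0.075

near = depth_from_disparity(43.5, FOCAL_PX, BASELINE_M)  # larger shift: closer object
far = depth_from_disparity(14.5, FOCAL_PX, BASELINE_M)   # smaller shift: farther object
print(f"{near:.2f} m, {far:.2f} m")
```

Depth is inversely proportional to disparity, so depth resolution degrades as objects move away from the sensor.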

Depth Makes Vision That Little Bit Easier

| RGB | Depth |
|---|---|
| Only works well lit | Works in low light |
| Background clutter | Background removal easier |
| Scale unknown | Calibrated depth readings |
| Color and texture variation | Uniform texture |
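Because depth readings are calibrated in metres, background removal can be as simple as a distance threshold, which has no direct RGB counterpart. The sketch below assumes a hypothetical `remove_background` helper, a 3 m working range, and that missing readings are encoded as 0; background pixels are overwritten with a large constant, the same convention the depth features use.

```python
import numpy as np

BACKGROUND = 1e4  # hypothetical "large constant" depth assigned to background pixels


def remove_background(depth_m, max_depth_m):
    """Treat pixels with no reading (encoded here as 0) or farther than max_depth_m
    as background, overwriting them with a large constant depth."""
    out = np.asarray(depth_m, dtype=float).copy()
    out[(out <= 0.0) | (out > max_depth_m)] = BACKGROUND
    return out


depth = np.array([[2.1, 2.2, 4.8],
                  [2.0, 0.0, 5.1]])  # metres; 0.0 marks a missing reading
clean = remove_background(depth, max_depth_m=3.0)
print(clean)
```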

KinectFusion
Joint work with Shahram Izadi, Richard Newcombe, David Kim, Otmar Hilliges, David Molyneaux, Pushmeet Kohli, Steve Hodges, Andrew Davison, Andrew Fitzgibbon. SIGGRAPH, UIST and ISMAR 2011.

KinectFusion
Camera drift

Roadmap
• The Vitruvian Manifold [CVPR 2012]
• Scene Coordinate Regression [CVPR 2013]

The Vitruvian Manifold
Jonathan Taylor, Jamie Shotton, Toby Sharp, Andrew Fitzgibbon
CVPR 2012

Human Pose Estimation
Given some image input, recover the 3D human pose: joint positions and angles.
In this work:
• Single frame at a time (no tracking)
• Kinect depth image as input (background removed)

Why is Pose Estimation Hard?

A Few Approaches
• Regress directly to pose? e.g. [Gavrila '00] [Agarwal & Triggs '04]
• Detect and assemble parts? e.g. [Felzenszwalb & Huttenlocher '00] [Ramanan & Forsyth '03] [Sigal et al. '04]
• Detect parts? e.g. [Bourdev & Malik '09] [Plagemann et al. '10] [Kalogerakis et al. '10]
• Per-Pixel Body Part Classification [Shotton et al. '11]
• Per-Pixel Joint Offset Regression [Girshick et al. '11]

Background: Learning Body Parts for Kinect [Shotton et al. CVPR 2011]
[Figure: input depth image → BPC (body part classification) → body parts → clustering → body joint hypotheses (front, side, and top views)]

Synthetic Training Data
• Record mocap: 100,000s of poses [Vicon]
• Retarget to varied body shapes
• Render (depth, body parts) pairs
• Train invariance to:

Depth Image Features
• Depth comparisons: very fast to compute
$$f(x; v) = d(x) - d(x + \Delta), \qquad \Delta = \frac{v}{d(x)}$$
  where $x$ is the image coordinate, $d(\cdot)$ the depth, $\Delta$ the offset, and $f$ the feature response
• The offset $\Delta$ scales inversely with depth
• Background pixels: $d$ = large constant
[Figure: input depth image with an example pixel x and offset Δ]
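A minimal sketch of the depth comparison feature exactly as written on the slide, assuming (row, col) pixel coordinates, nearest-pixel lookup, and a hypothetical value of 1e4 for the "large constant" background depth. `depth_at` and `depth_feature` are illustrative names. Each evaluation costs two array reads and a subtraction, which is why the feature is so cheap.

```python
import numpy as np

BACKGROUND = 1e4  # hypothetical "large constant" depth for background and off-image probes


def depth_at(depth, x):
    """d(x): depth at the nearest pixel to x = (row, col); off-image probes read as background."""
    r, c = int(round(x[0])), int(round(x[1]))
    if 0 <= r < depth.shape[0] and 0 <= c < depth.shape[1]:
        return float(depth[r, c])
    return BACKGROUND


def depth_feature(depth, x, v):
    """f(x; v) = d(x) - d(x + Delta) with Delta = v / d(x).

    Dividing v by d(x) shrinks the probe offset for distant pixels, so the same
    body point gives a similar response whether the person is near or far."""
    x = np.asarray(x, dtype=float)
    d_x = depth_at(depth, x)
    delta = np.asarray(v, dtype=float) / d_x
    return d_x - depth_at(depth, x + delta)


# Hypothetical 5x8 image: a person at 2 m fills the left half, background elsewhere.
depth = np.full((5, 8), BACKGROUND)
depth[:, :4] = 2.0
print(depth_feature(depth, (2, 2), (0, 2)))  # probe stays on the person
print(depth_feature(depth, (2, 2), (0, 6)))  # probe lands on background: large negative
```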

Decision Tree Classification
[Figure: an image window centred at x descends the tree. The root tests f(x; v_1) > θ_1; its "no"/"yes" children test f(x; v_2) > θ_2 and f(x; v_3) > θ_3; each of the four leaves stores a distribution P(c) over body parts.]
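Evaluating a tree is a loop of threshold tests until a leaf is reached. In this sketch the `Leaf`/`Split` classes and `classify` are hypothetical names, the toy tree is made up, and `toy_feature` (a dot product) stands in for the depth comparison f(x; v) so the example stays self-contained.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence, Union


@dataclass
class Leaf:
    probs: Dict[str, float]  # P(c): distribution over body parts stored at this leaf


@dataclass
class Split:
    v: Sequence[float]  # feature parameters v_n tested at this node
    theta: float        # threshold theta_n
    no: "Node"          # child for f(x; v_n) <= theta_n
    yes: "Node"         # child for f(x; v_n) > theta_n


Node = Union[Leaf, Split]


def classify(node: Node, x, feature: Callable) -> Dict[str, float]:
    """Descend from the root, testing f(x; v_n) > theta_n at each split, and return P(c)."""
    while isinstance(node, Split):
        node = node.yes if feature(x, node.v) > node.theta else node.no
    return node.probs


def toy_feature(x, v):
    """Hypothetical stand-in for the depth comparison feature: a dot product."""
    return sum(a * b for a, b in zip(x, v))


tree = Split(v=(1, 0), theta=0.5,
             no=Split(v=(0, 1), theta=0.5,
                      no=Leaf({"head": 0.9, "hand": 0.1}),
                      yes=Leaf({"head": 0.2, "hand": 0.8})),
             yes=Leaf({"torso": 1.0}))
print(classify(tree, (0, 1), toy_feature))  # {'head': 0.2, 'hand': 0.8}
```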

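Training Decision Trees [Breiman et al. 84]
• At node n, the set of all pixels $S_n = \{x\}$ has a distribution $P_n(c)$ over body parts c
• A split tests $f(x; v_n) > \theta_n$, sending each pixel to the left ("no") child, with distribution $P_l(c)$, or the right ("yes") child, with $P_r(c)$, so as to reduce entropy
• Take the $(v, \theta)$ that maximises the information gain:
$$\Delta E = -\frac{|S_l|}{|S_n|} E(S_l) - \frac{|S_r|}{|S_n|} E(S_r)$$
  (the parent entropy $E(S_n)$ is the same for every candidate split, so it can be dropped without changing the maximiser)
• Goal: drive the entropy at the leaf nodes to zero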
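The split search can be sketched as scoring each candidate (v, θ) by the weighted child entropies, exactly the ΔE above. Entropy is measured in bits here (any log base gives the same argmax); `best_split`, the candidate list, the labels, and the 1-D stand-in feature are hypothetical, and the recursion that grows the rest of the tree is omitted.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy E(S), in bits, of the body-part labels in S."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())


def split_score(left, right):
    """Delta E = -|S_l|/|S_n| E(S_l) - |S_r|/|S_n| E(S_r).
    Adding the constant E(S_n) gives the usual information gain, with the same argmax."""
    n = len(left) + len(right)
    return -(len(left) / n) * entropy(left) - (len(right) / n) * entropy(right)


def best_split(pixels, labels, candidates, feature):
    """Score every candidate (v, theta) on the node's pixels S_n and keep the best."""
    best = None
    for v, theta in candidates:
        left = [c for x, c in zip(pixels, labels) if not feature(x, v) > theta]  # "no"
        right = [c for x, c in zip(pixels, labels) if feature(x, v) > theta]     # "yes"
        if not left or not right:
            continue  # a split that sends every pixel one way is useless
        score = split_score(left, right)
        if best is None or score > best[0]:
            best = (score, v, theta)
    return best


def feature(x, v):
    """Hypothetical 1-D stand-in for f(x; v)."""
    return x[0] * v


pixels = [(0.1,), (0.2,), (0.8,), (0.9,)]
labels = ["hand", "hand", "head", "head"]
best = best_split(pixels, labels, [(1.0, 0.15), (1.0, 0.5)], feature)
print(best)  # theta = 0.5 separates the two parts perfectly (score 0)
```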

Decision Forests Book
• Theory – Tutorial & Reference
• Practice – Invited Chapters
• Software and Exercises
• Tricks of the Trade

[Figure: input depth → inferred body parts; no tracking or smoothing]


[Figure: input depth → inferred body parts → inferred joint position hypotheses (front, side, and top views); no tracking or smoothing]

Body Part Recognition in Kinect
• Single frame at a time → robust
• Large training corpus → invariant
• Fast, parallel implementation
• Skeleton does not explain the depth data
• Limited ability to cope with hard poses

A Few Approaches
Explain the data directly with a mesh model [Ballan et al. '08] [Baak et al. '11]
• GOOD: Full skeleton
• GOOD: Kinematic constraints enforced from the outset
• GOOD: Able to cope with occlusion and cropping
• BAD: Many local minima
• BAD: Highly sensitive to initial guess
• BAD: Potentially slow

Human Skeleton Model
• Mesh is attached to a hierarchical skeleton
• Each limb $l$ has a transformation matrix $T_l(\theta)$ relating its local coordinate system to the world:
$$T_{\text{root}}(\theta) = R_{\text{global}}(\theta), \qquad T_l(\theta) = T_{\text{parent}(l)}(\theta)\, R_l(\theta)$$
• $R_{\text{global}}(\theta)$ encodes a global scaling, translation and rotation
• $R_l(\theta)$ encodes a rotation and fixed translation relative to its parent
• 13 parameterized joints, using quaternions to represent unconstrained rotations
• This gives θ a total of 1 + 3 + 4 + 4 × 13 = 60 degrees of freedom
[Figure label: $R_{\text{l\_arm}}(\theta)$]
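A sketch of evaluating the hierarchy root-first, assuming 4×4 homogeneous matrices, quaternions in (w, x, y, z) order, and a hypothetical three-limb chain with made-up bone offsets in place of the real 13-joint skeleton. Names such as `limb_transforms` and `homogeneous` are illustrative. Normalising each quaternion before use is what lets its four parameters vary freely.

```python
import numpy as np


def quat_to_matrix(q):
    """3x3 rotation from a quaternion (w, x, y, z). Normalising first means any
    non-zero 4-vector is a valid rotation, so the parameters need no constraints."""
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def homogeneous(rot, trans, scale=1.0):
    """4x4 transform that scales, rotates, then translates."""
    T = np.eye(4)
    T[:3, :3] = scale * rot
    T[:3, 3] = trans
    return T


def limb_transforms(parents, offsets, theta):
    """T_root = R_global and T_l = T_parent(l) @ R_l, evaluated root-first.

    parents: limb -> parent limb (None for the root), parents listed before children
    offsets: limb -> fixed translation relative to its parent (hypothetical bone vectors)
    theta:   'scale', 'trans', 'rot' for R_global, plus one quaternion per jointed limb
    """
    T = {}
    for limb, parent in parents.items():
        if parent is None:
            T[limb] = homogeneous(quat_to_matrix(theta["rot"]), theta["trans"], theta["scale"])
        else:
            R_l = homogeneous(quat_to_matrix(theta[limb]), offsets[limb])
            T[limb] = T[parent] @ R_l
    return T


# Hypothetical 3-limb chain; the real model has 13 jointed limbs.
parents = {"root": None, "upper_arm": "root", "forearm": "upper_arm"}
offsets = {"upper_arm": [0.2, 0.0, 0.0], "forearm": [0.3, 0.0, 0.0]}
s = np.sqrt(0.5)
theta = {"scale": 1.0, "trans": [0.0, 0.0, 0.0], "rot": [1, 0, 0, 0],
         "upper_arm": [s, 0, 0, s],  # 90 degrees about z
         "forearm": [1, 0, 0, 0]}    # identity
T = limb_transforms(parents, offsets, theta)
print(np.round(T["forearm"][:3, 3], 3))  # forearm origin, expected (0.2, 0.3, 0.0)
print(1 + 3 + 4 + 4 * 13)                # parameter count from the slide
```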

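Linear Blend Skinning
Each vertex $u$
• has position $p$ in the base pose $\theta_0$
• is attached to $K$ limbs $\{l_k\}_{k=1}^{K}$ with weights $\{\alpha_k\}_{k=1}^{K}$
In a new pose θ, the skinned position of $u$ is:
$$M(u; \theta) = \sum_{k=1}^{K} \alpha_k \, T_{l_k}(\theta) \, T_{l_k}^{-1}(\theta_0) \, p$$
Here $T_{l_k}^{-1}(\theta_0)\, p$ is the vertex position in limb $l_k$'s coordinate system, and applying $T_{l_k}(\theta)$ gives its position in the world coordinate system.
[Figure: mesh in base pose $\theta_0$]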
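The skinning formula maps directly to code: take the vertex into each attached limb's frame with the base-pose inverse, carry it with the new-pose transform, and blend. A sketch assuming 4×4 homogeneous limb-to-world matrices and weights that sum to 1; `skin_vertex` and the two-limb example are hypothetical. Using `np.linalg.solve` applies the inverse without forming it explicitly.

```python
import numpy as np


def skin_vertex(p_base, limbs, weights, T_pose, T_base):
    """Linear blend skinning for one vertex:
    M(u; theta) = sum_k alpha_k T_{l_k}(theta) T_{l_k}(theta_0)^{-1} p

    p_base:  position p of the vertex in the base pose theta_0, shape (3,)
    limbs:   the K limbs l_k the vertex is attached to
    weights: blend weights alpha_k (assumed to sum to 1)
    T_pose, T_base: limb -> 4x4 limb-to-world transform in the new / base pose
    """
    p_h = np.append(np.asarray(p_base, dtype=float), 1.0)  # homogeneous coordinates
    out = np.zeros(4)
    for limb, alpha in zip(limbs, weights):
        local = np.linalg.solve(T_base[limb], p_h)  # position in limb l_k's coordinate system
        out += alpha * (T_pose[limb] @ local)       # world position in the new pose
    return out[:3]


# Hypothetical two-limb example: the "lower" limb bends 90 degrees about z at x = 1.
Rz90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
T_base = {"upper": np.eye(4), "lower": np.eye(4)}
T_base["lower"][:3, 3] = [1.0, 0.0, 0.0]
T_pose = {"upper": np.eye(4), "lower": np.eye(4)}
T_pose["lower"][:3, :3] = Rz90
T_pose["lower"][:3, 3] = [1.0, 0.0, 0.0]

p_new = skin_vertex([2.0, 0.0, 0.0], ["upper", "lower"], [0.5, 0.5], T_pose, T_base)
print(p_new)  # expected (1.5, 0.5, 0.0)
```

With equal weights the vertex lands halfway between where each limb alone would carry it: (2, 0, 0) under "upper" and (1, 1, 0) under "lower".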

Test Time Model Fitting
• Assume each observation $x_i$ is generated by a point $u_i$ on our model:
  Observed points: $x_1, \dots, x_n$
  Corresponding model points: $u_1, \dots, u_n$
  $x_i = M(u_i; \theta)$
• What pose is the model in? Optimize:
$$\min_{\theta} \min_{u_1 \dots u_n} \sum_i d\big(x_i, M(u_i; \theta)\big)$$
  where $x_i$ is the observed 3D point and $M(u_i; \theta)$ the predicted 3D point.
Note: simplified energy; more details to come.

Optimizing $\min_{\theta} \min_{u_1 \dots u_n} \sum_i d\big(x_i, M(u_i; \theta)\big)$
• Alternating between pose θ and correspondences $u_1, \dots, u_n$: Articulated Iterative Closest Point (ICP)
• Traditionally, start from an initial θ:
  • manual initialization
  • tracking from the previous frame
• Could we instead infer initial correspondences $u_1, \dots, u_n$ discriminatively?
• And do we even need to iterate?
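To make the alternation concrete, here is a rigid ICP sketch: a deliberately simplified stand-in for articulated ICP, in which the pose is a single rotation and translation rather than the full θ. The closest-point step chooses correspondences; the Kabsch (SVD) step then solves for the pose in closed form. All names and the 2D test data are hypothetical.

```python
import numpy as np


def kabsch(src, dst):
    """Rigid (R, t) minimising sum_i ||R src_i + t - dst_i||^2 for known correspondences."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)
    U, _, Vt = np.linalg.svd(H)
    flip = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against returning a reflection
    D = np.diag([1.0] * (src.shape[1] - 1) + [flip])
    R = Vt.T @ D @ U.T
    return R, mu_d - R @ mu_s


def icp(observed, model, iters=20):
    """Alternate (1) correspondences: closest posed model point to each observation,
    and (2) pose: best rigid fit for those correspondences. Starts from the identity."""
    dim = model.shape[1]
    R, t = np.eye(dim), np.zeros(dim)
    for _ in range(iters):
        posed = model @ R.T + t
        d2 = ((observed[:, None, :] - posed[None, :, :]) ** 2).sum(axis=-1)
        corr = d2.argmin(axis=1)                # model point index for each x_i
        R, t = kabsch(model[corr], observed)    # pose given fixed correspondences
    return R, t


# Hypothetical 2D test: observations are the model moved by a small known rigid motion.
ang = np.deg2rad(5.0)
R_true = np.array([[np.cos(ang), -np.sin(ang)], [np.sin(ang), np.cos(ang)]])
t_true = np.array([0.05, -0.02])
model = np.array([[0, 0], [1, 0], [2, 0], [0, 1], [0, 2], [3, 1]], dtype=float)
observed = model @ R_true.T + t_true
R_est, t_est = icp(observed, model)
print(np.round(R_est, 4), np.round(t_est, 4))
```

With the small motion used here, the first closest-point assignment is already correct, so the first pose update lands on the answer and later iterations change nothing. A large initial misalignment would instead produce wrong correspondences and risk a local minimum, which is the sensitivity to the initial guess listed earlier.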

One-Shot Pose Estimation: An Early Result
Can we achieve a good result without iterating between pose θ and correspondences $u_1, \dots, u_n$?
[Figure panels: test depth image, correspondences, convergence visualization, ground truth]

From Body Parts to Dense Correspondences
[Figure: increasing the number of parts moves from classification (body parts) to regression (the "Vitruvian Manifold")]
Texture is mapped across body shapes and poses.

The "Vitruvian Manifold"
• Embedding in 3D, with coordinates u, v, w each ranging from −1 to 1
• Geodesic surface distances approximated by Euclidean distance
[L. Da Vinci, 1487]
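One way to use such an embedding, assuming the regression forest outputs a continuous (u, v, w) point: snap it to the model vertex with the nearest embedding coordinate, with Euclidean distance standing in for geodesic distance on the body surface. The slide does not say how predictions are mapped back onto the mesh; `snap_to_model` and the four-vertex embedding are hypothetical.

```python
import numpy as np


def snap_to_model(pred_uvw, vertex_uvw):
    """For each predicted (u, v, w), return the index of the model vertex whose embedding
    coordinate is closest in Euclidean distance (a proxy for geodesic distance)."""
    d2 = ((pred_uvw[:, None, :] - vertex_uvw[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)


# Hypothetical embedding of four model vertices, coordinates in [-1, 1]^3.
vertex_uvw = np.array([[-1.0, 1.0, 0.0],
                       [1.0, 1.0, 0.0],
                       [0.0, 0.9, 0.2],
                       [0.0, -1.0, 0.0]])
pred = np.array([[0.9, 0.8, 0.1],
                 [0.1, -0.7, 0.0]])
print(snap_to_model(pred, vertex_uvw))  # [1 3]
```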

Overview
[Figure: training images → regression forest; test images → inferred dense correspondences → optimization of energy function over model parameters θ → final optimized poses (front, right, and top views)]

Discriminative Model: Predicting Correspondences
[Figure: training images → regression forest; input images → inferred dense correspondences]

Learning the Correspondences
• How to learn the mapping from depth pixels $x_i$ to correspondences?
• Render synthetic training set [figure: characters, mocap, render]
• Train regression forest

Learning a Regression Model at the Leaf Nodes
• Each pixel-correspondence pair descends to a leaf in the tree
• Mean shift mode detection
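Mean shift finds a mode of the correspondences that reach a leaf by repeatedly moving to a kernel-weighted mean. A sketch assuming a Gaussian kernel, a single mode, a hypothetical bandwidth of 0.05, and made-up leaf data; the slide does not give these details. It also shows why a mode is preferable to a plain mean when one sample is an outlier.

```python
import numpy as np


def mean_shift_mode(points, start, bandwidth, iters=100, tol=1e-9):
    """Gaussian-kernel mean shift: move to the kernel-weighted mean of the points
    until the step is smaller than tol, returning the local mode reached."""
    y = np.asarray(start, dtype=float)
    for _ in range(iters):
        w = np.exp(-((points - y) ** 2).sum(axis=1) / (2.0 * bandwidth ** 2))
        y_next = (w[:, None] * points).sum(axis=0) / w.sum()
        if np.linalg.norm(y_next - y) < tol:
            return y_next
        y = y_next
    return y


# Hypothetical (u, v, w) correspondences that reached one leaf: a tight cluster plus an outlier.
leaf_points = np.array([[0.50, 0.50, 0.0], [0.52, 0.50, 0.0], [0.48, 0.50, 0.0],
                        [0.50, 0.52, 0.0], [0.50, 0.48, 0.0], [-0.80, 0.90, 0.7]])
mode = mean_shift_mode(leaf_points, start=leaf_points[0], bandwidth=0.05)
print(mode, leaf_points.mean(axis=0))  # the mode ignores the outlier; the plain mean does not
```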

Inferring Correspondences

infer correspondences $U$ → optimize parameters $\min_{\theta} E(\theta, U)$

Full Energy
$$E(\theta, U) = \lambda_{\text{vis}} E_{\text{vis}}(\theta, U) + \lambda_{\text{prior}} E_{\text{prior}}(\theta) + \lambda_{\text{int}} E_{\text{int}}(\theta)$$
• Term $E_{\text{vis}}$ approximates hidden surface removal and uses a robust error $\rho(e)$ [plot: $\rho(e)$ with its minimum at $e = 0$]
• Gaussian prior term $E_{\text{prior}}$
• Self-intersection prior term $E_{\text{int}}$ approximates interior volume [figure labels: $c_t(\theta_0)$, $c_s(\theta_0)$]
Energy is robust to noisy correspondences:
• Correspondences far from their image points are "ignored"
• Correspondences facing away from the camera are "ignored"
• This avoids the model getting stuck in front of the image pixels
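A sketch of how the terms combine. The slide does not specify ρ, so a truncated quadratic is assumed here; the facing test compares model normals with the viewing direction (camera looking along +z); E_int is passed in as a number because its construction is not detailed; all function names, weights, and data are hypothetical. In the example the far-off correspondence contributes only the cap τ², and the back-facing one contributes nothing.

```python
import numpy as np


def rho(e, tau):
    """Assumed robust error: quadratic near e = 0, capped at tau**2, so a far-away
    correspondence adds a constant and exerts no pull on the pose."""
    return np.minimum(e ** 2, tau ** 2)


def e_vis(observed, predicted, normals, view_dir, tau):
    """Robust data term; model points whose normals face away from the camera are skipped."""
    facing = (normals @ view_dir) < 0.0               # normal points back toward the camera
    e = np.linalg.norm(observed - predicted, axis=1)  # residual per correspondence
    return float(rho(e[facing], tau).sum())


def e_prior(theta, mean, cov_inv):
    """Gaussian prior on the pose, as a negative log-likelihood up to a constant."""
    d = np.asarray(theta, dtype=float) - mean
    return float(0.5 * d @ cov_inv @ d)


def energy(vis, prior, interior, lam_vis=1.0, lam_prior=1.0, lam_int=1.0):
    """E = lam_vis * E_vis + lam_prior * E_prior + lam_int * E_int (weights hypothetical)."""
    return lam_vis * vis + lam_prior * prior + lam_int * interior


observed = np.array([[0.0, 0.0, 2.0], [0.1, 0.0, 2.0], [0.0, 0.0, 2.0]])
predicted = np.array([[0.0, 0.0, 2.01], [3.0, 0.0, 2.0], [0.0, 0.0, 2.5]])
normals = np.array([[0.0, 0.0, -1.0], [0.0, 0.0, -1.0], [0.0, 0.0, 1.0]])
view_dir = np.array([0.0, 0.0, 1.0])  # camera looks along +z
vis = e_vis(observed, predicted, normals, view_dir, tau=0.1)
prior = e_prior([1.0, 0.0], np.zeros(2), np.eye(2))
print(vis, energy(vis, prior, interior=0.0))
```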

"Easy" Metric: Average Joint Accuracy
[Plot: joints average accuracy (% joints within distance D, 0 to 100%) against D, the max allowed distance to GT (0 to 0.2 m). Legend: Our algorithm; Given GT U; Optimal θ. Results on 5000 synthetic images.]

Hard Metric: "Perfect" Frame Accuracy
[Plot: worst-case accuracy (% frames with all joints within distance D, 0 to 100%) against D, the max allowed distance to GT (0 to 0.3 m). Legend: Our algorithm; Given GT U; Optimal θ. Results on 5000 synthetic images.]
Example frames labelled D = 0.09 m, 0.11 m, 0.17 m, 0.21 m, 0.45 m
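Both metrics can be computed from per-joint errors, and sweeping D over a range traces out curves like those plotted. A sketch assuming predicted and ground-truth joints stored as arrays of shape (frames, joints, 3) in metres; the function names and the two-frame data are hypothetical.

```python
import numpy as np


def joint_errors(pred, gt):
    """Per-joint Euclidean error; pred and gt have shape (frames, joints, 3), in metres."""
    return np.linalg.norm(pred - gt, axis=-1)


def average_joint_accuracy(pred, gt, D):
    """'Easy' metric: fraction of all joints, over all frames, within D of ground truth."""
    return float((joint_errors(pred, gt) <= D).mean())


def perfect_frame_accuracy(pred, gt, D):
    """'Hard' metric: fraction of frames whose worst joint is within D of ground truth."""
    return float((joint_errors(pred, gt).max(axis=1) <= D).mean())


# Hypothetical example: 2 frames x 2 joints.
gt = np.zeros((2, 2, 3))
pred = np.zeros((2, 2, 3))
pred[0, 0, 0], pred[0, 1, 1] = 0.05, 0.08  # frame 0: both joints within 0.1 m
pred[1, 0, 2], pred[1, 1, 0] = 0.02, 0.30  # frame 1: one joint is 0.3 m off
print(average_joint_accuracy(pred, gt, 0.1))  # 0.75
print(perfect_frame_accuracy(pred, gt, 0.1))  # 0.5
```

A single bad joint costs the hard metric a whole frame but the easy metric only one joint, which is why the worst-case curves sit lower.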

Comparison
[Plot: worst-case accuracy (% frames with all joints within distance D, 0 to 70%) against D, the max allowed distance to GT (0 to 0.3 m). Achievable algorithms: Our algorithm (Vitruvian Manifold); [Shotton et al. '11] (top hypothesis); [Girshick et al. '11] (top hypothesis). Require an oracle: [Shotton et al. '11] (best of top 5); [Girshick et al. '11] (best of top 5). Results on 5000 synthetic images.]

Sequence Result
Each frame is fit independently: no temporal information is used.
