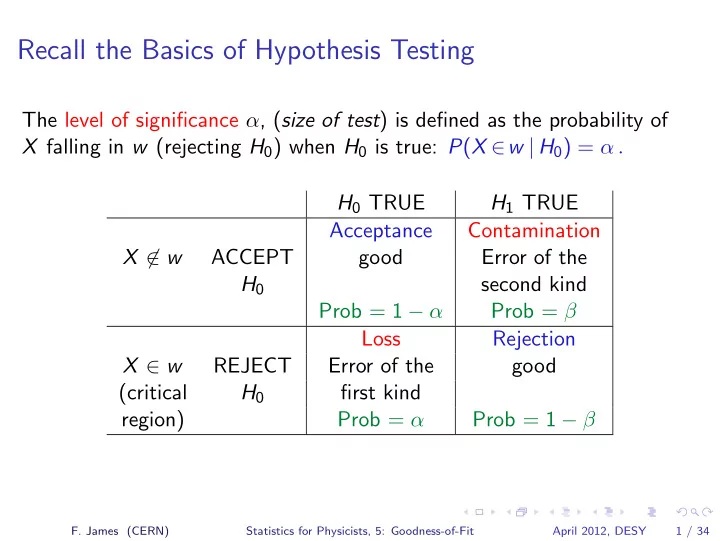

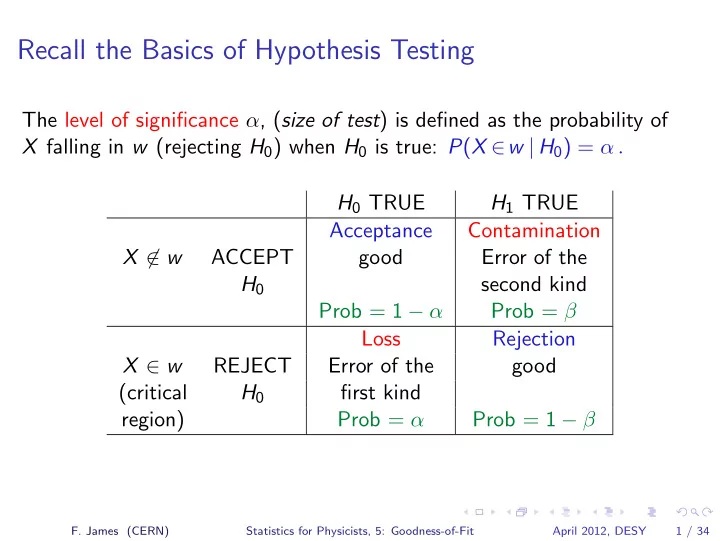

Recall the Basics of Hypothesis Testing The level of significance α , ( size of test ) is defined as the probability of X falling in w (rejecting H 0 ) when H 0 is true: P ( X ∈ w | H 0 ) = α . H 0 TRUE H 1 TRUE Acceptance Contamination X �∈ w ACCEPT good Error of the H 0 second kind Prob = 1 − α Prob = β Loss Rejection X ∈ w REJECT Error of the good (critical H 0 first kind region) Prob = α Prob = 1 − β F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 1 / 34

Goodness-of-Fit Testing Goodness-of-Fit Testing (GOF) As in hypothesis testing, we are again concerned with the test of a null hypothesis H 0 with a test statistic T , in a critical region w α , at a significance level α . Unlike the previous situations, however, the alternative hypothesis, H 1 is now the set of all possible alternatives to H 0 . Thus H 1 cannot be formulated, the risk of second kind, β , is unknown, and the power of the test is undefined. Since it is in general impossible to know whether one test is more powerful than another, the theoretical basis for goodness-of-fit (GOF) testing is much less satisfactory than the basis for classical hypothesis testing. Nevertheless, GOF testing is quantitatively the most successful area of statistics. In particular, Pearson’s venerable Chi-square test is the most heavily used method in all of statistics. F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 2 / 34

Goodness-of-Fit Testing GOF Testing: From the test statistic to the P-value. GOF Testing: From the test statistic to the P-value. Goodness-of-fit tests compare the experimental data with their p.d.f. under the null hypothesis H 0 , leading to the statement: If H 0 were true and the experiment were repeated many times, one would obtain data as far away (or further) from H 0 as the observed data with probability P. The quantity P is then called the P-value of the test for this data set and hypothesis. A small value of P is taken as evidence against H 0 , which the physicist calls a bad fit. F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 3 / 34

Goodness-of-Fit Testing GOF Testing: From the test statistic to the P-value. From the test statistic to the P-value. It is clear from the above that in order to construct a GOF test we need: 1. A test statistic, that is a function of the data and of H 0 , which is a measure of the “distance” between the data and the hypothesis, and 2. A way to calculate the probability of exceeding the observed value of the test statistic for H 0 . That is, a function to map the value of the test statistic into a P-value. If the data X are discrete and our test statistic is t = t ( X ) which takes on the value t 0 = t ( X 0 ) for the data X 0 , the P-value would be given by: � P X = P ( X | H 0 ) , X : t ≥ t 0 where the sum is taken over all values of X for which t ( X ) ≥ t 0 . F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 4 / 34

Goodness-of-Fit Testing GOF Testing: From the test statistic to the P-value. Example: Test of Poisson counting rate Example of discrete counting data: We have recorded 12 counts in one year, and we wish to know if this is compatible with the theory which predicts µ = 17 . 3 counts per year. The obvious test statistic is the absolute difference | N − µ | , and assuming that the probability of n decays is given by the Poisson distribution, we can calculate the P-value by taking the sum in the previous slide. 12 ∞ e − µ µ n e − 17 . 3 17 . 3 n e − 17 . 3 17 . 3 n � � � P 12 = = + n ! n ! n ! n =0 n =23 n : | n − µ |≥ 5 . 3 Evaluating the above P-value, we get P 12 = 0 . 229. The interpretation is that the observation is not significantly different from the expected value, since one should observe a number of counts at least as far from the expected value about 23% of the time. F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 5 / 34

Goodness-of-Fit Testing GOF Testing: From the test statistic to the P-value. Poisson Test Example seen visually The length of the vertical bars is proportional to the Poisson probability of n (the x-axis) for H 0 : µ = 17 . 3. probability p 10 20 30 40 n obs H 0 F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 6 / 34

Goodness-of-Fit Testing GOF Testing: From the test statistic to the P-value. Distribution-free Tests When the data are continuous, the sum becomes an integral: � P X = P ( X | H 0 ) , (1) X : t > t 0 and this can become quite complicated to compute, so that one tries to avoid using this form. Instead, one looks for a test statistic such that the distribution of t is known independently of H 0 . Such a test is called a distribution-free test. We consider here mainly distribution-free tests, such that the P-value does not depend on the details of the hypothesis H 0 , but only on the value of t , and possibly one or two integers such as the number of events, the number of bins in a histogram, or the number of constraints in a fit. Then the mapping from t to P-value can be calculated once for all and published in tables, of which the well-known χ 2 tables are an example. F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 7 / 34

Goodness-of-Fit Testing Pearson’s Chi-square Test Pearson’s Chi-square Test An obvious way to measure the distance between the data and the hypothesis H 0 is to 1. Determine the expectation of the data under the hypothesis H 0 . 2. Find a metric in the space of the data to measure the distance of the observed data from its expectation under H 0 . When the data consists of measurements Y = Y 1 , Y 2 , . . . , Y k of quantities V , which, under H 0 are equal to f = f 1 , f 2 , . . . , f k with covariance matrix ∼ the distance between the data and H 0 is clearly: V − 1 ( Y − f ) T = ( Y − f ) T ∼ This is just the Pearson test statistic usually called chi-square, because it is distributed as χ 2 ( k ) under H 0 if the measurements Y are Gaussian-distributed. That means the P-value may be found from a table of χ 2 ( k ), or by calling PROB(T,k). F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 8 / 34

Goodness-of-Fit Testing Tests on Histograms Pearson’s Chi-square test for histograms Karl Pearson made use of the asymptotic Normality of a multinomial p.d.f. in order to find the (asymptotic) distribution of: T = ( n − N p ) T ∼ V − 1 ( n − N p ) V is the covariance matrix of the observations (bin contents) n and where ∼ N is the total number of events in the histogram. In the usual case where the bins are independent, we have k k n 2 ( n i − Np i ) 2 T = 1 = 1 � � i − N . N p i N p i i =1 i =1 This is the usual χ 2 goodness-of-fit test for histograms. The distribution of T is generally accepted as close enough to χ 2 ( k − 1) when all the expected numbers of events per bin ( Np i ) are greater than 5. Cochran relaxes this restriction, claiming the approximation to be good if not more than 20% of the bins have expectations between 1 and 5. F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 9 / 34

Goodness-of-Fit Testing Tests on Histograms Chi-square test with estimation of parameters If the parent distribution depends on a vector of parameters θ = θ 1 , θ 2 , . . . , θ r , to be estimated from the data, one does not expect the T statistic to behave as a χ 2 ( k − 1), except in the limiting case where the r parameters do not actually affect the goodness of fit. In the more general and usual case, one can show that when r parameters are estimated from the same data, the cumulative distribution of T is intermediate between a χ 2 ( k − 1) (which holds when θ is fixed) and a χ 2 ( k − r − 1) (which holds for an optimal estimation method), always assuming the null hypothesis. The test is no longer distribution-free, but when k is large and r small, the two boundaries χ 2 ( k − 1) and χ 2 ( k − r − 1) become close enough to make the test practically distribution-free. In practice, the r parameters will be well chosen, and T will usually behave as χ 2 ( k − r − 1) . F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 10 / 34

Goodness-of-Fit Testing Tests on Histograms Binned Likelihood (Likelihood Chi-square) Pearson’s Chi-square is a good test statistic when fitting a curve to points with Gaussian errors, but for fitting histograms, we can make use of the known distribution of events in a bin, which is not exactly Gaussian: ◮ It is Poisson-distributed if the bin contents are independent (no constraint on the total number of events). ◮ Or it is Multinomial-distributed if the total number of events in the histogram is fixed. reference: Baker and Cousins, Clarification of the Use of Chi-square and Likelihood Functions in Fits to Histograms NIM 221 (1984) 437 Our test statistic will be the binned likelihood, which Baker and Cousins called the likelihood chi-square because it behaves as a χ 2 , although it is derived as a likelihood ratio. F. James (CERN) Statistics for Physicists, 5: Goodness-of-Fit April 2012, DESY 11 / 34

Recommend

More recommend