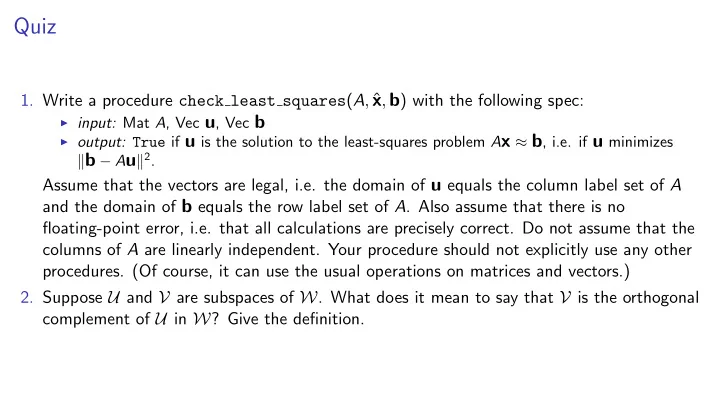

Quiz

1. Write a procedure check_least_squares(A, u, b) with the following spec:
   - input: Mat A, Vec u, Vec b
   - output: True if u is a solution to the least-squares problem $Ax \approx b$, i.e. if u minimizes $\|b - Au\|^2$.

   Assume that the vectors are legal, i.e. the domain of u equals the column-label set of A and the domain of b equals the row-label set of A. Also assume that there is no floating-point error, i.e. that all calculations are precisely correct. Do not assume that the columns of A are linearly independent. Your procedure should not explicitly use any other procedures. (Of course, it can use the usual operations on matrices and vectors.)
2. Suppose U and V are subspaces of W. What does it mean to say that V is the orthogonal complement of U in W? Give the definition.
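One possible answer to question 1, sketched in plain Python. The course's Mat and Vec classes are not available here, so this assumes A is a list of rows and u, b are lists, with labels replaced by positions. It relies on the fact that u minimizes $\|b - Au\|$ exactly when the residual $b - Au$ is orthogonal to every column of A (the normal equations $A^T A u = A^T b$), which holds whether or not the columns are linearly independent.

```python
def check_least_squares(A, u, b):
    """Return True if u minimizes ||b - A u||^2.

    Assumes A is a list of m rows of n numbers, u has length n, b has length m,
    and arithmetic is exact (ints or fractions.Fraction, not floats).
    Everything is inlined, as the quiz forbids calling other procedures.
    """
    m, n = len(A), len(u)
    # Residual r = b - A u, computed row by row.
    r = [b[i] - sum(A[i][k] * u[k] for k in range(n)) for i in range(m)]
    # u is a least-squares solution iff r is orthogonal to every column of A,
    # i.e. the j-th entry of A^T r is zero for every column j.
    return all(sum(A[i][j] * r[i] for i in range(m)) == 0 for j in range(n))


# Linearly dependent columns (both equal [1, 1]): several u are solutions.
A = [[1, 1], [1, 1]]
b = [2, 4]
print(check_least_squares(A, [3, 0], b))  # True
print(check_least_squares(A, [1, 2], b))  # True
print(check_least_squares(A, [2, 2], b))  # False
```

With dependent columns the minimizer is not unique (here any u with $u_1 + u_2 = 3$ works), which is why the check tests the orthogonality condition rather than comparing against one computed solution.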

The Singular Value Decomposition [11]

Gene Golub’s license plate, photographed by Professor P. M. Kroonenberg of Leiden University.

Frobenius norm for matrices

We have defined a norm for vectors over $\mathbb{R}$:

$$\|[x_1, x_2, \ldots, x_n]\| = \sqrt{x_1^2 + x_2^2 + \cdots + x_n^2}$$

Now we define a norm for matrices: interpret the matrix as a vector.

$$\|A\|_F = \sqrt{\text{sum of squares of elements of } A}$$

This is called the Frobenius norm of a matrix. The squared norm is just the sum of squares of the elements.

Example:

$$\left\| \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \end{bmatrix} \right\|_F^2 = 1^2 + 2^2 + 3^2 + 4^2 + 5^2 + 6^2$$

We can group the terms by rows...

$$\left\| \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \end{bmatrix} \right\|_F^2 = (1^2 + 2^2 + 3^2) + (4^2 + 5^2 + 6^2) = \|[1, 2, 3]\|^2 + \|[4, 5, 6]\|^2$$

... or by columns:

$$\left\| \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \end{bmatrix} \right\|_F^2 = (1^2 + 4^2) + (2^2 + 5^2) + (3^2 + 6^2) = \|[1, 4]\|^2 + \|[2, 5]\|^2 + \|[3, 6]\|^2$$

Proposition: The squared Frobenius norm of a matrix is the sum of the squared norms of its rows...

$$\left\| \begin{bmatrix} a_1 \\ \vdots \\ a_m \end{bmatrix} \right\|_F^2 = \|a_1\|^2 + \cdots + \|a_m\|^2$$

... or of its columns:

$$\left\| \begin{bmatrix} v_1 & \cdots & v_n \end{bmatrix} \right\|_F^2 = \|v_1\|^2 + \cdots + \|v_n\|^2$$
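A quick numerical check of the example and the proposition, as a minimal sketch that assumes a matrix is stored as a plain Python list of rows; `frobenius_norm` is a hypothetical helper name, not a library function.

```python
import math


def frobenius_norm(A):
    """Frobenius norm of a matrix given as a list of rows."""
    return math.sqrt(sum(x * x for row in A for x in row))


A = [[1, 2, 3],
     [4, 5, 6]]

# Squared norm as the sum of squares of all entries: 1^2 + 2^2 + ... + 6^2.
all_entries = sum(x * x for row in A for x in row)
# Grouped by rows: ||[1,2,3]||^2 + ||[4,5,6]||^2.
by_rows = sum(sum(x * x for x in row) for row in A)
# Grouped by columns (zip(*A) yields the columns): ||[1,4]||^2 + ||[2,5]||^2 + ||[3,6]||^2.
by_cols = sum(sum(x * x for x in col) for col in zip(*A))

print(all_entries, by_rows, by_cols)  # 91 91 91
print(frobenius_norm(A))              # sqrt(91), about 9.539
```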

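Low-rank matrices: saving space and saving time

A rank-one matrix can be written as a column vector times a row vector, $u\,v^T$. Storing it this way takes $m + n$ numbers instead of $mn$.

Multiplying it by a vector $w$ can be regrouped so that the matrix is never formed:

$$(u\,v^T)\,w = u\,(v^T w)$$

The right-hand side costs one dot product ($n$ multiplications) plus scaling $u$ ($m$ multiplications), instead of the $mn$ multiplications needed for a full $m \times n$ matrix.

A rank-two matrix can likewise be written as

$$\begin{bmatrix} u_1 & u_2 \end{bmatrix} \begin{bmatrix} v_1^T \\ v_2^T \end{bmatrix}$$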
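The regrouping $(u\,v^T)\,w = u\,(v^T w)$ can be checked directly. This is a minimal sketch with plain Python lists; `outer`, `matvec`, and `rank_one_times` are hypothetical helper names chosen for illustration.

```python
def outer(u, v):
    """Form the full rank-one matrix u v^T as a list of rows (m*n numbers)."""
    return [[ui * vj for vj in v] for ui in u]


def matvec(M, w):
    """Ordinary matrix-vector product: about m*n multiplications."""
    return [sum(mij * wj for mij, wj in zip(row, w)) for row in M]


def rank_one_times(u, v, w):
    """Compute (u v^T) w as u (v^T w): n + m multiplications, matrix never formed."""
    s = sum(vj * wj for vj, wj in zip(v, w))  # the scalar v^T w
    return [ui * s for ui in u]


u = [1, 2, 3]
v = [4, 5]
w = [1, -1]
print(matvec(outer(u, v), w))   # [-1, -2, -3]
print(rank_one_times(u, v, w))  # [-1, -2, -3]
```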

Silly compression

Represent a grayscale $m \times n$ image by an $m \times n$ matrix $A$. (Requires $mn$ numbers to represent.) Find a low-rank matrix $\tilde{A}$ that is as close as possible to $A$. (For rank $r$, requires only $r(m + n)$ numbers to represent.)

[Image: original image (625 × 1024, so about 625k numbers)]

[Image: rank-50 approximation (so about 82k numbers)]
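The storage counts in the captions follow from $mn$ versus $r(m + n)$. A small sketch of that arithmetic; `storage_counts` is a hypothetical helper name.

```python
def storage_counts(m, n, r):
    """Numbers stored for an m x n matrix: dense form vs. rank-r factored form."""
    dense = m * n            # every entry of A
    factored = r * (m + n)   # r column vectors of length m plus r row vectors of length n
    return dense, factored


print(storage_counts(625, 1024, 50))  # (640000, 82450)
```

The exact dense count is 625 × 1024 = 640,000; the caption's "about 625k" reads k as 1024. The rank-50 form needs 50 × 1649 = 82,450 numbers, roughly an eighth of the original.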

The trolley-line-location problem

Given the locations of $m$ houses $a_1, \ldots, a_m$, we must choose where to run a trolley line. The trolley line must go through downtown (the origin) and must be a straight line. The goal is to locate the trolley line so that it is as close as possible to the $m$ houses.

[Figure: houses $a_1, \ldots, a_4$, a candidate line through the origin in direction $v$, and the distance from each house to that line.]

Specify the line by a unit-norm vector $v$: the line is $\mathrm{Span}\{v\}$.

In measuring the objective, how should the individual objectives be combined? As in least squares, we minimize the 2-norm of the vector $[d_1, \ldots, d_m]$, where $d_i$ is the distance from $a_i$ to the line. This is equivalent to minimizing the square of the 2-norm of this vector, i.e. $d_1^2 + \cdots + d_m^2$.
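To make the objective concrete, here is a minimal sketch for houses in the plane. It assumes two-dimensional coordinates and uses the planar formula $|a_x v_y - a_y v_x|$ for the distance from $a$ to $\mathrm{Span}\{v\}$ when $\|v\| = 1$; the function names and house coordinates are hypothetical.

```python
import math


def dist_to_line_2d(a, v):
    """Distance from point a to the line Span{v} in the plane, assuming ||v|| = 1.

    |a_x v_y - a_y v_x| is the length of the component of a orthogonal to v.
    """
    return abs(a[0] * v[1] - a[1] * v[0])


def trolley_objective(houses, v):
    """Sum of squared distances d_1^2 + ... + d_m^2 from the houses to Span{v}."""
    return sum(dist_to_line_2d(a, v) ** 2 for a in houses)


houses = [(1, 2), (3, 4), (5, 6)]  # hypothetical house locations
print(trolley_objective(houses, (1.0, 0.0)))  # 56.0 (the line is the x-axis)
diagonal = (1 / math.sqrt(2), 1 / math.sqrt(2))
print(trolley_objective(houses, diagonal))    # about 1.5
```

The diagonal line is far better here because every house lies at the same small distance from it.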

Solution to the trolley-line-location problem

For each vector $a_i$, write $a_i = a_i^{\parallel v} + a_i^{\perp v}$, where $a_i^{\parallel v}$ is the projection of $a_i$ along $v$ and $a_i^{\perp v}$ is the projection orthogonal to $v$. Since these two parts are orthogonal, the Pythagorean Theorem gives

$$\begin{aligned}
\|a_1^{\perp v}\|^2 &= \|a_1\|^2 - \|a_1^{\parallel v}\|^2 \\
&\;\;\vdots \\
\|a_m^{\perp v}\|^2 &= \|a_m\|^2 - \|a_m^{\parallel v}\|^2
\end{aligned}$$

Since the distance from $a_i$ to $\mathrm{Span}\{v\}$ is $\|a_i^{\perp v}\|$, we have

$$\begin{aligned}
(\text{dist from } a_1 \text{ to } \mathrm{Span}\{v\})^2 &= \|a_1\|^2 - \|a_1^{\parallel v}\|^2 \\
&\;\;\vdots \\
(\text{dist from } a_m \text{ to } \mathrm{Span}\{v\})^2 &= \|a_m\|^2 - \|a_m^{\parallel v}\|^2
\end{aligned}$$

Summing, and letting $A$ be the matrix whose rows are $a_1, \ldots, a_m$ (so that $\|a_1\|^2 + \cdots + \|a_m\|^2 = \|A\|_F^2$ by the proposition on rows):

$$\begin{aligned}
\sum_i (\text{dist from } a_i \text{ to } \mathrm{Span}\{v\})^2 &= \left(\|a_1\|^2 + \cdots + \|a_m\|^2\right) - \left(\|a_1^{\parallel v}\|^2 + \cdots + \|a_m^{\parallel v}\|^2\right) \\
&= \|A\|_F^2 - \left(\langle a_1, v\rangle^2 + \cdots + \langle a_m, v\rangle^2\right)
\end{aligned}$$

using $a_i^{\parallel v} = \langle a_i, v\rangle\, v$ and hence $\|a_i^{\parallel v}\|^2 = \langle a_i, v\rangle^2 \|v\|^2 = \langle a_i, v\rangle^2$ (because $\|v\| = 1$).
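The derivation can be checked numerically: split each $a_i$ into $a_i^{\parallel v}$ and $a_i^{\perp v}$, add up $\|a_i^{\perp v}\|^2$, and compare with $\|A\|_F^2 - \sum_i \langle a_i, v\rangle^2$. A minimal sketch with plain lists; `dot`, `decompose`, the sample rows, and the unit vector $v = [0.6, 0.8]$ are all hypothetical choices.

```python
def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))


def decompose(a, v):
    """Split a into a_par (along unit vector v) and a_perp (orthogonal to v)."""
    c = dot(a, v)                                   # <a, v>, valid because ||v|| = 1
    a_par = [c * vi for vi in v]                    # <a, v> v
    a_perp = [ai - pi for ai, pi in zip(a, a_par)]  # a - a_par
    return a_par, a_perp


A = [[1, 2], [3, 4], [5, 6]]  # rows are the houses a_1, ..., a_m
v = [0.6, 0.8]                # unit vector: 0.36 + 0.64 = 1

# Left side: sum of squared distances, each one ||a_i^perp||^2.
sum_sq_dist = 0.0
for a in A:
    _, a_perp = decompose(a, v)
    sum_sq_dist += dot(a_perp, a_perp)

# Right side: ||A||_F^2 minus the sum of squared dot products <a_i, v>^2.
frob_sq = sum(x * x for row in A for x in row)
rhs = frob_sq - sum(dot(a, v) ** 2 for a in A)

print(sum_sq_dist, rhs)  # both about 0.32 (up to floating-point rounding)
```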

Solution to the trolley-line-location problem, continued

$$\sum_i (\text{dist from } a_i \text{ to } \mathrm{Span}\{v\})^2 = \|A\|_F^2 - \left(\langle a_1, v\rangle^2 + \cdots + \langle a_m, v\rangle^2\right)$$

By the dot-product interpretation of matrix-vector multiplication,

$$\begin{bmatrix} a_1 \\ \vdots \\ a_m \end{bmatrix} v = \begin{bmatrix} \langle a_1, v\rangle \\ \vdots \\ \langle a_m, v\rangle \end{bmatrix} \tag{1}$$

so

$$\|Av\|^2 = \langle a_1, v\rangle^2 + \langle a_2, v\rangle^2 + \cdots + \langle a_m, v\rangle^2.$$

We get

$$\sum_i (\text{distance from } a_i \text{ to } \mathrm{Span}\{v\})^2 = \|A\|_F^2 - \|Av\|^2.$$

Since $\|A\|_F^2$ does not depend on $v$, the best vector $v$ is a unit vector that maximizes $\|Av\|^2$ (equivalently, maximizes $\|Av\|$).
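Equation (1) and the final identity can be seen on two candidate lines. This minimal sketch (hypothetical helper names, plain lists, exact integer arithmetic) computes $Av$ row by row as dot products and shows that the candidate with the larger $\|Av\|^2$ has the smaller total squared distance.

```python
def matvec(A, v):
    """A v by the dot-product interpretation: entry i is <a_i, v>."""
    return [sum(aij * vj for aij, vj in zip(row, v)) for row in A]


def total_sq_dist(A, v):
    """||A||_F^2 - ||A v||^2: sum of squared distances from the rows of A to Span{v}."""
    frob_sq = sum(x * x for row in A for x in row)
    Av = matvec(A, v)
    return frob_sq - sum(x * x for x in Av)


A = [[1, 2], [3, 4], [5, 6]]  # hypothetical houses as rows
for v in ([1, 0], [0, 1]):    # two unit vectors: the x-axis and the y-axis
    Av = matvec(A, v)
    print(v, Av, sum(x * x for x in Av), total_sq_dist(A, v))
# [1, 0] [1, 3, 5] 35 56
# [0, 1] [2, 4, 6] 56 35
```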

```
def trolley_line_location(A):
    v1 = arg max {||A v|| : ||v|| = 1}
    σ1 = ||A v1||
    return v1
```

So far, this is a solution only in principle, since we have not specified how to actually compute $v_1$.

Definition: $\sigma_1$ is the first singular value of $A$, and $v_1$ is the first right singular vector.
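For two-dimensional houses the arg max can at least be approximated by brute force. This is a crude sketch, not how singular vectors are computed in practice: the hypothetical `trolley_line_location_2d` tries unit vectors $v = (\cos t, \sin t)$ on a grid of angles and keeps the one with the largest $\|Av\|$. Unlike the pseudocode, it also returns the corresponding estimate of $\sigma_1$.

```python
import math


def trolley_line_location_2d(A, steps=3600):
    """Approximate v1 = argmax{||A v|| : ||v|| = 1} for a matrix with 2 columns.

    Tries v = (cos t, sin t) for `steps` evenly spaced angles t in [0, pi);
    angles in [pi, 2*pi) are skipped because v and -v give the same line.
    """
    best_v, best_norm = None, -1.0
    for k in range(steps):
        t = math.pi * k / steps
        v = (math.cos(t), math.sin(t))
        # ||A v||, with each entry of A v computed as the dot product <a_i, v>.
        norm = math.sqrt(sum((row[0] * v[0] + row[1] * v[1]) ** 2 for row in A))
        if norm > best_norm:
            best_v, best_norm = v, norm
    return best_v, best_norm  # approximations of v1 and sigma1


v1, sigma1 = trolley_line_location_2d([[1, 0], [2, 0], [3, 0]])
print(v1, sigma1)  # (1.0, 0.0) and sqrt(14), about 3.742
```

The grid only pins $v_1$ down to the angular resolution $\pi/\text{steps}$ and does not extend to higher dimensions, which is why a real method for computing $v_1$ is still needed.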
