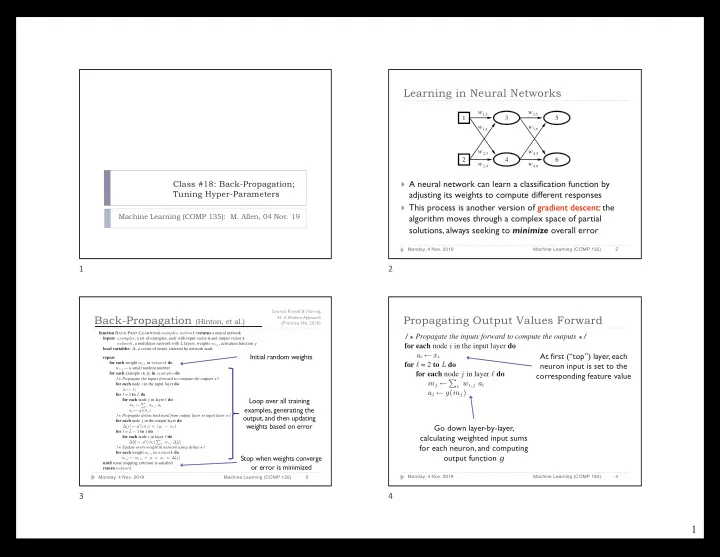

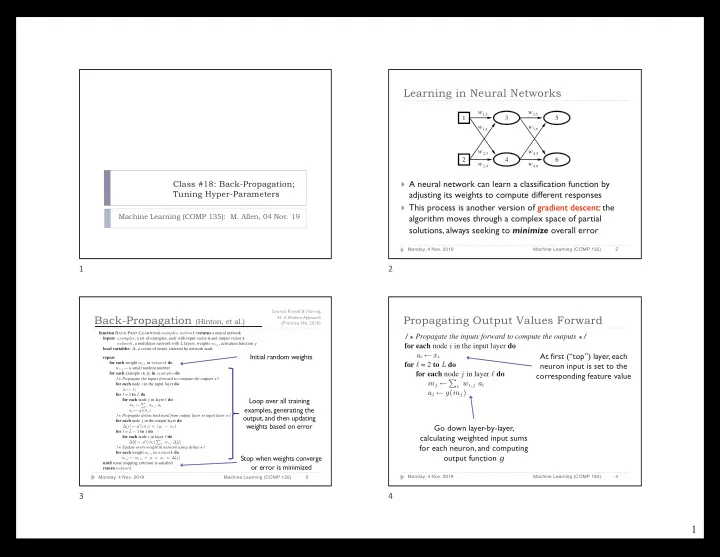

Learning in Neural Networks w 1,3 w 3,5 1 3 5 w w 1,4 3,6 w w 2,3 4,5 2 4 6 w w 2,4 4,6 Class #18: Back-Propagation; } A neural network can learn a classification function by Tuning Hyper-Parameters adjusting its weights to compute different responses } This process is another version of gradient descent: the Machine Learning (COMP 135): M. Allen, 04 Nov. 19 algorithm moves through a complex space of partial solutions, always seeking to minimize overall error 2 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 1 2 Source: Russel & Norvig, Back-Propagation (Hinton, et al.) Propagating Output Values Forward AI: A Modern Approach (Prentice Hal, 2010) for each example x y in do function B ACK -P ROP -L EARNING ( examples , network ) returns a neural network /* Propagate the inputs forward to compute the outputs */ inputs : examples , a set of examples, each with input vector x and output vector y network , a multilayer network with L layers, weights w i,j , activation function g for each node i in the input layer do local variables : ∆ , a vector of errors, indexed by network node At first (“top”) layer, each Initial random weights a i ← x i repeat for each weight w i,j in network do for ℓ = 2 to L do neuron input is set to the w i,j ← a small random number for each node j in layer ℓ do for each example ( x , y ) in examples do corresponding feature value /* Propagate the inputs forward to compute the outputs */ in j ← P i w i,j a i for each node i in the input layer do a i ← x i a j ← g ( in j ) for ℓ = 2 to L do Loop over all training for each node j in layer ℓ do /* Propagate deltas backward from output layer to input laye in j ← P i w i,j a i examples, generating the a j ← g ( in j ) /* Propagate deltas backward from output layer to input layer */ output, and then updating for each node j in the output layer do weights based on error Go down layer-by-layer, ∆ [ j ] ← g ′ ( in j ) × ( y j − a j ) for ℓ = L − 1 to 1 do calculating weighted input sums for each node i in layer ℓ do ∆ [ i ] ← g ′ ( in i ) P j w i,j ∆ [ j ] for each neuron, and computing /* Update every weight in network using deltas */ for each weight w i,j in network do output function g Stop when weights converge w i,j ← w i,j + α × a i × ∆ [ j ] until some stopping criterion is satisfied or error is minimized return network 4 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 3 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 3 4 1

Propagating Error Backward Hyperparameters for Neural Networks } Multi-layer (deep) neural networks involve a number of /* Propagate deltas backward from output layer to input layer */ different possible design choices, each of which can affect for each node j in the output layer do At output (“bottom”) ∆ [ j ] ← g ′ ( in j ) × ( y j − a j ) classifier accuracy: layer, each delta-value is for ℓ = L − 1 to 1 do } Number of hidden layers set to the error on that for each node i in layer ℓ do } Size of each hidden layer neuron, multiplied by the ∆ [ i ] ← g ′ ( in i ) P j w i,j ∆ [ j ] } Activation function employed derivative of function g /* Update every weight in network using deltas */ } Regularization term (controls over-fitting) for each weight w i,j in network do } This is not unique to neural networks w i,j ← w i,j + α × a i × ∆ [ j ] Go bottom-up and set until some stopping criterion is satisfied } Logistic regression: regularization ( C parameter in sklearn ), class delta to derivative value weights, etc. multiplied by sum of } SVM: kernel type, kernel parameters (like polynomial degree), error After all the delta values are computed, deltas at the next layer penalty ( C again), etc. update weights on every node in the down (weighting each network } Question is often how we can tune these model control such value appropriately) parameters effectively to find best combinations 6 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 5 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 5 6 Heldout Cross-Validation Modifying Model Parameters } We can use k -fold cross-validation techniques to estimate the } Using heldout validation techniques, we can begin to real effectiveness of various parameter settings: explore various parts of the hyperparameter-space } In each case, we try to maximize average performance on the Divide labeled data into k folds, each of size 1/ k 1. heldout validation data Repeat k times: 2. } For example: number of layers in a neural network can Hold aside one of the folds; train on the remaining ( k – 1 ); test on a. be explored iteratively, starting with one layer, and the heldout data increasing one at a time (up to some reasonable) limit Record classification error for both training and heldout data b. until over-fitting is detected Average over the k trials 3. } Similarly, we can explore a range of layer sizes , starting } This can give us a more robust estimate of real effectiveness with hidden layers of size equal to the number of input features, and increasing in some logarithmic manner until } It can also allow us to better detect over-fitting: when average heldout error is significantly worse than average training error, over-fitting occurs, or some practical limits reach model has grown too complex or otherwise problematic 8 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 7 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 7 8 2

Using Grid Search for Tuning Costs of Grid Search } One basic technique is to list out the different values of } When we have large numbers of combinations of possible each parameter that we want to test, and systematically parameters, we may decide to limit the range of some of try different combinations of those values the parts of our “grid” for feasibility } For P distinct tuning parameters, defines a P -dimensional space } For example, we might try: (or “grid”), that we can explore, one combination at a time # Hidden layers: 1, 2, …, 10 1. } In many cases, since building, training, and testing the Layer size: N, 2N, 5N, 10N, 20N ( N: # input features) 2. models for each combination all take some time, we may Activation: Sigmoid, ReLU, tanh 3. find that there are far too many such combinations to try Regularization ( alpha ): 10 -5 , 10 -3 , 10 -1 , 10 1 , 10 3 4. } One possibility: many such models can be explored in parallel , } Produces (10 x 5 x 3 x 5) = 750 different models allowing large numbers of combinations to be compared at the } If we are doing 10-fold validation, need to run 7,500 total tests same time, given sufficient resources } Still only a small fragment of the possible parameter-space 10 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 9 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 9 10 From : J. Bergstra & Y. Bengio, “Random search for hyper- Random Search Performance of Random Search parameter optimization,” Journal of Machine Learning Research 13 (2012). } Instead of limiting our grid even further, or trying to spend Grid Layout Random Layout even more time on more combinations, we might try to randomize the process Unimportant parameter Unimportant parameter } Instead of limiting values, we choose randomly from any of a (larger) range of values: # Hidden layers: [1, 20] 1. Layer size: [8, 1024] Important parameter Important parameter 2. Activation: [Sigmoid, ReLU, tanh] 3. e 1: Grid and random search of nine trials for optimizing a function f ( x , y ) = g ( x ) + h ( y ) ≈ g ( x ) with low effective dimensionality. Above each square g ( x ) is shown in green, and Regularization ( alpha ): [ 10 -7 ,10 7 ] 4. left of each square h ( y ) is shown in yellow. With grid search, nine trials only test g ( x ) in three distinct places. With random search, all nine trials explore distinct values of } For each of these, we assign a probability distribution over its g . This failure of grid search is the rule rather than the exception in high dimensional values (uniform or otherwise) hyper-parameter optimization. } We may presume these distributions are independent of one another This technique can sometimes out-perform grid search } When using a grid, it is sometimes possible that we just miss some intermediate, } } For T tests, we sample each of the ranges for one possible and important, value completely value, giving us T different combinations of those values The random approach can often hit upon the better combinations with the same } (or far less) testing involved 12 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 11 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 11 12 3

From : J. Bergstra & Y. Bengio, “Random search for hyper- This Week Performance of Random Search parameter optimization,” Journal of Machine Learning Research 13 (2012). } T opics : Neural Networks } Project 01 : due Monday, 04 November, 4:15 PM mnist rotated 1 . 0 } Can be handed in without penalty untilWed., 06 Nov., 4:15 PM Performance for grid search over 0 . 9 100 different neural network parameter combinations } Homework 04 : due Wednesday, 06 November, 9:00 AM 0 . 8 accuracy 0 . 7 } Office Hours : 237 Halligan, Tuesday, 11:00 AM – 1:00 PM 0 . 6 0 . 5 } TA hours can be found on class website as well 0 . 4 0 . 3 Statistically significant improvement 1 2 4 8 16 32 for as few as 8 randomly chosen experiment size (# trials) combination models 14 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 13 Monday, 4 Nov. 2019 Machine Learning (COMP 135) 13 14 4

Recommend

More recommend