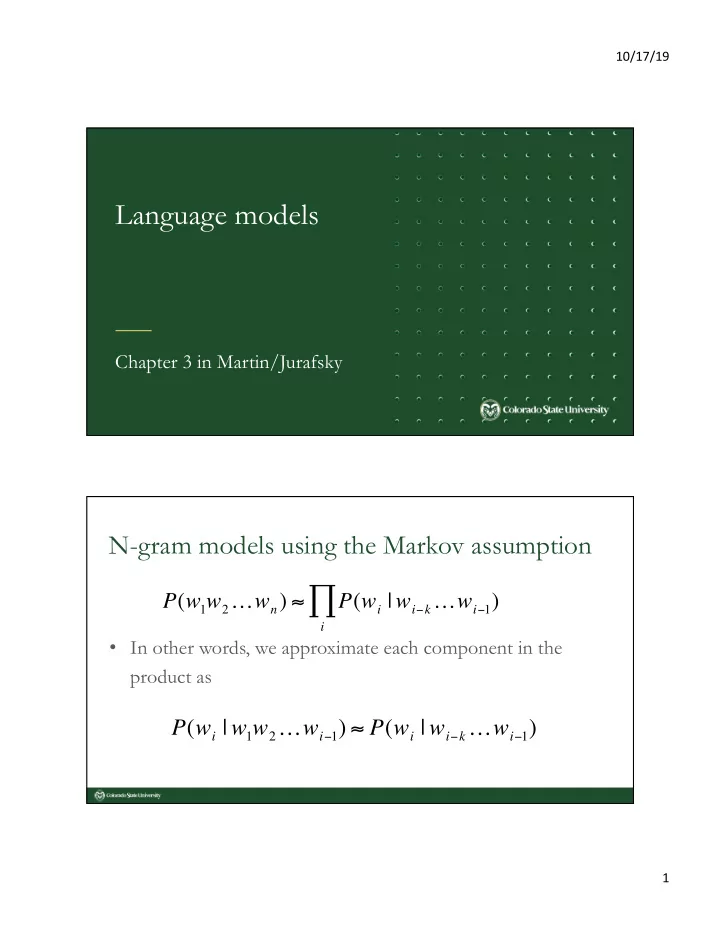

10/17/19

Language models

Chapter 3 in Martin/Jurafsky

N-gram models using the Markov assumption

$$P(w_1 w_2 \dots w_n) \approx \prod_i P(w_i \mid w_{i-k} \dots w_{i-1})$$

• In other words, we approximate each component in the product as

$$P(w_i \mid w_1 w_2 \dots w_{i-1}) \approx P(w_i \mid w_{i-k} \dots w_{i-1})$$
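The Markov approximation above amounts to throwing away all history older than k words before looking up each factor. Below is a minimal Python sketch of that product. `markov_sentence_prob` and `toy_cond_prob` are hypothetical names used only for illustration. The conditional-probability function is passed in as an assumption, because any estimator (such as the MLE on the next slide) could supply it.

```python
def markov_sentence_prob(tokens, cond_prob, k):
    """Approximate P(w_1 ... w_n) as the product of P(w_i | w_{i-k} ... w_{i-1}).

    cond_prob(word, context) is any function returning a conditional probability;
    context is a tuple holding at most the k words before position i.
    """
    total = 1.0
    for i, word in enumerate(tokens):
        context = tuple(tokens[max(0, i - k):i])  # drop everything older than k words
        total *= cond_prob(word, context)
    return total


# Hypothetical toy model: records which contexts it is asked about, returns 0.5.
seen_contexts = []

def toy_cond_prob(word, context):
    seen_contexts.append(context)
    return 0.5

prob = markov_sentence_prob(["a", "b", "c", "d"], toy_cond_prob, k=2)
print(prob)           # 0.0625
print(seen_contexts)  # [(), ('a',), ('a', 'b'), ('b', 'c')]
```

With k = 1 each factor conditions on a single previous word, which is the bigram model used in the rest of these notes.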

Estimating bigram probabilities

• The Maximum Likelihood estimate:

$$P(w_i \mid w_{i-1}) = \frac{c(w_{i-1}, w_i)}{c(w_{i-1})}$$

• c(xy) is the count of the bigram xy, and c(x) is the count of the word x.

Example

<s> I am Sam </s>
<s> Sam I am </s>
<s> I do not like green eggs and ham </s>
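The MLE formula can be checked by counting directly on the three example sentences. This is a minimal sketch with some assumptions. Tokens are split on whitespace, `<s>` and `</s>` are treated as ordinary tokens, and the names `train_bigram_mle` and `p` are illustrative. `Fraction` keeps the ratios exact.

```python
from collections import Counter
from fractions import Fraction

corpus = [
    "<s> I am Sam </s>",
    "<s> Sam I am </s>",
    "<s> I do not like green eggs and ham </s>",
]

def train_bigram_mle(sentences):
    """Return prob(word, prev) = c(prev, word) / c(prev), the MLE bigram estimate."""
    unigram_counts = Counter()
    bigram_counts = Counter()
    for sentence in sentences:
        tokens = sentence.split()
        unigram_counts.update(tokens)
        bigram_counts.update(zip(tokens, tokens[1:]))  # adjacent pairs (w_{i-1}, w_i)

    def prob(word, prev):
        if unigram_counts[prev] == 0:
            raise KeyError(f"unseen context: {prev!r}")
        return Fraction(bigram_counts[(prev, word)], unigram_counts[prev])

    return prob

p = train_bigram_mle(corpus)
print(p("I", "<s>"))   # 2/3
print(p("Sam", "am"))  # 1/2
print(p("do", "I"))    # 1/3
```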

Example: Berkeley Restaurant Project sentences

• can you tell me about any good cantonese restaurants close by
• mid priced thai food is what i’m looking for
• tell me about chez panisse
• can you give me a listing of the kinds of food that are available
• i’m looking for a good place to eat breakfast
• when is caffe venezia open during the day

Raw bigram counts

• Out of 9222 sentences

Raw bigram probabilities

• Normalize by unigrams:
• Result:

Bigram estimates of sentence probabilities

P(<s> I want english food </s>) = P(I|<s>) × P(want|I) × P(english|want) × P(food|english) × P(</s>|food) = .000031
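The sentence probability is just the product of the five bigram factors. This is a minimal sketch with an important assumption about the inputs. Only P(i|<s>) = .25 and P(english|want) = .0011 appear in these notes. The other three values (.33, .5, .68) are recalled from the Berkeley Restaurant tables in Jurafsky & Martin, Chapter 3, because the tables themselves are not reproduced here. `sentence_prob` is a hypothetical helper name.

```python
import math

# ASSUMPTION: P(want|i), P(food|english) and P(</s>|food) are recalled from the
# textbook's Berkeley Restaurant tables, not taken from these notes.
bigram_probs = {
    ("<s>", "i"): 0.25,
    ("i", "want"): 0.33,
    ("want", "english"): 0.0011,
    ("english", "food"): 0.5,
    ("food", "</s>"): 0.68,
}

def sentence_prob(tokens, probs):
    """Multiply P(w_i | w_{i-1}) over every adjacent pair of tokens."""
    return math.prod(probs[(prev, word)] for prev, word in zip(tokens, tokens[1:]))

tokens = "<s> i want english food </s>".split()
p = sentence_prob(tokens, bigram_probs)
print(f"{p:.6f}")  # 0.000031
```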

What is encoded in bigram statistics?

• P(english|want) = .0011
• P(chinese|want) = .0065
• P(to|want) = .66
• P(eat|to) = .28
• P(food|to) = 0
• P(want|spend) = 0
• P(i|<s>) = .25

Practical issue

• Better to do everything in log space
  – Avoid underflow
  – (also adding is faster than multiplying)

$$\log(p_1 \times p_2 \times p_3 \times p_4) = \log p_1 + \log p_2 + \log p_3 + \log p_4$$
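The underflow problem is easy to reproduce. This minimal sketch uses a hypothetical 400-token text in which every conditional probability is 0.01. The numbers are made up purely for illustration. A running product falls below the smallest representable double and becomes 0.0, while the sum of logs stays an ordinary finite number.

```python
import math

# Hypothetical example: 400 tokens, each with conditional probability 0.01.
probs = [0.01] * 400

product = 1.0
for p in probs:
    product *= p  # true value 1e-800 is far below the smallest double (~5e-324)

log_sum = sum(math.log(p) for p in probs)  # natural log; stays representable

print(product)  # 0.0 (underflow)
print(log_sum)  # about -1842.07
```

Comparing two models only needs the log probabilities, so nothing is lost by never exponentiating back.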

Google N-Gram Release, August 2006

…

http://googleresearch.blogspot.com/2006/08/all-our-n-gram-are-belong-to-you.html

https://books.google.com/ngrams

Google N-Gram Release: sample 4-gram counts

• serve as the incoming 92
• serve as the incubator 99
• serve as the independent 794
• serve as the index 223
• serve as the indication 72
• serve as the indicator 120
• serve as the indicators 45
• serve as the indispensable 111
• serve as the indispensible 40
• serve as the individual 234

Evaluation: How good is our model?

• Does our language model prefer good sentences to bad ones?
  – Does it assign higher probability to “real” or “frequently observed” sentences than to “ungrammatical” or “rarely observed” sentences?
• We train the parameters of our model on a training set.
• We test the model’s performance on data we haven’t seen.
  – A test set is an unseen dataset that is different from our training set and totally unused during training.
  – An evaluation metric tells us how well our model does on the test set.

Training on the test set

• Testing on data from the training set will assign it an artificially high probability
• “Training on the test set”
• Bad science!
• And violates the honor code

Extrinsic evaluation of N-gram models

• Best evaluation for comparing models A and B:
  – Put each model in a task (spelling corrector, speech recognizer, MT system)
  – Run the task and get an accuracy for A and for B
    • How many misspelled words are corrected properly
    • How many words are translated correctly
  – Compare the accuracies for A and B

Difficulty of extrinsic evaluation of N-gram models

• Extrinsic evaluation is time-consuming.
• Is there an easier way?
  – Sometimes we use intrinsic evaluation: perplexity

The intuition for Perplexity

• The Shannon Game: how well can we predict the next word?
  – I always order pizza with cheese and ____
  – The 33rd President of the US was ____
  – I saw a ____
  – Unigrams are terrible at this game. (Why?)
• Candidate next words for the first sentence, with their probabilities: mushrooms 0.1, pepperoni 0.1, anchovies 0.01, …, fried rice 0.0001, …, “and” 1e-100
• A better model of a text is one that assigns a higher probability to the word that actually occurs.

Perplexity

The best language model is one that best predicts an unseen test set.

• Gives the highest P(sentence)
• Perplexity is the inverse probability of the test set, normalized by the number of words:

$$PP(W) = P(w_1 w_2 \dots w_N)^{-\frac{1}{N}} = \sqrt[N]{\frac{1}{P(w_1 w_2 \dots w_N)}}$$

• Chain rule:

$$PP(W) = \sqrt[N]{\prod_{i=1}^{N} \frac{1}{P(w_i \mid w_1 \dots w_{i-1})}}$$

• For bigrams:

$$PP(W) = \sqrt[N]{\prod_{i=1}^{N} \frac{1}{P(w_i \mid w_{i-1})}}$$

• Minimizing perplexity is the same as maximizing probability.
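The chain-rule form of perplexity can be computed from the per-word conditional probabilities a model assigns to a test set. Working in log space avoids the underflow discussed earlier. This is a minimal sketch: `perplexity` is a hypothetical helper, and the two probability lists are made-up values for two imaginary models on the same 4-word test set.

```python
import math

def perplexity(cond_probs):
    """Perplexity from the N conditional probabilities a model assigns to a test set.

    cond_probs[i] is P(w_i | w_1 ... w_{i-1}) (for a bigram model, P(w_i | w_{i-1})).
    Computes (prod_i 1 / cond_probs[i]) ** (1/N) in log space to avoid underflow.
    """
    n = len(cond_probs)
    avg_neg_log_prob = -sum(math.log(p) for p in cond_probs) / n
    return math.exp(avg_neg_log_prob)


# Hypothetical per-word probabilities from two models on the same 4-word test set.
model_a = [0.25, 0.5, 0.125, 0.5]  # P(test set) = 1/128
model_b = [0.5, 0.5, 0.25, 0.5]    # P(test set) = 1/32

print(perplexity(model_a))  # 128 ** (1/4), about 3.364
print(perplexity(model_b))  # 32 ** (1/4), about 2.378
```

Model B gives the test set higher probability and therefore has lower perplexity, matching the statement that minimizing perplexity is the same as maximizing probability.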

Intuition on perplexity

• Suppose a sentence consists of random digits.
• What is the perplexity of this sentence according to a model that assigns P = 1/10 to each digit?

$$PP(W) = P(w_1 w_2 \dots w_N)^{-\frac{1}{N}} = \left(\left(\tfrac{1}{10}\right)^{N}\right)^{-\frac{1}{N}} = \left(\tfrac{1}{10}\right)^{-1} = 10$$
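The digit calculation generalizes. Under a model that gives each of V symbols probability 1/V, the perplexity is V for a sequence of any length N. This is why perplexity is often read as an effective branching factor. The minimal check below uses a hypothetical helper, `uniform_perplexity`, and evaluates P(w_1 … w_N)^(-1/N) through logs.

```python
import math

def uniform_perplexity(vocab_size, n_tokens):
    """Perplexity of a length-n sequence when every token has P = 1/vocab_size."""
    log_prob = n_tokens * math.log(1.0 / vocab_size)  # log P(w_1 ... w_N)
    return math.exp(-log_prob / n_tokens)             # P(w_1 ... w_N) ** (-1/N)

for n in (1, 5, 1000):
    print(n, round(uniform_perplexity(10, n), 6))  # 10.0 for every length
```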

Lower perplexity = better model

• Training: 38 million words; test: 1.5 million words (WSJ)

| N-gram order | Unigram | Bigram | Trigram |
|---|---|---|---|
| Perplexity | 962 | 170 | 109 |

Digression: information theory

• I am thinking of an integer between 0 and 1,023. You want to guess it using the fewest questions.
• Most of us would ask “Is it between 0 and 511?”
• This is a good strategy because it provides the most information about the unknown number.
• It provides the first binary digit of the number.
• Initially you need to obtain $\log_2(1024) = 10$ bits of information. After the first question you only need $\log_2(512) = 9$ bits.
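The halving strategy is binary search, and it needs exactly $\log_2(1024) = 10$ yes/no questions for every possible secret. Here is a minimal sketch of that claim. `questions_needed` is a hypothetical helper, and each loop iteration stands for one question of the form "is it between low and mid?".

```python
import math

def questions_needed(secret, low=0, high=1023):
    """Count 'is it between low and mid?' questions until one number remains."""
    questions = 0
    while low < high:
        mid = (low + high) // 2  # first question for 0..1023 is "between 0 and 511?"
        questions += 1
        if secret <= mid:
            high = mid
        else:
            low = mid + 1
    return questions

counts = {questions_needed(n) for n in range(1024)}
print(counts, math.log2(1024))  # {10} 10.0
```

Every answer halves the remaining range exactly, so each question yields one bit.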

Information and Entropy

• By halving the search space we obtained one bit.
• In general, the information associated with a probabilistic outcome of probability p is

$$I(p) = -\log p$$

• Why the logarithm? Assume we have two independent events x and y. We would like the information they carry to be additive. Let’s check:

$$I(x, y) = -\log P(x, y) = -\log \big(P(x) P(y)\big) = -\log P(x) - \log P(y) = I(x) + I(y)$$

• Now we can define the entropy, or average information associated with a random variable X:

$$H(X) = -\sum_{x \in \chi} p(x) \log_2 p(x)$$

• χ is the space the observations belong to (words in the NLP setting).
• Entropy can, in principle, be computed in any base. When the logarithm is in base 2, entropy is measured in bits.
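Both definitions translate directly into code. This minimal sketch works in bits, using hypothetical helper names `information` and `entropy`. It checks that information adds for independent events, and that a uniform distribution over the 1,024 integers from the guessing game has 10 bits of entropy.

```python
import math

def information(p):
    """I(p) = -log2 p: bits of information in an outcome of probability p."""
    return -math.log2(p)

def entropy(probs):
    """H(X) = -sum_x p(x) log2 p(x), in bits; outcomes with p(x) = 0 contribute 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Information of independent events adds up: I(x, y) = I(x) + I(y).
p_x, p_y = 0.5, 0.25
print(information(p_x * p_y), information(p_x) + information(p_y))  # 3.0 3.0

print(entropy([0.5, 0.5]))         # fair coin: 1.0 bit
print(entropy([1 / 1024] * 1024))  # uniform over 0..1023: 10 bits
print(entropy([0.9, 0.1]))         # skewed coin: about 0.469 bits
```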

Entropy

• For a Bernoulli random variable:

$$H(p) = -p \log p - (1-p) \log(1-p)$$

• Entropy of all sequences of length n in a language L:

$$H(w_1, w_2, \dots, w_n) = -\sum_{W_1^n \in L} p(W_1^n) \log p(W_1^n)$$

• Entropy rate (entropy per word):

$$\frac{1}{n} H(w_1, w_2, \dots, w_n) = -\frac{1}{n} \sum_{W_1^n \in L} p(W_1^n) \log p(W_1^n)$$

• What we're interested in:

$$H(L) = \lim_{n \to \infty} \frac{1}{n} H(w_1, w_2, \dots, w_n) = -\lim_{n \to \infty} \frac{1}{n} \sum_{W \in L} p(w_1, \dots, w_n) \log p(w_1, \dots, w_n)$$
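The definitions can be checked by brute force on a toy "language": binary strings whose symbols are drawn i.i.d. from a Bernoulli(p) source. This is an assumption chosen because all 2^n sequences can be enumerated. For such a source the joint entropy is n·H(p), so the entropy rate equals H(p) for every n. Real language is not i.i.d., which is why the limit in H(L) matters. `binary_entropy` and `entropy_rate_iid` are hypothetical helper names.

```python
import itertools
import math

def binary_entropy(p):
    """H(p) = -p log2 p - (1 - p) log2(1 - p), with 0 log 0 taken as 0."""
    return -sum(q * math.log2(q) for q in (p, 1 - p) if q > 0)

def entropy_rate_iid(p, n):
    """(1/n) H(w_1, ..., w_n) for n i.i.d. Bernoulli(p) symbols.

    Sums -p(W) log2 p(W) over all 2**n sequences W of length n, then divides by n.
    """
    total = 0.0
    for seq in itertools.product((0, 1), repeat=n):
        prob = math.prod(p if x == 1 else 1 - p for x in seq)
        if prob > 0:
            total -= prob * math.log2(prob)
    return total / n

print(binary_entropy(0.5))  # 1.0: a fair coin is maximally uncertain
for n in (1, 2, 8):
    print(n, round(entropy_rate_iid(0.1, n), 6), round(binary_entropy(0.1), 6))
```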

Entropy

• Using the Shannon-McMillan-Breiman theorem: under certain conditions (the process must be stationary and ergodic), we have

$$H(L) = \lim_{n \to \infty} -\frac{1}{n} \log p(w_1 w_2 \dots w_n)$$

• That is, instead of summing over all sequences, we can take a single sequence that is long enough.

Entropy

• Therefore we can estimate H(L) from the model P on a sequence of words W as:

$$H(W) = -\frac{1}{N} \log_2 P(w_1 w_2 \dots w_N)$$

• Which gives us:

$$\text{Perplexity}(W) = P(w_1 w_2 \dots w_N)^{-\frac{1}{N}} = 2^{H(W)}$$
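The single-long-sequence estimate and the identity Perplexity(W) = 2^{H(W)} can both be seen numerically. This minimal sketch makes a few assumptions. The "language" is an i.i.d. Bernoulli(0.3) source, which is stationary and ergodic. The model P is taken to match that source exactly. `per_word_log_loss` is a hypothetical helper name. On one sample of 100,000 symbols, the estimate H(W) should land close to the true entropy (about 0.881 bits). The two ways of computing perplexity should agree up to floating-point error.

```python
import math
import random

def per_word_log_loss(seq, p):
    """H(W) = -(1/N) log2 P(w_1 ... w_N) under an i.i.d. Bernoulli(p) model."""
    log_prob = sum(math.log2(p if x == 1 else 1 - p) for x in seq)
    return -log_prob / len(seq)

p = 0.3
true_h = -(p * math.log2(p) + (1 - p) * math.log2(1 - p))  # source entropy, about 0.881 bits

rng = random.Random(0)  # fixed seed so the run is repeatable
seq = [1 if rng.random() < p else 0 for _ in range(100_000)]

h_est = per_word_log_loss(seq, p)  # estimate from ONE long sequence
ppl = 2 ** h_est                   # Perplexity(W) = 2^{H(W)}

# Same perplexity computed directly as P(w_1 ... w_N)^(-1/N), via natural logs.
ppl_direct = math.exp(-sum(math.log(p if x == 1 else 1 - p) for x in seq) / len(seq))

print(round(true_h, 3), round(h_est, 3))
print(round(ppl, 4), round(ppl_direct, 4))
```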
