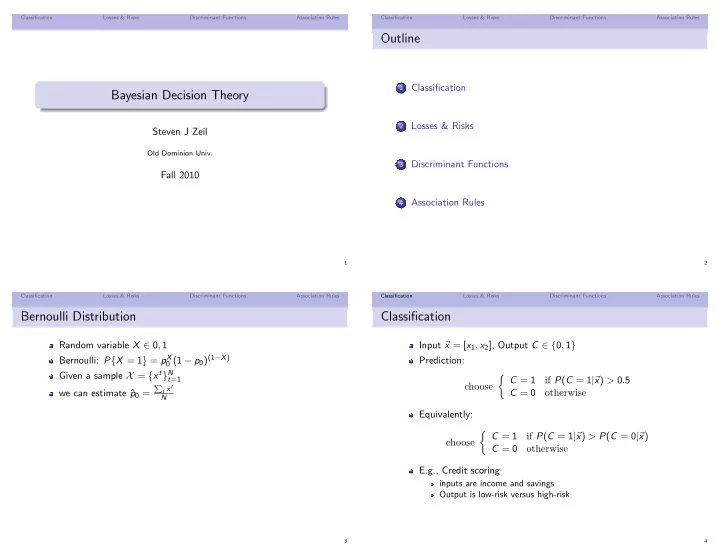

Bayesian Decision Theory

Steven J Zeil, Old Dominion Univ., Fall 2010

Outline

1. Classification
2. Losses & Risks
3. Discriminant Functions
4. Association Rules

Bernoulli Distribution

Random variable $X \in \{0, 1\}$

Bernoulli: $P\{X\} = p_0^{X} (1 - p_0)^{1 - X}$, so that $P\{X = 1\} = p_0$

Given a sample $\mathcal{X} = \{x^t\}_{t=1}^{N}$ we can estimate

$$\hat{p}_0 = \frac{\sum_t x^t}{N}$$

Classification

Input $\vec{x} = [x_1, x_2]$, output $C \in \{0, 1\}$

Prediction: choose $\hat{C} = 1$ if $P(C = 1 \mid \vec{x}) > 0.5$, and $\hat{C} = 0$ otherwise.

Equivalently: choose $\hat{C} = 1$ if $P(C = 1 \mid \vec{x}) > P(C = 0 \mid \vec{x})$, and $\hat{C} = 0$ otherwise.

E.g., credit scoring: the inputs are income and savings; the output is low-risk versus high-risk.
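The Bernoulli estimate and the two-class threshold rule can be sketched in a few lines of Python. This is only an illustration: the function names `estimate_p0` and `predict` and the sample values are assumptions made for the example, not part of the slides.

```python
def estimate_p0(sample):
    """Estimate the Bernoulli parameter as the fraction of ones in the sample."""
    return sum(sample) / len(sample)


def predict(p_c1_given_x):
    """Choose class 1 when P(C=1 | x) exceeds 0.5, otherwise class 0."""
    return 1 if p_c1_given_x > 0.5 else 0


# Hypothetical sample of N = 8 binary observations (five ones, three zeros).
sample = [1, 0, 1, 1, 0, 1, 0, 1]
p0_hat = estimate_p0(sample)   # 5 / 8
print(p0_hat, predict(p0_hat))
```

Because the two posteriors sum to 1, comparing $P(C = 1 \mid \vec{x})$ against 0.5 gives the same answer as comparing it against $P(C = 0 \mid \vec{x})$.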

Bayes' Rule

$$P(C \mid \vec{x}) = \frac{P(C)\, p(\vec{x} \mid C)}{p(\vec{x})}$$

$P(C \mid \vec{x})$: posterior probability. Given that we have learned something ($\vec{x}$), what is the probability that $\vec{x}$ is in class $C$?

$P(C)$: prior probability. What would we expect for the probability of getting something in $C$ if we had no information about the specific case?

$p(\vec{x} \mid C)$: likelihood. If we knew that an item really was in $C$, what is the probability that it would have values $\vec{x}$? In effect, the reverse of what we are trying to find out.

$p(\vec{x})$: evidence. If we ignore the classes, how likely are we to see a value $\vec{x}$?

Bayes' Rule - Multiple Classes

$$P(C_i \mid \vec{x}) = \frac{P(C_i)\, p(\vec{x} \mid C_i)}{p(\vec{x})} = \frac{P(C_i)\, p(\vec{x} \mid C_i)}{\sum_{k=1}^{K} p(\vec{x} \mid C_k)\, P(C_k)}$$

$P(C_i) \ge 0$ and $\sum_{i=1}^{K} P(C_i) = 1$

Choose $C_i$ if $P(C_i \mid \vec{x}) = \max_k P(C_k \mid \vec{x})$

Unequal Risks

In many situations, different actions carry different potential gains and costs.

Actions: $\alpha_i$

Let $\lambda_{ik}$ denote the loss incurred by taking action $\alpha_i$ when the current state is actually $C_k$.

Expected risk of taking action $\alpha_i$:

$$R(\alpha_i \mid \vec{x}) = \sum_{k=1}^{K} \lambda_{ik}\, P(C_k \mid \vec{x})$$

This is simply the expected value of the loss function given that we have chosen $\alpha_i$.

Choose $\alpha_i$ if $R(\alpha_i \mid \vec{x}) = \min_k R(\alpha_k \mid \vec{x})$

Special Case: Equal Risks

Suppose

$$\lambda_{ik} = \begin{cases} 0 & \text{if } i = k \\ 1 & \text{if } i \ne k \end{cases}$$

Expected risk of taking action $\alpha_i$:

$$R(\alpha_i \mid \vec{x}) = \sum_{k=1}^{K} \lambda_{ik}\, P(C_k \mid \vec{x}) = \sum_{k \ne i} P(C_k \mid \vec{x}) = 1 - P(C_i \mid \vec{x})$$

Choose $\alpha_i$ if $R(\alpha_i \mid \vec{x}) = \min_k R(\alpha_k \mid \vec{x})$, which happens when $P(C_i \mid \vec{x})$ is largest.

So if all wrong actions have equal cost, choose the action for the most probable class.
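As a rough sketch of how the posterior and the expected risk fit together, the following Python computes $P(C_k \mid \vec{x})$ from priors and likelihoods and then picks the minimum-risk action. The function names, the priors, the likelihood values, and both loss matrices are hypothetical numbers chosen for illustration.

```python
def posteriors(priors, likelihoods):
    """Bayes' rule: P(C_k | x) = P(C_k) p(x | C_k) / sum_j P(C_j) p(x | C_j)."""
    joint = [p * l for p, l in zip(priors, likelihoods)]
    evidence = sum(joint)
    return [j / evidence for j in joint]


def expected_risks(loss, post):
    """R(alpha_i | x) = sum_k loss[i][k] * P(C_k | x), one value per action."""
    return [sum(l_ik * p_k for l_ik, p_k in zip(row, post)) for row in loss]


def best_action(loss, post):
    """Index of the action with the smallest expected risk."""
    risks = expected_risks(loss, post)
    return min(range(len(risks)), key=risks.__getitem__)


# Hypothetical two-class problem: class 0 = low-risk, class 1 = high-risk.
post = posteriors(priors=[0.8, 0.2], likelihoods=[0.1, 0.6])

zero_one_loss = [[0, 1], [1, 0]]   # equal risks
unequal_loss = [[0, 1], [5, 0]]    # acting as if class 1 when it is class 0 costs 5

print(post)
print(best_action(zero_one_loss, post), best_action(unequal_loss, post))
```

With the 0/1 loss the chosen action is simply the most probable class. With the unequal loss matrix the decision can flip, because one kind of mistake is made five times as expensive as the other.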

Special Case: Indecision

Suppose that making the wrong decision is more expensive than making no decision at all (i.e., falling back to some other procedure).

Introduce a special reject action $\alpha_{K+1}$ that denotes the decision to not select a "real" action.

Cost of a reject is $\lambda$, $0 < \lambda < 1$:

$$\lambda_{ik} = \begin{cases} 0 & \text{if } i = k \\ \lambda & \text{if } i = K + 1 \\ 1 & \text{otherwise} \end{cases}$$

The Risk of Indecision

Risk:

$$R(\alpha_{K+1} \mid \vec{x}) = \sum_{k=1}^{K} \lambda\, P(C_k \mid \vec{x}) = \lambda$$

$$R(\alpha_i \mid \vec{x}) = \sum_{k \ne i} P(C_k \mid \vec{x}) = 1 - P(C_i \mid \vec{x})$$

Choose $\alpha_i$ if $P(C_i \mid \vec{x}) > P(C_k \mid \vec{x})\ \forall k \ne i$ and $P(C_i \mid \vec{x}) > 1 - \lambda$; otherwise reject all actions.

Discriminant Functions

An alternate vision. Instead of searching for the most probable class, we seek a set of functions $g_i(\vec{x})$ that divide the space into $K$ decision regions $R_1, \ldots, R_K$:

$$R_i = \left\{ \vec{x} \;\middle|\; g_i(\vec{x}) = \max_k g_k(\vec{x}) \right\}$$

Why Discriminants?

Discriminants are more general than posteriors because they do not have to lie in the range $0 \ldots 1$, nor correspond to actual probabilities.

This allows us to use them when we have no information about the underlying distribution.

Later techniques will seek discriminant functions directly.
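The reject rule can be sketched as a small Python function. The name `decide_with_reject` and the use of `None` to represent the reject action are assumptions made for this example. Ties between classes are broken by taking the lowest index, which the slides do not specify.

```python
def decide_with_reject(post, reject_cost):
    """Return the index of the most probable class, or None to reject.

    post        -- posterior probabilities P(C_k | x), one per class
    reject_cost -- the cost lambda of rejecting, with 0 < lambda < 1
    """
    best = max(range(len(post)), key=post.__getitem__)  # ties: lowest index wins
    # Accept only if the risk of acting, 1 - P(C_best | x), is below lambda.
    if post[best] > 1 - reject_cost:
        return best
    return None


print(decide_with_reject([0.9, 0.1], reject_cost=0.3))    # confident: class 0
print(decide_with_reject([0.55, 0.45], reject_cost=0.3))  # too uncertain: None
```

A small $\lambda$ makes rejection cheap, so more inputs are rejected. As $\lambda$ approaches 1 the rule reduces to always choosing the most probable class.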

Bayes Classifier as Discriminant Functions

We can form a discriminant function for the Bayes classifier very simply:

$$g_i(\vec{x}) = -R(\alpha_i \mid \vec{x})$$

If we have a constant loss function (every error costs the same, as in the equal-risks case), we can use

$$g_i(\vec{x}) = P(C_i \mid \vec{x}) = \frac{P(C_i)\, p(\vec{x} \mid C_i)}{p(\vec{x})}$$

Bayes Classifier as Discriminant Functions (cont.)

$$g_i(\vec{x}) = \frac{P(C_i)\, p(\vec{x} \mid C_i)}{p(\vec{x})}$$

Because all the $g_i$ above have the same denominator, we could alternatively use

$$g_i(\vec{x}) = P(C_i)\, p(\vec{x} \mid C_i)$$

Association Rules

Suppose that we want to learn an association rule $X \to Y$, e.g., customers who buy $X$ often buy $Y$ as well.

Three common measures: support, confidence, and lift (a.k.a. interest).

$$\text{Support}(X \to Y) \equiv P(X, Y)$$

e.g., (# customers who bought both) / (# customers)

$$\text{Confidence}(X \to Y) \equiv P(Y \mid X) = \frac{P(X, Y)}{P(X)}$$

e.g., (# customers who bought both) / (# customers who bought $X$)

$$\text{Lift}(X \to Y) \equiv \frac{P(X, Y)}{P(X)\, P(Y)} = \frac{P(Y \mid X)}{P(Y)}$$

If $X$ and $Y$ are independent, lift should be 1. Lift > 1 means that having $X$ makes $Y$ more likely. Lift < 1 means that having $X$ makes $Y$ less likely.

Support and confidence are the more commonly used measures. Support and lift are symmetric in $X$ and $Y$; confidence is not.
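The three measures can be estimated from a list of transactions by counting. The sketch below assumes each transaction is a Python `set` of item names; the function names and the tiny basket data are made up for illustration.

```python
def support(transactions, items):
    """Fraction of transactions that contain every item in `items`."""
    items = set(items)
    return sum(items <= t for t in transactions) / len(transactions)


def confidence(transactions, x, y):
    """Estimate P(Y | X) as support(X and Y) / support(X)."""
    return support(transactions, set(x) | set(y)) / support(transactions, x)


def lift(transactions, x, y):
    """Estimate P(Y | X) / P(Y); a value of 1 suggests independence."""
    return confidence(transactions, x, y) / support(transactions, y)


# Hypothetical market baskets.
baskets = [
    {"milk", "bread"},
    {"milk"},
    {"bread"},
    {"milk", "bread", "eggs"},
]

print(support(baskets, {"milk", "bread"}))       # 2 of 4 baskets
print(confidence(baskets, {"milk"}, {"bread"}))  # 2 of the 3 milk baskets
print(lift(baskets, {"milk"}, {"bread"}))
```

In this toy data the lift of milk → bread comes out below 1, so buying milk makes bread slightly less likely than its base rate.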

Generalized Association Rules

Suppose that we want to learn an association rule $\{X_i\} \to Y$.

$$\text{Support}(\{X_i\} \to Y) \equiv P(X_1, X_2, \ldots, Y)$$

$$\text{Confidence}(\{X_i\} \to Y) \equiv P(Y \mid \{X_i\}) = \frac{P(X_1, X_2, \ldots, Y)}{P(X_1, X_2, \ldots)}$$

Agrawal's Apriori Algorithm

Let's say we have a large database of tuples $\{X_i^j\}_j$. We want to find rules with support and confidence above designated thresholds.

1. Start by finding item sets $\{X_i\}$ with high support.
2. Then find an association rule $\{X_i\}_{i \ne k} \to X_k$, using the values in each such tuple, that has high confidence.

Apriori Algorithm - Subsets

Step 1 works with the support of the item set as a whole (including the item that will later become the consequent):

$$\text{Support}(\{X_i\}) \equiv P(X_1, X_2, \ldots)$$

If $\{X_i\}$ has high support, then all subsets of it must have high support. Step 2 then searches for high-confidence rules within each high-support set.

Apriori Algorithm - Finding Support

$$\text{Support}(\{X_i\}) \equiv P(X_1, X_2, \ldots)$$

1. Start with "frequent" (high-support) single items.
2. By induction, given a set of frequent $k$-item sets:
   1. Construct all candidate $(k+1)$-item sets.
   2. Make a pass evaluating the support of these candidates, discarding low-support sets.
3. Continue until no new sets are discovered or until all inputs are gathered into one set.
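The level-wise search for frequent item sets can be sketched as follows. This is a minimal, unoptimized illustration rather than the published implementation: it assumes transactions are Python sets, rescans the whole list for every candidate, and uses the hypothetical name `frequent_itemsets`.

```python
from itertools import combinations


def frequent_itemsets(transactions, min_support):
    """Return {itemset: support} for every item set meeting min_support."""
    n = len(transactions)

    def supp(itemset):
        return sum(itemset <= t for t in transactions) / n

    # Level 1: frequent single items.
    items = {item for t in transactions for item in t}
    current = {frozenset([i]) for i in items if supp(frozenset([i])) >= min_support}

    frequent = {}
    k = 1
    while current:
        for s in current:
            frequent[s] = supp(s)
        # Candidates: unions of two frequent k-item sets that have k + 1 items.
        candidates = {a | b for a in current for b in current if len(a | b) == k + 1}
        # Subset property: every k-item subset of a candidate must be frequent.
        candidates = {
            c for c in candidates
            if all(frozenset(sub) in current for sub in combinations(c, k))
        }
        # One pass over the data to discard low-support candidates.
        current = {c for c in candidates if supp(c) >= min_support}
        k += 1
    return frequent


baskets = [{"milk", "bread"}, {"milk", "bread", "eggs"}, {"milk", "eggs"}, {"bread"}]
for itemset, s in frequent_itemsets(baskets, min_support=0.5).items():
    print(sorted(itemset), s)
```

The pruning step is where the subset property pays off: a three-item candidate is dropped without counting as soon as one of its two-item subsets is known to be infrequent.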

Apriori Algorithm - Finding Confidence

$$\text{Confidence}(\{X_i\} \to Y) = \frac{P(X_1, X_2, \ldots, Y)}{P(X_1, X_2, \ldots)}$$

Given a $k$-item set with high support:

1. Try each possible single-consequent rule:
   1. Move a term from the antecedent into the consequent.
   2. Evaluate confidence and discard if low.
2. By induction, given a set of rules with $j$ consequents:
   1. Construct all candidates with $j + 1$ consequents.
   2. Discard those with low confidence.
3. Repeat until no higher-consequent rules are found.
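The confidence phase can be sketched in the same style. The code below assumes the item set passed in is already known to be frequent (so its support is nonzero), that transactions are Python sets, and that consequents are grown only from rules that survived the previous round. The names `support` and `rules_from_itemset` are illustrative.

```python
def support(transactions, items):
    """Fraction of transactions that contain every item in `items`."""
    return sum(items <= t for t in transactions) / len(transactions)


def rules_from_itemset(transactions, itemset, min_confidence):
    """Return (antecedent, consequent, confidence) rules drawn from one item set."""
    itemset = frozenset(itemset)
    full_support = support(transactions, itemset)
    rules = []
    # Round 1: every single-item consequent.
    consequents = [frozenset([i]) for i in itemset]
    while consequents:
        size = len(consequents[0])
        kept = []
        for cons in consequents:
            antecedent = itemset - cons
            if not antecedent:
                continue  # a rule needs a non-empty antecedent
            conf = full_support / support(transactions, antecedent)
            if conf >= min_confidence:
                rules.append((antecedent, cons, conf))
                kept.append(cons)
        # Next round: merge surviving consequents into ones with one more item.
        consequents = list({a | b for a in kept for b in kept if len(a | b) == size + 1})
    return rules


baskets = [{"milk", "bread"}, {"milk", "bread", "eggs"}, {"milk", "eggs"}, {"bread"}]
for antecedent, cons, conf in rules_from_itemset(baskets, {"milk", "eggs"}, 0.9):
    print(sorted(antecedent), "->", sorted(cons), conf)
```

Growing consequents only from surviving rules is safe because moving another item into the consequent shrinks the antecedent, which can only raise the antecedent's support and therefore lower the confidence.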
