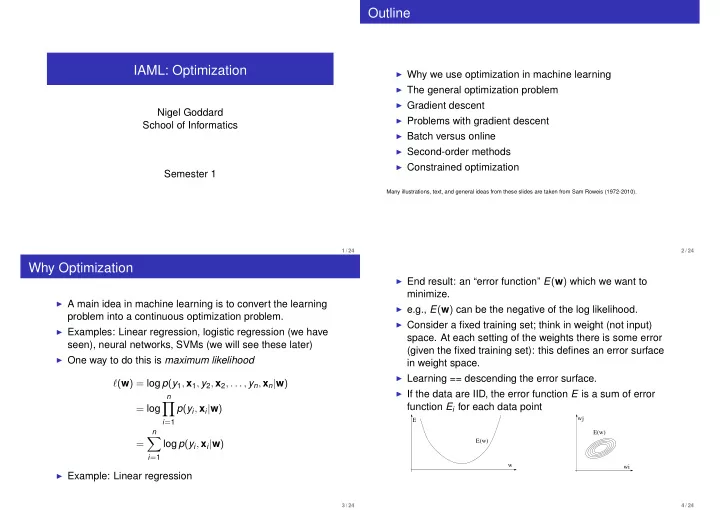

Outline IAML: Optimization ◮ Why we use optimization in machine learning ◮ The general optimization problem ◮ Gradient descent Nigel Goddard ◮ Problems with gradient descent School of Informatics ◮ Batch versus online ◮ Second-order methods ◮ Constrained optimization Semester 1 Many illustrations, text, and general ideas from these slides are taken from Sam Roweis (1972-2010). 1 / 24 2 / 24 Why Optimization ◮ End result: an “error function” E ( w ) which we want to minimize. ◮ A main idea in machine learning is to convert the learning ◮ e.g., E ( w ) can be the negative of the log likelihood. problem into a continuous optimization problem. ◮ Consider a fixed training set; think in weight (not input) ◮ Examples: Linear regression, logistic regression (we have space. At each setting of the weights there is some error seen), neural networks, SVMs (we will see these later) (given the fixed training set): this defines an error surface ◮ One way to do this is maximum likelihood in weight space. ◮ Learning == descending the error surface. ℓ ( w ) = log p ( y 1 , x 1 , y 2 , x 2 , . . . , y n , x n | w ) ◮ If the data are IID, the error function E is a sum of error n � function E i for each data point = log p ( y i , x i | w ) wj E i = 1 n E(w) � E(w) = log p ( y i , x i | w ) i = 1 w wi ◮ Example: Linear regression 3 / 24 4 / 24

Role of Smoothness Role of Derivatives If E completely unconstrained, minimization is impossible. ◮ If we wiggle w k and keep everything else the same, does the error get better or worse? ∂ E ◮ Calculus has an answer to exactly this question: ∂ w k ◮ So: use a differentiable cost function E and compute partial derivatives of each parameter E(w) ◮ The vector of partial derivatives is called the gradient of the error. It is written ∇ E = ( ∂ E ∂ w 1 , ∂ E ∂ w 2 , . . . , ∂ E ∂ w n ) . Alternate notation ∂ E ∂ w . ◮ It points in the direction of steepest error descent in weight space. w ◮ Three crucial questions: All we could do is search through all possible values w . ◮ How do we compute the gradient ∇ E efficiently? ◮ Once we have the gradient, how do we minimize the error? ◮ Where will we end up in weight space? Key idea: If E is continuous, then measuring E ( w ) gives information about E at many nearby values. 5 / 24 6 / 24 Numerical Optimization Algorithms Optimization Algorithm Cartoon ◮ Basically, numerical optimization algorithms are iterative. They generate a sequence of points ◮ Numerical optimization algorithms try to solve the w 0 , w 1 , w 2 , . . . general problem E ( w 0 ) , E ( w 1 ) , E ( w 2 ) , . . . min w E ( w ) ∇ E ( w 0 ) , ∇ E ( w 1 ) , ∇ E ( w 2 ) , . . . ◮ Most commonly, a numerical optimization procedure takes ◮ Basic optimization algorithm is two inputs: ◮ A procedure that computes E ( w ) initialize w ◮ A procedure that computes the partial derivative ∂ E ∂ w j while E ( w ) is unacceptably high ◮ (Aside: Some use less information, i.e., they don’t use calculate g = ∇ E gradients. Some use more information, i.e., higher order Compute direction d from w , E ( w ) , g derivative. We won’t go into these algorithms in the (can use previous gradients as well...) course.) w ← w − η d end while return w 7 / 24 8 / 24

A Choice of Direction Gradient Descent ◮ Simple gradient descent algorithm: ◮ The simplest choice d is the current gradient ∇ E . ◮ It is locally the steepest descent direction. initialize w while E ( w ) is unacceptably high ◮ (Technically, the reason for this choice is Taylor’s theorem calculate g ← ∂ E from calculus.) ∂ w w ← w − η g end while return w ◮ η is known as the step size (sometimes called learning rate ) ◮ We must choose η > 0. ◮ η too small → too slow ◮ η too large → instability 9 / 24 10 / 24 Effect of Step Size Effect of Step Size ◮ Take η = 1 . 1. Not so good. If you Goal: Minimize Goal: Minimize step too far, you can leap over the E ( w ) = w 2 E ( w ) = w 2 ◮ Take η = 0 . 1. Works well. region that contains the minimum 8 8 w 0 = 1 . 0 w 0 = 1 . 0 6 6 w 1 = w 0 − 1 . 1 · 2 w 0 = − 1 . 2 w 1 = w 0 − 0 . 1 · 2 w 0 = 0 . 8 E(w) E(w) 4 w 2 = w 1 − 1 . 1 · 2 w 1 = 1 . 44 w 2 = w 1 − 0 . 1 · 2 w 1 = 0 . 64 4 w 3 = w 2 − 1 . 1 · 2 w 2 = − 1 . 72 w 3 = w 2 − 0 . 1 · 2 w 2 = 0 . 512 2 2 · · · · · · 0 0 w 25 = 79 . 50 w 25 = 0 . 0047 −3 −2 −1 0 1 2 3 −3 −2 −1 0 1 2 3 w ◮ Finally, take η = 0 . 000001. What w happens here? 11 / 24 12 / 24

“Bold Driver” Gradient Descent Batch vs online ◮ So far all the objective function we have seen look like: ◮ Simple heuristic for choosing η which you can use if you’re n � E ( w ; D ) = E i ( w ; y i , x i ) . desperate. i = 1 initialize w , η D = { ( x 1 , y 1 ) , ( x 2 , y 2 ) , . . . ( x n , y n ) } is the training set. initialize e ← E ( w ) ; g ← ∇ E ( w ) while η > 0 ◮ Each term sum depends on only one training instance w 1 ← w − η g ◮ Example: Logistic regression: E i ( w ; y i , x i ) = log p ( y i | x i , w ) . e 1 = E ( w 1 ) ; g 1 = ∇ E ◮ The gradient in this case is always if e 1 ≥ e n ∂ E ∂ E i η = η/ 2 � ∂ w = else ∂ w i = 1 η = 1 . 01 η ; w ← w 1 ; g ← g 1 ; e = e 1 ◮ The algorithm on slide 10 scans all the training instances end while before changing the parameters. return w ◮ Seems dumb if we have millions of training instances. ◮ Finds a local minimum of E . Surely we can get a gradient that is “good enough” from fewer instances, e.g., a couple of thousand? Or maybe even from just one? 13 / 24 14 / 24 Batch vs online Algorithms for Batch Gradient Descent ◮ Batch learning: use all patterns in training set, and update weights after calculating ◮ Here is batch gradient descent. ∂ E ∂ E i initialize w � ∂ w = ∂ w while E ( w ) is unacceptably high i calculate g ← � N ∂ E i i = 1 ∂ w ◮ On-line learning: adapt weights after each pattern w ← w − η g presentation, using ∂ E i end while ∂ w ◮ Batch more powerful optimization methods return w ◮ Batch easier to analyze ◮ This is just the algorithm we have seen before. We have just “substituted in” the fact that E = � N i = 1 E i . ◮ On-line more feasible for huge or continually growing datasets ◮ On-line may have ability to jump over local optima 15 / 24 16 / 24

Algorithms for Online Gradient Descent Problems With Gradient Descent ◮ Here is (a particular type of) online gradient descent algorithm initialize w while E ( w ) is unacceptably high ◮ Setting the step size η Pick j as uniform random integer in 1 . . . N ◮ Shallow valleys calculate g ← ∂ E j ◮ Highly curved error surfaces ∂ w w ← w − η g ◮ Local minima end while return w ◮ This version is also called “stochastic gradient ascent” because we have picked the training instance randomly. ◮ There are other variants of online gradient descent. 17 / 24 18 / 24 Shallow Valleys Curved Error Surfaces ◮ Typical gradient descent can be fooled in several ways, which is why more sophisticated methods are used when ◮ A second problem with gradient descent is that the possible. One problem: quickly down the valley walls but very slowly along the valley bottom. gradient might not point towards the optimum. This is because of curvature directly at the nearest local minimum. dE dw dE dW ◮ Gradient descent goes very slowly once it hits the shallow ◮ Note: gradient is the locally steepest direction. Need not valley. directly point toward local optimum. ◮ One hack to deal with this is momentum ◮ Local curvature is measured by the Hessian matrix: H ij = ∂ 2 E /∂ w i w j . d t = β d t − 1 + ( 1 − β ) η ∇ E ( w t ) ◮ Now you have to set both η and β . Can be difficult and irritating. 19 / 24 20 / 24

Recommend

More recommend