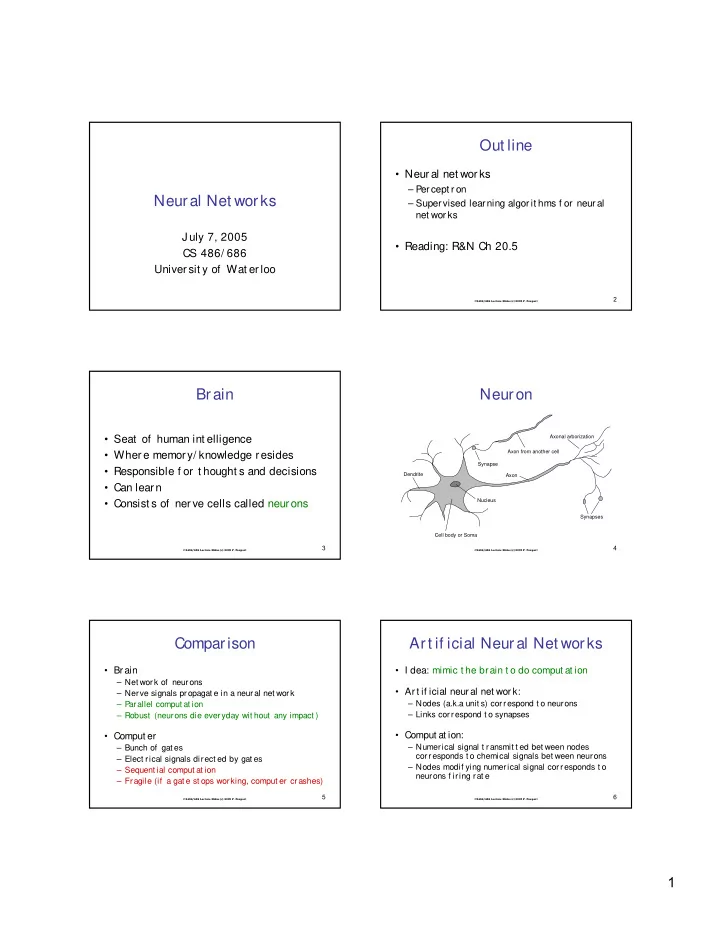

Out line • Neural net wor ks – Percept r on Neural Net works – Supervised learning algorit hms f or neur al net works J uly 7, 2005 • Reading: R&N Ch 20.5 CS 486/ 686 Univer sit y of Wat erloo 2 CS486/686 Lecture Slides (c) 2005 P. Poupart Brain Neuron • Seat of human int elligence Axonal arborization Axon from another cell • Where memory/ knowledge resides Synapse • Responsible f or t hought s and decisions Dendrite Axon • Can learn Nucleus • Consist s of ner ve cells called neurons Synapses Cell body or Soma 3 4 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart Comparison Art if icial Neural Net works • Brain • I dea: mimic t he brain t o do comput at ion – Net work of neurons • Art if icial neural net work: – Nerve signals propagat e in a neural net work – Parallel comput at ion – Nodes (a.k.a unit s) correspond t o neurons – Links correspond t o synapses – Robust (neurons die everyday wit hout any impact ) • Comput at ion: • Comput er – Numerical signal t ransmit t ed bet ween nodes – Bunch of gat es corresponds t o chemical signals bet ween neurons – Elect rical signals direct ed by gat es – Nodes modif ying numerical signal cor responds t o – Sequent ial comput at ion neurons f iring rat e – Fragile (if a gat e st ops working, comput er crashes) 5 6 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart 1

ANN Unit ANN Unit • For each unit i: a 0 = −1 Bias Weight a = g(in ) i i W 0,i • Weight s: W j i g – St rengt h of t he link f rom unit j t o unit i in i Σ W j,i a – I nput signals a j weight ed by W j i and linearly a j i combined: in i = Σ j W j i a j • Act ivat ion f unct ion: g Input Input Activation Output Output Links Function Function Links – Numerical signal pr oduced: a i = g(in i ) 7 8 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart Act ivat ion Funct ion Common Act ivat ion Funct ions • Should be nonlinear Thr eshold Sigmoid – Ot herwise net work is j ust a linear f unct ion g ( in i ) g ( in i ) • Of t en chosen t o mimic f ir ing in neurons +1 +1 – Unit should be “act ive” (out put near 1) when f ed wit h t he “right ” input s in i in i – Unit should be “inact ive” (out put near 0) (a) (b) when f ed wit h t he “wrong” input s g(x) = 1/ (1+e -x ) 9 10 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart Logic Gat es Net work St ruct ures • McCulloch and Pit t s (1943) • Feed-f orwar d net work – Design ANNs t o represent Boolean f ns – Direct ed acyclic gr aph – No int ernal st at e • What should be t he weight s of t he f ollowing unit s t o code AND, OR, NOT ? – Simply comput es out put s f rom input s • Recurrent net work -1 -1 -1 – Direct ed cyclic graph a 1 a 1 thresh thresh thresh a 1 – Dynamical syst em wit h int ernal st at es a 2 a 2 – Can memorize inf ormat ion 11 12 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart 2

Feed-f orward net work Percept ron • Simple net work wit h t wo input s, one • Single layer f eed-f orwar d net wor k hidden layer of t wo unit s, one out put unit W 1,3 1 3 W 3,5 W 1,4 5 W W 2,3 4,5 2 4 W 2,4 Input Output W j,i a 5 = g(W 3,5 a 3 + W 4,5 a 4 ) Units Units = g(W 3,5 g(W 1,3 a 1 + W 2,3 a 2 ) + W 4,5 g(W 1,4 a 1 + W 2,4 a 2 )) 13 14 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart Supervised Learning Threshold Percept ron Lear ning • Learning is done separat ely f or each unit • Given list of < input ,out put > pair s – Since unit s do not share weight s • Train f eed-f orwar d ANN • Percept r on lear ning f or unit i: – To comput e proper out put s when f ed wit h – For each < input s,out put > pair do: input s • Case 1: correct out put produced – Consist s of adj ust ing weight s W j i – ∀ j W j i � W j i • Case 2: out put produced is 0 inst ead of 1 – ∀ j W j i � W j i + a j • Simple lear ning algorit hm f or t hreshold • Case 3: out put produced is 1 inst ead of 0 percept rons – ∀ j W j i � W j i – a j – Unt il correct out put f or all t raining inst ances 15 16 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart Thr eshold Per cept r on Threshold Percept r on Lear ning Hypot hesis Space • Dot product s: a ● a ≥ 0 and -a ● a ≤ 0 • Hypot hesis space h W : – All binary classif icat ions wit h param. W s.t . • Percept ron comput es • a ● W > 0 � 1 – 1 when a ● W = Σ j a j W j i > 0 • a ● W < 0 � 0 – 0 when a ● W = Σ j a j W j i < 0 • I f out put should be 1 inst ead of 0 t hen • Since a ● W is linear in W, percept ron is – W � W+a since a ● (W+a) ≥ a ● W called a linear separ at or • I f out put should be 0 inst ead of 1 t hen – W � W-a since a ● (W-a) ≤ a ● W 17 18 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart 3

Thr eshold Per cept r on Sigmoid Percept ron Hypot hesis Space • Ar e all Boolean gat es linear ly separ able? • Represent “sof t ” linear separat or s I I I 1 1 1 1 1 1 ? 0 0 0 I I I 0 1 0 1 0 1 2 2 2 I I I I I xor I (a) and (b) or (c) 1 2 1 2 1 2 19 20 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart Sigmoid Percept ron Learning Percept ron Error Gradient • E = 0.5 Err 2 = 0.5 (y – h W ( x )) 2 • Formulat e lear ning as an opt imizat ion search in weight space • ∂ E/ ∂ W j = Err × ∂ Err/ ∂ W j – Since g dif f erent iable, use gradient descent = Err × ∂ (y – g( Σ j W j x j )) = -Err × g’( Σ j W j x j ) × x j • Minimize squared error: – E = 0.5 Er r 2 = 0.5 (y – h W ( x )) 2 • When g is sigmoid f n, t hen g’ = g(1-g) • x : input • y: t arget out put • h W ( x ): comput ed out put 21 22 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart Mult ilayer Feed-f or war d Percept ron Learning Algorit hm Neur al Net works • Percept r on-Learning(examples,net work) • Percept ron can only represent (sof t ) – Repeat linear separ at or s • For each e in examples do – Because single layer – in � Σ j W j x j [e] – Err � y[e] – g(in) – W j � W j + α × Err × g’(in) × x j [e] • Wit h mult iple layer s, what f ns can be – Unt il some st opping crit eria sat isf ied represent ed? – Ret urn learnt net work – Virt ually any f unct ion! • N.B. α is a lear ning r at e cor responding t o t he st ep size in gradient descent 23 24 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart 4

Mult ilayer Net works Mult ilayer Net works • Adding t wo sigmoid unit s wit h parallel • Adding t wo int er sect ing ridges (and but opposit e “clif f s” produces a ridge t hresholding) produces a bump Network output Network output 0.9 1 0.8 0.9 0.8 0.7 0.7 0.6 0.6 0.5 0.5 0.4 0.4 0.3 0.3 0.2 0.2 0.1 0.1 0 0 4 4 2 2 -4 -2 0 -4 -2 0 -4 -4 x2 x2 -2 -2 0 0 2 2 x1 x1 4 4 25 26 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart Mult ilayer Net works Neural Net Applicat ions • By t iling bumps of various height s t o- • Neural net s can approximat e any get her, we can approximat e any f unct ion f unct ion, hence 1000’s of applicat ions – NETt alk f or pronouncing English t ext • Training algorit hm: – Charact er recognit ion – Back-propagat ion – Paint -qualit y inspect ion – Essent ially gradient perf ormed by – Vision-based aut onomous driving propagat ing err ors backward int o t he – Et c. net work – See t ext book f or derivat ion 27 28 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart Next Class Neural Net Drawbacks • Common problems: • Next Class: •Ensemble lear ning – How should we int erpret unit s? •Russell and Norvig Sect . 18.4 – How many layers and unit s should a net work have? – How t o avoid local opt imum while t raining wit h gradient descent ? 29 30 CS486/686 Lecture Slides (c) 2005 P. Poupart CS486/686 Lecture Slides (c) 2005 P. Poupart 5

Recommend

More recommend