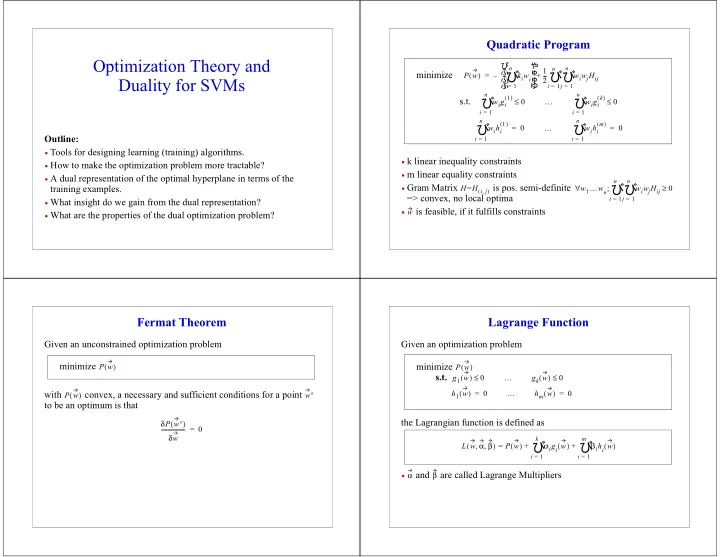

**Optimization Theory and Duality for SVMs**

Outline:

- Tools for designing learning (training) algorithms.
- How to make the optimization problem more tractable?
- A dual representation of the optimal hyperplane in terms of the training examples.
- What insight do we gain from the dual representation?
- What are the properties of the dual optimization problem?

**Quadratic Program**

$$
\begin{aligned}
\text{minimize}\quad & P(w) = -\sum_{i=1}^{n} k_i w_i + \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} w_i w_j H_{ij}\\
\text{s.t.}\quad & \sum_{i=1}^{n} w_i g_i^{(1)} \le 0, \;\dots,\; \sum_{i=1}^{n} w_i g_i^{(k)} \le 0\\
& \sum_{i=1}^{n} w_i h_i^{(1)} = 0, \;\dots,\; \sum_{i=1}^{n} w_i h_i^{(m)} = 0
\end{aligned}
$$

- $k$ linear inequality constraints
- $m$ linear equality constraints
- The Gram matrix $H$ is positive semi-definite: $\sum_{i=1}^{n} \sum_{j=1}^{n} w_i w_j H_{ij} \ge 0$ for all $(w_1, \dots, w_n)$. Hence $P$ is convex, and every local optimum is a global optimum.
- $w$ is feasible if it fulfills all constraints.

**Fermat Theorem**

Given an unconstrained optimization problem

$$\text{minimize}\quad P(w)$$

with $P(w)$ convex and differentiable, a necessary and sufficient condition for a point $w^\circ$ to be an optimum is that

$$\frac{\partial P(w^\circ)}{\partial w} = 0.$$

**Lagrange Function**

Given an optimization problem

$$
\begin{aligned}
\text{minimize}\quad & P(w)\\
\text{s.t.}\quad & g_1(w) \le 0, \dots, g_k(w) \le 0\\
& h_1(w) = 0, \dots, h_m(w) = 0
\end{aligned}
$$

the Lagrangian function is defined as

$$L(w, \alpha, \beta) = P(w) + \sum_{i=1}^{k} \alpha_i g_i(w) + \sum_{i=1}^{m} \beta_i h_i(w).$$

$\alpha$ and $\beta$ are called Lagrange multipliers.
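A minimal sketch in plain Python (standard library only; the toy data and all helper names such as `gram_matrix`, `quadratic_form` and `lagrangian` are hypothetical, not from any library) of two facts from the slides above: why a Gram matrix $H_{ij} = x_i \cdot x_j$ is positive semi-definite (its quadratic form equals $\|\sum_i w_i x_i\|^2 \ge 0$), and how the Lagrangian $L(w, \alpha, \beta)$ adds the multiplier-weighted constraint functions to the objective.

```python
from __future__ import annotations

from typing import Callable, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    """Inner product u . v."""
    return sum(a * b for a, b in zip(u, v))


def gram_matrix(xs: Sequence[Vector]) -> list[list[float]]:
    """Gram matrix H with H[i][j] = x_i . x_j."""
    return [[dot(xi, xj) for xj in xs] for xi in xs]


def quadratic_form(w: Vector, H: Sequence[Vector]) -> float:
    """sum_i sum_j w_i w_j H_ij (the quadratic term of the QP, without the 1/2)."""
    n = len(w)
    return sum(w[i] * w[j] * H[i][j] for i in range(n) for j in range(n))


def norm_sq_of_combination(w: Vector, xs: Sequence[Vector]) -> float:
    """||sum_i w_i x_i||^2, which equals quadratic_form(w, gram_matrix(xs)) >= 0."""
    dim = len(xs[0])
    v = [sum(wi * xi[k] for wi, xi in zip(w, xs)) for k in range(dim)]
    return dot(v, v)


def lagrangian(
    P: Callable[[Vector], float],
    gs: Sequence[Callable[[Vector], float]],
    hs: Sequence[Callable[[Vector], float]],
) -> Callable[[Vector, Vector, Vector], float]:
    """Return L(w, alpha, beta) = P(w) + sum_i alpha_i g_i(w) + sum_i beta_i h_i(w)."""

    def L(w: Vector, alpha: Vector, beta: Vector) -> float:
        inequality_part = sum(a * g(w) for a, g in zip(alpha, gs))
        equality_part = sum(b * h(w) for b, h in zip(beta, hs))
        return P(w) + inequality_part + equality_part

    return L


# Hypothetical toy data: three 2-D examples and an arbitrary coefficient vector.
xs = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]
w = [0.5, -2.0, 1.0]
print(quadratic_form(w, gram_matrix(xs)), norm_sq_of_combination(w, xs))  # 28.25 28.25

# Hypothetical toy problem: minimize w0^2 + w1^2 s.t. 1 - w0 <= 0 and w0 - w1 = 0.
L = lagrangian(
    P=lambda v: v[0] ** 2 + v[1] ** 2,
    gs=[lambda v: 1.0 - v[0]],
    hs=[lambda v: v[0] - v[1]],
)
print(L([2.0, 1.0], [3.0], [0.5]))  # 5 - 3 + 0.5 = 2.5
```

The identity checked in the first `print` holds for any choice of `w` and `xs`, which is exactly why the quadratic form can never be negative.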

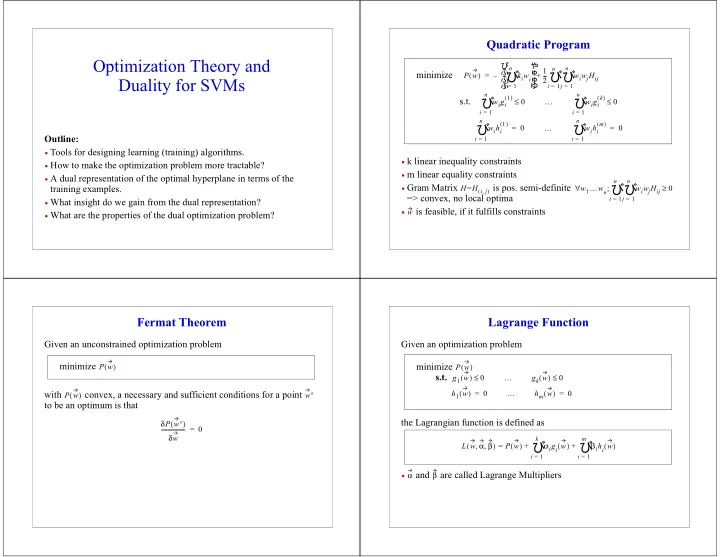

**Lagrange Theorem**

Given an optimization problem

$$
\begin{aligned}
\text{minimize}\quad & P(w)\\
\text{s.t.}\quad & h_1(w) = 0, \dots, h_m(w) = 0
\end{aligned}
$$

with $P(w)$ convex and all $h_i$ affine (e.g. of the form $w \cdot x + b$), necessary and sufficient conditions for a point $w^\circ$ to be an optimum are the existence of $\beta^\circ$ such that

$$
\frac{\partial L(w^\circ, \beta^\circ)}{\partial w} = 0, \qquad
\frac{\partial L(w^\circ, \beta^\circ)}{\partial \beta} = 0,
\qquad\text{where}\quad L(w, \beta) = P(w) + \sum_{i=1}^{m} \beta_i h_i(w).
$$

$$\Longrightarrow\quad L(w^\circ, \beta) \le L(w^\circ, \beta^\circ) \le L(w, \beta^\circ)$$

**Karush-Kuhn-Tucker Theorem**

Given an optimization problem

$$
\begin{aligned}
\text{minimize}\quad & P(w)\\
\text{s.t.}\quad & g_1(w) \le 0, \dots, g_k(w) \le 0\\
& h_1(w) = 0, \dots, h_m(w) = 0
\end{aligned}
$$

with $P(w)$ convex and all $g_i$ and $h_i$ affine, necessary and sufficient conditions for a point $w^\circ$ to be an optimum are the existence of $\alpha^\circ$ and $\beta^\circ$ such that

$$
\begin{aligned}
&\frac{\partial L(w^\circ, \alpha^\circ, \beta^\circ)}{\partial w} = 0, \qquad
\frac{\partial L(w^\circ, \alpha^\circ, \beta^\circ)}{\partial \beta} = 0,\\
&\alpha_i^\circ\, g_i(w^\circ) = 0, \quad i = 1, \dots, k,\\
&g_i(w^\circ) \le 0, \quad i = 1, \dots, k,\\
&\alpha_i^\circ \ge 0, \quad i = 1, \dots, k.
\end{aligned}
$$

For a convex QP, it is sufficient to solve

$$\max_{\alpha \ge 0,\, \beta}\; \min_{w}\; L(w, \alpha, \beta).$$

**Dual Optimization Problem**

Primal OP: minimize

$$P(w, b) = \frac{1}{2}\, w \cdot w \quad\text{s.t.}\quad \forall i:\; y_i\,[w \cdot x_i + b] \ge 1.$$

Lemma: The solution $w^\circ$ can always be written as a linear combination of the training data:

$$w^\circ = \sum_{i=1}^{n} \alpha_i y_i x_i, \qquad \alpha_i \ge 0.$$

The coefficients $\alpha_i$ are the Lagrange multipliers, which leads to the

Dual OP: maximize

$$
\begin{aligned}
D(\alpha) &= \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j (x_i \cdot x_j)\\
\text{s.t.}\quad & \sum_{i=1}^{n} \alpha_i y_i = 0 \quad\text{and}\quad 0 \le \alpha_i.
\end{aligned}
$$

This is a positive semi-definite quadratic program.

**Primal $\Leftrightarrow$ Dual**

Theorem: The primal OP and the dual OP have the same solution. Given the solution $\alpha_i^\circ$ of the dual OP,

$$
w^\circ = \sum_{i=1}^{n} \alpha_i^\circ y_i x_i, \qquad
b^\circ = -\frac{1}{2}\left(w^\circ \cdot x^{pos} + w^\circ \cdot x^{neg}\right)
$$

is the solution of the primal OP, where $x^{pos}$ and $x^{neg}$ are support vectors ($\alpha_i^\circ > 0$) of the positive and the negative class, respectively.

Theorem: For any feasible points, $P(w, b) \ge D(\alpha)$.

$\Longrightarrow$ two alternative ways to represent the learning result:

- weight vector and threshold $(w, b)$
- vector of "influences" $\alpha_1, \dots, \alpha_n$
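To make the dual concrete, here is a hedged sketch in plain Python on a two-point toy set invented for illustration. It evaluates $D(\alpha)$, recovers $w^\circ$ and $b^\circ$ from $\alpha^\circ$ with the formulas of the Primal/Dual theorem, and checks that $P(w^\circ, b^\circ) = D(\alpha^\circ)$ while a non-optimal feasible pair satisfies $P(w, b) \ge D(\alpha)$. No QP solver is included: the optimal $\alpha^\circ$ is derived by hand for this toy case only, and all function names are hypothetical.

```python
from __future__ import annotations

from typing import Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def dual_objective(alpha: Vector, xs: Sequence[Vector], ys: Sequence[int]) -> float:
    """D(alpha) = sum_i alpha_i - 1/2 sum_i sum_j alpha_i alpha_j y_i y_j (x_i . x_j)."""
    n = len(alpha)
    quad = sum(
        alpha[i] * alpha[j] * ys[i] * ys[j] * dot(xs[i], xs[j])
        for i in range(n)
        for j in range(n)
    )
    return sum(alpha) - 0.5 * quad


def is_dual_feasible(alpha, ys, tol=1e-9) -> bool:
    """sum_i alpha_i y_i = 0 and alpha_i >= 0 for all i."""
    return abs(sum(a * y for a, y in zip(alpha, ys))) <= tol and min(alpha) >= -tol


def weight_from_dual(alpha, xs, ys) -> list[float]:
    """w = sum_i alpha_i y_i x_i."""
    return [sum(a * y * x[k] for a, y, x in zip(alpha, ys, xs)) for k in range(len(xs[0]))]


def threshold_from_dual(alpha, xs, ys, w, tol=1e-9) -> float:
    """b = -1/2 (w . x_pos + w . x_neg), using one support vector (alpha_i > 0) per class.

    Raises StopIteration if a class has no support vector.
    """
    x_pos = next(x for a, y, x in zip(alpha, ys, xs) if y > 0 and a > tol)
    x_neg = next(x for a, y, x in zip(alpha, ys, xs) if y < 0 and a > tol)
    return -0.5 * (dot(w, x_pos) + dot(w, x_neg))


def primal_objective(w) -> float:
    """P(w, b) = 1/2 w . w (does not depend on b)."""
    return 0.5 * dot(w, w)


def is_primal_feasible(w, b, xs, ys, tol=1e-9) -> bool:
    """y_i (w . x_i + b) >= 1 for all i."""
    return all(y * (dot(w, x) + b) >= 1 - tol for x, y in zip(xs, ys))


# Hypothetical toy set: one example per class.
xs = [[1.0, 1.0], [-1.0, -1.0]]
ys = [1, -1]
# Dual feasibility forces alpha_1 = alpha_2 = a; for this data D(a) = 2a - 4a^2,
# which is maximized at a = 1/4 (worked out by hand for this toy set only).
alpha_opt = [0.25, 0.25]
w = weight_from_dual(alpha_opt, xs, ys)        # [0.5, 0.5]
b = threshold_from_dual(alpha_opt, xs, ys, w)  # b == 0 (Python may display it as -0.0)
print(primal_objective(w), dual_objective(alpha_opt, xs, ys))  # 0.25 0.25
```

At the optimum the two objectives coincide; for any other feasible pair, such as $\alpha = (0.1, 0.1)$ and $w = (1, 1), b = 0$, the dual value (0.16) stays below the primal value (1.0).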

**Properties of the Dual OP**

Dual OP: maximize

$$
\begin{aligned}
D(\alpha) &= \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j (x_i \cdot x_j)\\
\text{s.t.}\quad & \sum_{i=1}^{n} \alpha_i y_i = 0 \quad\text{and}\quad 0 \le \alpha_i
\end{aligned}
$$

- single solution (i.e. $(w, b)$ is unique)
- one factor $\alpha_i$ for each training example
- $\alpha_i$ describes the "influence" of training example $i$ on the result
- $\alpha_i > 0 \Leftrightarrow$ training example $i$ is a support vector
- else $\alpha_i = 0$
- depends exclusively on inner products between training examples

**Properties of the Soft-Margin Dual OP**

Dual OP: maximize

$$
\begin{aligned}
D(\alpha) &= \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j (x_i \cdot x_j)\\
\text{s.t.}\quad & \sum_{i=1}^{n} \alpha_i y_i = 0 \quad\text{and}\quad 0 \le \alpha_i \le C
\end{aligned}
$$

- (mostly) single solution (i.e. $(w, b)$ is almost always unique)
- one factor $\alpha_i$ for each training example
- the "influence" of a single training example is limited by $C$
- $0 < \alpha_i < C \Rightarrow$ SV with $\xi_i = 0$
- $\alpha_i = C \Rightarrow$ SV with $\xi_i \ge 0$ (every example with $\xi_i > 0$ has $\alpha_i = C$)
- else $\alpha_i = 0$
- based exclusively on inner products between training examples
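The soft-margin properties can be illustrated with a small bookkeeping sketch in plain Python. It checks the constraints $0 \le \alpha_i \le C$ and $\sum_i \alpha_i y_i = 0$, labels each example by its multiplier, and computes the decision value $\sum_i \alpha_i y_i (x_i \cdot x) + b$ from inner products only, confirming it matches $w \cdot x + b$ with $w = \sum_i \alpha_i y_i x_i$. The data, multipliers and threshold are hypothetical values chosen merely to satisfy the constraints, not the output of a solver; the support-vector labels are only meaningful for an optimal $\alpha$. Function names are likewise illustrative.

```python
def dot(u, v) -> float:
    return sum(a * b for a, b in zip(u, v))


def is_soft_dual_feasible(alpha, ys, C, tol=1e-9) -> bool:
    """sum_i alpha_i y_i = 0 and 0 <= alpha_i <= C for all i."""
    balanced = abs(sum(a * y for a, y in zip(alpha, ys))) <= tol
    boxed = all(-tol <= a <= C + tol for a in alpha)
    return balanced and boxed


def sv_category(alpha_i: float, C: float, tol: float = 1e-8) -> str:
    """Role of an example, read off from its (optimal) multiplier alone."""
    if alpha_i <= tol:
        return "non-SV"     # alpha_i = 0
    if alpha_i >= C - tol:
        return "bound SV"   # alpha_i = C: xi_i >= 0 (all examples with xi_i > 0 land here)
    return "margin SV"      # 0 < alpha_i < C: xi_i = 0


def decision_value(x, alpha, xs, ys, b) -> float:
    """f(x) = sum_i alpha_i y_i (x_i . x) + b, computed from inner products only."""
    return sum(a * y * dot(xi, x) for a, y, xi in zip(alpha, ys, xs)) + b


# Hypothetical data and multipliers that satisfy the constraints for C = 1.0.
xs = [[2.0, 0.0], [1.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
ys = [1, 1, -1, -1]
alpha = [0.0, 1.0, 0.5, 0.5]
C, b = 1.0, -0.2

categories = [sv_category(a, C) for a in alpha]
# ['non-SV', 'bound SV', 'margin SV', 'margin SV']

# The same value via the explicit weight vector w = sum_i alpha_i y_i x_i.
w = [sum(a * y * xi[k] for a, y, xi in zip(alpha, ys, xs)) for k in range(2)]
x_new = [0.5, 2.0]
print(decision_value(x_new, alpha, xs, ys, b), dot(w, x_new) + b)
```

Because `decision_value` never builds `w`, it works entirely in the dual representation: all it needs are the $\alpha_i$, the labels, $b$ and inner products with the training examples.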
