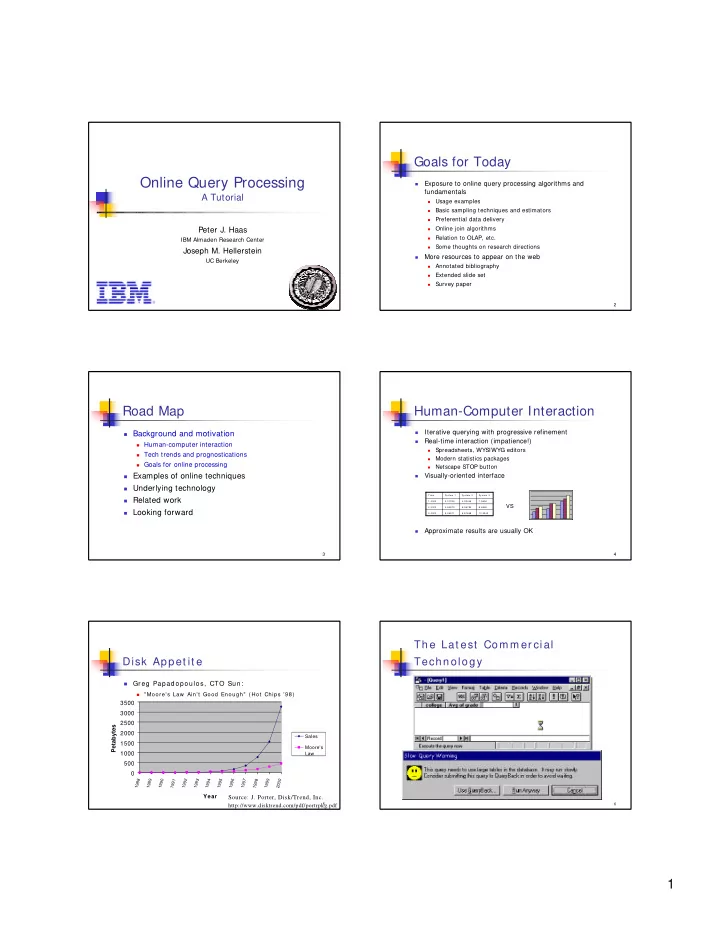

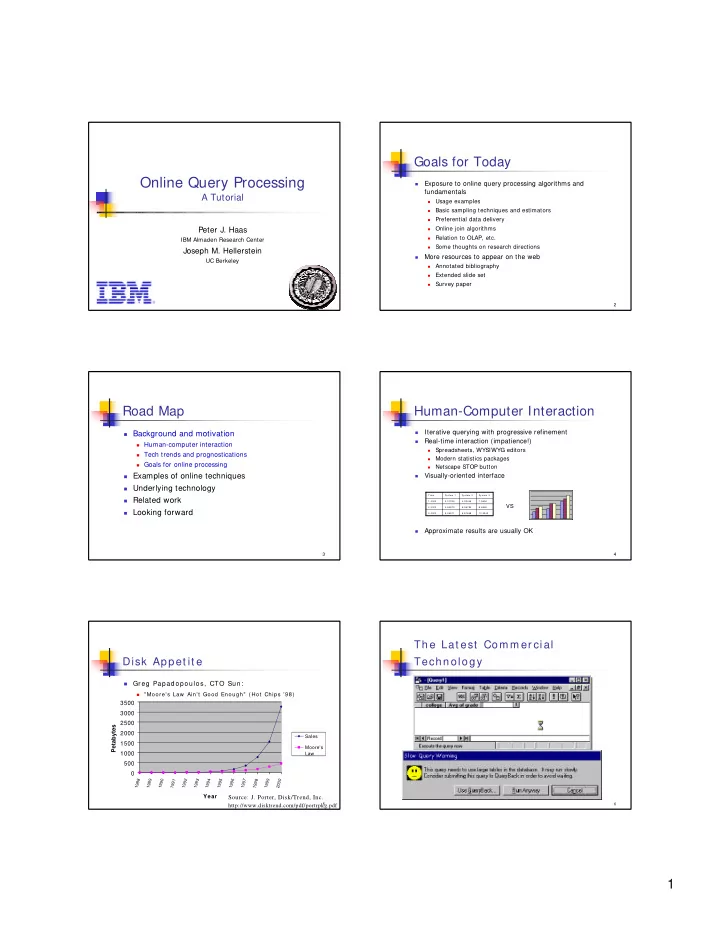

Goals for Today Online Query Processing Exposure to online query processing algorithms and � fundamentals A Tutorial Usage examples � Basic sampling techniques and estimators � Preferential data delivery � Peter J. Haas Online join algorithms � Relation to OLAP, etc. IBM Almaden Research Center � Some thoughts on research directions � Joseph M. Hellerstein More resources to appear on the web � UC Berkeley Annotated bibliography � Extended slide set � Survey paper � 1 2 Road Map Human-Computer Interaction Iterative querying with progressive refinement � Background and motivation � Real-time interaction (impatience!) � � Human-computer interaction Spreadsheets, WYSIWYG editors � � Tech trends and prognostications Modern statistics packages � � Goals for online processing Netscape STOP button � � Examples of online techniques Visually-oriented interface � � Underlying technology Tim e Syst em 1 Syst em 2 Syst em 3 � Related work 1.0000 3.01325 4.32445 7.5654 VS 2.0000 4.54673 6.56784 8.6562 � Looking forward 3.0000 5.46571 6.87658 10.3343 Approximate results are usually OK � 3 4 The Lat est Com m er cial Disk Appet it e Technology Greg Papadopoulos, CTO Sun: � � " Moore's Law Ain't Good Enough" ( Hot Chips ’98) 3500 3000 2500 Petabytes 2000 Sales 1500 Moore's 1000 Law 500 0 1988 1989 1990 1991 1992 1993 1994 1995 1996 1997 1998 1999 2000 Year Source: J. Porter, Disk/Trend, Inc. http://www.disktrend.com/pdf/portrpkg.pdf 5 6 1

Dr aw backs of Cur r ent Technology Goals f or Online Processing � Only exact answers are available New “ greedy” perf orm ance regim e � � A losing pr oposit ion as dat a volum e gr ow s Maximize 1 st derivat ive of t he “ mirt h index” � Har dw ar e im pr ovem en t s n ot su f f icien t � Mirt h defined on-t he-fly � I nt eract ive syst ems fail on massive dat a � � Theref ore need FEEDBACK and CONTROL � E. g. , spr eadsh eet pr ogr am s ( 6 4 Kr o w lim it ) � DBMS not int eract ive 100% No user f eedback or cont r ol ( “ back t o t he 60’s” ) � Online � Lon g pr ocessin g t im es ☺ Traditional Fu n dam en t al m ism at ch w it h pr ef er r ed m odes of HCI � OLAP: a part ial solut ion � Can’t handle ad hoc quer ies or dat a set s � Time 7 8 Road Map Online Aggregat ion Background and Mot ivat ion SELECT AVG( t em p) FROM t GROUP BY sit e � � Exam ples of Online Techniques 330K row s in t able � � � Aggregat ion, visualizat ion, cleaning/ browsing � t he exact answ er: Underlying t echnology � � Relat ed work � Looking Forw ard 9 10 Online Aggregat ion, cont ’d Online Aggregat ion, cont ’d A simple online aggregat ion int erface ( aft er 74 row s) Af t er 834 row s: � � 11 12 2

Exam ple: Online Aggr egat ion Online Dat a Visualizat ion I n Tioga Dat aSplash � Addit ional Feat ures: Speed up Slow dow n Term inat e 13 14 Online Enum er at ion Scalable Spreadsheet s Pot t er’s Wheel [ VLDB 2001] � Scalable spreadsheet � � A fract ion of dat a is mat erialized in GUI widget � Scrolling = preference for dat a delivery in a quant ile Permit s “ fuzzy” querying � I nt eract ive dat a cleaning � � Online st ruct ure and discrepancy det ect ion Online aggregat ion � 15 16 Visual Transf orm at ion Shot 17 18 3

Road Map Sam pling – Design I ssues Background and m ot ivat ion Granularit y of sample � � � I nst ance-level ( row -level): high I / O cost � Exam ples of online t echniques Block-level ( page-level) : high variabilit y from clust ering � � Underlying t echnology � Type of sam ple Building blocks: sampling, est imat ion � Oft en simple random sample ( SRS) � � Preferent ial dat a delivery � Especially f or on- t he-fly Pipelined adapt ive processing algorit hms � Wit h/ wit hout replacement usually not crit ical � � Relat ed work � Dat a st ruct ure from w hich t o sam ple Looking f orw ard � Files or relat ional t ables � I ndexes ( B + trees, etc) � 19 20 Row - level Sam pling Techniques Accept ance/ Rej ect ion Sam pling Maint ain file in random order � Accept row on page i w it h probabilit y = n i / n MAX � Sampling = scan � Or iginal pages Modif ied pages � I s file init ially in random order? r r r r r r r r r r r r r r r r r r � St at ist ical t est s needed: e.g., Runs t est , Sm ir nov t est r r r r r r r r r r r r r r r r r r r r r r r I n DB syst ems: clust er via RAND funct ion � � Must “ freshen” ordering ( online reorg) On -t he-fly sampling � � Via index on “ random” column � Com m only used in ot her set t ings � Else get random page, t hen row w it hin page E.g. sampling from j oins � Ex: ext ent - m ap sam plin g � � Pr oblem : var iable num ber of r ecor ds on page � E.g. sampling from indexes 21 22 Cost of Row - Level Sam pling Est im at ion f or Aggregat es Point est imat es � 1 0 0 � Easy : SUM, COUNT, AVERAGE Har d: MAX, MI N, quant iles, dist inct values � 8 0 Confidence int ervals – a measure of precision � Pages fetched (% ) 6 0 • 100,000 pages 4 0 • 200 2 0 row s/ page 0 Tw o cases: single-t able and j oins � 0 2 4 6 8 1 2 4 6 8 2 . . . . . . . . 0 0 0 0 1 1 1 1 Sam p l i n g Rat e ( % ) 23 24 4

Conf idence I nt ervals The Good and Bad New s Good n ew s: 1/ n 1 / 2 m agic ( n chosen on- t he-f ly) � 0.0075 1 0.8 0.0025 CI Endpoints Lg. Sample CI Length 0.6 Conserv. 0.4 Determ. -0.0025 0.2 0 -0.0075 0 100 200 300 400 500 Sample Size 1 50 99 � Bad n ew s: n eed le-in- a- h ayst ack pr oblem Sam p l e Si ze ( % ) 25 26 Sam pling Deployed in I ndust ry Pr ecom put at ion Techniques � “ Simulat ed” Bernoulli sampling � Tw o com ponent s � SQL: SELECT * WHERE RAND( ) < = 0 . 0 1 � Dat a r educt ion ( of t en expensive) � Sim ilar capabilit y in SAS � Appr oxim at e r econ st r u ct ion ( qu ick) � Bernoulli Sampling wit h pre- specified rat e � Pros and cons � I nf or m ix, Or acle 8i, ( DB2) � Ef f iciency vs flexibilit y � Ex : SELECT * FROM T1 SAMPLE ROW( 1 0 % ) , T2 � Class of quer ies t hat can be handled Degr ee of pr ecision � � Ex : SELECT * FROM T1 SAMPLE BLOCK( 1 0 % ) , T2 � Ease of im plem en t at ion � Not for novices � How m u ch of syst em m u st b e m od if ied � Need t o pr e- specif y pr ecision � How soph ist icat ed m u st developer be? n o f eed b ack / cont r ol � More w idely deployed in indust ry � r ecall t he “ m ult ir esolut ion” pat t er ns f r om exam ple � � Will give overview lat er No est im at or s pr ovided in cur r ent syst em s � 27 28 Road Map Pref erent ial Dat a Delivery Background and mot ivat ion Why needed � � � Speedup/ slow dow n arrow s � Examples of online t echniques Spreadsheet scrollbars � � Underlying t echnology � Pipeline quasi- sort Building blocks: sampling, est imat ion � � Cont inuous re- opt imizat ion ( eddies) � Preferent ial dat a delivery � I ndex st ride � Pipelined adapt ive processing algorit hms High I / O cost s, good for out liers � � Relat ed t echnology: precom put at ion � Online Reordering ( “ Juggle” ) Looking f orw ard � Excellent in most cases, no index required � � [ VLDB ’99, VLDBJ ’00] 29 30 5

Recommend

More recommend