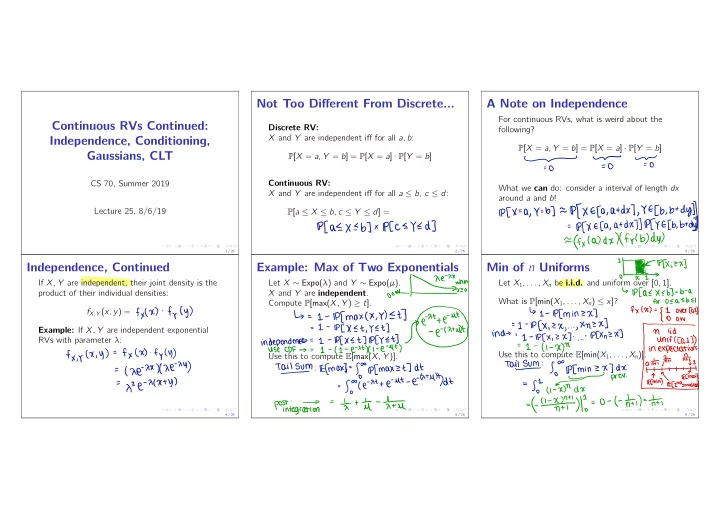

Not Too Different From Discrete... A Note on Independence For continuous RVs, what is weird about the Continuous RVs Continued: Discrete RV: following? X and Y are independent iff for all a , b : Independence, Conditioning, P [ X = a , Y = b ] = P [ X = a ] · P [ Y = b ] Gaussians, CLT P [ X = a , Y = b ] = P [ X = a ] · P [ Y = b ] To To - O = Continuous RV: CS 70, Summer 2019 What we can do: consider a interval of length dx X and Y are independent iff for all a ≤ b , c ≤ d : around a and b ! =p f X Efa , ) ) , YE [ b. - b) ) atdx btdy Lecture 25, 8/6/19 P [ a ≤ X ≤ b , c ≤ Y ≤ d ] = IPCX a , Y - - - , btdy at DX ) ) REY E [ b d ] Plas PEX E Ca , X Eb ] x IPCC EYE = dxlffyl ffx (a) b) dy ) = 1 / 26 2 / 26 3 / 26 ! ni Txizx ] " Independence, Continued Example: Max of Two Exponentials Min of n Uniforms ' is If X , Y are independent, their joint density is the Let X ∼ Expo( λ ) and Y ∼ Expo( µ ) . Let X 1 , . . . , X n be i.i.d. and uniform over [ 0 , 1 ] . : ↳ IPCAEXEBT b a product of their individual densities: X and Y are independent . - - - What is P [min( X 1 , . . . , X n ) ≤ x ] ? OEAEBEI Compute P [max( X , Y ) ≥ t ] . for - { . fy C y ) fxtx ) fxlx ) ↳ I overlay f X , Y ( x , y ) = Et ] ] - ↳ , Y ) IPC lpfmaxcx 1- minzx ut I = - - ° - IPCX , aw ] . Xnzx =L IP [ X 2X I , YET ] . , Et Example: If X , Y are independent exponential - = , . . " ' ' in " " . " ¥¥E¥¥ : : RVs with parameter λ : indecencies , ÷÷÷iL¥÷ f x ( x ) y ( y ) , y ) f f × , y ( X = Use this to compute E [min( X 1 , . . . , X n )] . - Use this to compute E [max( X , Y )] . M ) - xxxx - fo Tailsvm - : Tails Efmaxt - ECmin%ECzndsmanesty-f.a-xn.IT#I--o-tnttiI--nt - maxzt ] dt e p[ lpcmin ZXIDX So ( - : = xe HUH )dt iefmax y )ndx prer - e- = got . He - XCX ty ) Ut = So atte - - ' = ( e - ( I X - -1 tu life at = poftiegra.TN - 4 / 26 5 / 26 6 / 26

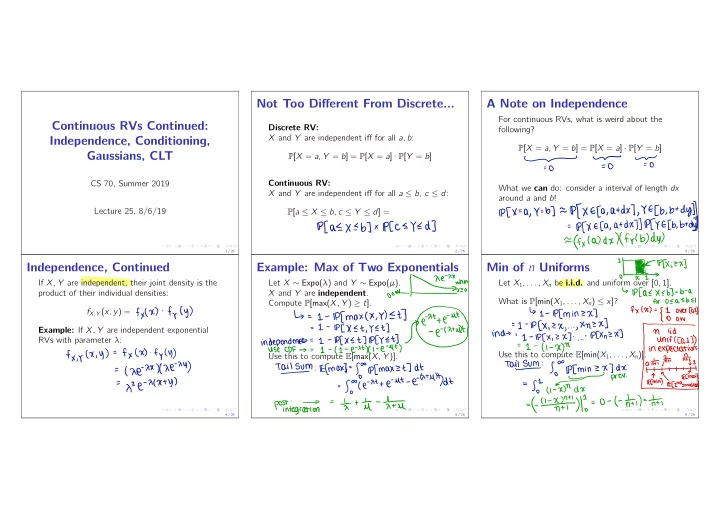

Min of n Uniforms Memorylessness of Exponential Conditional Density from We can’t talk about independence without talking What happens if we condition on events like prev xfgn slide What is the CDF of min( X 1 , . . . , X n ) ? about conditional probability ! X = a ? These have 0 probability! = BE × ] . , , Fmln l X ) mine z = . Let X ∼ Expo( λ ) . X is memoryless , i.e. The same story as discrete, except we now need to define a conditional density : What is the PDF of min( X 1 , . . . , X n ) ? P [ X ≥ s + t | X > t ] = P [ X ≥ s ] convention : d - Xin ) f Y | X ( y | x ) = f X , Y ( x , y ) C I CI too set this - Tx LHS p : f X ( x ) when tf n X ) - 1) n ( I × ( X ) O f O - ' - - . zsttfnfxstpredundant-pxteventj-f.ie Htt ) Think of f ( y | x ) as ] - I lpfxzstt e- n YIX Nfl x ) # = f- min - etat = T Pasty P [ Y 2 [ y , y + dy ] | X 2 [ x , x + dx ]] 7S=p[ XZS ] e- = 7 / 26 8 / 26 9 / 26 Conditional Density, Continued Example: Sum of Two Exponentials Example: Total Probability Rule Cy , ytdy ] ;D Given a conditional density f Y | X , compute Let X 1 , X 2 be i.i.d Expo( λ ) RVs. - pCY=yTx= Exercise What is the CDF of Y ? → a Let Y = X 1 + X 2 . Iffy .KZ/X)dz ↳ ±¥i¥¥¥7EE¥ P [ Y ≤ y | X = x ] = Cxxtdx What is P [ Y < y | X 1 = x ] ? I I What is the PDF of Y ? If we know P [ Y ≤ y | X = x ] , compute I XTfxtxdxotpafb.ru 1%1 ysylx What is P [ Y < y ] ? P [ Y ≤ y ] = - Xi ! . - for values case on . IPCX , X ] jipfyeylx x ) - I . PEX - PETEY IX X ] - § x ! - - , - If discrete case on 1%1 : X ' )f×¥dx Go with your gut! What worked for discrete also " Y - e- - I works for continuous. Exercise x ) ) → xxdx Hy a e - . - Soya - e . - 10 / 26 11 / 26 12 / 26

Break The Normal (Gaussian) Distribution Gaussian Tail Bound Let X ∼ N ( 0 , 1 ) . X is a normal or Gaussian RV if: About Easy upper bound on P [ | X | ≥ α ] , for α ≥ 1? SY mm # 1 . * 2 πσ 2 · e ( x − µ ) 2 / 2 σ 2 its mean p (Something we’ve seen before...) f X ( x ) = Chebyshev : s VarC If you could immediately gain one new skill, what Parameters: if FPC Ix O - of za ] , would it be? Ncel 02 ) Notation: X ∼ I , ± 42 E [ X ] = Var( X ) = 02 U ¥17 02=1 µ=o Standard Normal: , 13 / 26 14 / 26 15 / 26 Gaussian Tail Bound, Continued Shifting and Scaling Gaussians Shifting and Scaling Gaussians Let X ∼ N ( µ, σ ) and Y = X − µ 2 Turns out we can do better than Chebyshev. σ . Then: Can also go the other direction: - Mdx SETTEE R 1 NCO 2 π e − x 2 / 2 dx ≤ I ) 1 Y ∼ If X ∼ N ( 0 , 1 ) , and Y = µ + σ X : Idea: Use p α , Y is still Gaussian ! ¥19 pflXH2]=2lP[XZx ] N ° Proof: Compute P [ a ≤ Y ≤ b ] . to EAT XI out of ECU Notes scope E [ Y ] = to if : = =2SIfae-×%dx . if = Hkd shaded : ⇐ 210¥ Var fo X ) to X ) Var( Y ) = Var ( y ,e-X%dX = Change of variables: x = σ y + µ . Var ( X ) - 2 = o .lk#X-iDl : 2k¥ ⇒ I 2 f = 16 / 26 17 / 26 18 / 26

Sum of Independent Gaussians Example: Height Example: Height Exercise Let X , Y be independent standard Gaussians. Consider a family of a two parents and twins with the same height. The parents’ heights are E [ H ] = Let Z = [ aX + c ] + [ bY + d ] . independently drawn from a N ( 65 , 5 ) distribution. Then, Z is also Gaussian! (Proof optional.) The twins’ height are independent of the parents’, and from a N ( 40 , 10 ) distribution. E [ Z ] = Let H be the sum of the heights in the family. ECaxtctbytdf.at#yIIoaECY7/--ctdVar(aXtbYtCtd)--Var(aXtbY Define relevant RVs: Var[ H ] = Var( Z ) = ) I shift ) Varcax ) tvarcby = a2tb2 ) tbzvarcy azvarcx ) = = 19 / 26 20 / 26 21 / 26 Sample Mean The Central Limit Theorem (CLT) Example: Chebyshev vs. CLT We sample a RV X independently n times. Let X 1 , X 2 , . . . , X n be i.i.d. RVs with mean µ , Let X 1 , X 2 , . . . be i.i.d RVs with E [ X i ] = 1 and X has mean µ , variance σ 2 . variance σ 2 . (Assume mean, variance, are finite.) Var( X i ) = 1 2 . Let A n = X 1 + X 2 + ... + X n . n Denote the sample mean by A n = X 1 + X 2 + ... + X n Sample mean, as before: A n = X 1 + X 2 + ... + X n C- expectation E [ A n ] = 1 of single sample n n . Recall: E [ A n ] = it of single sample variance a Eftncxit 02 ← a- = If E [ X ] = Var( A n ) = Var( A n ) = An % . . IE CAN ] An - .tl/nD=tn(ECX.It:.tEEXnD--tnfnu)--UAnVarftnCXit...tXnD-- - Standdeairadtion - tartan Normalize the sample mean: f Normalize to get A 0 Var( X ) = n : - µ ECA 'n7=EfAn]= normalized An A 0 o n = : tvarcxn ) ] ' ntzfyarCXD.it - Ende A Ern 3 An as n → sammpleean . . . this ! . Then, as n ! 1 , P [ A 0 follows A In n ] ! = Var ( -1 '#fAo4= An ⇒ ' n ) = ( A WAY : easier Var = Ncaa ) dist . 22 / 26 23 / 26 24 / 26

Example: Chebyshev vs. CLT Summary Upper bound P [ A 0 n ≥ 2 ] for any n . I Independence and conditioning also (We don’t know if A 0 n is non-neg or symmetric .) generalize from the discrete RV case. 22 ] variant Plan 22 ] - of ' ' 1pct An E s 22 I The Gaussian is a very important continuous T s tf ECA n' I RV. It has several nice properties, including the fact that adding independent Gaussians If we take n ! 1 , upper bound on P [ A 0 n ≥ 2 ] ? gets you another Gaussian tail ① Gaussian - 2% 't # 22 ] const E X e = I The CLT tells us that if we take a sample PEA 'm const him - 95-99.7 average of a RV, the distribution of this ② 68 I " " 195% " average will approach a standard normal . approaches . ±¥9%¥Eao£ . 1) dist Nco 25 / 26 26 / 26

Recommend

More recommend