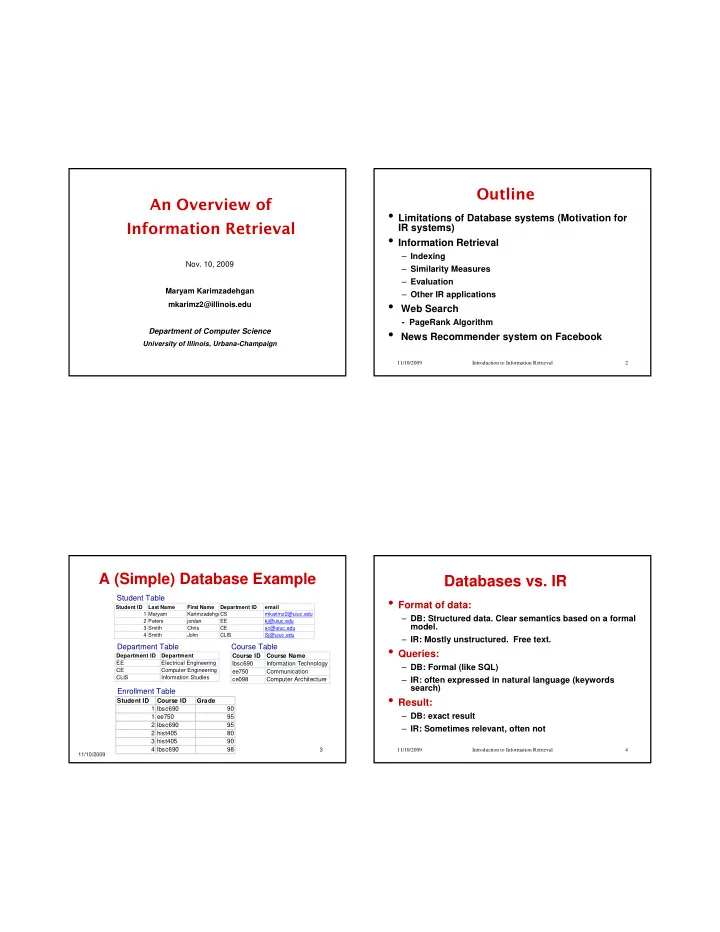

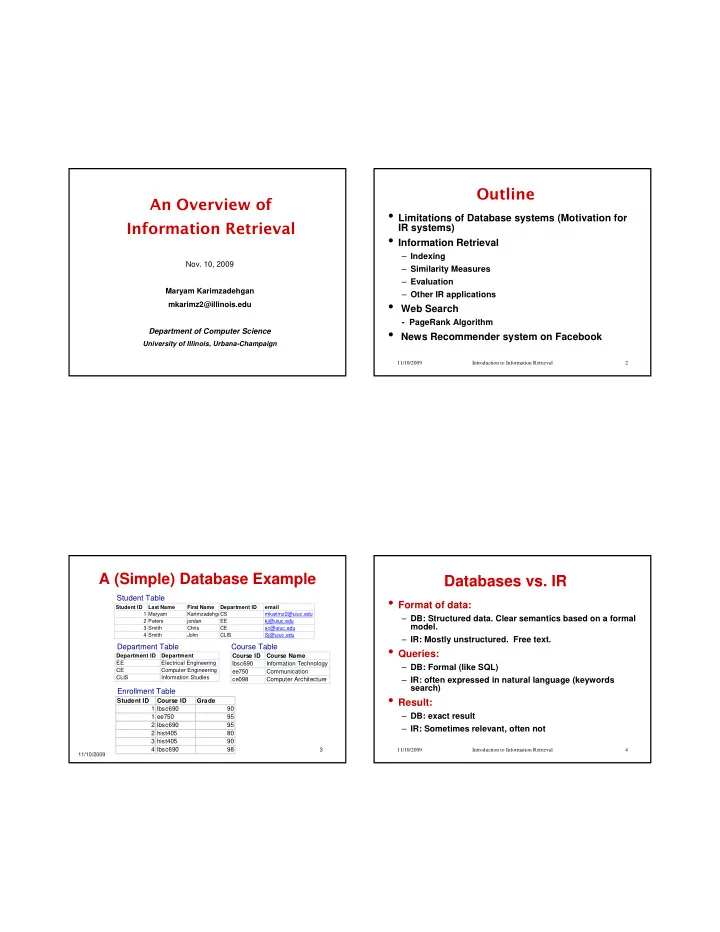

An Overview of Information Retrieval

Nov. 10, 2009
Maryam Karimzadehgan
mkarimz2@illinois.edu
Department of Computer Science
University of Illinois, Urbana-Champaign

11/10/2009 Introduction to Information Retrieval

Outline

• Limitations of Database systems (Motivation for IR systems)
• Information Retrieval
  – Indexing
  – Similarity Measures
  – Evaluation
  – Other IR applications
• Web Search
  – PageRank Algorithm
• News Recommender system on Facebook

A (Simple) Database Example

Student Table

| Student ID | Last Name | First Name | Department ID | email |
|---|---|---|---|---|
| 1 | Maryam | Karimzadehgan | CS | mkarimz2@uiuc.edu |
| 2 | Peters | jordan | EE | kj@uiuc.edu |
| 3 | Smith | Chris | CE | sc@uiuc.edu |
| 4 | Smith | John | CLIS | Sj@uiuc.edu |

Department Table

| Department ID | Department |
|---|---|
| EE | Electrical Engineering |
| CE | Computer Engineering |
| CLIS | Information Studies |

Course Table

| Course ID | Course Name |
|---|---|
| lbsc690 | Information Technology |
| ee750 | Communication |
| ce098 | Computer Architecture |

Enrollment Table

| Student ID | Course ID | Grade |
|---|---|---|
| 1 | lbsc690 | 90 |
| 1 | ee750 | 95 |
| 2 | lbsc690 | 95 |
| 2 | hist405 | 80 |
| 3 | hist405 | 90 |
| 4 | lbsc690 | 98 |

Databases vs. IR

• Format of data:
  – DB: Structured data. Clear semantics based on a formal model.
  – IR: Mostly unstructured. Free text.
• Queries:
  – DB: Formal (like SQL)
  – IR: often expressed in natural language (keywords search)
• Result:
  – DB: exact result
  – IR: Sometimes relevant, often not
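To make the DB side of this comparison concrete, here is a minimal sketch using Python's built-in `sqlite3` module. It loads a subset of the example tables and runs one formal query whose answer is exact. The table and column names (`student`, `enrollment`, `student_id`, and so on) are assumptions chosen for illustration; the slides do not give a schema.

```python
import sqlite3

# In-memory database holding a subset of the example tables (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE student (student_id INTEGER PRIMARY KEY, last_name TEXT, department_id TEXT);
    CREATE TABLE enrollment (student_id INTEGER, course_id TEXT, grade INTEGER);
    INSERT INTO student VALUES (2, 'Peters', 'EE'), (3, 'Smith', 'CE'), (4, 'Smith', 'CLIS');
    INSERT INTO enrollment VALUES (2, 'lbsc690', 95), (2, 'hist405', 80),
                                  (3, 'hist405', 90), (4, 'lbsc690', 98);
""")

# A formal query with an exact answer: who is enrolled in lbsc690, and with what grade?
rows = conn.execute("""
    SELECT s.student_id, s.last_name, e.grade
    FROM student AS s
    JOIN enrollment AS e ON s.student_id = e.student_id
    WHERE e.course_id = 'lbsc690'
    ORDER BY s.student_id
""").fetchall()

print(rows)
```

Every returned row satisfies the query exactly; there is no notion of a partially matching or "somewhat relevant" row, which is the gap IR systems address for free text.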

Short vs. Long Term Info Need

• Short-term information need (Ad hoc retrieval)
  – “Temporary need”
  – Information source is relatively static
  – User “pulls” information
  – Application example: library search, Web search
• Long-term information need (Filtering)
  – “Stable need”, e.g., new data mining algorithms
  – Information source is dynamic
  – System “pushes” information to user
  – Applications: news filter

What is Information Retrieval?

• Goal: Find the documents most relevant to a certain query (information need)
• Dealing with notions of:
  – Collection of documents
  – Query (User’s information need)
  – Notion of Relevancy

The Information Retrieval Cycle

[Diagram: Source Selection → Resource → Query Formulation → Query → Search → Ranked List → Selection → Documents → result, with a feedback loop labeled “query reformulation, relevance feedback”]

What Types of Information?

• Text (Documents)
• XML and structured documents
• Images
• Audio (sound effects, songs, etc.)
• Video
• Source code
• Applications/Web services

Slide is from Jimmy Lin’s tutorial

The IR Black Box

[Diagram: Query and Documents go into a black box; Results come out]

Slide is from Jimmy Lin’s tutorial

Inside The IR Black Box

[Diagram: Query → Representation → Query Representation; Documents → Representation → Document Representation → Index; a Comparison Function matches the Query Representation against the Index and produces Results]

Slide is from Jimmy Lin’s tutorial

Typical IR System Architecture

[Diagram labels: docs, Tokenizer, Indexer, Doc Rep (Index), Index, query, Query Rep, Scorer, results, User, judgments, Feedback]

1) Indexing

• Making it easier to match a query with a document
• Query and document should be represented using the same units/terms

Bag of Word Representation

DOCUMENT: This is a document in information retrieval
INDEX: document, information, retrieval, is, this

Slide is from ChengXiang Zhai’s CS410
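The bag-of-words idea from the indexing slide can be sketched in a few lines of Python. This is only an illustration: the regular-expression tokenizer and the function names `tokenize` and `bag_of_words` are assumptions, not something the slides specify. The point is that the query and the document pass through the same function, so they end up in the same units/terms.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def bag_of_words(text):
    """Map each term to its count, discarding word order."""
    return Counter(tokenize(text))

# The document and the query go through the same representation function.
doc_rep = bag_of_words("This is a document in information retrieval")
query_rep = bag_of_words("Information Retrieval")

# Terms shared by the query and the document.
shared = sorted(set(doc_rep) & set(query_rep))
print(shared)
```

Because both sides are lowercased and tokenized identically, the query terms match the document terms even though the original capitalization differs.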

What is a good indexing term?

• Specific (phrases) or general (single word)?
• Words with middle frequency are most useful
  – Not too specific (low utility, but still useful!)
  – Not too general (lack of discrimination, stop words)
  – Stop word removal is common, but rare words are kept in modern search engines
• Stop words are words such as:
  – a, about, above, according, across, after, afterwards, again, against, albeit, all, almost, alone, already, also, although, always, among, as, at …

Inverted Index

Doc 1: This is a sample document with one sample sentence
Doc 2: This is another sample document

| Term (Dictionary) | # docs | Total freq | Postings (Doc id, Freq) |
|---|---|---|---|
| This | 2 | 2 | (1, 1), (2, 1) |
| is | 2 | 2 | (1, 1), (2, 1) |
| sample | 2 | 3 | (1, 2), (2, 1) |
| another | 1 | 1 | (2, 1) |
| … | … | … | … |

2) Tokenization/Stemming

• Stemming: Mapping all inflectional forms of words to the same root form, e.g.
  – computer -> compute
  – computation -> compute
  – computing -> compute
• Porter’s Stemmer is popular for English

3) Relevance Feedback

[Diagram: Query → Retrieval Engine (over the Document collection) → Results: d1 3.5, d2 2.4, …, dk 0.5, ... → User → Judgments: d1 +, d2 -, d3 +, …, dk -, ... → Feedback → Updated query → Retrieval Engine]

Slide is from ChengXiang Zhai’s CS410
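Returning to the two-document inverted index example above, the following Python sketch builds the same dictionary and postings structure. The function name `build_inverted_index` and the whitespace tokenization are assumptions made for illustration; a real indexer would also apply stop word removal and stemming as described in the slides.

```python
from collections import Counter, defaultdict

docs = {
    1: "This is a sample document with one sample sentence",
    2: "This is another sample document",
}

def build_inverted_index(docs):
    """Return (dictionary, postings).

    dictionary[term] = (number of docs containing the term, total frequency)
    postings[term]   = list of (doc_id, frequency in that doc), ordered by doc_id
    """
    postings = defaultdict(list)
    for doc_id in sorted(docs):
        counts = Counter(docs[doc_id].lower().split())
        for term, freq in counts.items():
            postings[term].append((doc_id, freq))
    dictionary = {
        term: (len(plist), sum(freq for _, freq in plist))
        for term, plist in postings.items()
    }
    return dictionary, dict(postings)

dictionary, postings = build_inverted_index(docs)
print(dictionary["sample"], postings["sample"])
```

Looking up a query term is then a single dictionary access followed by a scan of that term's postings list, rather than a scan of every document.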

4) Scorer/Similarity Methods

1) Boolean model
2) Vector-space model
3) Probabilistic model
4) Language model

Boolean Model

• Each index term is either present or absent
• Documents are either Relevant or Not Relevant (no ranking)
• Advantages
  – Simple
• Disadvantages
  – No notion of ranking (exact matching only)
  – All index terms have equal weight

Vector Space Model

• Query and documents are represented as vectors of index terms
• Similarity calculated using COSINE similarity between two vectors
  – Ranked according to similarity

TF-IDF in Vector Space model

$$
\mathrm{tfidf}_{i,k} = f_{i,k} \cdot \log\left(\frac{N}{df_i}\right)
$$

• TF part: $f_{i,k}$, the frequency of term $i$ in document $k$
• IDF part: $\log(N / df_i)$, where $N$ is the number of documents and $df_i$ is the number of documents containing term $i$
• IDF: A term is more discriminative if it occurs in fewer documents
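The TF-IDF weighting and cosine ranking described above can be sketched as follows. This is a minimal illustration under stated assumptions: the three toy documents, the whitespace tokenization, and the helper names `idf_table`, `weight`, and `cosine` are all hypothetical, and the query is weighted with the same IDF values as the documents.

```python
import math
from collections import Counter

def idf_table(tokenized_docs):
    """IDF part: log(N / df_i) for every term i in the collection."""
    n_docs = len(tokenized_docs)
    df = Counter(term for doc in tokenized_docs for term in set(doc))
    return {term: math.log(n_docs / d) for term, d in df.items()}

def weight(tokens, idf):
    """TF-IDF vector: f_{i,k} * log(N / df_i); unseen terms get weight 0."""
    return {t: f * idf.get(t, 0.0) for t, f in Counter(tokens).items()}

def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

docs = [d.split() for d in (
    "data mining algorithms",
    "text mining paper",
    "food nutrition paper",
)]
idf = idf_table(docs)
doc_vectors = [weight(d, idf) for d in docs]
query_vector = weight("data mining".split(), idf)

scores = [cosine(query_vector, dv) for dv in doc_vectors]
# Document indices ordered from most to least similar to the query.
ranking = sorted(range(len(docs)), key=lambda k: scores[k], reverse=True)
print(ranking, scores)
```

Unlike the Boolean model, every document receives a graded score, so documents that share only some query terms are still ranked instead of being rejected outright.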

Language Models for Retrieval

[Diagram: each document has its own language model.
Document “Text mining paper” → Language Model: …, text ?, mining ?, association ?, clustering ?, …, food ?, …
Document “Food nutrition paper” → Language Model: …, food ?, nutrition ?, healthy ?, diet ?, …
Query = “data mining algorithms”: Which model would most likely have generated this query?]

Slide is from ChengXiang Zhai’s CS410

Retrieval as Language Model Estimation

• Document ranking based on query likelihood

$$
\log p(q \mid d) = \sum_{i} \log p(w_i \mid d), \quad \text{where } q = w_1 w_2 \ldots w_n
$$

  Here $p(w_i \mid d)$ is the document language model.
• Retrieval problem ≈ Estimation of $p(w_i \mid d)$
• Smoothing is an important issue, and distinguishes different approaches

Slide is from ChengXiang Zhai’s CS410

Precision vs. Recall

[Venn diagram: within All docs, the Retrieved set and the Relevant set overlap]

$$
\text{Recall} = \frac{|\text{RelRetrieved}|}{|\text{Rel in Collection}|}
\qquad
\text{Precision} = \frac{|\text{RelRetrieved}|}{|\text{Retrieved}|}
$$

Information Retrieval Evaluation

– Coverage of information
– Form of presentation
– Effort required/ease of Use
– Time and space efficiency
– Recall
  • proportion of relevant material actually retrieved
– Precision
  • proportion of retrieved material actually relevant
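As a rough sketch of how the query-likelihood ranking and the precision/recall measures above fit together, the Python below scores three toy documents and then evaluates the top result. Several things here are assumptions rather than anything the slides prescribe: the smoothing method (Jelinek-Mercer interpolation with the collection model, with `lam=0.5`), the toy documents, and the relevance judgments in `relevant` are all hypothetical.

```python
import math
from collections import Counter

def query_log_likelihood(query, doc, collection, lam=0.5):
    """log p(q|d) = sum_i log p(w_i|d).

    p(w|d) is smoothed (assumed Jelinek-Mercer interpolation):
    p(w|d) = (1 - lam) * count(w, d) / |d| + lam * count(w, C) / |C|
    """
    doc_counts, coll_counts = Counter(doc), Counter(collection)
    score = 0.0
    for w in query:
        p = ((1 - lam) * doc_counts[w] / len(doc)
             + lam * coll_counts[w] / len(collection))
        if p == 0.0:
            return float("-inf")  # word never occurs in the collection
        score += math.log(p)
    return score

def precision_recall(retrieved, relevant):
    """Precision = |RelRetrieved| / |Retrieved|; Recall = |RelRetrieved| / |Rel|."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

docs = [d.split() for d in (
    "text mining paper on data mining algorithms and clustering",
    "food nutrition paper on healthy diet",
    "clustering algorithms for text data",
)]
collection = [w for d in docs for w in d]
query = "data mining algorithms".split()

scores = [query_log_likelihood(query, d, collection) for d in docs]
ranking = sorted(range(len(docs)), key=lambda k: scores[k], reverse=True)

relevant = {0, 2}  # hypothetical relevance judgments
print(ranking, precision_recall(ranking[:1], relevant))
```

Without smoothing, the food document would get probability zero for every query word and its log-likelihood would be undefined; interpolating with the collection model keeps all scores finite. Cutting the ranked list after one result gives perfect precision but only half the recall, which illustrates the trade-off between the two measures.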

Web Search – Google PageRank Algorithm

Characteristics of Web Information

• “Infinite” size
  – Static HTML pages
  – Dynamically generated HTML pages (DB)
• Semi-structured
  – Structured = HTML tags, hyperlinks, etc.
  – Unstructured = Text
• Different formats (pdf, word, ps, …)
• Multi-media (Textual, audio, images, …)
• High variance in quality (much junk)

Exploiting Inter-Document Links

[Diagram: a link’s description (“anchor text”) serves as “extra text”/summary for the doc it points to; links indicate the utility of a doc; nodes labeled Authority and Hub]

PageRank: Capturing Page “Popularity” [Page & Brin 98]

• Intuitions
  – Links are like citations in literature
  – A page that is cited often can be expected to be more useful in general
  – Consider “indirect citations” (being cited by a highly cited paper counts a lot…)
  – Smoothing of citations (every page is assumed to have a non-zero citation count)

Slide is from ChengXiang Zhai’s CS410
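The PageRank intuitions above can be sketched with a simple power iteration in Python. This is an illustrative sketch, not the algorithm as given in the slides (which state only the intuitions): the damping value 0.85, the fixed iteration count, the handling of pages without out-links, and the four-page toy graph are all assumptions. The `(1 - damping) / n` term plays the role of the "smoothing of citations", giving every page a non-zero score.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank.

    links maps each page to the list of pages it links to.
    Returns a dict of page -> score; the scores sum to 1.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Smoothing: every page starts each round with a small non-zero share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for p in pages:
            targets = links.get(p, [])
            if targets:
                # A page passes its score on, split evenly among its out-links.
                share = damping * rank[p] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Assumed treatment of pages with no out-links: spread evenly.
                for t in pages:
                    new_rank[t] += damping * rank[p] / n
        rank = new_rank
    return rank

# Toy link graph (hypothetical): A, B, and D all cite C; C cites A.
links = {"A": ["C"], "B": ["C"], "C": ["A"], "D": ["C"]}
rank = pagerank(links)
print(sorted(rank, key=rank.get, reverse=True))
```

In this toy graph C is cited most often and ranks highest, while A outranks B and D even though only one page links to it, because that one link comes from the highly ranked C: the "indirect citations" effect.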
