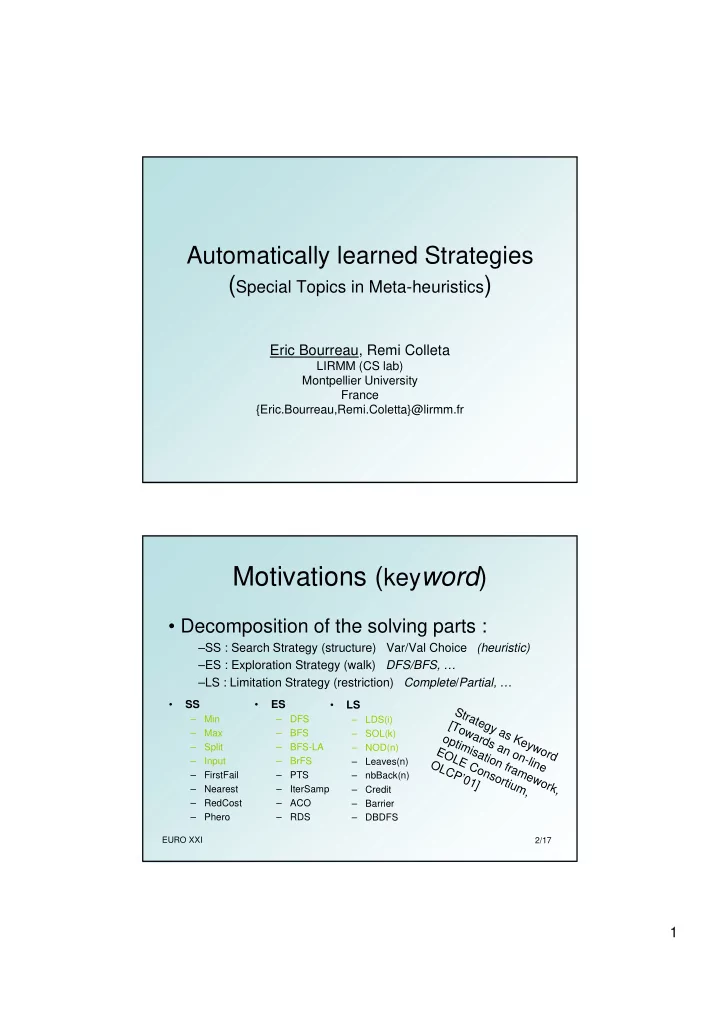

Automatically Learned Strategies
(Special Topics in Meta-heuristics)

Eric Bourreau, Remi Coletta
LIRMM (CS lab), Montpellier University, France
{Eric.Bourreau,Remi.Coletta}@lirmm.fr
EURO XXI

Motivations (keyword)
• Decomposition of the solving parts:
  – SS: Search Strategy (structure): Var/Val choice (heuristic)
  – ES: Exploration Strategy (walk): DFS/BFS, …
  – LS: Limitation Strategy (restriction): Complete / Partial, …
• Strategy as keyword [Towards an on-line optimisation framework, EOLE Consortium, OLCP’01]:
  – SS: Min, Max, Split, Input, FirstFail, Nearest, RedCost, Phero
  – ES: DFS, BFS, BFS-LA, BrFS, PTS, IterSamp, ACO, RDS, DBDFS
  – LS: LDS(i), SOL(k), NOD(n), Leaves(n), nbBack(n), Credit, Barrier
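The SS / ES / LS decomposition above can be sketched as a strategy assembled from keywords. This is a minimal illustration, not the EOLE framework itself: the names `Strategy`, `VAR_CHOICE`, `VAL_ORDER` and `dfs_solve` are hypothetical, only the DFS exploration keyword is implemented, and the limitation keyword is SOL(k).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Assignment = Dict[str, int]
Domains = Dict[str, List[int]]

# SS keywords (hypothetical tables): variable choice and value order.
VAR_CHOICE = {
    "Input": lambda doms, free: free[0],
    "FirstFail": lambda doms, free: min(free, key=lambda v: len(doms[v])),
}
VAL_ORDER = {
    "Min": sorted,
    "Max": lambda values: sorted(values, reverse=True),
}


@dataclass
class Strategy:
    var_choice: str  # SS keyword: "Input" or "FirstFail"
    val_order: str   # SS keyword: "Min" or "Max"
    sol_limit: int   # LS keyword SOL(k): stop after k solutions


def dfs_solve(doms: Domains,
              consistent: Callable[[Assignment], bool],
              strategy: Strategy) -> List[Assignment]:
    """ES keyword DFS: depth-first walk driven by the SS and LS keywords."""
    solutions: List[Assignment] = []

    def dfs(assignment: Assignment) -> None:
        if len(solutions) >= strategy.sol_limit:
            return  # limitation strategy reached
        free = [v for v in doms if v not in assignment]
        if not free:
            solutions.append(dict(assignment))
            return
        var = VAR_CHOICE[strategy.var_choice](doms, free)
        for val in VAL_ORDER[strategy.val_order](doms[var]):
            assignment[var] = val
            if consistent(assignment):
                dfs(assignment)
            del assignment[var]

    dfs({})
    return solutions


def all_different(assignment: Assignment) -> bool:
    return len(set(assignment.values())) == len(assignment)


doms = {"x": [1, 2, 3], "y": [1, 2], "z": [1, 2, 3]}
first = dfs_solve(doms, all_different, Strategy("FirstFail", "Min", 1))
print(first)
```

Swapping a single keyword (for example "Min" for "Max") changes the walk without touching the solver, which is the point of treating strategies as keywords.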

Motivations (sentence)
[Perron, ILOG, CP’99] [Laburthe, Salsa, Cons’02] [Degivry, Tools, Comp&OR’04]
• Rewrite old heuristics:
  – Masure = DFS(LOC(DP))
  – Pesant = DFS(k-Opt(Nearest))
  – Benoist = Branch(Move(Flow))
  – Rousseau = SEQ(LDS(3,vs1), VNS(1000, vs2))
• Add grammar:
  Search ::= BasicSearch | CompoundSearch
  BasicSearch ::= Decision | BasicComp
  Decision ::= Refinement | Transformation
  Refinement ::= SETV(Variable, Value) | REMV(Variable, Value) | ... | PROP(Integer)
  Transformation ::= ASS(Variable, Value) | UNASS(Variable) | ... | ENLARGE(Variable, Values)
  BasicComp ::= FAIL | SUCCESS | SEQ(Search1, Search2) | ALT(Search1, Search2)
  CompoundSearch ::= GENERATE(Variable) | GENERATE(Variables) | ... | FLIP(Variables) | LOCS(Integer, Variables) | ...
• And control:
  – Prop, FAIL, Success, Assign
  – SEQ, ALT, While, THEN

Motivations (extend and learn)
[Caseau, A Meta Heuristic factory for the VRP, CP99]
  <Build> :: Insert(i) | <LDS> | DO(<Build>,<Optimize>) | FORALL(<LDS>,<Optimize>)
  <Optimize> :: CHAIN(n,m) | TREE(n,m,k) | LNS(n,h,<Build>) | LOOP(n,<Optimize>) | THEN(<Optimize>)
  <LDS> :: LDS(i,n,l)
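Strategy "sentences" such as SEQ(LDS(3,vs1), VNS(1000, vs2)) are nested keyword terms, so they can be read into a tree before being interpreted or mutated. The following is a minimal sketch of such a reader. `Term`, `parse` and `keywords` are hypothetical names, and the parser only checks the NAME(arg, ...) shape, not the full grammar on the slides.

```python
import re
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Term:
    name: str
    args: List["Term"] = field(default_factory=list)


# A token is a keyword (hyphens allowed, as in k-Opt), an integer, or ( ) ,
TOKEN = re.compile(r"\s*([A-Za-z_][\w-]*|\d+|[(),])")


def tokenize(text: str) -> List[str]:
    text = text.strip()
    tokens, pos = [], 0
    while pos < len(text):
        match = TOKEN.match(text, pos)
        if match is None:
            raise ValueError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


def _parse_term(tokens: List[str], i: int) -> Tuple[Term, int]:
    if i >= len(tokens) or tokens[i] in "(),":
        raise ValueError("keyword or literal expected")
    term = Term(tokens[i])
    i += 1
    if i < len(tokens) and tokens[i] == "(":
        i += 1
        while True:
            arg, i = _parse_term(tokens, i)
            term.args.append(arg)
            if i < len(tokens) and tokens[i] == ",":
                i += 1
            elif i < len(tokens) and tokens[i] == ")":
                i += 1
                break
            else:
                raise ValueError("',' or ')' expected")
    return term, i


def parse(text: str) -> Term:
    tokens = tokenize(text)
    term, end = _parse_term(tokens, 0)
    if end != len(tokens):
        raise ValueError("trailing tokens after the strategy")
    return term


def keywords(term: Term) -> List[str]:
    """Names of all nodes in pre-order."""
    names = [term.name]
    for arg in term.args:
        names.extend(keywords(arg))
    return names


rousseau = parse("SEQ(LDS(3,vs1), VNS(1000, vs2))")
print(keywords(rousseau))
```

A tree form like this is what makes the "extend and learn" step possible: sub-terms can be swapped or regenerated while the sentence stays well-formed.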

Main Drawbacks
• In the discovery part:
  – At each new generation, the quality of every new strategy has to be evaluated. This can be time-consuming (especially for bad strategies!)
• Still too close to the problem domain
  – Patterns are dedicated to:
    • routing problems (k-opt),
    • scheduling (shuffling),
    • frequency assignment (quasi-clique resolution)

Outline
• Motivations / Drawbacks
• My Solution: Constraint Programming
• New Framework
• Experiments (in progress)
• Encountered Problems
• Conclusion

Time → Machine Learning
• Quality predictor to evaluate performance quickly [Hutter, Hamadi, Hoos, Leyton-Brown, CP06] [Adenso-Díaz, Laguna, OR’06]
  – Build a training set (a few thousand runs)
  – Validate the prediction on the measures that matter (time, number of nodes)
  – Evaluate it on new strategies in polynomial time
• Possible choices
  – Neural network (perceptron),
  – Decision tree and regression (M5P, J48),
  – Instance-based learning (k-means, SVM)

Restricted Vocabulary
• Few parameters … use CSP
• Decision tree on the parameters to extract a smaller but still significant subset
  – First interest: reduce the number of parameters
  – Second interest: sort the parameters (useful for the later crossover step)
• Generic representation (integer)
• Solving = variable ordering in DFS search
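The idea of a quality predictor can be illustrated with a very small instance-based learner: given a training set of (strategy parameters, measured time) pairs, the cost of a new strategy is estimated from its nearest neighbours instead of by running it. This is only a sketch under stated assumptions: `knn_predict` is a hypothetical name, the training rows are made-up numbers, and k-nearest-neighbour regression stands in for whichever learner the authors actually used.

```python
import math
from typing import List, Sequence, Tuple

Row = Tuple[Sequence[float], float]  # (parameter vector, measured time)


def knn_predict(training: List[Row], query: Sequence[float], k: int = 3) -> float:
    """Average the measured times of the k training rows closest to query."""
    ranked = sorted(training, key=lambda row: math.dist(row[0], query))
    nearest = ranked[:k]
    return sum(time for _, time in nearest) / len(nearest)


# Made-up training set: two parameters per strategy, time in seconds.
training_set = [
    ((1.0, 0.0), 10.0),
    ((0.9, 0.1), 12.0),
    ((0.0, 1.0), 50.0),
    ((0.1, 0.9), 46.0),
]

estimate = knn_predict(training_set, (1.0, 0.05), k=2)
print(estimate)
```

Each prediction costs one pass over the training set, which is the polynomial-time evaluation the slide asks for; the expensive solver runs are only needed to build the training set.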

Constraint Satisfaction Problem
• An instance of a CSP is a triple (X, D, C)
  – X: a set of variables
  – D: the domains of possible values for the variables in X
  – C: a set of constraints on X
• A solution is:
  – an instantiation of all variables in X
  – with values taken from their domains in D
  – satisfying every constraint in C
• During the search for a solution
  – a filtering algorithm for each constraint
  – removes inconsistent values from the variable domains
  – and propagates this information through the constraint network

Illustrative example
• Map Coloring
  – X: one variable M_i per region
  – D: M_i :: [1,2,3,4]
  – C: M_i ≠ M_j for neighbouring regions, … alldifferent(M_a, M_b, M_c)
• Solving heuristic:
  – VARIABLE(minimal domain, maximum connected)
  – CONSTRAINTS(family, quality, efficiency)
• Backdoor
[Figure: coloured map with the remaining domain shown on each region]
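The map-coloring example and the VARIABLE(minimal domain, maximum connected) heuristic can be sketched as a DFS with simple filtering. This is a minimal sketch, assuming only binary "different colour" constraints between neighbours and forward checking as the filtering algorithm. `solve` and the four-region map are hypothetical, not taken from the slides.

```python
from typing import Dict, List, Optional, Set

Domains = Dict[str, Set[int]]
Graph = Dict[str, List[str]]


def solve(domains: Domains, neighbours: Graph) -> Optional[Dict[str, int]]:
    """Return one colouring, or None if the instance has no solution."""
    return _search({v: set(d) for v, d in domains.items()}, neighbours, {})


def _search(domains: Domains, neighbours: Graph,
            assignment: Dict[str, int]) -> Optional[Dict[str, int]]:
    free = [v for v in domains if v not in assignment]
    if not free:
        return dict(assignment)
    # Variable heuristic: smallest domain first, ties broken by most neighbours.
    var = min(free, key=lambda v: (len(domains[v]), -len(neighbours[v])))
    for value in sorted(domains[var]):
        # Filtering: remove value from the domains of unassigned neighbours.
        pruned = {v: set(d) for v, d in domains.items()}
        pruned[var] = {value}
        wiped_out = False
        for other in neighbours[var]:
            if other not in assignment:
                pruned[other].discard(value)
                if not pruned[other]:
                    wiped_out = True  # an empty domain means a dead end
                    break
        if wiped_out:
            continue
        assignment[var] = value
        result = _search(pruned, neighbours, assignment)
        if result is not None:
            return result
        del assignment[var]
    return None


# Hypothetical map: A, B, C are mutual neighbours, D only touches C.
graph = {"A": ["B", "C"], "B": ["A", "C"], "C": ["A", "B", "D"], "D": ["C"]}
colours = solve({region: {1, 2, 3} for region in graph}, graph)
print(colours)
```

Because every assignment immediately prunes the neighbours' domains, any value still present in a domain is consistent with the regions already coloured, so no separate consistency check is needed at the leaves.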

Selected Attributes on variables and constraints
• Static attributes
  – Arity (number of variables involved in the constraint)
  – Propagation Cost (complexity of the filtering algorithm family)
  – Propagation Efficiency
  – Tightness (number of valid solutions divided by number of possible assignments)
• Dynamic attributes (×2: specific / family), problem/instance dependent
  – Average time
  – Number of calls
  – Average pruned values
• Score for the selected variable: (formula not recoverable from the source)

Framework
[Diagram, boxes listed in flow order]
• Problem (modeled with CSP)
• n randomized heuristics
• Database of running examples:
  {a^1_0, e^1_0, a^1_1, e^1_1, …, e^1_22, time_1 … a^n_0, e^n_0, a^n_1, e^n_1, …, e^n_22, time_n}
• Decision tree
• Filtered database:
  {a^1_σ(0), a^1_σ(1), …, e^1_σ(15), time_1 … a^n_σ(0), a^n_σ(1), …, e^n_σ(15), time_n}
• Neural network
• Genetic algorithm → new generations
• Best candidates:
  {a^1_σ(0), a^1_σ(1), …, e^1_σ(15), time_1 … a^n'_σ(0), a^n'_σ(1), …, e^n'_σ(15), time_n'}
• Validation
• Best generation: best heuristic
• Classical heuristic: a*_σ(0), a*_σ(1), …, e*_σ(15), time~
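The loop at the heart of the framework (a genetic algorithm that breeds new heuristics and ranks them with a learned predictor rather than by running the solver) can be sketched as follows. This is an illustrative sketch only: `evolve` is a hypothetical name, a chromosome is assumed to be a list of attribute weights in [0, 1], one-point crossover and Gaussian mutation are assumed operators, and `toy_predictor` is a stand-in for the trained neural network.

```python
import random
from typing import Callable, List, Tuple

Chromosome = List[float]


def evolve(predict_time: Callable[[Chromosome], float], n_genes: int,
           pop_size: int = 20, generations: int = 30,
           seed: int = 0) -> Tuple[Chromosome, List[float]]:
    """Return the best chromosome and the best predicted time per generation.

    Requires n_genes >= 2 (one-point crossover needs a cut point).
    """
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(n_genes)]
                  for _ in range(pop_size)]
    history: List[float] = []
    for _ in range(generations):
        # Rank by the surrogate model instead of running the solver.
        population.sort(key=predict_time)
        history.append(predict_time(population[0]))
        parents = population[: pop_size // 2]  # elitism: best half survives
        children: List[Chromosome] = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_genes)
            child = a[:cut] + b[cut:]          # one-point crossover
            i = rng.randrange(n_genes)         # mutate a single gene
            child[i] = min(1.0, max(0.0, child[i] + rng.gauss(0, 0.1)))
            children.append(child)
        population = parents + children
    best = min(population, key=predict_time)
    return best, history + [predict_time(best)]


def toy_predictor(weights: Chromosome) -> float:
    """Stand-in for the trained network: fastest when all weights are 0.5."""
    return sum((w - 0.5) ** 2 for w in weights)


best, history = evolve(toy_predictor, n_genes=6)
print(history[0], history[-1])
```

Only the final best candidates would then be run on the real solver, which corresponds to the validation step of the diagram.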

Encountered Problems (1)
• Diversification of heuristics
  – Necessary for a good learning curve for the prediction
• The decision tree does not have enough information
  – It did not reject any attribute
    • The database must be large: running the samples will take a long time
    • The learning curve is impacted (backpropagation): the learning phase will take a long time
  – It did not sort the attributes
    • The order of genes in the chromosome is impacted: a crossover more complex than the basic one has to be introduced

Encountered Problems (2)
• Discretization problem for the targeted class:
  – Non-uniform distribution
  – Extreme points take very long to compute

Preliminary experiments
• Sudoku
  – Trained on
    • CPU time
    • Number of nodes
  – Promising results
    • -24% CPU / -2% Nodes
    • -27% CPU / -12% Nodes
• Extension to MagicSquare … TimeTabling
• Another branch: [map coloring/RLFAP/RCPSP]

Conclusion
• Promising new directions (no programming skill, no PhD in OR, …) (solving part fully automated)

Perspectives
• Dynamic calibration = add depth in the learning curve (a kind of adaptive strategy)

Thanks to the crew
