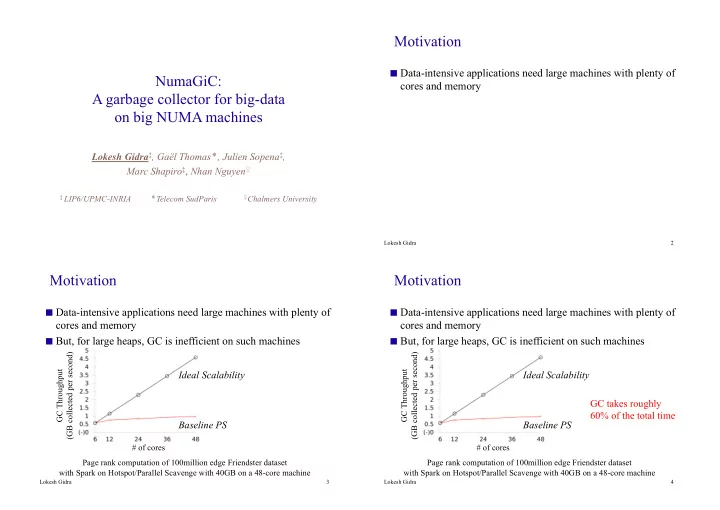

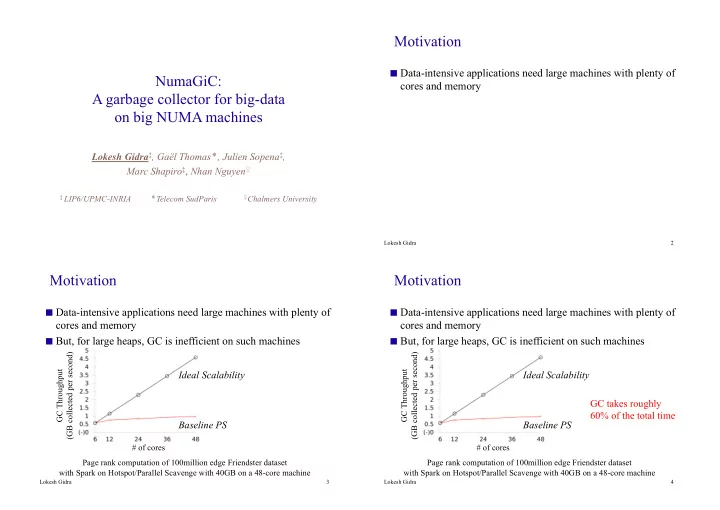

NumaGiC: A garbage collector for big-data on big NUMA machines

Lokesh Gidra‡, Gaël Thomas†, Julien Sopena‡, Marc Shapiro‡, Nhan Nguyen♀
‡ LIP6/UPMC-INRIA, † Telecom SudParis, ♀ Chalmers University

Motivation

◼ Data-intensive applications need large machines with plenty of cores and memory
◼ But, for large heaps, GC is inefficient on such machines

[Figure: GC throughput (GB collected per second) vs. # of cores, Baseline PS compared with ideal scalability. Annotation: GC takes roughly 60% of the total time.]

Page rank computation of the 100-million-edge Friendster dataset with Spark on Hotspot/Parallel Scavenge with 40GB on a 48-core machine

Outline

◼ Why GC doesn’t scale?
◼ Our Solution: NumaGiC
◼ Evaluation

GCs don’t scale because machines are NUMA

Hardware hides the distributed memory => application silently creates inter-node references

[Figure: four NUMA nodes (Node 0 to Node 3), each with its own memory]

But memory distribution is also hidden to the GC threads when they traverse the object graph

[Figure: a GC thread on one of the four NUMA nodes]

GCs don’t scale because machines are NUMA

A GC thread thus silently traverses remote references and continues its graph traversal on any node

When all GC threads access any memory nodes, the inter-connect potentially saturates => high memory access latency

[Figure: GC threads following references across the four NUMA nodes]

How can we fix the memory locality issue?

Simply by preventing any remote memory access

Prevent remote access using messages

Enforces memory access locality by trading remote memory accesses for messages

[Figure: Node 0 and Node 1, each with its own memory and GC thread (Thread 0, Thread 1)]

Prevent remote access using messages

Remote reference => the GC thread sends it to its home node

And the home node's thread continues the graph traversal locally
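The message-based traversal above can be illustrated with a small single-process simulation. This is only a sketch under stated assumptions, not the NumaGiC implementation: the object graph is a plain dictionary, `home` maps each object to a NUMA node, and the function name `local_only_mark` and the round-robin scheduling of one simulated GC thread per node are hypothetical choices made for illustration.

```python
from collections import deque


def local_only_mark(graph, home, roots, num_nodes):
    """Mark reachable objects; each simulated GC thread touches only local objects.

    graph: dict mapping an object to the list of objects it references
    home:  dict mapping an object to the id of the node that stores it
    Returns the set of marked objects and the number of inter-node messages.
    """
    queues = [deque() for _ in range(num_nodes)]  # one work queue per node
    marked = set()
    messages = 0

    for root in roots:
        queues[home[root]].append(root)

    # Round-robin over nodes stands in for the per-node GC threads.
    while any(queues):
        for node in range(num_nodes):
            queue = queues[node]
            while queue:
                obj = queue.popleft()  # always an object stored on `node`
                if obj in marked:
                    continue
                marked.add(obj)
                for ref in graph[obj]:
                    if home[ref] != node:
                        messages += 1  # remote reference: send it to its home node
                    queues[home[ref]].append(ref)
    return marked, messages


# Hypothetical example: "a" and "b" live on node 0, the rest on node 1.
graph = {"a": ["b", "c"], "b": ["d"], "c": [], "d": ["a"], "e": []}
home = {"a": 0, "b": 0, "c": 1, "d": 1, "e": 1}
marked, messages = local_only_mark(graph, home, ["a"], num_nodes=2)
print(sorted(marked), messages)
```

In this example every reference that crosses a node boundary costs one message, which is why the number of inter-node references matters so much for this design.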

Using messages enforces local access…
…but opens up other performance challenges

Problem 1: a msg is costlier than a remote access

◼ Too many messages: inter-node messages must be minimized
• Observation: app threads naturally create clusters of newly allocated objs
• 99% of recently allocated objects are clustered

Approach: let objects allocated by a thread stay on its node

[Figure: clusters of objects on Node 0 and Node 1]

Problem 2: Limited parallelism

◼ Due to serialized traversal of object clusters across nodes
  Node 1 idles while node 0 collects its memory
◼ Solution: adaptive algorithm
  Trade-off between locality and parallelism
  1. Prevent remote access by using messages when not idling
  2. Steal and access remote objects otherwise

Evaluation

◼ Comparison of NumaGiC with
  1. ParallelScavenge (PS): baseline stop-the-world GC of Hotspot
  2. Improved PS: PS with lock-free data structures and interleaved heap space
  3. NAPS: Improved PS + slightly better locality, but no messages
◼ Metrics
  • GC throughput
    – Amount of live data collected per second (GB/s)
    – Higher is better
  • Application performance
    – Relative to improved PS
    – Higher is better
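The adaptive trade-off described under Problem 2 above (stay local and use messages while there is local work, steal and access remote objects when idle) can be sketched as a small simulation. This is an illustrative sketch under assumptions, not the algorithm as implemented in NumaGiC: the name `adaptive_mark`, the "steal from the longest queue" victim choice, and the lock-step scheduling of simulated GC threads are all hypothetical.

```python
from collections import deque


def adaptive_mark(graph, home, roots, num_nodes):
    """Mark reachable objects with a local-first, steal-when-idle policy.

    graph: dict mapping an object to the list of objects it references
    home:  dict mapping an object to the id of the node that stores it
    Returns the set of marked objects and counters for messages and steals.
    """
    queues = [deque() for _ in range(num_nodes)]
    marked = set()
    stats = {"messages": 0, "steals": 0}

    for root in roots:
        queues[home[root]].append(root)

    while any(queues):
        for node in range(num_nodes):  # one simulated step per GC thread
            if queues[node]:
                obj = queues[node].popleft()  # local work: stay local
            else:
                # Idle thread: pick the most loaded node (assumed policy).
                victim = max(range(num_nodes), key=lambda n: len(queues[n]))
                if not queues[victim]:
                    continue  # nothing left to steal anywhere
                obj = queues[victim].pop()  # steal and access a remote object
                stats["steals"] += 1
            if obj in marked:
                continue
            marked.add(obj)
            for ref in graph[obj]:
                if home[ref] != node:
                    stats["messages"] += 1  # hand the reference to its home node
                queues[home[ref]].append(ref)
    return marked, stats


# Hypothetical example: all objects live on node 0, so node 1 would idle
# under a strictly local policy; here it steals one object instead.
graph = {"a": ["b", "c"], "b": [], "c": []}
home = {"a": 0, "b": 0, "c": 0}
marked, stats = adaptive_mark(graph, home, ["a"], num_nodes=2)
print(sorted(marked), stats)
```

The design choice being illustrated is that stealing is only a fallback: a thread with local work never performs a remote access, so locality is given up only when the alternative is an idle core.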

Experiments

| Name | Description | Heap size on Amd48 | Heap size on Intel80 |
|---|---|---|---|
| Spark | In-memory data analytics (page rank computation) | 110 to 160GB | 250 to 350GB |
| Neo4j | Object graph database (Single Source Shortest Path) | 110 to 160GB | 250 to 350GB |
| SPECjbb2013 | Business-logic server | 24 to 40GB | 24 to 40GB |
| SPECjbb2005 | Business-logic server | 4 to 8GB | 8 to 12GB |

Dataset annotations: 1 billion edge Friendster dataset; the 1.8 billion edge Friendster dataset

Hardware settings
1. AMD Magny Cours with 8 nodes, 48 threads, 256 GB of RAM
2. Xeon E7-2860 with 4 nodes, 80 threads, 512 GB of RAM

GC Throughput (GB collected per second)

[Figure: GC throughput vs. heap size for Spark, Neo4j, SpecJBB13 and SpecJBB05 on Amd48 and Intel80, comparing NAPS, NumaGiC and Improved PS. Annotated speedups: 5.4X, 2.9X, 3.6X.]

NumaGiC multiplies GC performance up to 5.4X

GC Throughput Scalability

[Figure: GC throughput vs. # of nodes for NumaGiC, NAPS, Improved PS and Baseline PS, compared with ideal scalability]

Spark on Amd48 with a smaller dataset of 40GB

Application speedup

[Figure: speedup relative to Improved PS for Spark, Neo4j, SpecJBB13 and SpecJBB05, comparing NAPS and NumaGiC. Bar labels: 94%, 82%, 64%, 61%, 55%, 42%, 36%, 27%.]

[Figure: speedup relative to Improved PS for Spark, Neo4j, SpecJBB13 and SpecJBB05, comparing NAPS and NumaGiC. Bar labels: 37%, 33%, 35%, 37%, 37%, 21%, 26%, 12%.]

Conclusion

◼ Performance of data-intensive apps relies on GC performance
◼ Memory access locality has huge effect on GC performance
◼ Enforcing locality can be detrimental for parallelism in GCs
◼ Future work: NUMA-aware concurrent GCs

Thank You

Large multicores provide this power

But scalability is hard to achieve because the software stack was not designed for it

Application: Data analytic
Middleware: Hadoop, Spark, Neo4j, Cassandra…
Language runtime: JVM, CLI, Python, R…
Operating system: Linux, Windows…
Hypervisor: Xen, VMWare…
Hardware: Cores, I/O controllers, Memory banks

Do not consider hypervisors in this talk: Software stack is already complex and hard to analyze!
