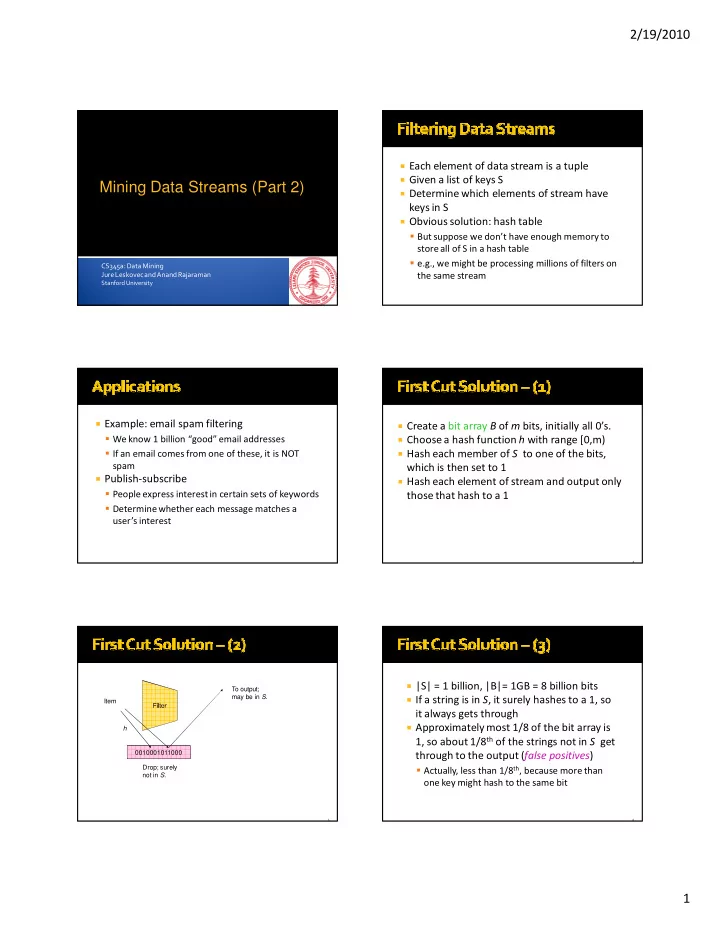

2/19/2010

Mining Data Streams (Part 2)
CS345a: Data Mining, Jure Leskovec and Anand Rajaraman, Stanford University

- Each element of the data stream is a tuple.
- Given a list of keys S, determine which elements of the stream have keys in S.
- Obvious solution: a hash table.
- But suppose we don't have enough memory to store all of S in a hash table.
  - E.g., we might be processing millions of filters on the same stream.

- Example: email spam filtering
  - We know 1 billion "good" email addresses.
  - If an email comes from one of these, it is NOT spam.
- Publish-subscribe
  - People express interest in certain sets of keywords.
  - Determine whether each message matches a user's interest.

- Create a bit array B of m bits, initially all 0's.
- Choose a hash function h with range [0, m).
- Hash each member of S to one of the bits, which is then set to 1.
- Hash each element of the stream and output only those that hash to a 1.

[Figure: an item is hashed by h into the bit array 0010001011000. If it hashes to a 1, it goes to the output (it may be in S). If it hashes to a 0, it is dropped (it is surely not in S).]

- |S| = 1 billion, |B| = 1 GB = 8 billion bits.
- If a string is in S, it surely hashes to a 1, so it always gets through.
- Approximately 1/8 of the bit array is 1, so about 1/8 of the strings not in S get through to the output (false positives).
  - Actually, less than 1/8, because more than one key might hash to the same bit.
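The single-hash filter described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the hash function `h` is built from SHA-256 (the slides do not name a hash function), one byte is used per "bit" for readability, and the sizes and addresses are made-up toy values that keep the slides' ratio of 8 bits per key.

```python
import hashlib

def h(key: str, m: int) -> int:
    """Hash a string key to a position in [0, m). SHA-256 is an assumption."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % m

def build_filter(S, m):
    """Set B[h(s)] = 1 for every key s in S."""
    B = bytearray(m)  # one byte per bit, for clarity rather than compactness
    for key in S:
        B[h(key, m)] = 1
    return B

def passes(B, key):
    """True if the key hashes to a 1 (it may be in S); False means surely not in S."""
    return B[h(key, len(B))] == 1

# Toy data (hypothetical): 1,000 "good" addresses, 8 bits per key as in the slides.
good = [f"user{i}@example.com" for i in range(1000)]
B = build_filter(good, 8 * len(good))

stream = good[:5] + [f"spam{i}@example.net" for i in range(2000)]
output = [x for x in stream if passes(B, x)]

good_set = set(good)
false_positives = [x for x in output if x not in good_set]
print(f"fraction of 1's in B: {sum(B) / len(B):.4f}")
print(f"false positive rate:  {len(false_positives) / 2000:.4f}")
```

Every key in `good` passes the filter, and the fraction of 1's can never exceed 1/8 here because at most 1,000 of the 8,000 positions are ever set.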

- If we throw m darts into n equally likely targets, what is the probability that a target gets at least one dart?
- Targets = bits, darts = hash values.

With m darts and n targets:

- Probability that a given target is not hit by one dart: $1 - 1/n$
- Probability that it is not hit by any of the m darts: $(1 - 1/n)^m = (1 - 1/n)^{n(m/n)}$
- Since $(1 - 1/n)^n$ equals $1/e$ as $n \to \infty$, this is equivalent to $e^{-m/n}$.
- Probability that at least one dart hits the target: $1 - (1 - 1/n)^{n(m/n)} \approx 1 - e^{-m/n}$

- Fraction of 1's in the array = probability of a false positive = $1 - e^{-m/n}$.
- Example: $10^9$ darts, $8 \times 10^9$ targets.
  - Fraction of 1's in B = $1 - e^{-1/8} = 0.1175$.
  - Compare with our earlier estimate: 1/8 = 0.125.

- Say |S| = m, |B| = n.
- Use k independent hash functions $h_1, \ldots, h_k$.
- Initialize B to all 0's.
- Hash each element s in S using each function, and set $B[h_i(s)] = 1$ for i = 1, ..., k.
- When a stream element with key x arrives:
  - If $B[h_i(x)] = 1$ for all i = 1, ..., k, then declare that x is in S.
  - Otherwise, discard the element.

- What fraction of the bit vector B is 1's?
  - We are throwing km darts at n targets.
  - So the fraction of 1's is $1 - e^{-km/n}$.
- With k independent hash functions, the false positive probability is $(1 - e^{-km/n})^k$.

- m = 1 billion, n = 8 billion
  - k = 1: $1 - e^{-1/8} = 0.1175$
  - k = 2: $(1 - e^{-1/4})^2 = 0.0489$
- What happens as we keep increasing k?
- "Optimal" value of k: $(n/m) \ln 2$
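As a sketch of the k-hash-function scheme and its false positive formula, the Python below builds a small Bloom filter and evaluates $(1 - e^{-km/n})^k$ for the slides' numbers. The class name `BloomFilter`, the helper names, and the way k hash functions are derived (SHA-256 over the key prefixed with the function index) are assumptions made for illustration, not something the slides specify.

```python
import hashlib
import math

class BloomFilter:
    """Bit array of n positions with k hash functions (illustrative sketch)."""

    def __init__(self, n_bits: int, k: int):
        self.n = n_bits
        self.k = k
        self.bits = bytearray(n_bits)  # one byte per bit, for clarity

    def _positions(self, key: str):
        # Assumption: simulate k independent hash functions by salting SHA-256.
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.n

    def add(self, key: str) -> None:
        for p in self._positions(key):
            self.bits[p] = 1

    def __contains__(self, key: str) -> bool:
        # Declare "in S" only if every one of the k positions is 1.
        return all(self.bits[p] for p in self._positions(key))

def false_positive_rate(m: float, n: float, k: float) -> float:
    """(1 - e^{-km/n})^k for |S| = m keys and |B| = n bits."""
    return (1 - math.exp(-k * m / n)) ** k

def optimal_k(m: float, n: float) -> float:
    """(n/m) ln 2, the value of k that minimizes the formula above."""
    return (n / m) * math.log(2)

# The slides' example: m = 1 billion keys, n = 8 billion bits.
for k in (1, 2, 6):
    print(k, round(false_positive_rate(1e9, 8e9, k), 4))
print("optimal k:", round(optimal_k(1e9, 8e9), 3))

# Small toy filter (hypothetical keys) with the same 8 bits per key.
members = [f"key{i}" for i in range(1000)]
bf = BloomFilter(n_bits=8000, k=2)
for s in members:
    bf.add(s)
```

Since k must be an integer in practice, one would compare the formula at the two integers on either side of $(n/m) \ln 2$ and pick the smaller result.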

- Bloom filters guarantee no false negatives and use limited memory.
  - Great for pre-processing before more expensive checks.
  - E.g., Google's BigTable, Squid web proxy.
- Suitable for hardware implementation.
  - Hash function computations can be parallelized.

- Problem: a data stream consists of elements chosen from a set of size n. Maintain a count of the number of distinct elements seen so far.
- Obvious approach: maintain the set of elements seen.

- How many different words are found among the Web pages being crawled at a site?
  - Unusually low or high numbers could indicate artificial pages (spam?).
- How many different Web pages does each customer request in a week?

- Real problem: what if we do not have space to store the complete set?
- Estimate the count in an unbiased way.
- Accept that the count may be in error, but limit the probability that the error is large.

- Pick a hash function h that maps each of the n elements to at least $\log_2 n$ bits.
- For each stream element a, let r(a) be the number of trailing 0's in h(a).
- Record R = the maximum r(a) seen.
- Estimate = $2^R$.
- Note: this approach is really based on a variant due to AMS (Alon, Matias, and Szegedy).

- The probability that a given h(a) ends in at least r 0's is $2^{-r}$.
- Probability of NOT seeing a tail of length r among m elements: $(1 - 2^{-r})^m$
  - $1 - 2^{-r}$ is the probability that a given h(a) ends in fewer than r 0's.
  - $(1 - 2^{-r})^m$ is the probability that all m values end in fewer than r 0's.
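The trailing-zeros estimate above can be sketched as follows. The hash function (the first 32 bits of SHA-256 with a seed prefix), the constant `WIDTH`, and the function names are assumptions for illustration. A single hash function gives a very coarse estimate, always a power of 2, so this is only the basic building block.

```python
import hashlib

WIDTH = 32  # number of hash bits used (assumed; should be at least log2 n)

def hash_value(a, seed: int = 0) -> int:
    """Map an element to a WIDTH-bit integer. SHA-256 is an assumption."""
    digest = hashlib.sha256(f"{seed}:{a}".encode("utf-8")).digest()
    return int.from_bytes(digest[: WIDTH // 8], "big")

def trailing_zeros(x: int) -> int:
    """r(a): number of trailing 0 bits in x (WIDTH if x is 0)."""
    if x == 0:
        return WIDTH
    # x & -x isolates the lowest set bit; its position is the tail length.
    return (x & -x).bit_length() - 1

def estimate_distinct(stream, seed: int = 0) -> int:
    """Return 2^R, where R is the maximum tail length seen in the stream."""
    R = 0
    for a in stream:
        R = max(R, trailing_zeros(hash_value(a, seed)))
    return 2 ** R

# Repeats do not change the estimate: it depends only on the set of distinct elements.
stream = [i % 500 for i in range(10_000)]  # 500 distinct values, each seen 20 times
print(estimate_distinct(stream))
```

Only R needs to be stored, which is why the memory cost is tiny compared with keeping the full set of elements seen.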

- Since $2^{-r}$ is small, the probability of NOT finding a tail of length r is: $(1 - 2^{-r})^m = \left((1 - 2^{-r})^{2^r}\right)^{m 2^{-r}} \approx e^{-m 2^{-r}}$
- If $m \ll 2^r$, this tends to 1. So the probability of finding a tail of length r tends to 0.
- If $m \gg 2^r$, this tends to 0. So the probability of finding a tail of length r tends to 1.
- Thus, $2^R$ will almost always be around m.

- $E(2^R)$ is actually infinite.
  - The probability halves when R goes to R + 1, but the value doubles.
- The workaround involves using many hash functions and getting many samples.
- How are the samples combined?
  - Average? What if there is one very large value?
  - Median? All values are a power of 2.

- Partition your samples into small groups.
- Take the average of each group.
- Then take the median of the averages.

- Suppose a stream has elements chosen from a set of n values.
- Let $m_i$ be the number of times value i occurs.
- The k-th moment is $\sum_i (m_i)^k$.

- 0th moment = number of distinct elements.
  - The problem just considered.
- 1st moment = count of the number of elements = length of the stream.
  - Easy to compute.
- 2nd moment = surprise number = a measure of how uneven the distribution is.

- Stream of length 100; 11 distinct values.
- Item counts: 10, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9. Surprise # = 910.
- Item counts: 90, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1. Surprise # = 8,110.
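To make the moment definitions and the "median of averages" rule concrete, here is a small Python sketch. It computes moments exactly from full counts, which is the memory-hungry baseline rather than a streaming estimate, and the function names and the sample values fed to `median_of_averages` are made up for illustration.

```python
from collections import Counter
from statistics import median

def moment(stream, k: int) -> int:
    """Exact k-th moment: sum over distinct values i of (m_i)^k."""
    return sum(m ** k for m in Counter(stream).values())

def median_of_averages(samples, group_size: int) -> float:
    """Split samples into groups, average each group, take the median of the averages."""
    groups = [samples[i:i + group_size] for i in range(0, len(samples), group_size)]
    return median(sum(g) / len(g) for g in groups)

# The two streams from the example: length 100, 11 distinct values.
even = [0] * 10 + [v for v in range(1, 11) for _ in range(9)]
skewed = [0] * 90 + list(range(1, 11))

print(moment(even, 0), moment(even, 1), moment(even, 2))  # 11 100 910
print(moment(skewed, 2))                                  # 8110

# Hypothetical power-of-2 estimates with one outlier (1024).
samples = [4, 8, 4, 1024, 8, 4, 8, 8, 4]
print(median_of_averages(samples, group_size=3))
```

Averaging within groups produces values that are no longer restricted to powers of 2, and taking the median across groups keeps the single outlier from dominating the result.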

- Works for all moments; gives an unbiased estimate.
- We'll just concentrate on the 2nd moment.
- Based on calculation of many random variables X.
  - Each requires a count in main memory, so the number is limited.

- Assume the stream has length n.
- Pick a random time to start, so that any time is equally likely.
- Let the chosen time have element a in the stream.
- Maintain a count c of the number of a's in the stream starting at the chosen time.
- X = n(2c - 1)
  - Store n once, and a count of a's for each X.

[Figure: the occurrences of a in the stream, numbered 1, 2, 3, ..., $m_a$.]

- X = n(2c - 1)
- Starting at the last occurrence of a gives c = 1, at the one before gives c = 2, and so on up to c = $m_a$ at the first occurrence. Hence:

$$E[X] = \frac{1}{n} \sum_{\text{all times } t} n(2c - 1) = \sum_{\text{all times } t} (2c - 1) = \sum_a \left(1 + 3 + 5 + \cdots + (2m_a - 1)\right) = \sum_a (m_a)^2$$

- Compute as many variables X as can fit in available memory.
- Average them in groups.
- Take the median of the averages.

- We assumed there was a number n, the number of positions in the stream.
- But real streams go on forever, so n is a variable: the number of inputs seen so far.

1. The variables X have n as a factor. Keep n separately; just hold the count in X.
2. Suppose we can only store k counts. We must throw some X's out as time goes on.
   - Objective: each starting time t is selected with probability k/n.
   - How can we do this?
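The estimator X = n(2c - 1) and the identity $E[X] = \sum_a (m_a)^2$ can be checked numerically with the sketch below. The slides leave "How can we do this?" open; the streaming function here uses reservoir sampling, which is one standard way to keep each starting time with probability k/n, and is included as an assumption rather than as the lecture's stated answer. All function names and the example stream are hypothetical.

```python
import random
from collections import Counter

def ams_variable(stream, t: int) -> int:
    """X = n(2c - 1) for start position t (0-based) in a stream of known length."""
    n = len(stream)
    a = stream[t]
    c = sum(1 for x in stream[t:] if x == a)  # occurrences of a from time t onward
    return n * (2 * c - 1)

def exact_second_moment(stream) -> int:
    return sum(m * m for m in Counter(stream).values())

def expected_value(stream) -> float:
    """Average of X over all start times, i.e. E[X] for a uniformly random start."""
    n = len(stream)
    return sum(ams_variable(stream, t) for t in range(n)) / n

def streaming_estimate(stream, k: int, rng: random.Random) -> float:
    """One pass, at most k counts. Assumes a non-empty stream and k >= 1.

    Reservoir sampling (an assumption, see the lead-in) keeps each start
    time with probability k/n, where n is the number of inputs seen so far.
    """
    slots = []  # each slot is [element, count since its start time]
    n = 0
    for x in stream:
        n += 1
        for slot in slots:
            if slot[0] == x:
                slot[1] += 1
        if len(slots) < k:
            slots.append([x, 1])
        elif rng.random() < k / n:
            slots[rng.randrange(k)] = [x, 1]  # evict a uniformly random variable
    # n is kept separately and applied once here; slots only hold counts.
    return sum(n * (2 * c - 1) for _, c in slots) / len(slots)

stream = list("abcbdacdabdcaab")  # a: 5, b: 4, c: 3, d: 3
print(exact_second_moment(stream))  # 59
print(expected_value(stream))       # 59.0
print(streaming_estimate(stream, k=3, rng=random.Random(0)))
```

When k is at least the stream length, every start time is kept, so the streaming estimate coincides with the exact second moment; with smaller k it is a noisy estimate that would normally be combined by averaging in groups and taking the median.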

- Stream $a_1, a_2, \ldots$
- Define the exponentially decaying window at time t to be: $\sum_{i=1}^{t} a_i (1-c)^{t-i}$
- c is a constant, presumably tiny, like $10^{-6}$ or $10^{-9}$.

[Figure: decaying weights on the stream, with a width of 1/c marked.]

- Key use case is when the stream's statistics can vary over time.
- Finding the most popular elements "currently":
  - Stream of Amazon items sold
  - Stream of topics mentioned in tweets
  - Stream of music tracks streamed
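The decaying sum does not require storing the stream: from the definition, the value at time t equals (1 - c) times the value at time t - 1, plus $a_t$. The Python sketch below uses that recurrence and checks it against the direct formula. The class and function names are hypothetical, and c = 0.1 is chosen far larger than the slides' suggested values so the effect is visible on a short stream.

```python
class DecayingSum:
    """Maintains sum_{i=1..t} a_i (1-c)^(t-i) in O(1) memory (illustrative sketch)."""

    def __init__(self, c: float):
        self.c = c
        self.total = 0.0

    def update(self, a: float) -> float:
        # Age every earlier term by one step, then add the new element with weight 1.
        self.total = (1 - self.c) * self.total + a
        return self.total

def direct_sum(stream, c: float) -> float:
    """The definition evaluated directly, for comparison."""
    t = len(stream)
    return sum(a * (1 - c) ** (t - i) for i, a in enumerate(stream, start=1))

c = 0.1  # assumed for illustration only
stream = [3, 1, 4, 1, 5, 9, 2, 6]

window = DecayingSum(c)
for a in stream:
    window.update(a)
print(window.total, direct_sum(stream, c))

# For a stream of all 1's the total weight approaches 1/c.
ones = DecayingSum(c)
for _ in range(1000):
    ones.update(1)
print(ones.total)
```

To track the popularity of one item, the same update can be fed 1 when that item appears and 0 otherwise.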
