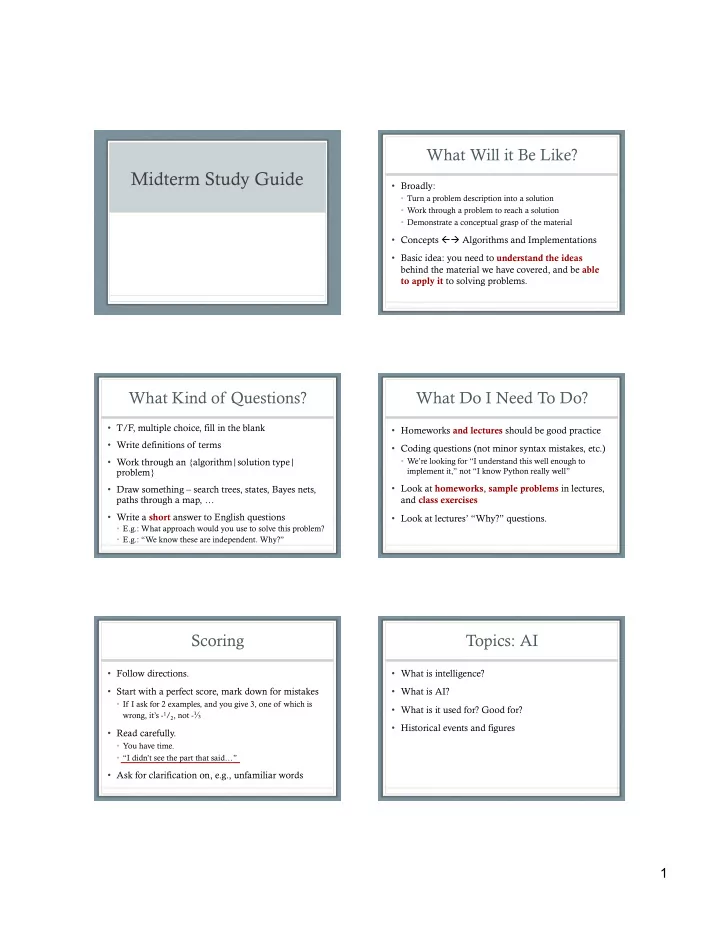

Midterm Study Guide

What Will it Be Like?
• Broadly:
  • Turn a problem description into a solution
  • Work through a problem to reach a solution
  • Demonstrate a conceptual grasp of the material
  • Concepts ↔ Algorithms and Implementations
• Basic idea: you need to understand the ideas behind the material we have covered, and be able to apply it to solving problems.

What Kind of Questions?
• T/F, multiple choice, fill in the blank
• Write definitions of terms
• Work through an {algorithm | solution type | problem}
• Draw something: search trees, states, Bayes nets, paths through a map, …
• Write a short answer to English questions
  • E.g.: What approach would you use to solve this problem?

What Do I Need To Do?
• Homeworks and lectures should be good practice
• Coding questions (not minor syntax mistakes, etc.)
  • We’re looking for “I understand this well enough to implement it,” not “I know Python really well”
• Look at homeworks, sample problems in lectures, and class exercises
• Look at lectures’ “Why?” questions.
  • E.g.: “We know these are independent. Why?”

Scoring
• Follow directions.
• Start with a perfect score, mark down for mistakes
  • If I ask for 2 examples, and you give 3, one of which is wrong, it’s −½, not −⅓
• Read carefully.
  • You have time.
  • “I didn’t see the part that said…”
  • Ask for clarification on, e.g., unfamiliar words

Topics: AI
• What is intelligence?
• What is AI?
• What is it used for? Good for?
• Historical events and figures

Topics: Agents
• Agents
  • What kinds are there?
  • What do they do?
  • How do we characterize them (what traits do they have)?
  • Autonomy, rationality, ..?
  • How do they interact with an environment?
• Environments
  • What’s an environment?
  • How is it characterized?

Topics: Search
• What is it for?
• Elements of a search problem
  • State spaces, actions, costs, ...
• How do state spaces pertain to search?
  • To problem-solving?
• Exploring search space (selecting, expanding, generating, evaluating)
• Specific algorithms: How do they work? What are they good for? What are their weaknesses?

Topics: Formalizing Search
• What are the elements of a search problem?
• “Express [X] as a search problem.” What does that mean?
  • States: every state a puzzle can be in
  • Actions/Operations: how you get between states
  • Solutions: you need a goal test (and sometimes a heuristic, or estimate of distance from goal)
• Sometimes we care about path (planning), sometimes just goal (identification). Can you say which, for a given problem?
• Costs: not all solutions or actions are equal

Topics: Uninformed Search
• Why do uninformed search?
• Come up with some examples of uninformed search problems
• Important algorithms: BFS, DFS, iterative deepening, uniform cost
• A (very) likely question: “What would be the best choice of search method for [problem], and why?”
• Characteristics of algorithms
  • Completeness, optimality, time and space complexity, …

Topics: Informed Search
• Some external or pre-existing information says what part of state space is more likely to have a solution
• Heuristics encode this information: h(n)
  • Pop quiz: What does h(n) = 0 mean?
  • A heuristic applies to a node, can give optimal solution with the right algorithm
• Admissibility & Optimality
  • Some algorithms can be optimal when using an admissible heuristic
• Algorithms: best-first, greedy search, A*, IDA*, SMA*
• What’s a good heuristic for a problem? Why?

Sample Problem
[Figure: search graph over nodes S, A, B, C, D, E, G, with each arc labeled by its cost and each node by its h value]
Apply the following to search this space. At each search step, show: current node being expanded, g(n) (path cost so far), h(n) (heuristic estimate), f(n) (evaluation function), and h*(n) (true goal distance).
• Depth-first search
• Breadth-first search
• A* search
• Uniform-cost search
• Greedy search
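To practice the kind of trace the sample problem asks for, here is a minimal A* sketch in Python. The graph and heuristic values below are made up for illustration and are not the ones in the sample-problem figure; the names `GRAPH`, `H`, and `a_star` are likewise hypothetical. The function records the node being expanded together with g(n), h(n), and f(n) = g(n) + h(n) at every step.

```python
import heapq

# Hypothetical graph (NOT the one in the slide figure): node -> {neighbor: arc cost}
GRAPH = {
    "S": {"A": 1, "B": 4},
    "A": {"B": 2, "G": 6},
    "B": {"G": 3},
    "G": {},
}

# Hypothetical heuristic values; admissible here because none of them
# overestimates the true remaining cost to G.
H = {"S": 5, "A": 4, "B": 3, "G": 0}


def a_star(graph, h, start, goal):
    """Return (path, cost, trace); trace lists (node, g, h, f) per expansion."""
    # Frontier entries are (f, g, node, path), ordered by f = g + h.
    frontier = [(h[start], 0, start, [start])]
    best_g = {start: 0}
    trace = []
    while frontier:
        f, g, node, path = heapq.heappop(frontier)
        if g > best_g.get(node, float("inf")):
            continue  # stale entry: a cheaper route to this node was found later
        trace.append((node, g, h[node], f))
        if node == goal:
            return path, g, trace
        for neighbor, cost in graph[node].items():
            new_g = g + cost
            if new_g < best_g.get(neighbor, float("inf")):
                best_g[neighbor] = new_g
                heapq.heappush(
                    frontier,
                    (new_g + h[neighbor], new_g, neighbor, path + [neighbor]),
                )
    return None, float("inf"), trace


path, cost, trace = a_star(GRAPH, H, "S", "G")
for node, g, h_val, f in trace:
    print(f"expand {node}: g={g} h={h_val} f={f}")
```

Setting every heuristic value to 0 makes f(n) = g(n), so the same function then behaves like uniform-cost search, which is one way to think about the "What does h(n) = 0 mean?" pop quiz.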

Topics: Local Search
• Idea: Keep a single “current” state, try to improve it
  • Don’t keep path to goal
  • Don’t keep entire search in memory
  • Go to “successor states”
• Concepts: hill climbing, local maxima/minima, random restarts
• Important algorithms: hill climbing, local beam search, simulated annealing

HW2
• How many states are there?
• What operations fully encode this search problem?
  • That is: how can you reach every state?
• Are there loops?
• How many states does pure DFS visit?
  • If there are loops?
• What’s a good algorithm? A bad one?

Topics: CSPs
• Constraint Satisfaction: subset of search problems
  1. State is defined by random variables X_i
  2. With values from a domain D
  3. Knowledge about problem can be expressed as constraints on what values X_i can take
• Special algorithms, esp. on constraint networks
• How would you express [X] as a CSP? As a search? How would you represent the constraints?
  • E.g.: “Must be alphabetical”

Topics: Constraint Networks
• Constraint propagation: constraints can be propagated through a constraint network
  • Goal: maintain consistency (constraints aren’t violated)
• Concepts: Variable ordering, value ordering, fail-first
• Important algorithms:
  1. Backtracking: DFS, but at each point:
     • Only consider a single variable
     • Only allow legal assignments
  2. Forward checking

Topics: Games
• Why play games? What games, and how?
• Characteristics: zero-sum, deterministic, perfect information (or not)
• What’s the search tree for a game? How big is it?
• How would you express game [X] as a search? What are the states, actions, etc.? How would you solve it?
• Algorithms: (expecti)minimax, alpha-beta pruning
• Many examples on slides

Topics: Basic Probability
• What is uncertainty?
• What are sources of uncertainty in a problem?
  • Non-deterministic, partially observable, noisy observations, noisy reasoning, uncertain cause/effect world model, continuous problem spaces…
• World of all possible states: a complete assignment of values to random variables
• Joint probability, conditional probability
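The backtracking description in the constraint-network topics (DFS that considers a single variable at each point and only allows legal assignments) can be sketched in a few lines of Python. This is an illustrative sketch, not the course's reference implementation: the map-coloring instance, the region names, and the function name `backtrack` are all assumptions, and the only constraint type handled is "neighbors must differ."

```python
def backtrack(assignment, variables, domains, neighbors):
    """Depth-first search over partial assignments; returns a dict or None."""
    if len(assignment) == len(variables):
        return assignment  # every variable has a legal value
    # Only consider a single variable at this point: the first unassigned one.
    var = next(v for v in variables if v not in assignment)
    for value in domains[var]:
        # Only allow legal assignments: no assigned neighbor may share the value.
        if all(assignment.get(n) != value for n in neighbors[var]):
            assignment[var] = value
            result = backtrack(assignment, variables, domains, neighbors)
            if result is not None:
                return result
            del assignment[var]  # undo and try the next value
    return None  # no value works, so the caller must backtrack


# Hypothetical map-coloring instance (regions and adjacency are made up).
variables = ["WA", "NT", "SA", "Q"]
neighbors = {
    "WA": ["NT", "SA"],
    "NT": ["WA", "SA", "Q"],
    "SA": ["WA", "NT", "Q"],
    "Q": ["NT", "SA"],
}
domains = {v: ["red", "green", "blue"] for v in variables}

solution = backtrack({}, variables, domains, neighbors)
print(solution)
```

The variable choice here is simply "first unassigned." Swapping that line for a fail-first choice (the variable with the fewest remaining legal values) is one place where the variable-ordering concepts listed above would plug in.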

Topics: Basic Probability
• $P(a \mid b) = \dfrac{P(a \wedge b)}{P(b)}$
• $P(a \wedge b) = P(a \mid b)\, P(b)$

P(smart ∧ study ∧ prep):

| | smart ∧ study | smart ∧ ¬study | ¬smart ∧ study | ¬smart ∧ ¬study |
|---|---|---|---|---|
| prepared | .432 | .16 | .084 | .008 |
| ¬prepared | .048 | .16 | .036 | .072 |

• What is the prior probability of smart?
• What is the conditional probability of prepared, given study and smart?
• Is prepared independent of study?

Topics: Basic Probability
• Independence: A and B are independent
  • P(A) ⫫ P(B) iff $P(A \wedge B) = P(A)\, P(B)$
  • A and B do not affect each other’s probability
• Conditional independence: A and B are independent given C
  • $P(A \wedge B \mid C) = P(A \mid C)\, P(B \mid C)$
  • A and B don’t affect each other if C is known

Topics: Probabilistic Reasoning
• Concepts:
  • Posteriors and Priors; Bayesian Reasoning; Induction and Deduction; Probabilities of Events
  • [In]dependence, conditionality, marginalization
• What is Bayes’ Rule and what is it useful for?

$P(H_i \mid E_j) = \dfrac{P(E_j \mid H_i)\, P(H_i)}{P(E_j)}$

$P(\text{cause} \mid \text{effect}) = \dfrac{P(\text{effect} \mid \text{cause})\, P(\text{cause})}{P(\text{effect})}$

Topics: Joint Probability
What is the joint probability of A and B?
• P(A,B)
• The probability of any set of legal assignments.
• Booleans: expressed as a matrix/table

| A | B | P(A,B) |
|---|---|---|
| T | T | 0.09 |
| T | F | 0.1 |
| F | T | 0.01 |
| F | F | 0.8 |

≍

| | alarm | ¬alarm |
|---|---|---|
| burglary | 0.09 | 0.01 |
| ¬burglary | 0.1 | 0.8 |

• Continuous domains → probability functions

Conditional Probability Tables
• For X_i, CPD $P(X_i \mid \mathrm{Parents}(X_i))$ quantifies effect of parents on X_i
• Parameters are probabilities in conditional probability tables (CPTs):

| A | P(A) |
|---|---|
| false | 0.6 |
| true | 0.4 |

| A | B | P(B\|A) |
|---|---|---|
| false | false | 0.01 |
| false | true | 0.99 |
| true | false | 0.7 |
| true | true | 0.3 |

| B | C | P(C\|B) |
|---|---|---|
| false | false | 0.4 |
| false | true | 0.6 |
| true | false | 0.9 |
| true | true | 0.1 |

| B | D | P(D\|B) |
|---|---|---|
| false | false | 0.02 |
| false | true | 0.98 |
| true | false | 0.05 |
| true | true | 0.95 |

[Figure: DAG over nodes A, B, C, D; per the CPTs, A is the parent of B, and B is the parent of C and D]

Example from web.engr.oregonstate.edu/~wong/slides/BayesianNetworksTutorial.ppt

BBN Definition
• AKA Bayesian Network, Bayes Net
• A graphical model (as a DAG) of probabilistic relationships among a set of random variables
• Links represent direct influence of one variable on another

Slides from Dr. Oates, UMBC
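The three questions about the smart/study/prepared joint table (prior of smart, conditional probability of prepared given study and smart, and whether prepared is independent of study) can be checked mechanically by summing table entries. The sketch below assumes the table's columns read smart ∧ study, smart ∧ ¬study, ¬smart ∧ study, ¬smart ∧ ¬study from left to right; the helper names `prob` and `cond` are hypothetical.

```python
# Joint distribution P(smart, study, prepared), keyed by (smart, study, prepared).
# Values copied from the slide's table under the column reading assumed above.
JOINT = {
    (True, True, True): 0.432,
    (True, False, True): 0.16,
    (False, True, True): 0.084,
    (False, False, True): 0.008,
    (True, True, False): 0.048,
    (True, False, False): 0.16,
    (False, True, False): 0.036,
    (False, False, False): 0.072,
}
VARS = ("smart", "study", "prepared")


def prob(**fixed):
    """Marginal probability of the fixed assignments (sum out everything else)."""
    return sum(
        p
        for world, p in JOINT.items()
        if all(world[VARS.index(name)] == value for name, value in fixed.items())
    )


def cond(query, given):
    """Conditional probability P(query | given) = P(query, given) / P(given)."""
    return prob(**query, **given) / prob(**given)


p_smart = prob(smart=True)
p_prep_given = cond({"prepared": True}, {"study": True, "smart": True})
p_joint = prob(prepared=True, study=True)
p_product = prob(prepared=True) * prob(study=True)
independent = abs(p_joint - p_product) < 1e-9

print(p_smart, p_prep_given, p_joint, p_product, independent)
```

Under that reading of the table, the prior of smart comes out to about 0.8 and P(prepared | study, smart) to about 0.9. P(prepared ∧ study) is about 0.516 while P(prepared) P(study) is about 0.4104, so prepared and study are not independent.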
