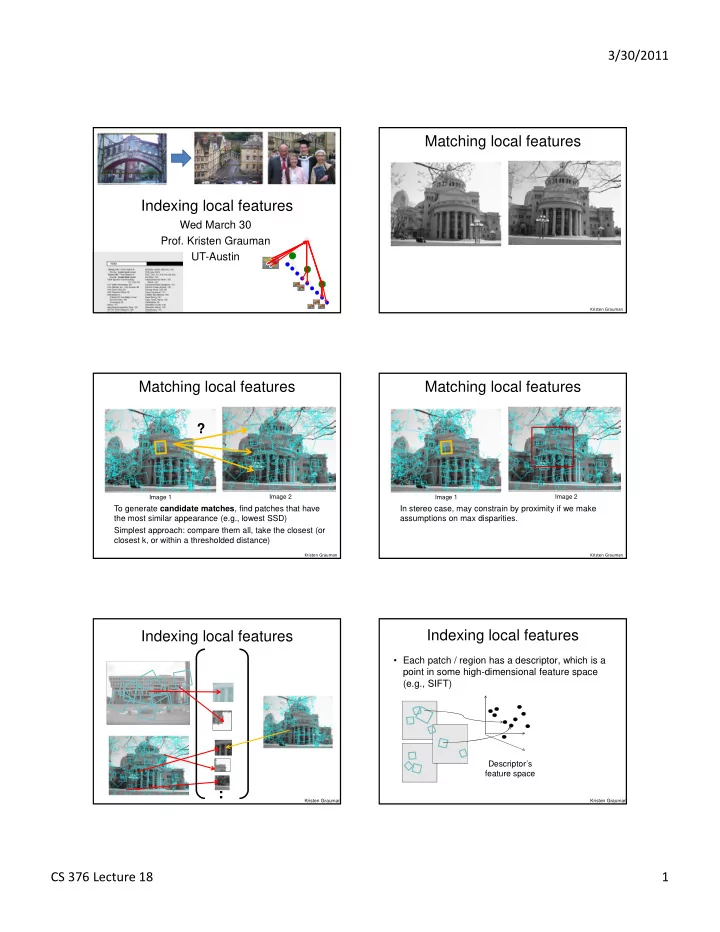

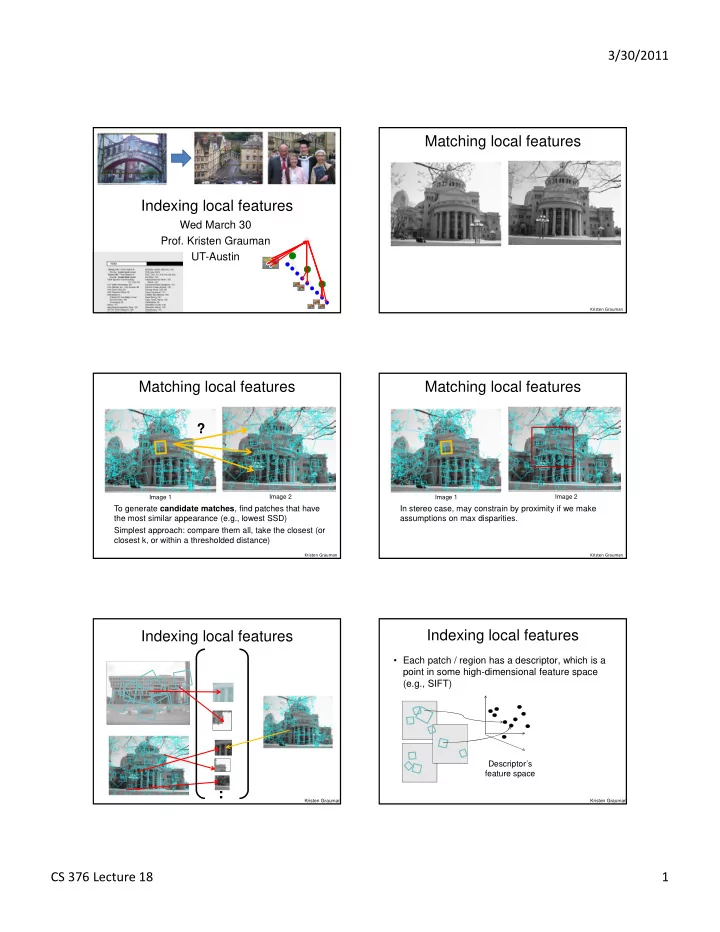

Indexing local features
Wed March 30, 2011
Prof. Kristen Grauman, UT-Austin
CS 376 Lecture 18

Matching local features
[Figure: candidate correspondences between patches in Image 1 and Image 2]
• To generate candidate matches, find patches that have the most similar appearance (e.g., lowest SSD).
• Simplest approach: compare them all, take the closest (or closest k, or within a thresholded distance).
• In stereo case, may constrain by proximity if we make assumptions on max disparities.

Indexing local features
• Each patch / region has a descriptor, which is a point in some high-dimensional feature space (e.g., SIFT).
[Figure: descriptor’s feature space]
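The "compare them all" strategy for matching can be sketched as follows. This is a minimal illustration, not the lecture's own code: the function names `ssd` and `match_brute_force`, the `max_ssd` threshold parameter, and the toy 2-D descriptors are all assumptions made for the example (real SIFT descriptors are 128-dimensional).

```python
from typing import List, Sequence, Tuple


def ssd(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of squared differences between two equal-length descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def match_brute_force(
    desc1: Sequence[Sequence[float]],
    desc2: Sequence[Sequence[float]],
    max_ssd: float = float("inf"),
) -> List[Tuple[int, int, float]]:
    """For each descriptor in image 1, find the lowest-SSD descriptor in image 2.

    Returns (index in image 1, index in image 2, SSD) triples, keeping only
    matches whose SSD is within the (hypothetical) max_ssd threshold.
    """
    matches = []
    for i, d1 in enumerate(desc1):
        best_j, best_cost = -1, float("inf")
        for j, d2 in enumerate(desc2):
            cost = ssd(d1, d2)
            if cost < best_cost:
                best_j, best_cost = j, cost
        if best_j >= 0 and best_cost <= max_ssd:
            matches.append((i, best_j, best_cost))
    return matches


# Toy 2-D descriptors, invented for illustration.
image1 = [[0.0, 1.0], [5.0, 5.0], [9.0, 0.0]]
image2 = [[5.0, 4.0], [0.0, 0.0], [20.0, 20.0]]
matches = match_brute_force(image1, image2, max_ssd=2.0)
print(matches)
```

The cost is one SSD evaluation per pair of descriptors, which is what motivates indexing once the database grows to many images.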

Indexing local features
• When we see close points in feature space, we have similar descriptors, which indicates similar local content.
• With potentially thousands of features per image, and hundreds to millions of images to search, how to efficiently find those that are relevant to a new image?
[Figure: query image and database images mapped into the descriptor’s feature space]

Indexing local features: inverted file index
• For text documents, an efficient way to find all pages on which a word occurs is to use an index…
• We want to find all images in which a feature occurs.
• To use this idea, we’ll need to map our features to “visual words”.

Text retrieval vs. image search
• What makes the problems similar, different?

Visual words: main idea
• Extract some local features from a number of images …
• e.g., SIFT descriptor space: each point is 128-dimensional
Slide credit: D. Nister, CVPR 2006

Visual words: main idea
• Each point is a local descriptor, e.g. SIFT vector.

Visual words
• Map high-dimensional descriptors to tokens/words by quantizing the feature space.
• Quantize via clustering, let cluster centers be the prototype “words”.
• Determine which word to assign to each new image region by finding the closest cluster center.
[Figure: descriptor’s feature space partitioned into words, e.g. Word #2]

Visual words
• Example: each group of patches belongs to the same visual word.
Figure from Sivic & Zisserman, ICCV 2003
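The quantization step can be sketched in a few lines. The slides say only "quantize via clustering"; the sketch below assumes plain k-means (Lloyd's algorithm) with caller-supplied initial centers, and the names `kmeans` and `nearest_center` and the toy 2-D descriptors are hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_center(x: Vector, centers: Sequence[Vector]) -> int:
    """Visual word id of x = index of the closest cluster center."""
    return min(range(len(centers)), key=lambda k: sq_dist(x, centers[k]))


def kmeans(
    points: Sequence[Vector],
    init_centers: Sequence[Vector],
    iterations: int = 10,
) -> List[List[float]]:
    """Plain k-means: alternate assignment and mean-update steps."""
    centers = [list(c) for c in init_centers]
    for _ in range(iterations):
        groups: List[List[Vector]] = [[] for _ in centers]
        for p in points:
            groups[nearest_center(p, centers)].append(p)
        for k, group in enumerate(groups):
            if group:  # an empty cluster keeps its previous center
                centers[k] = [sum(col) / len(group) for col in zip(*group)]
    return centers


# Toy descriptors (assumed for illustration) forming two obvious groups.
descriptors = [[0.0, 0.0], [0.0, 2.0], [10.0, 10.0], [10.0, 12.0]]
vocabulary = kmeans(descriptors, init_centers=[descriptors[0], descriptors[2]])

# A new image region is assigned the word of its closest center.
word = nearest_center([9.0, 9.0], vocabulary)
print(vocabulary, word)
```

Here the cluster centers play the role of the prototype "words", and `nearest_center` is the lookup applied to every descriptor of a new image.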

Visual words and textons
• First explored for texture and material representations.
• Texton = cluster center of filter responses over collection of images.
• Describe textures and materials based on distribution of prototypical texture elements.
Leung & Malik 1999; Varma & Zisserman, 2002

Recall: Texture representation example
Statistics to summarize patterns in small windows:

| Window | mean d/dx value | mean d/dy value |
|---|---|---|
| Win. #1 | 4 | 10 |
| Win. #2 | 18 | 7 |
| … | … | … |
| Win. #9 | 20 | 20 |

[Figure: windows plotted by Dimension 1 (mean d/dx value) against Dimension 2 (mean d/dy value); labeled regions: windows with primarily horizontal edges, windows with primarily vertical edges, both, and windows with small gradient in both directions]

Visual vocabulary formation
Issues:
• Sampling strategy: where to extract features?
• Clustering / quantization algorithm
• Unsupervised vs. supervised
• What corpus provides features (universal vocabulary?)
• Vocabulary size, number of words

Inverted file index
• Database images are loaded into the index mapping words to image numbers.
• New query image is mapped to indices of database images that share a word.
• When will this give us a significant gain in efficiency?

• If a local image region is a visual word, how can we summarize an image (the document)?
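The two inverted file index steps (loading database images, then looking up a query) might look like the following sketch. It assumes each image has already been reduced to a list of integer visual word ids; the names `build_inverted_index` and `candidate_images` and the toy database are invented for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def build_inverted_index(database: Dict[int, Iterable[int]]) -> Dict[int, Set[int]]:
    """Map each visual word id to the set of image numbers containing it."""
    index: Dict[int, Set[int]] = defaultdict(set)
    for image_id, words in database.items():
        for word in words:
            index[word].add(image_id)
    return index


def candidate_images(index: Dict[int, Set[int]], query_words: Iterable[int]) -> List[int]:
    """Return database images that share at least one word with the query."""
    hits: Set[int] = set()
    for word in set(query_words):
        hits |= index.get(word, set())
    return sorted(hits)


# Hypothetical database: image number -> visual word ids found in that image.
database = {1: [7, 7, 42], 2: [3, 42], 3: [5, 9]}
index = build_inverted_index(database)

# Word 42 occurs in images 1 and 2; word 100 occurs nowhere.
candidates = candidate_images(index, [42, 100])
print(candidates)
```

A query only touches the lists of the words it contains, so the lookup is cheap when each word occurs in a small fraction of the database; if most words occurred in most images, nearly every image would come back as a candidate and little would be saved.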

Analogy to documents

Document 1: "Of all the sensory impressions proceeding to the brain, the visual experiences are the dominant ones. Our perception of the world around us is based essentially on the messages that reach the brain from our eyes. For a long time it was thought that the retinal image was transmitted point by point to visual centers in the brain; the cerebral cortex was a movie screen, so to speak, upon which the image in the eye was projected. Through the discoveries of Hubel and Wiesel we now know that behind the origin of the visual perception in the brain there is a considerably more complicated course of events. By following the visual impulses along their path to the various cell layers of the optical cortex, Hubel and Wiesel have been able to demonstrate that the message about the image falling on the retina undergoes a step-wise analysis in a system of nerve cells stored in columns. In this system each cell has its specific function and is responsible for a specific detail in the pattern of the retinal image."

Words for document 1: sensory, brain, visual, perception, retinal, cerebral cortex, eye, cell, optical nerve, image, Hubel, Wiesel

Document 2: "China is forecasting a trade surplus of $90bn (£51bn) to $100bn this year, a threefold increase on 2004's $32bn. The Commerce Ministry said the surplus would be created by a predicted 30% jump in exports to $750bn, compared with a 18% rise in imports to $660bn. The figures are likely to further annoy the US, which has long argued that China's exports are unfairly helped by a deliberately undervalued yuan. Beijing agrees the surplus is too high, but says the yuan is only one factor. Bank of China governor Zhou Xiaochuan said the country also needed to do more to boost domestic demand so more goods stayed within the country. China increased the value of the yuan against the dollar by 2.1% in July and permitted it to trade within a narrow band, but the US wants the yuan to be allowed to trade freely. However, Beijing has made it clear that it will take its time and tread carefully before allowing the yuan to rise further in value."

Words for document 2: China, trade, surplus, commerce, exports, imports, US, yuan, bank, domestic, foreign, increase, trade, value

ICCV 2005 short course, L. Fei-Fei

Bags of visual words
• Summarize entire image based on its distribution (histogram) of word occurrences.
• Analogous to bag of words representation commonly used for documents.

Comparing bags of words
• Rank frames by normalized scalar product between their (possibly weighted) occurrence counts: a nearest neighbor search for similar images.
• Example count vectors for $d_j$ and $q$: [1 8 1 4] and [5 1 1 0]

$$\mathrm{sim}(d_j, q) = \frac{\langle d_j, q \rangle}{\lVert d_j \rVert \, \lVert q \rVert} = \frac{\sum_{i=1}^{V} d_j(i)\, q(i)}{\sqrt{\sum_{i=1}^{V} d_j(i)^2}\; \sqrt{\sum_{i=1}^{V} q(i)^2}}$$

for vocabulary of $V$ words.

Bags of words for content-based image retrieval
Slide from Andrew Zisserman; Sivic & Zisserman, ICCV 2003

tf-idf weighting
• Term frequency – inverse document frequency
• Describe frame by frequency of each word within it, downweight words that appear often in the database
• (Standard weighting for text retrieval)
The weight of word i in document d combines four quantities:
• Number of occurrences of word i in document d
• Number of words in document d
• Total number of documents in database
• Number of documents word i occurs in, in whole database
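The comparison and weighting steps can be sketched together. The normalized scalar product follows the definition on the slide. For the weighting, only the four quantity labels survive in this handout, so the sketch assumes the standard tf-idf form $t_i = \frac{n_{id}}{n_d} \log \frac{N}{n_i}$; the symbols $n_{id}$, $n_d$, $N$, $n_i$ and the function names are labels chosen here, not taken from the slide.

```python
import math
from typing import List, Sequence


def cosine_similarity(d: Sequence[float], q: Sequence[float]) -> float:
    """Normalized scalar product between two word-count vectors."""
    dot = sum(a * b for a, b in zip(d, q))
    norm = math.sqrt(sum(a * a for a in d)) * math.sqrt(sum(b * b for b in q))
    return dot / norm if norm > 0 else 0.0


def tfidf(counts: Sequence[float], doc_freq: Sequence[int], num_docs: int) -> List[float]:
    """Assumed standard tf-idf: (n_id / n_d) * log(N / n_i) per word i.

    counts[i]   = n_id, occurrences of word i in this document
    sum(counts) = n_d,  number of words in this document
    num_docs    = N,    total number of documents in the database
    doc_freq[i] = n_i,  number of documents containing word i
    """
    n_d = sum(counts)
    weights = []
    for n_id, n_i in zip(counts, doc_freq):
        if n_d == 0 or n_i == 0:
            weights.append(0.0)  # word or document carries no information
        else:
            weights.append((n_id / n_d) * math.log(num_docs / n_i))
    return weights


# The two example count vectors shown on the slide.
d_j = [1, 8, 1, 4]
q = [5, 1, 1, 0]
score = cosine_similarity(d_j, q)
print(round(score, 4))

# Hypothetical document frequencies for a 4-document database.
weights = tfidf([2, 0, 2], doc_freq=[1, 4, 2], num_docs=4)
print(weights)
```

A word that appears in every document gets weight log(1) = 0, which is the downweighting of common words that the slide describes. In a retrieval system the same weighting would be applied to both the query and the database vectors before comparing them.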

Video Google System
1. Collect all words within query region
2. Inverted file index to find relevant frames
3. Compare word counts
4. Spatial verification
[Figure: query region and retrieved frames]
Sivic & Zisserman, ICCV 2003
• Demo online at: http://www.robots.ox.ac.uk/~vgg/research/vgoogle/index.html
Slide from Andrew Zisserman
K. Grauman, B. Leibe, Visual Object Recognition Tutorial

Scoring retrieval quality
• Database size: 10 images
• Relevant (total): 5 images
• precision = #relevant / #returned
• recall = #relevant / #total relevant
[Figure: query image, ordered results, and the resulting precision vs. recall curve, both axes from 0 to 1]
Slide credit: Ondrej Chum

Vocabulary Trees: hierarchical clustering for large vocabularies
• Tree construction
[Nister & Stewenius, CVPR’06]
Slide credit: David Nister

Vocabulary Tree
• Training: Filling the tree
[Nister & Stewenius, CVPR’06]
Slide credit: David Nister
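The precision and recall definitions above trace out a curve as more of the ranked result list is returned. The sketch below computes one (precision, recall) point per rank; the function name `precision_recall_curve` and the particular ranking are invented for illustration, with only the total of 5 relevant images taken from the slide.

```python
from typing import List, Sequence, Tuple


def precision_recall_curve(
    ranked_relevance: Sequence[bool], total_relevant: int
) -> List[Tuple[float, float]]:
    """Return (precision, recall) after each returned result.

    ranked_relevance[k] is True if the k-th ranked result is relevant.
    """
    if total_relevant <= 0:
        raise ValueError("total_relevant must be positive")
    points = []
    hits = 0
    for returned, is_relevant in enumerate(ranked_relevance, start=1):
        if is_relevant:
            hits += 1
        # precision = #relevant / #returned, recall = #relevant / #total relevant
        points.append((hits / returned, hits / total_relevant))
    return points


# Hypothetical ordered results for a query with 5 relevant database images.
ranking = [True, False, True, True, False]
curve = precision_recall_curve(ranking, total_relevant=5)
for precision, recall in curve:
    print(f"precision={precision:.2f} recall={recall:.2f}")
```

Recall never decreases as more results are returned, while precision drops each time an irrelevant image appears in the list.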
