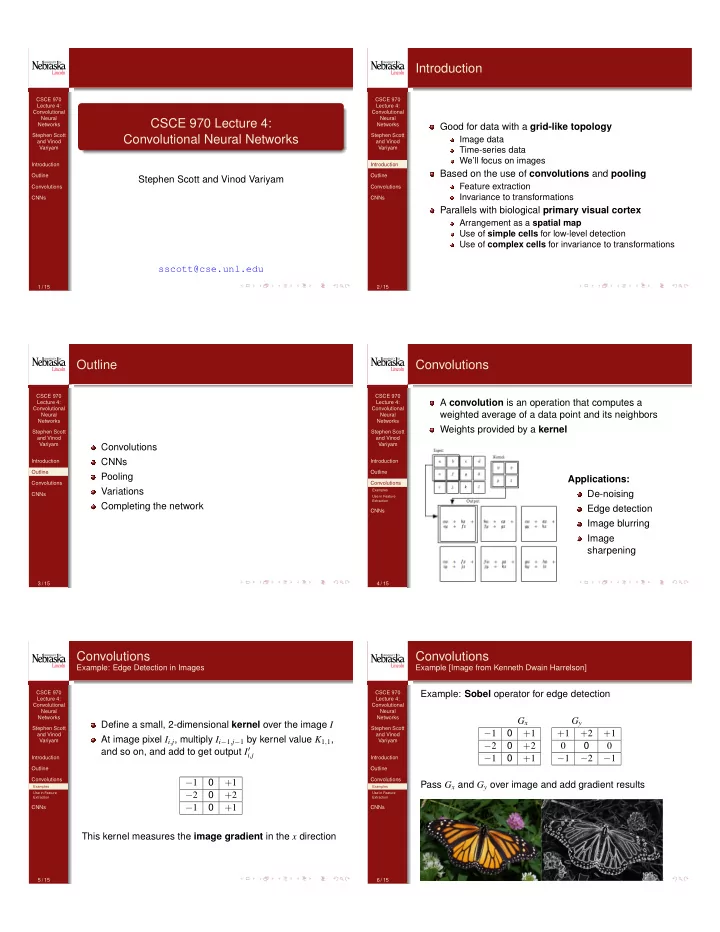

CSCE 970 Lecture 4: Convolutional Neural Networks

Stephen Scott and Vinod Variyam

sscott@cse.unl.edu

Introduction

- Good for data with a grid-like topology
  - Image data
  - Time-series data
- We’ll focus on images
- Based on the use of convolutions and pooling
  - Feature extraction
  - Invariance to transformations
- Parallels with biological primary visual cortex
  - Arrangement as a spatial map
  - Use of simple cells for low-level detection
  - Use of complex cells for invariance to transformations

Outline

- Convolutions
- CNNs
- Pooling
- Variations
- Completing the network

Convolutions

- A convolution is an operation that computes a weighted average of a data point and its neighbors
- Weights provided by a kernel
- Applications:
  - De-noising
  - Edge detection
  - Image blurring
  - Image sharpening

Convolutions: Example: Edge Detection in Images

- Define a small, 2-dimensional kernel over the image $I$
- At image pixel $I_{i,j}$, multiply $I_{i-1,j-1}$ by kernel value $K_{1,1}$, and so on, and add to get output $I'_{i,j}$

$$
\begin{bmatrix} -1 & 0 & +1 \\ -2 & 0 & +2 \\ -1 & 0 & +1 \end{bmatrix}
$$

- This kernel measures the image gradient in the $x$ direction

Convolutions: Example

[Image from Kenneth Dwain Harrelson]

- Example: Sobel operator for edge detection

$$
G_x = \begin{bmatrix} -1 & 0 & +1 \\ -2 & 0 & +2 \\ -1 & 0 & +1 \end{bmatrix}
\qquad
G_y = \begin{bmatrix} +1 & +2 & +1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix}
$$

- Pass $G_x$ and $G_y$ over image and add gradient results
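The kernel-passing rule from the edge-detection slides can be sketched in a few lines of plain Python. This is a minimal illustration, not the lecture's own code: the function name `apply_kernel`, the 5x5 test image, and the choice to combine the two gradients as `|gx| + |gy|` are all assumptions made for the example (the slides only say to "add gradient results"). The kernel is not flipped, so strictly speaking this computes a cross-correlation, which matches the multiply-and-add rule described on the slide.

```python
def apply_kernel(image, kernel):
    """Slide a kernel over a 2-D image and return the multiply-and-add result.

    Only positions where the kernel fits entirely inside the image are
    computed (no padding), so a 3x3 kernel shrinks each side by 2.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    rows, cols = len(image), len(image[0])
    output = []
    for i in range(rows - k_rows + 1):
        out_row = []
        for j in range(cols - k_cols + 1):
            total = 0
            for a in range(k_rows):
                for b in range(k_cols):
                    total += kernel[a][b] * image[i + a][j + b]
            out_row.append(total)
        output.append(out_row)
    return output


# Sobel kernels as given on the slide.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[1, 2, 1], [0, 0, 0], [-1, -2, -1]]

# Hypothetical test image: dark on the left, bright on the right,
# i.e. a single vertical edge.
image = [[0, 0, 0, 1, 1] for _ in range(5)]

gx = apply_kernel(image, SOBEL_X)  # responds to the vertical edge
gy = apply_kernel(image, SOBEL_Y)  # no horizontal edge, so all zeros

# One common way to combine the two responses (an assumption here).
edges = [[abs(x) + abs(y) for x, y in zip(row_x, row_y)]
         for row_x, row_y in zip(gx, gy)]

for row in edges:
    print(row)
```

On this image only `gx` is non-zero, and only in the output columns whose 3x3 window straddles the dark-to-bright boundary, which is the behaviour the "gradient in the x direction" bullet describes.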

Convolutions: Example: Image Blurring

- A box blur kernel computes a uniform average of neighbors

$$
\begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix}
$$

- Apply the same approach and divide by 9

Convolutions: Use in Feature Extraction

- Use of pre-defined kernels has been common in feature extraction for image analysis
- But how do we know if our pre-defined kernels are best for the specific learning task?
- Convolutional nodes in a CNN will allow the network to learn which features are best to extract
- We can also have the network learn which invariances are useful

Basic Convolutional Layer

- Imagine the kernel represented as weights into a hidden layer
- Output of a linear unit is exactly the kernel output
- If the unit instead uses, e.g., ReLU, we get a nonlinear transformation of the kernel output
- Note that, unlike other network architectures, we do not have complete connectivity ⇒ many fewer parameters to tune

Basic Convolutional Layer: Parameter Sharing

- Sparse connectivity from input to hidden layer greatly reduces the number of parameters
- Can further reduce model complexity via parameter sharing (aka weight sharing)
- E.g., weight $w_{1,1}$ that multiplies the upper-left value of the window is the same for all applications of the kernel

Basic Convolutional Layer: Multiple Sets of Kernels

- Weight sharing forces the convolution layer to learn a specific feature extractor
- To learn multiple extractors simultaneously, can have multiple convolution layers
  - Each is independent of the other
  - Each uses its own weight sharing

Pooling

- Often more interested in presence/absence of a feature rather than its exact location
- To help achieve translation invariance, can feed output of neighboring convolution nodes into a pooling node
- Pooling function can be average of inputs, max, etc.
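To make the savings from sparse connectivity and parameter sharing concrete, the following sketch counts weights for one hidden layer under three wiring schemes. The numbers are illustrative assumptions, not figures from the lecture: a hypothetical 28x28 input, a 3x3 kernel, no padding, and biases ignored. The helper name `count_weights` is likewise made up for this example.

```python
def count_weights(input_side, kernel_side, n_kernels=1):
    """Count weights between a square input and one hidden layer.

    Assumes no padding and a step of one pixel, so the hidden layer has
    (input_side - kernel_side + 1) units per side. Biases are ignored.
    """
    hidden_side = input_side - kernel_side + 1
    n_inputs = input_side ** 2
    n_hidden = hidden_side ** 2
    window = kernel_side ** 2
    return {
        # Every hidden unit sees every input pixel.
        "fully_connected": n_inputs * n_hidden,
        # Every hidden unit sees only its own window, with its own weights.
        "sparse": n_hidden * window,
        # All hidden units reuse the same kernel weights.
        "shared": n_kernels * window,
    }


counts = count_weights(input_side=28, kernel_side=3)
for scheme, n in counts.items():
    print(f"{scheme}: {n}")
```

Under these assumptions the counts drop from 529,984 (fully connected) to 6,084 (sparse) to 9 (shared). Learning several feature extractors at once multiplies only the last figure, e.g. `count_weights(28, 3, n_kernels=8)["shared"]` gives 72.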

Pooling: Other Transformations

- Pooling on its own won’t be invariant to, e.g., rotations
- Can leverage multiple, parallel convolutions feeding into a single (max) pooling unit

Pooling: Downsampling

- To further reduce complexity, can space pooled regions at $k > 1$ pixels apart
- Parameters: window width (3) and stride (2)
- Dynamically adjusting stride can allow for variable-sized inputs

Completing the Network

- Can use multiple applications of convolution and pooling layers
- Final result of these steps feeds into fully connected subnetworks with, e.g., ReLU and softmax units
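The pooling and downsampling ideas above can be sketched in one dimension. This is a minimal example under stated assumptions: the function names `max_pool_1d` and `pooled_length` and the input vectors are hypothetical, and only the window width of 3 and stride of 2 come from the slide. With those settings, an input of length $n$ yields $\lfloor (n - 3) / 2 \rfloor + 1$ pooled values.

```python
def max_pool_1d(values, width=3, stride=2):
    """Max-pool a 1-D list using windows of `width` spaced `stride` apart."""
    return [max(values[start:start + width])
            for start in range(0, len(values) - width + 1, stride)]


def pooled_length(n, width=3, stride=2):
    """Number of pooled outputs for an input of length n (no padding)."""
    return (n - width) // stride + 1


# Hypothetical convolution outputs: one strong feature response (5),
# detected at position 1 in `a` and one position earlier in `b`.
a = [0, 5, 0, 0, 0, 0, 0]
b = [5, 0, 0, 0, 0, 0, 0]

pooled_a = max_pool_1d(a)
pooled_b = max_pool_1d(b)

print(pooled_a, pooled_b)
print(pooled_length(len(a)))
```

Here both positions fall inside the first pooling window only, so the two pooled outputs are identical and the seven inputs are reduced to three values. The invariance is only approximate: a shift that moves the response across a window boundary does change the pooled output.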
