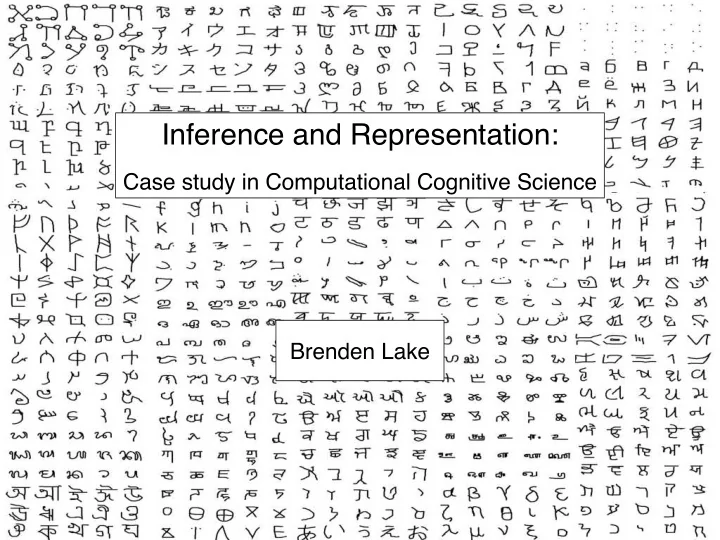

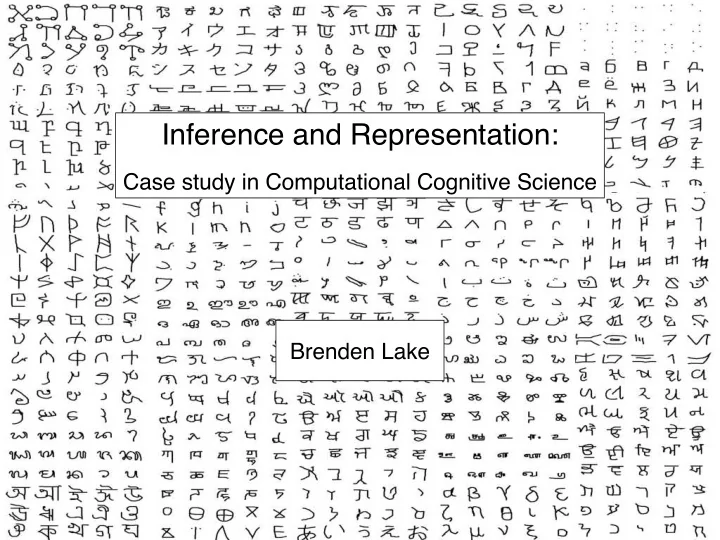

Inference and Representation: Case Study in Computational Cognitive Science. Brenden Lake

“Learning classifiers” in cognitive science: concept learning (cognitive science & psychology) = classification (data science & machine learning). Labeled data for “dogs” and labeled data for “cats”; generalization task: dog or cat?

Human-level concept learning: the speed of learning (“one-shot learning”); the richness of representation (parsing, generating new concepts, generating new examples).

Portable immersion circulator, bucket-wheel excavator, spring-loaded camming device, drawing knife.

A testbed for studying human-level concept learning. We would like to investigate a domain with: 1) a relatively even slate for comparing humans and machines; 2) natural, high-dimensional concepts; 3) a reasonable chance of building computational models that can see most of the structure that people see; 4) insights that generalize across domains.

Standard machine learning: MNIST (10 concepts, 6000 examples each). Our testbed: Omniglot dataset (1600+ concepts, 20 examples each), https://github.com/brendenlake

Sanskrit, Tagalog, Balinese, Hebrew, Latin, Braille

Angelic, Alphabet of the Magi, Futurama, ULOG

Original image and 20 people’s strokes, numbered by stroke order (three example characters).

Human-level concept learning: the speed of learning (“one-shot learning”); the richness of representation (parsing, generating new concepts, generating new examples).

Human-level concept learning: the speed of learning; the richness of representation (parsing, generating new concepts, generating new examples).

Bayesian Program Learning. Type level: i) primitives; ii) sub-parts; iii) parts; iv) object template, with relations between parts (attached at start, attached along). Token level: v) exemplars; vi) raw data.

Human-level concept learning: the speed of learning; the richness of representation (parsing, generating new concepts, generating new examples).

Generating new examples

“Draw a new example.” Which grid is produced by the model, A or B?

Human-level concept learning: the speed of learning; the richness of representation (parsing, generating new concepts, generating new examples).

Generating new concepts. Task: “Design a new character from the same alphabet” (3 seconds remaining).

Task: “Design a new character from the same alphabet.” Which grid is produced by the model, A or B?

Generating new characters from the same alphabet. Panels: alphabet of characters; new machine-generated characters in each alphabet.

Bayesian Program Learning: concept learning as program induction. Latent variables θ: primitives (1D curvelets, 2D patches, 3D geons, actions, sounds, etc.), sub-parts, parts, relations, object template. Observed data: raw binary image I (exemplars, raw data). Prior P(θ) on parts, relations, etc.; renderer P(I | θ). Inference by Bayes’ rule:

$$P(\theta \mid I) = \frac{P(I \mid \theta)\, P(\theta)}{P(I)}$$

Key ingredients for learning good programs: 1) learning-to-learn; 2) compositionality; 3) causality.

Bayesian Program Learning: generative procedure over primitives (1D curvelets, 2D patches, 3D geons, actions, sounds, etc.), sub-parts, parts, relations, and an object template, down to exemplars and raw data.

    procedure GENERATETYPE
        κ ← P(κ)                                  # Sample number of parts
        for i = 1 ... κ do
            n_i ← P(n_i | κ)                      # Sample number of sub-parts
            S_i ← P(S_i | n_i)                    # Sample sequence of sub-parts
            R_i ← P(R_i | S_1, ..., S_{i−1})      # Sample relation
        end for
        ψ ← {κ, R, S}
        return @GENERATETOKEN(ψ)                  # Return handle to a stochastic program
    end procedure

    procedure GENERATETOKEN(ψ)
        for i = 1 ... κ do
            S_i^(m) ← P(S_i^(m) | S_i)                              # Add motor variance
            L_i^(m) ← P(L_i^(m) | R_i, T_1^(m), ..., T_{i−1}^(m))   # Sample part’s start location
            T_i^(m) ← f(L_i^(m), S_i^(m))                           # Compose a part’s pen trajectory
        end for
        A^(m) ← P(A^(m))                          # Sample affine transform
        I^(m) ← P(I^(m) | T^(m), A^(m))           # Render and sample the binary image
        return I^(m)
    end procedure
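The type/token structure of the two procedures above can be made concrete with a toy Python sketch. This is not the authors' implementation: the distributions (uniform counts, a four-item primitive vocabulary, Gaussian motor noise) and every name (`generate_type`, `generate_token`, `PRIMITIVES`, `RELATIONS`) are assumptions for illustration, and the affine transform and rendering steps are omitted.

```python
import random

# Hypothetical toy vocabulary standing in for a learned library of primitives.
PRIMITIVES = ["line", "arc", "hook", "loop"]
RELATIONS = ["independent", "start", "end", "along"]

def generate_type(rng):
    """Sample a character type: number of parts, sub-part sequences, relations."""
    kappa = rng.randint(1, 3)                      # number of parts (toy uniform prior)
    parts, relations = [], []
    for i in range(kappa):
        n_i = rng.randint(1, 3)                    # number of sub-parts for this part
        s_i = [rng.choice(PRIMITIVES) for _ in range(n_i)]  # sequence of sub-parts
        # The first part has nothing to attach to, so it is always independent.
        r_i = "independent" if i == 0 else rng.choice(RELATIONS)
        parts.append(s_i)
        relations.append(r_i)
    return {"kappa": kappa, "parts": parts, "relations": relations}

def generate_token(char_type, rng, motor_noise=0.05):
    """Sample one exemplar (token) of a type by adding motor variance."""
    strokes = []
    for s_i, r_i in zip(char_type["parts"], char_type["relations"]):
        # Toy motor variance: jitter a unit scale factor for each sub-part.
        noisy = [(prim, 1.0 + rng.gauss(0.0, motor_noise)) for prim in s_i]
        if r_i == "independent" or not strokes:
            start = (rng.random(), rng.random())   # free start location
        else:
            start = strokes[-1]["start"]           # toy stand-in for attaching to an earlier part
        strokes.append({"start": start, "sub_parts": noisy, "relation": r_i})
    return strokes

rng = random.Random(0)
char_type = generate_type(rng)                     # one concept (type level)
tokens = [generate_token(char_type, rng) for _ in range(3)]  # three exemplars (token level)
```

Every token shares the type's parts and relations and differs only in the sampled noise and start locations, which is the distinction the type level and token level are meant to capture.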

Learning-to-learn programs: learned action primitives (1250 primitives; scale selective, translation invariant) and learned primitive transitions from a seed primitive.

Learning-to-learn programs (learned priors). Stroke start positions, by stroke number (1, 2, ≥ 3). Number of strokes (frequency histogram over 1 to 10 strokes). Number of sub-strokes for a character with κ strokes (probability histograms for κ = 1 to 10). Global transformations. Relations between strokes: attached along (50%), independent (34%), attached at end (11%), attached at start (5%).
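As a small illustration of how such an empirical prior can be used, the sketch below samples stroke relations with the frequencies listed on the slide. The helper name `sample_relation` is hypothetical; only the four percentages come from the slide.

```python
import random

# Relation frequencies as listed on the slide (they sum to 100%).
RELATION_PRIOR = {
    "attached along": 0.50,
    "independent": 0.34,
    "attached at end": 0.11,
    "attached at start": 0.05,
}

def sample_relation(rng):
    """Draw one relation type from the categorical prior above."""
    names = list(RELATION_PRIOR)
    weights = list(RELATION_PRIOR.values())
    return rng.choices(names, weights=weights, k=1)[0]

rng = random.Random(0)
draws = [sample_relation(rng) for _ in range(10_000)]
# Empirical frequencies should be close to the prior probabilities.
freq = {name: draws.count(name) / len(draws) for name in RELATION_PRIOR}
```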

Inferring latent motor programs. Latent variables θ: primitives, sub-strokes, strokes, relations, object template. Observed data: raw binary image I. Renderer P(I | θ); prior on programs P(θ). Bayes’ rule:

$$P(\theta \mid I) = \frac{P(I \mid \theta)\, P(\theta)}{P(I)}$$

Discrete (K = 5) approximation to the posterior:

$$P(\theta \mid I) \approx \frac{\sum_{i=1}^{K} w_i\, \delta(\theta - \theta^{[i]})}{\sum_{i=1}^{K} w_i} \quad \text{such that} \quad w_i \propto P(\theta^{[i]} \mid I)$$

Intuition: fit strokes to the observed pixels as closely as possible, with these constraints: fewer strokes; high-probability primitive sequence; use relations; stroke order; stroke directions.

Inference. Step 1: characters as undirected graphs (panels: binary image, thinned image, cleaned, planning). Step 2: guided random parses, ranked from more likely to less likely. Step 3: top-down fitting with gradient-based optimization; log-probabilities of the fitted parses: −531, −560, −568, −582, −588.
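The discrete approximation to the posterior can be illustrated with the five log-probabilities listed above. This is a minimal sketch, assuming each score is an unnormalized log posterior for one candidate parse θ[i]; the function name `posterior_weights` is hypothetical.

```python
import math

def posterior_weights(log_scores):
    """Normalize unnormalized log-probabilities into weights that sum to 1."""
    m = max(log_scores)                            # subtract the max for numerical stability
    unnorm = [math.exp(s - m) for s in log_scores]
    total = sum(unnorm)
    return [u / total for u in unnorm]

# Log-probabilities of the five parses shown on the inference slide.
log_scores = [-531.0, -560.0, -568.0, -582.0, -588.0]
weights = posterior_weights(log_scores)
```

Because the best score exceeds the runner-up by 29 nats, nearly all of the weight falls on the first parse under this assumption.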

One-shot classification

HBPL: Computing the classification score. Which class is image I in? log P(I | class 1) ≈ −758; log P(I | class 2) ≈ −1880.
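The decision implied by these two scores can be sketched as picking the class with the highest log-likelihood. The function name `classify` is hypothetical, and the sketch assumes equal prior probability for every class; the two scores are the ones shown on the slide.

```python
def classify(log_likelihoods):
    """Return the class label with the highest log P(I | class)."""
    return max(log_likelihoods, key=log_likelihoods.get)

# Scores from the slide: log P(I | class 1) ≈ −758, log P(I | class 2) ≈ −1880.
scores = {"class 1": -758.0, "class 2": -1880.0}
best = classify(scores)
```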

Comparing human and machine performance on five tasks: one-shot classification (20-way); generating new examples; generating new examples (dynamic); generating new concepts (from type); generating new concepts (unconstrained). One-shot classification: 4.5% human error rate, 3.2% machine error rate. The four generation tasks are scored by “Human or Machine?” judgments, with identification (ID) level defined as the % of judges who correctly ID machine vs. human; the reported ID levels are 51%, 49%, 59%, and 51%.
