INEQUALITY CONSTRAINTS

Introduction of Slack Variables

• Consider the very general situation in which we have a nonlinear objective function, nonlinear equality constraints, and nonlinear inequality constraints.
• The simplest way to handle inequality constraints is to convert them to equality constraints using slack variables and then use the Lagrange theory.
• Consider the inequality constraints $h_j(x) \ge 0$, $j = 1, 2, \ldots, r$, and define the real-valued slack variables $\theta_j$ such that $h_j(x) = \theta_j^2 \ge 0$, $j = 1, 2, \ldots, r$. This turns each inequality into an equality, but at the expense of introducing $r$ new variables.
• If we now consider the general problem written as

$$\min_{x} f(x) \tag{1}$$
$$\text{subject to } h_j(x) \ge 0, \quad j = 1, \ldots, r \tag{2}$$

• Introducing the slack variables, $h_j(x) - \theta_j^2 = 0$, $j = 1, \ldots, r$, the Lagrangian is written as:

$$L(x, \lambda, \theta) = f(x) + \sum_{j=1}^{r} \lambda_j \left( h_j(x) - \theta_j^2 \right) \tag{3}$$

• The necessary conditions for an optimum are:

$$\left.\frac{\partial L}{\partial x_i}\right|_{x = x^*,\, \lambda = \lambda^*} = \frac{\partial f}{\partial x_i} + \sum_{j=1}^{r} \lambda_j \frac{\partial h_j}{\partial x_i} = 0, \quad i = 1, \ldots, n \tag{4}$$
$$\left.\frac{\partial L}{\partial \lambda_j}\right|_{x = x^*,\, \theta = \theta^*} = h_j(x) - \theta_j^2 = 0, \quad j = 1, \ldots, r \tag{5}$$
$$\frac{\partial L}{\partial \theta_j} = -2 \lambda_j^* \theta_j^* = 0, \quad j = 1, \ldots, r \tag{6}$$

• From the last expression (6), it is obvious that either $\lambda_j^* = 0$ or $\theta_j^* = 0$, or both.
• Case 1: $\lambda_j^* = 0$, $\theta_j^* \ne 0$. In this case, the constraint $h_j(x) \ge 0$ is ignored since $h_j(x^*) = (\theta_j^*)^2 > 0$ (i.e. the constraint is not binding). If all $\lambda_j^* = 0$, then (4) implies that $\nabla f(x^*) = 0$, which means that the solution is the unconstrained minimum.

• Case 2: $\theta_j^* = 0$, $\lambda_j^* \ne 0$. In this case, we have $h_j(x^*) = 0$, which means that the optimal solution is on the boundary of the $j$th constraint. Since $\lambda_j^* \ne 0$, (4) implies that $\nabla f(x^*) \ne 0$ (provided the gradients $\nabla h_j(x^*)$ of the binding constraints are linearly independent), and therefore we are not at the unconstrained minimum.
• Case 3: $\theta_j^* = 0$ and $\lambda_j^* = 0$ for all $j$. In this case, we have $h_j(x^*) = 0$ for all $j$ and $\nabla f(x^*) = 0$. Therefore, the boundary passes through the unconstrained optimum, which is also the constrained optimum.

Example: Now consider the problem

$$\min_{x} f(x) = (x - a)^2 + b \quad \text{subject to } x \ge c$$

[Figure: Sketch of the constrained one-dimensional problem, showing $f(x) = (x - a)^2 + b$ and the constraint $x \ge c$, with $a$, $b$ and $c$ marked on the axes.]

• The location of the minimum depends on whether or not the unconstrained minimum lies inside the feasible region.
• If $c > a$, then the minimum lies at $x = c$, which is the boundary of the feasible region defined by $x \ge c$.
• If $c \le a$, then the minimum lies at the unconstrained minimum, $x = a$.
• Introducing a single slack variable, $\theta^2 = x - c \ge 0$, gives the equality constraint $x - c - \theta^2 = 0$, and we can write the Lagrangian as

$$L(x, \lambda, \theta) = (x - a)^2 + b + \lambda \left( x - c - \theta^2 \right)$$

where $\lambda$ is the Lagrange multiplier. The necessary conditions are

$$\frac{\partial L}{\partial x} = 2(x^* - a) + \lambda^* = 0 \tag{7}$$
$$\frac{\partial L}{\partial \lambda} = x^* - c - (\theta^*)^2 = 0 \tag{8}$$
$$\frac{\partial L}{\partial \theta} = -2 \lambda^* \theta^* = 0 \tag{9}$$

• In general, we need to know how $c$ and $a$ compare.
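Before working through the cases, the location rule stated above ($x^* = a$ if $c \le a$, otherwise $x^* = c$) can be checked by brute force. The sketch below is an illustration only: it evaluates $f$ on a grid of feasible points in $[c, c + 10]$ and compares the best grid point with the rule. The grid width, grid size and the $(a, b, c)$ values are arbitrary assumptions; when $c \le a$, $a$ must fall inside the grid window for the comparison to be meaningful.

```python
# Brute-force check of the 1-D example: minimize f(x) = (x - a)**2 + b
# subject to x >= c. The (a, b, c) triples below are illustrative values only.


def f(x, a, b):
    """Objective of the one-dimensional example."""
    return (x - a) ** 2 + b


def grid_minimum(a, b, c, width=10.0, n=20_001):
    """Return the grid point in [c, c + width] (all feasible) with the smallest f."""
    step = width / (n - 1)
    xs = [c + i * step for i in range(n)]
    return min(xs, key=lambda x: f(x, a, b))


def predicted_minimum(a, c):
    """Rule from the text: x* = a if c <= a, otherwise x* = c (on the boundary)."""
    return a if c <= a else c


for a, b, c in [(2.0, 1.0, 0.5), (2.0, 1.0, 3.0), (2.0, 1.0, 2.0)]:
    x_grid = grid_minimum(a, b, c)
    print(f"a={a}, c={c}: grid x* = {x_grid:.4f}, rule x* = {predicted_minimum(a, c)}")
```

The grid answer agrees with the rule to within one grid step; the value of $b$ shifts $f$ vertically and has no effect on $x^*$.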

• Case 1: From (9), assume $\lambda^* = 0$, $\theta^* \ne 0$. Therefore, from (7), $x^* = a$, and thus from (8), $a - c - (\theta^*)^2 = 0$, which gives $(\theta^*)^2 = a - c$; so $\theta^*$ is real only for $c \le a$. Now since $\lambda^* = 0$, we have

$$L(x^*, \lambda^*, \theta^*) = f(x^*) \quad \text{and} \quad \left.\frac{\partial f}{\partial x}\right|_{x^*} = 0$$

This tells us that the unconstrained minimum is the constrained minimum.
• Case 2: Now let us assume that $\lambda^* \ne 0$, $\theta^* = 0$. From (8) we have $x^* = c$, and from (7), $\lambda^* = -2(x^* - a) = 2(a - c)$. Since $\lambda^* \ne 0$, and in the previous case we had $c \le a$, now we have $c > a$.
• Case 3: For the case $\lambda^* = \theta^* = 0$, (7) tells us that $x^* - a = 0$ and therefore $x^* = a$. From (8) we have $x^* - c = 0$ and therefore $x^* = a = c$. The unconstrained minimum lies on the boundary since, from (7),

$$\left.\frac{\partial L}{\partial x}\right|_{x^*} = \left.\frac{\partial f}{\partial x}\right|_{x^*} = 0$$
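The three cases above can be collected into a small solver that returns $(x^*, \lambda^*, \theta^*)$ and then checks that the left-hand sides of (7)-(9) vanish. This is a sketch for this one example only; the function names `solve_by_cases` and `residuals` are hypothetical helpers introduced here, not part of the original notes.

```python
import math


def solve_by_cases(a, c):
    """Return (x*, lambda*, theta*) for: minimize (x - a)**2 + b subject to x >= c.

    Follows the case analysis of conditions (7)-(9); b does not affect x*.
    """
    if c <= a:
        # Case 1 (Case 3 when c == a): lambda* = 0, x* = a, theta*^2 = a - c >= 0.
        return a, 0.0, math.sqrt(a - c)
    # Case 2: theta* = 0, so x* = c from (8) and lambda* = -2(x* - a) = 2(a - c) from (7).
    return c, 2.0 * (a - c), 0.0


def residuals(x, lam, theta, a, c):
    """Left-hand sides of (7), (8), (9) for L = (x - a)^2 + b + lam * (x - c - theta^2)."""
    return (
        2.0 * (x - a) + lam,   # (7) dL/dx
        x - c - theta ** 2,    # (8) dL/dlambda
        -2.0 * lam * theta,    # (9) dL/dtheta
    )


for a, c in [(2.0, 0.5), (2.0, 3.0), (2.0, 2.0)]:
    x, lam, theta = solve_by_cases(a, c)
    print(f"a={a}, c={c}: x*={x}, lambda*={lam}, theta*={theta:.4f}, "
          f"residuals={residuals(x, lam, theta, a, c)}")
```

With the sign convention used here ($+\lambda$ multiplying $h(x) - \theta^2$ for a $\ge$ constraint), Case 2 gives $\lambda^* = 2(a - c) < 0$; for example $a = 2$, $c = 3$ yields $x^* = 3$, $\lambda^* = -2$.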

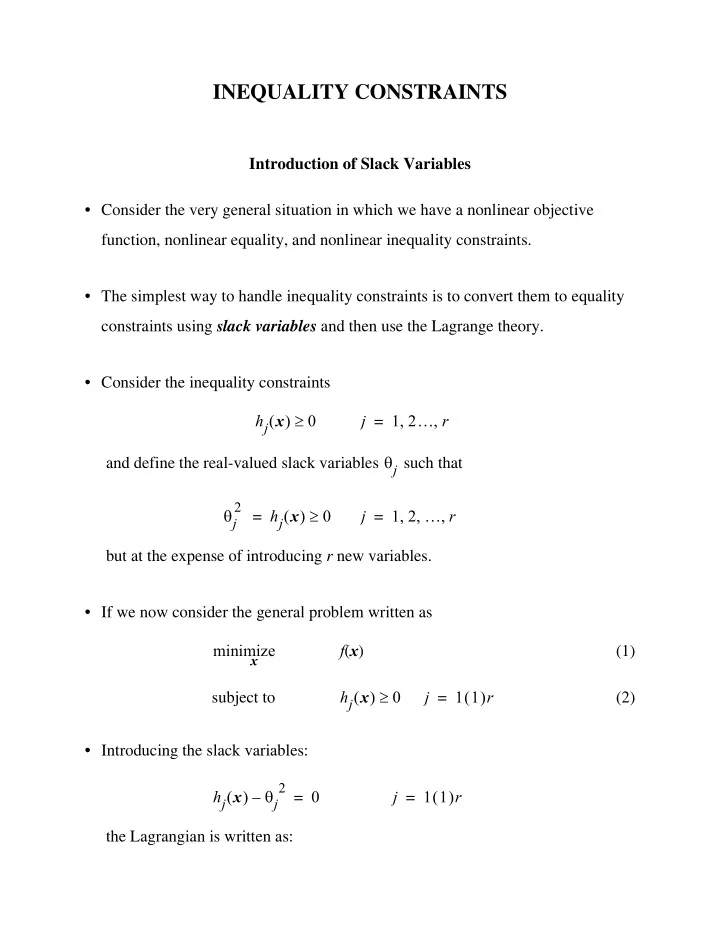

Example: As a two-dimensional example, consider

$$\min_{x} f(x) = (x_1 - 3)^2 + 2(x_2 - 5)^2 \quad \text{subject to } g(x) = 2x_1 + 3x_2 - 5 \le 0$$

[Figure: Contours and feasible region for the example problem, in the $(x_1, x_2)$ plane, with contours of $f(x) = (x_1 - 3)^2 + 2(x_2 - 5)^2$ and the line $g = 2x_1 + 3x_2 - 5 = 0$.]

• Unless one were to draw a very accurate contour plot, it is hard to find the minimum from such a graphical method.
• It is obvious from the graph, though, that the minimum will lie on the line $g(x) = 0$.
• We introduce a single slack variable, $\theta^2$, and construct the Lagrangian as

$$L(x, \lambda, \theta) = (x_1 - 3)^2 + 2(x_2 - 5)^2 + \lambda \left( 2x_1 + 3x_2 - 5 + \theta^2 \right)$$

• The inequality constraint was changed to the equality constraint $g(x) + \theta^2 = 0$, using the slack variable $\theta^2 = -g(x) \ge 0$.
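The graphical claim that the minimum lies on $g(x) = 0$ can be backed by a one-line check: the unconstrained minimizer of $f$ is $(3, 5)$, where both squared terms vanish, and it violates the constraint. Since $f$ is convex and the feasible set is a half-plane, an interior constrained minimum would also be a local, hence global, unconstrained minimum, which is impossible here; so the constrained minimum must lie on the boundary. The snippet below is a minimal sketch of that check.

```python
def f(x1, x2):
    """Objective of the two-dimensional example."""
    return (x1 - 3) ** 2 + 2 * (x2 - 5) ** 2


def g(x1, x2):
    """Constraint function; the feasible region is g(x) <= 0."""
    return 2 * x1 + 3 * x2 - 5


# Both squared terms of f vanish at (3, 5), so this is the unconstrained minimizer.
x_unconstrained = (3, 5)
print(f(*x_unconstrained), g(*x_unconstrained))  # prints: 0 16
```

Because $g(3, 5) = 16 > 0$, the unconstrained minimizer is infeasible.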

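• The necessary conditions become

$$\frac{\partial L}{\partial x_1} = 2(x_1^* - 3) + 2\lambda^* = 0 \tag{10}$$
$$\frac{\partial L}{\partial x_2} = 4(x_2^* - 5) + 3\lambda^* = 0 \tag{11}$$
$$\frac{\partial L}{\partial \theta} = 2\lambda^* \theta^* = 0 \tag{12}$$
$$\frac{\partial L}{\partial \lambda} = 2x_1^* + 3x_2^* - 5 + (\theta^*)^2 = 0 \tag{13}$$

From (10) and (11):

$$x_1^* = 3 - \lambda^*, \qquad x_2^* = 5 - \frac{3}{4}\lambda^*$$

Substituting these expressions in (13), we have:

$$2\left(3 - \lambda^*\right) + 3\left(5 - \frac{3}{4}\lambda^*\right) - 5 + (\theta^*)^2 = 0 \quad\Longrightarrow\quad 16 - \frac{17}{4}\lambda^* + (\theta^*)^2 = 0$$

If $\lambda^* = 0$, then $(\theta^*)^2 = -16$ and $\theta^*$ will be complex. If $\theta^* = 0$, then $\lambda^* = 64/17$, and therefore

$$x_1^* = -\frac{13}{17}, \qquad x_2^* = \frac{37}{17}$$

$\theta^* = 0$ means there is no slack in the constraint, as expected from the plot.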
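With $\theta^* = 0$, conditions (10), (11) and (13) are linear in $(x_1, x_2, \lambda)$, so the hand substitution can be cross-checked by solving the $3 \times 3$ system exactly. The sketch below uses a small Gauss-Jordan routine over Python's `fractions.Fraction` so the answer comes out as exact fractions; `solve_linear` is a hypothetical helper written for this check, not a library function.

```python
from fractions import Fraction


def solve_linear(A, b):
    """Solve A x = b exactly by Gauss-Jordan elimination over Fractions."""
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        # Pick the first row at or below `col` with a nonzero entry in this column.
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]


# Case theta* = 0: unknowns (x1, x2, lambda), conditions rearranged as A z = b.
#   (10)  2 x1        + 2 lambda = 6
#   (11)         4 x2 + 3 lambda = 20
#   (13)  2 x1 + 3 x2            = 5
x1, x2, lam = solve_linear([[2, 0, 2], [0, 4, 3], [2, 3, 0]], [6, 20, 5])
print(x1, x2, lam)  # prints: -13/17 37/17 64/17
```

The positive multiplier $\lambda^* = 64/17$ is consistent with the Kuhn-Tucker sign condition for a $g(x) \le 0$ constraint in a minimization problem.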

The Kuhn-Tucker Theorem

• The Kuhn-Tucker theorem gives the necessary conditions for an optimum of a nonlinear objective function constrained by a set of nonlinear inequality constraints.
• The general problem is written as

$$\min_{x \in \mathbb{R}^n} f(x) \quad \text{subject to } g_i(x) \le 0, \quad i = 1, 2, \ldots, r$$

If we had equality constraints, then we could introduce two inequality constraints in place of each one. For instance, if it were required that $h(x) = 0$, then we could just impose $h(x) \le 0$ and $h(x) \ge 0$, i.e. $h(x) \le 0$ and $-h(x) \le 0$.
• Now assume that $f(x)$ and $g_i(x)$ are differentiable functions. The Lagrangian is:

$$L(x, \lambda) = f(x) + \sum_{i=1}^{r} \lambda_i g_i(x)$$

The necessary conditions for $x^*$ to be the solution to the above problem are:

$$\frac{\partial f(x^*)}{\partial x_j} + \sum_{i=1}^{r} \lambda_i^* \frac{\partial g_i(x^*)}{\partial x_j} = 0, \quad j = 1, 2, \ldots, n \tag{14}$$
$$g_i(x^*) \le 0, \quad i = 1, \ldots, r \tag{15}$$
$$\lambda_i^* g_i(x^*) = 0, \quad i = 1, \ldots, r \tag{16}$$
$$\lambda_i^* \ge 0, \quad i = 1, \ldots, r \tag{17}$$
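Conditions (14)-(17) translate directly into a numerical test at a candidate point. The sketch below assumes the gradients and constraint values have already been evaluated at $x^*$ and checks each condition to within a tolerance; `kkt_conditions` is a hypothetical helper, and the tolerance `1e-9` is an arbitrary choice. Since the conditions are only necessary, passing the check says the point is a Kuhn-Tucker point, not that it is the minimum.

```python
def kkt_conditions(grad_f, grads_g, g_vals, multipliers, tol=1e-9):
    """Check (14)-(17) at a candidate point for: minimize f s.t. g_i(x) <= 0.

    grad_f      -- list of the n partial derivatives of f at x*
    grads_g     -- list of r gradients of the g_i at x* (each a list of n partials)
    g_vals      -- list of the r constraint values g_i(x*)
    multipliers -- list of the r multipliers lambda_i*
    Returns a dict mapping each condition to True/False (within tolerance tol).
    """
    n = len(grad_f)
    stationarity = [
        grad_f[j] + sum(lam_i * grad[j] for lam_i, grad in zip(multipliers, grads_g))
        for j in range(n)
    ]
    return {
        "(14) stationarity": all(abs(s) <= tol for s in stationarity),
        "(15) feasibility": all(gv <= tol for gv in g_vals),
        "(16) complementary slackness": all(
            abs(lam_i * gv) <= tol for lam_i, gv in zip(multipliers, g_vals)
        ),
        "(17) nonnegative multipliers": all(lam_i >= -tol for lam_i in multipliers),
    }


# Candidate from the two-dimensional example: f = (x1-3)^2 + 2(x2-5)^2, g = 2x1 + 3x2 - 5.
x1, x2, lam = -13 / 17, 37 / 17, 64 / 17
grad_f = [2 * (x1 - 3), 4 * (x2 - 5)]
grads_g = [[2.0, 3.0]]
g_vals = [2 * x1 + 3 * x2 - 5]

checks = kkt_conditions(grad_f, grads_g, g_vals, [lam])
print(checks)
```

Setting the multiplier to zero at the same point breaks stationarity, and flipping its sign breaks (17), which is the sign change discussed next for maximization.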

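• These are known as the Kuhn-Tucker stationary conditions, written compactly as:

$$\nabla_x L(x^*, \lambda^*) = 0 \tag{18}$$
$$\nabla_\lambda L(x^*, \lambda^*) = g(x^*) \le 0 \tag{19}$$
$$g(x^*)^T \lambda^* = 0 \tag{20}$$
$$\lambda^* \ge 0 \tag{21}$$

• If our problem is one of maximization instead of minimization,

$$\max_{x \in \mathbb{R}^n} f(x) \quad \text{subject to } g_i(x) \le 0, \quad i = 1, 2, \ldots, r$$

we can replace $f(x)$ by $-f(x)$ in the first condition:

$$-\frac{\partial f(x^*)}{\partial x_j} + \sum_{i=1}^{r} \lambda_i^* \frac{\partial g_i(x^*)}{\partial x_j} = 0, \quad j = 1, 2, \ldots, n \tag{22}$$
$$\frac{\partial f(x^*)}{\partial x_j} + \sum_{i=1}^{r} \left(-\lambda_i^*\right) \frac{\partial g_i(x^*)}{\partial x_j} = 0, \quad j = 1, 2, \ldots, n \tag{23}$$

• For the maximization problem, the only change is therefore the sign of $\lambda_i^*$: writing $\lambda_i^*$ for the multiplier $-\lambda_i^*$ of (23), the conditions become

$$\nabla_x L(x^*, \lambda^*) = 0 \tag{24}$$
$$\nabla_\lambda L(x^*, \lambda^*) = g(x^*) \le 0 \tag{25}$$
$$g(x^*)^T \lambda^* = 0 \tag{26}$$
$$\lambda^* \le 0 \tag{27}$$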
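The sign flip can be seen on the two-dimensional example. Maximizing $F(x) = -f(x)$ over the same region has the same optimum $x^* = (-13/17, 37/17)$, so solving (24) with $L = F + \lambda g$ should give the minimization multiplier with its sign reversed. The sketch below, assuming that example, computes $\lambda^*$ from the $x_1$ component of (24) and checks the remaining conditions exactly with `fractions.Fraction`.

```python
from fractions import Fraction

# Maximization form: maximize F(x) = -[(x1 - 3)^2 + 2 (x2 - 5)^2]
# subject to g(x) = 2 x1 + 3 x2 - 5 <= 0, with L = F + lambda * g.
x1, x2 = Fraction(-13, 17), Fraction(37, 17)   # same point as the minimization example

grad_F = (-2 * (x1 - 3), -4 * (x2 - 5))        # gradient of F = -f
grad_g = (2, 3)
g_val = 2 * x1 + 3 * x2 - 5

# Stationarity (24), x1 component: dF/dx1 + lambda * dg/dx1 = 0.
lam = -grad_F[0] / grad_g[0]
print(lam)  # prints: -64/17

assert grad_F[1] + lam * grad_g[1] == 0         # (24), x2 component
assert g_val <= 0                               # (25), active: g(x*) = 0
assert lam * g_val == 0                         # (26)
assert lam <= 0                                 # (27)
```

The multiplier $-64/17$ has the same magnitude as in the minimization example and the opposite sign, as (27) requires.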

Transformation via the Penalty Method

• The Kuhn-Tucker necessary conditions give us a theoretical framework for dealing with nonlinear optimization.
• From a practical computer-algorithm point of view, however, we are not much further than we were when we started.
• We require practical methods of solving problems of the form:

$$\min_{x \in \mathbb{R}^n} f(x) \tag{28}$$
$$\text{subject to } g_j(x) \le 0, \quad j = 1, \ldots, J \tag{29}$$
$$h_k(x) = 0, \quad k = 1, \ldots, K \tag{30}$$

• We introduce a new objective function called the penalty function,

$$P(x; R) = f(x) + \Omega\left(R, g(x), h(x)\right)$$

where the vector $R$ contains the penalty parameters and $\Omega(R, g(x), h(x))$ is the penalty term.
• The penalty term is a function of $R$ and the constraint functions $g(x)$ and $h(x)$.
• The purpose of adding this term to the objective function is to penalize the objective function whenever a set of decision variables $x$ that is not feasible is chosen.
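The construction $P(x; R) = f(x) + \Omega(R, g(x), h(x))$ can be sketched as a function that wraps $f$ and the constraints. The notes leave $\Omega$ general, so the penalty term below is an assumption chosen for illustration: a squared violation $\max(0, g_j(x))^2$ for each inequality and $h_k(x)^2$ for each equality, each weighted by its own parameter. It is zero at feasible points and positive at infeasible ones, which is the behaviour described above. `make_penalty_function` is a hypothetical helper.

```python
def make_penalty_function(f, gs, hs, R_g, R_h):
    """Build P(x; R) = f(x) + Omega(R, g(x), h(x)).

    ASSUMED penalty term (illustrative only; the text leaves Omega general):
        Omega = sum_j R_g[j] * max(0, g_j(x))**2 + sum_k R_h[k] * h_k(x)**2
    It is zero when x satisfies every constraint and positive otherwise.
    """
    def P(x):
        omega = sum(r * max(0.0, g_j(x)) ** 2 for r, g_j in zip(R_g, gs))
        omega += sum(r * h_k(x) ** 2 for r, h_k in zip(R_h, hs))
        return f(x) + omega
    return P


# Reusing the two-dimensional example (one inequality constraint, no equalities).
def f(x):
    return (x[0] - 3) ** 2 + 2 * (x[1] - 5) ** 2


def g(x):
    return 2 * x[0] + 3 * x[1] - 5


P = make_penalty_function(f, gs=[g], hs=[], R_g=[1.0], R_h=[])
print(P((0.0, 0.0)), P((3.0, 5.0)))  # feasible point: P = f; infeasible point: P > f
```

At the feasible point $(0, 0)$ the penalty is zero and $P = f = 59$; at the infeasible unconstrained minimizer $(3, 5)$, $f = 0$ but $P = 16^2 = 256$.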

Use of a parabolic penalty term

• Consider the minimization of an objective function $f(x)$ with equality constraints $h(x)$.
• We create a penalty function by adding a positive coefficient times the square of each constraint, that is

$$\min_{x} P(x; R) = f(x) + \sum_{k=1}^{K} R_k \left\{ h_k(x) \right\}^2 \tag{31}$$

As the penalty parameters $R_k \to \infty$, more weight is attached to satisfying the $k$th constraint. If a specific parameter is chosen as zero, say $R_k = 0$, then the $k$th equality constraint is ignored. The user specifies the value of $R_k$ according to the importance of satisfying each equality constraint.

Example:

$$\min_{x} \; x_1^2 + x_2^2 \quad \text{subject to } x_2 = 1$$

We construct a penalty function as:

$$P(x; R) = x_1^2 + x_2^2 + R\,(x_2 - 1)^2$$

and we proceed to minimize $P(x; R)$ for particular values of $R$.
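For this example the minimizer of $P(x; R)$ can be found by hand: $\partial P/\partial x_1 = 2x_1 = 0$ and $\partial P/\partial x_2 = 2x_2 + 2R(x_2 - 1) = 0$ give $x_1 = 0$ and $x_2 = R/(1 + R)$, which tends to the constrained solution $x_2 = 1$ only as $R \to \infty$. The sketch below minimizes $P$ numerically for a few values of $R$ and compares with that formula. The minimizer is plain fixed-step gradient descent, a choice made here for illustration (the notes do not prescribe one); the step $1/(2 + 2R)$ is the reciprocal of the largest Hessian eigenvalue, and the iteration count printed for each $R$ grows with $R$ because the Hessian eigenvalues $2$ and $2 + 2R$ spread apart.

```python
def grad_P(x1, x2, R):
    """Gradient of P(x; R) = x1**2 + x2**2 + R*(x2 - 1)**2."""
    return 2.0 * x1, 2.0 * x2 + 2.0 * R * (x2 - 1.0)


def minimize_P(R, x0=(1.0, 0.0), tol=1e-10, max_iter=1_000_000):
    """Fixed-step gradient descent on P(x; R); returns ((x1, x2), iterations).

    The step 1/(2 + 2R) is the inverse of the largest Hessian eigenvalue of P,
    which keeps the iteration stable for every R >= 0.
    """
    step = 1.0 / (2.0 + 2.0 * R)
    x1, x2 = x0
    for it in range(max_iter):
        g1, g2 = grad_P(x1, x2, R)
        if (g1 * g1 + g2 * g2) ** 0.5 < tol:
            return (x1, x2), it
        x1, x2 = x1 - step * g1, x2 - step * g2
    return (x1, x2), max_iter


for R in (1.0, 10.0, 100.0, 1000.0):
    (x1, x2), iters = minimize_P(R)
    # Stationarity gives x1 = 0 and x2 = R / (1 + R), which tends to 1 as R grows.
    print(f"R={R:7.1f}  x1={x1:.2e}  x2={x2:.6f}  R/(1+R)={R / (1 + R):.6f}  iters={iters}")
```

Even at $R = 1000$ the penalized solution misses the constraint by $1/(1 + R) \approx 0.001$, which is why $R$ has to be driven large to satisfy the equality accurately.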
