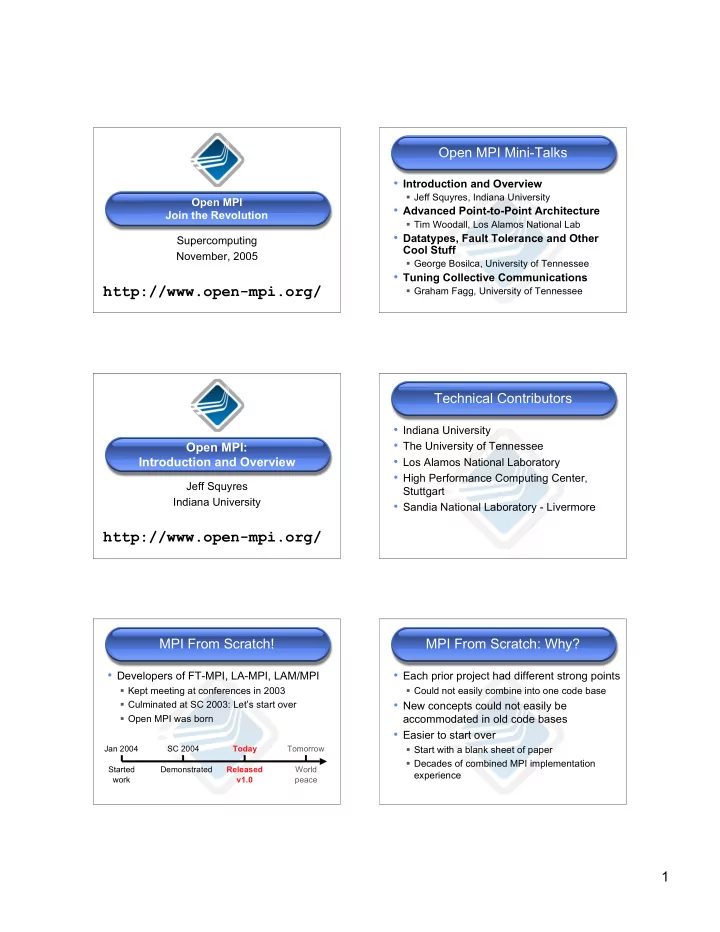

Open MPI
Join the Revolution
Supercomputing
November, 2005
http://www.open-mpi.org/

Open MPI Mini-Talks
• Introduction and Overview: Jeff Squyres, Indiana University
• Advanced Point-to-Point Architecture: Tim Woodall, Los Alamos National Lab
• Datatypes, Fault Tolerance and Other Cool Stuff: George Bosilca, University of Tennessee
• Tuning Collective Communications: Graham Fagg, University of Tennessee

Open MPI: Introduction and Overview
Jeff Squyres
Indiana University
http://www.open-mpi.org/

Technical Contributors
• Indiana University
• The University of Tennessee
• Los Alamos National Laboratory
• High Performance Computing Center, Stuttgart
• Sandia National Laboratory - Livermore

MPI From Scratch!
• Developers of FT-MPI, LA-MPI, LAM/MPI
  - Kept meeting at conferences in 2003
  - Culminated at SC 2003: Let’s start over
  - Open MPI was born
• Timeline
  - Jan 2004: Started work
  - SC 2004: Demonstrated
  - Today: Released v1.0
  - Tomorrow: World peace

MPI From Scratch: Why?
• Each prior project had different strong points
  - Could not easily combine into one code base
• New concepts could not easily be accommodated in old code bases
• Easier to start over
  - Start with a blank sheet of paper
  - Decades of combined MPI implementation experience

MPI From Scratch: Why?
• Merger of ideas from
  - FT-MPI (U. of Tennessee)
  - LA-MPI (Los Alamos)
  - LAM/MPI (Indiana U.)
  - PACX-MPI (HLRS, U. Stuttgart)
[Figure: PACX-MPI, LAM/MPI, LA-MPI and FT-MPI merging into Open MPI]

Open MPI Project Goals
• All of MPI-2
• Open source
  - Vendor-friendly license (modified BSD)
• Prevent “forking” problem
  - Community / 3rd party involvement
  - Production-quality research platform (targeted)
  - Rapid deployment for new platforms
• Shared development effort

Open MPI Project Goals
• Actively engage the HPC community
  - Users
  - Researchers
  - System administrators
  - Vendors
• Solicit feedback and contributions
  - True open source
[Figure: Researchers, Users, Sys. Admins, Developers and Vendors around Open MPI]

Design Goals
• Extend / enhance previous ideas
  - Component architecture
  - Message fragmentation / reassembly
  - Design for heterogeneous environments
    • Multiple networks (run-time selection and striping)
    • Node architecture (data type representation)
  - Automatic error detection / retransmission
  - Process fault tolerance
  - Thread safety / concurrency model

Design Goals
• Design for a changing environment
  - Hardware failure
  - Resource changes
  - Application demand (dynamic processes)
• Portable efficiency on any parallel resource
  - Small cluster
  - “Big iron” hardware
  - “Grid” (everyone a different definition)
  - …

Plugins for HPC (!)
• Run-time plugins for combinatorial functionality
  - Underlying point-to-point network support
  - Different MPI collective algorithms
  - Back-end run-time environment / scheduler support
• Extensive run-time tuning capabilities
  - Allow power user or system administrator to tweak performance for a given platform

Plugins for HPC (!)
[Figure: animation sequence spread over several slides. "Your MPI application" sits between two sets of plugins: Networks (Shmem, TCP, OpenIB, mVAPI, GM, MX) and Run-time environments (rsh/ssh, SLURM, PBS, BProc, Xgrid). Successive frames show different combinations being selected, for example Shmem, TCP and GM networks together with the rsh/ssh, SLURM, PBS or BProc environment.]
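The plugin slides describe choosing a network or run-time environment component when the job starts rather than at build time. A minimal sketch of that idea in C follows. Every name in it (`net_component_t`, `select_component`, the priorities and the availability probes) is hypothetical and chosen for illustration; it is not the MCA interface itself.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical plugin descriptor; not the real MCA component struct. */
typedef struct {
    const char *name;          /* e.g. "tcp", "gm", "shmem" */
    int priority;              /* higher wins when several are usable */
    int (*is_available)(void); /* run-time probe: nonzero if usable here */
} net_component_t;

static int always_available(void) { return 1; }
static int never_available(void)  { return 0; }

/* Pick a component at run time. If `requested` is non-NULL, only a usable
 * component with that name is accepted (the "power user" override);
 * otherwise the usable component with the highest priority is chosen.
 * Returns NULL when nothing fits. */
static const net_component_t *
select_component(const net_component_t *list, size_t n, const char *requested)
{
    const net_component_t *best = NULL;
    for (size_t i = 0; i < n; ++i) {
        const net_component_t *c = &list[i];
        assert(c->name != NULL && c->is_available != NULL);
        if (!c->is_available())
            continue;                     /* hardware or library missing */
        if (requested != NULL) {
            if (strcmp(c->name, requested) == 0)
                return c;
            continue;
        }
        if (best == NULL || c->priority > best->priority)
            best = c;
    }
    return best;
}

/* Illustrative table: pretend this node has no Myrinet (GM) hardware. */
static const net_component_t example_components[] = {
    { "tcp",   10, always_available },
    { "shmem", 40, always_available },
    { "gm",    50, never_available  },
};
```

With this table, `select_component(example_components, 3, NULL)` falls back to `shmem` because `gm` reports itself unavailable, while passing `"tcp"` as the request forces TCP.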

Current Status
• v1.0 released (see web site)
• Much work still to be done
  - More point-to-point optimizations
  - Data and process fault tolerance
  - New collective framework / algorithms
  - Support more run-time environments
  - New Fortran MPI bindings
  - …
• Come join the revolution!

Open MPI: Advanced Point-to-Point Architecture
Tim Woodall
Los Alamos National Laboratory
http://www.open-mpi.org/

Advanced Point-to-Point Architecture
• Component-based
• High performance
• Scalable
• Multi-NIC capable
• Optional capabilities
  - Asynchronous progress
  - Data validation / reliability

Component Based Architecture
• Uses Modular Component Architecture (MCA)
  - Plugins for capabilities (e.g., different networks)
  - Tunable run-time parameters

Point-to-Point Component Frameworks
• Byte Transfer Layer (BTL)
  - Abstracts lowest native network interfaces
• Point-to-Point Messaging Layer (PML)
  - Implements MPI semantics, message fragmentation, and striping across BTLs

Point-to-Point Component Frameworks
• BTL Management Layer (BML)
  - Multiplexes access to BTLs
• Memory Pool
  - Provides for memory management / registration
• Registration Cache
  - Maintains cache of most recently used memory registrations
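The Registration Cache bullet can be illustrated with a small sketch: keep the most recently used registrations and reuse one whenever a send buffer falls inside an already registered region. The code below is an assumption-laden toy, not Open MPI's implementation. The names `reg_cache_t` and `reg_cache_acquire`, the four-slot size and the least-recently-used eviction policy are all hypothetical, and the real registration call is replaced by a counter.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define REG_CACHE_SLOTS 4 /* illustrative size only */

typedef struct {
    uintptr_t base;          /* start of the registered region */
    size_t len;              /* bytes registered; 0 marks an empty slot */
    unsigned long last_used; /* logical timestamp used for eviction */
} reg_entry_t;

typedef struct {
    reg_entry_t slot[REG_CACHE_SLOTS];
    unsigned long clock;         /* incremented on every lookup */
    unsigned long registrations; /* count of simulated registrations */
} reg_cache_t;

static void reg_cache_init(reg_cache_t *c)
{
    for (size_t i = 0; i < REG_CACHE_SLOTS; ++i) {
        c->slot[i].base = 0;
        c->slot[i].len = 0;
        c->slot[i].last_used = 0;
    }
    c->clock = 0;
    c->registrations = 0;
}

/* Returns 1 if [buf, buf+len) is already covered by a cached registration.
 * Otherwise "registers" the buffer, overwrites the least recently used
 * slot, and returns 0. */
static int reg_cache_acquire(reg_cache_t *c, const void *buf, size_t len)
{
    assert(c != NULL && buf != NULL && len > 0);
    uintptr_t addr = (uintptr_t)buf;
    reg_entry_t *victim = &c->slot[0];

    c->clock++;
    for (size_t i = 0; i < REG_CACHE_SLOTS; ++i) {
        reg_entry_t *e = &c->slot[i];
        if (e->len >= len && addr >= e->base && addr - e->base <= e->len - len) {
            e->last_used = c->clock; /* hit: refresh recency */
            return 1;
        }
        if (e->last_used < victim->last_used)
            victim = e; /* empty slots (timestamp 0) are chosen first */
    }
    c->registrations++; /* stand-in for the expensive pinning call */
    victim->base = addr;
    victim->len = len;
    victim->last_used = c->clock;
    return 0;
}
```

Repeated sends from the same buffer then pay the registration cost once; a buffer that has not been used for a while is the first to lose its slot.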

Network Support
• Native support for:
  - Infiniband: Mellanox Verbs
  - Infiniband: OpenIB Gen2
  - Myrinet: GM
  - Myrinet: MX
  - Portals
  - Shared memory
  - TCP
• Planned support for:
  - IBM LAPI
  - DAPL
  - Quadrics Elan4
  - Third party contributions welcome!

High Performance
• Component-based architecture does not impact performance
• Abstractions leverage network capabilities
  - RDMA read / write
  - Scatter / gather operations
  - Zero copy data transfers
• Performance on par with (and exceeding) vendor implementations

Performance Results: Infiniband
[Figure: plot not recoverable from the extraction]

Performance Results: Myrinet
[Figure: plot not recoverable from the extraction]

Scalability
• On-demand connection establishment
  - TCP
  - Infiniband (RC based)
• Resource management
  - Infiniband Shared Receive Queue (SRQ) support
  - RDMA pipelined protocol (dynamic memory registration / deregistration)
  - Extensive run-time tunable parameters:
    • Maximum fragment size
    • Number of pre-posted buffers
    • ....

Memory Usage Scalability
[Figure: plot not recoverable from the extraction]
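To make the "Maximum fragment size" parameter concrete, the sketch below splits a message into fragments no larger than a run-time limit. It is only an illustration of the arithmetic: `fragment_t` and `make_fragments` are hypothetical names, and nothing here reflects how the PML or the RDMA pipelined protocol is actually coded.

```c
#include <assert.h>
#include <stddef.h>

/* One piece of a larger message (hypothetical type). */
typedef struct {
    size_t offset; /* byte offset of the fragment within the message */
    size_t length; /* fragment length in bytes, never above max_frag */
} fragment_t;

/* Split a message of msg_len bytes into fragments of at most max_frag
 * bytes. Up to `cap` fragments are written to `out`; the return value is
 * the total number of fragments required, which may exceed `cap`. */
static size_t make_fragments(size_t msg_len, size_t max_frag,
                             fragment_t *out, size_t cap)
{
    assert(max_frag > 0);
    size_t count = 0;
    size_t off = 0;
    while (off < msg_len) {
        size_t remaining = msg_len - off;
        size_t len = remaining < max_frag ? remaining : max_frag;
        if (count < cap) {
            out[count].offset = off;
            out[count].length = len;
        }
        count++;
        off += len;
    }
    return count;
}
```

A smaller limit yields more fragments that can be pipelined or spread across interfaces, while a larger one reduces per-fragment overhead, which is why it makes sense as a tunable rather than a constant.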

Latency Scalability
[Figure: plot not recoverable from the extraction]

Multi-NIC Support
• Low-latency interconnects used for short messages / rendezvous protocol
• Message striping across high bandwidth interconnects
• Supports concurrent use of heterogeneous network architectures
• Fail-over to alternate NIC in the event of network failure (work in progress)

Optional Capabilities
• Asynchronous Progress
  - Event based (non-polling)
  - Allows for overlap of computation with communication
  - Potentially decreases power consumption
  - Leverages thread safe implementation
• Data Reliability
  - Memory to memory validity check (CRC/checksum)
  - Lightweight ACK / retransmission protocol
  - Addresses noisy environments / transient faults
  - Supports running over connectionless services (Infiniband UD) to improve scalability

Multi-NIC Performance (Work in Progress)
[Figure: plot not recoverable from the extraction]

Open MPI: Datatypes, Fault Tolerance, and Other Cool Stuff
George Bosilca
University of Tennessee
http://www.open-mpi.org/

User Defined Data-type
• MPI provides many functions allowing users to describe non-contiguous memory layouts
  - MPI_Type_contiguous, MPI_Type_vector, MPI_Type_indexed, MPI_Type_struct
• The send and receive types must have the same signature, but not necessarily the same memory layout
• The simplest way to handle such data is to …
[Figure: timeline showing Pack, then Network transfer, then Unpack]
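The pack, transfer, unpack timeline can be sketched without any MPI calls. The code below gathers a strided layout, in the spirit of what `MPI_Type_vector` describes, into a contiguous buffer and scatters it back out under a different layout with the same number of elements. The `vec_layout_t` struct and both functions are hypothetical helpers written for this illustration, and the network transfer step is simply omitted.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Strided layout: `count` blocks of `blocklen` doubles whose starts are
 * `stride` doubles apart (hypothetical struct, modelled loosely on the
 * arguments of MPI_Type_vector). */
typedef struct {
    size_t count;
    size_t blocklen;
    size_t stride;
} vec_layout_t;

/* Pack: gather the strided blocks of `src` into contiguous `dst`.
 * Returns the number of doubles written. */
static size_t pack_vector(const double *src, vec_layout_t l, double *dst)
{
    assert(l.stride >= l.blocklen);
    for (size_t i = 0; i < l.count; ++i)
        memcpy(dst + i * l.blocklen, src + i * l.stride,
               l.blocklen * sizeof(double));
    return l.count * l.blocklen;
}

/* Unpack: scatter contiguous `src` into the strided blocks of `dst`.
 * Returns the number of doubles consumed. */
static size_t unpack_vector(const double *src, vec_layout_t l, double *dst)
{
    assert(l.stride >= l.blocklen);
    for (size_t i = 0; i < l.count; ++i)
        memcpy(dst + i * l.stride, src + i * l.blocklen,
               l.blocklen * sizeof(double));
    return l.count * l.blocklen;
}
```

For example, packing one column of a row-major 4x4 matrix uses the layout `{4, 1, 4}`, and the receiver may unpack those four doubles with `{2, 2, 3}`: the signatures match (four doubles) even though the memory layouts differ.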
