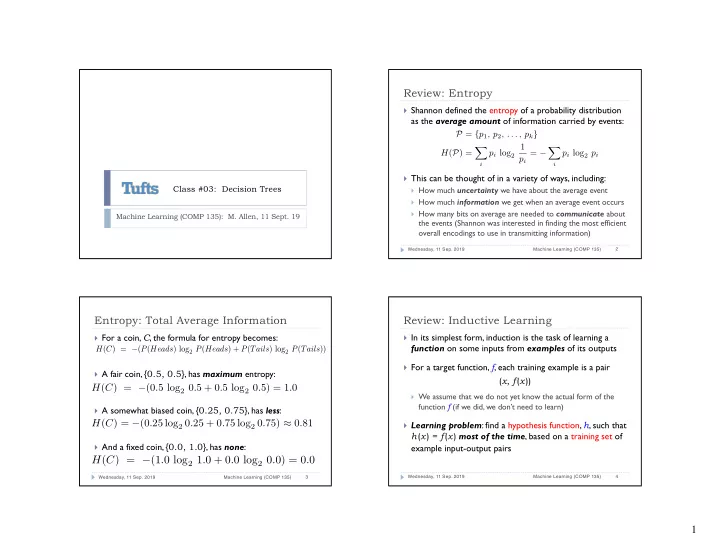

Class #03: Decision Trees

Machine Learning (COMP 135): M. Allen, 11 Sept. 19

Review: Entropy

- Shannon defined the entropy of a probability distribution $P = \{p_1, p_2, \ldots, p_k\}$ as the average amount of information carried by events:

$$H(P) = \sum_i p_i \log_2 \frac{1}{p_i} = -\sum_i p_i \log_2 p_i$$

- This can be thought of in a variety of ways, including:
  - How much uncertainty we have about the average event
  - How much information we get when an average event occurs
  - How many bits on average are needed to communicate about the events (Shannon was interested in finding the most efficient overall encodings to use in transmitting information)

Review: Inductive Learning

- In its simplest form, induction is the task of learning a function on some inputs from examples of its outputs
- For a target function, $f$, each training example is a pair $(x, f(x))$
- We assume that we do not yet know the actual form of the function $f$ (if we did, we would not need to learn it)
- Learning problem: find a hypothesis function, $h$, such that $h(x) = f(x)$ most of the time, based on a training set of example input-output pairs

Entropy: Total Average Information

- For a coin, $C$, the formula for entropy becomes:

$$H(C) = -\left(P(\text{Heads}) \log_2 P(\text{Heads}) + P(\text{Tails}) \log_2 P(\text{Tails})\right)$$

- A fair coin, $\{0.5, 0.5\}$, has maximum entropy: $H(C) = -(0.5 \log_2 0.5 + 0.5 \log_2 0.5) = 1.0$
- A somewhat biased coin, $\{0.25, 0.75\}$, has less: $H(C) = -(0.25 \log_2 0.25 + 0.75 \log_2 0.75) \approx 0.81$
- And a fixed coin, $\{0.0, 1.0\}$, has none: $H(C) = -(1.0 \log_2 1.0 + 0.0 \log_2 0.0) = 0.0$ (using the standard convention $0 \log_2 0 = 0$)
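As a quick numerical check of the coin examples, here is a minimal Python sketch of $H(P) = \sum_i p_i \log_2 (1/p_i)$. The function name `entropy` is our own choice for illustration; zero-probability terms are skipped, which implements the convention $0 \log_2 0 = 0$.

```python
import math


def entropy(probs):
    """Shannon entropy in bits: H(P) = sum_i p_i * log2(1 / p_i).

    Terms with p_i == 0 are skipped, i.e. we use the convention 0 * log2(0) = 0.
    """
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


for name, dist in [("fair", [0.5, 0.5]), ("biased", [0.25, 0.75]), ("fixed", [0.0, 1.0])]:
    print(name, f"{entropy(dist):.2f}")
# fair 1.00
# biased 0.81
# fixed 0.00
```

Writing each term as $p_i \log_2(1/p_i)$ instead of $-p_i \log_2 p_i$ is purely cosmetic here: it avoids printing `-0.00` for the fixed coin.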

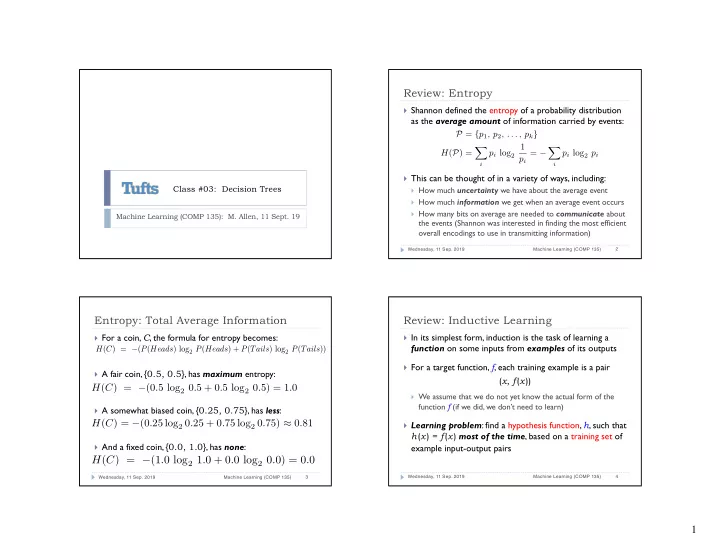

Decision Trees

- A decision tree leads us from a set of attributes (features of the input) to some output
- For example, we have a database of customer records for restaurants
- These customers have made a number of decisions about whether to wait for a table, based on a number of attributes:
  1. Alternate: is there an alternative restaurant nearby?
  2. Bar: is there a comfortable bar area to wait in?
  3. Fri/Sat: is today Friday or Saturday?
  4. Hungry: are we hungry?
  5. Patrons: number of people in the restaurant (None, Some, Full)
  6. Price: price range (\$, \$\$, \$\$\$)
  7. Raining: is it raining outside?
  8. Reservation: have we made a reservation?
  9. Type: kind of restaurant (French, Italian, Thai, Burger)
  10. WaitEstimate: estimated wait time in minutes (0-10, 10-30, 30-60, >60)
- The function we want to learn is whether or not a (future) customer will decide to wait, given some particular set of attributes

Decisions Based on Attributes

- Training set: cases where patrons have decided to wait or not, along with the associated attributes for each case

(Image source: Russell & Norvig, AI: A Modern Approach (Prentice Hall, 2010))

- We now want to learn a tree that agrees with the decisions already made, in hopes that it will allow us to predict future decisions

Decision Tree Functions

- For the examples given, here is a "true" tree (one that will lead from the inputs to the same outputs):

[Figure: a decision tree whose root tests Patrons? (None / Some / Full), with further tests on WaitEstimate? (>60, 30-60, 10-30, 0-10), Alternate?, Hungry?, Reservation?, Fri/Sat?, Bar?, and Raining?, ending in Yes/No leaves. Image source: Russell & Norvig, AI: A Modern Approach (Prentice Hall, 2010)]

Decision Trees are Expressive

[Figure: truth table and equivalent decision tree for the boolean function A && !B]

- Such trees can express any deterministic function:
  - For example, in boolean functions, each row of a truth table will correspond to a path in a tree
  - For any such function, there is always a tree: just make each example a different path to a correct leaf output
- A Problem: such trees most often do not generalize to new examples
- Another Problem: we want compact trees to simplify inference
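To make the "each truth-table row is a path" idea concrete, the sketch below encodes one tree for A && !B and also builds a "memorizing" tree that gives every example its own path. The tuple representation and the names `classify` and `tree_from_examples` are hypothetical choices for this illustration, not taken from the slides, and the hand-built tree may differ in layout from the one drawn in the figure.

```python
from itertools import product

# Hypothetical tree representation for this sketch:
#   ("leaf", label)                        a leaf that outputs `label`
#   ("test", attribute, {value: subtree})  an internal node testing `attribute`
TREE_A_AND_NOT_B = ("test", "A", {
    False: ("leaf", False),
    True: ("test", "B", {
        False: ("leaf", True),
        True: ("leaf", False),
    }),
})


def classify(tree, example):
    """Follow one root-to-leaf path, taking the branch that matches example[attribute]."""
    while tree[0] == "test":
        _, attribute, branches = tree
        tree = branches[example[attribute]]
    return tree[1]


def tree_from_examples(examples, attributes):
    """Build a tree with one path per example by testing every attribute in order.

    `examples` is a non-empty list of (attribute_dict, label) pairs; examples that
    share all attribute values are assumed to share a label (a deterministic function).
    """
    if not attributes:
        return ("leaf", examples[0][1])
    first, rest = attributes[0], attributes[1:]
    branches = {}
    # Only values actually seen in the examples get a branch.
    for value in {x[first] for x, _ in examples}:
        subset = [(x, y) for x, y in examples if x[first] == value]
        branches[value] = tree_from_examples(subset, rest)
    return ("test", first, branches)


# Every row of the truth table for A && !B corresponds to one path through the tree.
rows = [({"A": a, "B": b}, a and not b) for a, b in product([True, False], repeat=2)]
memorized = tree_from_examples(rows, ["A", "B"])
for x, y in rows:
    print(x, y, classify(TREE_A_AND_NOT_B, x), classify(memorized, x))
```

Because `tree_from_examples` only creates branches for values it has seen, building it from three of the four rows leaves the missing combination with no path at all (`classify` raises `KeyError`), a small illustration of why such trees do not generalize.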

Why Not Search for Trees?

- One thing we might consider would be to search through possible trees to find ones that are most compact and consistent with our inputs
- Exhaustive search is too expensive, however, due to the large number of possible functions (trees) that exist
  - For $n$ binary-valued attributes and boolean decision outputs, there are $2^{2^n}$ possibilities
  - For 5 such attributes, we have $2^{32} = 4{,}294{,}967{,}296$ trees!
  - Even restricting our search to conjunctions over attributes, it is easy to get $3^n$ possible trees

Building Trees Top-Down

- Rather than search for all trees, we build our trees by:
  1. Choosing an attribute $A$ from our set
  2. Dividing our examples according to the values of $A$
  3. Placing each subset of examples into a sub-tree below the node for attribute $A$
- This can be implemented in a number of ways, but is perhaps most easily understood recursively
- The main question becomes: how do we choose the attribute $A$ that we use to split our examples?

Decision Tree Learning Algorithm

```
function DecisionTreeTrain(data, remaining features, parent guess)
    // empty data is checked first: it has no most frequent label, and
    // "all labels in data same" would be vacuously true for it
    if data = ∅ then
        return Leaf(parent guess)
    guess ← most frequent label in data
    if (all labels in data same) or (remaining features = ∅) then
        return Leaf(guess)
    else
        F* ← MostImportant(remaining features, data)
        Tree ← a new decision tree with root-feature F*
        for each value f of F* do
            data_f ← { x ∈ data | x has feature-value f }
            sub_f ← DecisionTreeTrain(data_f, remaining features − F*, guess)
            add a branch to Tree with label-value f and subtree sub_f
        endfor
        return Tree
    endif
```

Base Cases

- The algorithm stops in three cases:
  1. Perfect classification of data found: use that label as the leaf label
  2. No features left: use the most common class
  3. No data left: use the most common class of the parent data
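The counts on the "Why Not Search for Trees?" slide follow from two short arguments, sketched here in Python. A truth table over $n$ binary attributes has $2^n$ rows, and each row's output can independently be true or false, giving $2^{2^n}$ distinct boolean functions (each representable by some tree). For conjunctions, the usual counting argument is that each attribute appears positively, negated, or not at all, giving $3^n$ choices. The function names are our own, for illustration only.

```python
def num_boolean_functions(n: int) -> int:
    """Distinct boolean functions of n binary attributes.

    A truth table has 2**n rows, and each row's output is chosen independently
    from {True, False}, so there are 2**(2**n) functions.
    """
    return 2 ** (2 ** n)


def num_conjunctions(n: int) -> int:
    """Conjunctions over n attributes: each attribute is included as-is,
    included negated, or left out, giving 3**n choices."""
    return 3 ** n


for n in range(1, 7):
    print(n, num_boolean_functions(n), num_conjunctions(n))
```

The doubly exponential growth is the point: by $n = 6$ there are already $2^{64}$ functions, far too many to enumerate.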
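The recursive procedure above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the course's reference implementation: the slides leave `MostImportant` unspecified, so `most_important` here is a hypothetical stand-in that picks the feature with the highest information gain (entropy reduction); data are `(feature_dict, label)` pairs; and `domains` (a hypothetical parameter) lists every possible value of each feature, so that a value with no matching examples exercises the "no data left" case.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy (in bits) of the empirical label distribution."""
    total = len(labels)
    return sum((c / total) * math.log2(total / c) for c in Counter(labels).values())


def information_gain(data, feature):
    """Reduction in label entropy from splitting `data` on `feature`."""
    remainder = 0.0
    for value in {x[feature] for x, _ in data}:
        subset = [y for x, y in data if x[feature] == value]
        remainder += len(subset) / len(data) * entropy(subset)
    return entropy([y for _, y in data]) - remainder


def most_important(features, data):
    # Assumption: MostImportant = highest information gain (ties go to the first feature).
    return max(features, key=lambda f: information_gain(data, f))


def decision_tree_train(data, remaining, domains, parent_guess=None):
    """Returns ("leaf", label) or ("test", feature, {value: subtree})."""
    if not data:  # no data left: use the parent's most common class
        return ("leaf", parent_guess)
    guess = Counter(y for _, y in data).most_common(1)[0][0]
    if len({y for _, y in data}) == 1 or not remaining:  # perfect split or no features
        return ("leaf", guess)
    best = most_important(remaining, data)
    rest = [f for f in remaining if f != best]
    branches = {}
    for value in domains[best]:  # every possible value, even unseen ones
        data_f = [(x, y) for x, y in data if x[best] == value]
        branches[value] = decision_tree_train(data_f, rest, domains, guess)
    return ("test", best, branches)


def classify(tree, example):
    """Follow the branch matching each tested feature until a leaf is reached."""
    while tree[0] == "test":
        _, feature, branches = tree
        tree = branches[example[feature]]
    return tree[1]


# Hypothetical toy data (not the restaurant table from the slides).
toy = [
    ({"Hungry": True, "Patrons": "Full"}, False),
    ({"Hungry": False, "Patrons": "Full"}, False),
    ({"Hungry": True, "Patrons": "Some"}, True),
    ({"Hungry": False, "Patrons": "Some"}, True),
    ({"Hungry": True, "Patrons": "Some"}, True),
]
domains = {"Hungry": [True, False], "Patrons": ["None", "Some", "Full"]}
tree = decision_tree_train(toy, ["Hungry", "Patrons"], domains)
print(tree)
```

On this toy data `Patrons` separates the labels perfectly, so it becomes the root; the `"None"` branch has no examples and therefore receives the root's majority label, which is base case 3 in action.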
