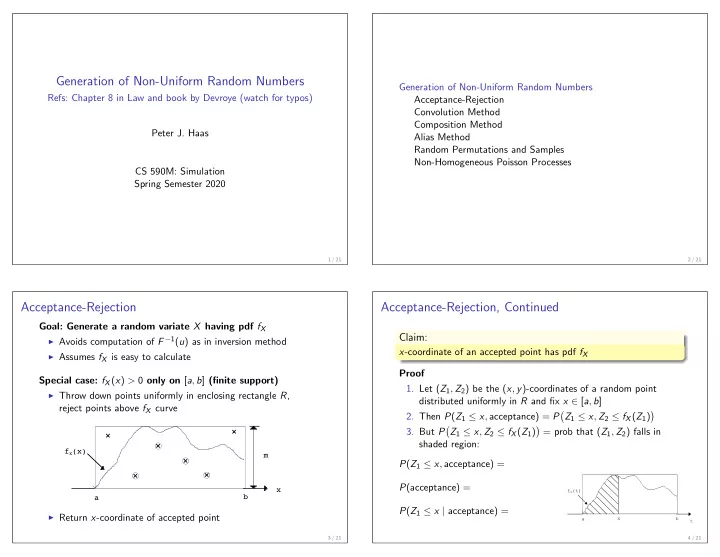

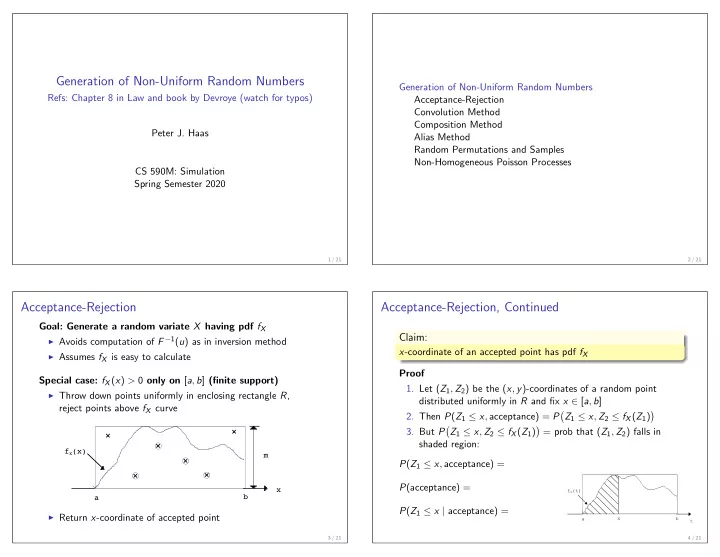

Generation of Non-Uniform Random Numbers Generation of Non-Uniform Random Numbers Refs: Chapter 8 in Law and book by Devroye (watch for typos) Acceptance-Rejection Convolution Method Composition Method Peter J. Haas Alias Method Random Permutations and Samples Non-Homogeneous Poisson Processes CS 590M: Simulation Spring Semester 2020 1 / 21 2 / 21 Acceptance-Rejection Acceptance-Rejection, Continued Goal: Generate a random variate X having pdf f X Claim: ◮ Avoids computation of F − 1 ( u ) as in inversion method x -coordinate of an accepted point has pdf f X ◮ Assumes f X is easy to calculate Proof Special case: f X ( x ) > 0 only on [ a , b ] (finite support) 1. Let ( Z 1 , Z 2 ) be the ( x , y )-coordinates of a random point ◮ Throw down points uniformly in enclosing rectangle R , distributed uniformly in R and fix x ∈ [ a , b ] reject points above f X curve � � 2. Then P ( Z 1 ≤ x , acceptance) = P Z 1 ≤ x , Z 2 ≤ f X ( Z 1 ) � � 3. But P Z 1 ≤ x , Z 2 ≤ f X ( Z 1 ) = prob that ( Z 1 , Z 2 ) falls in shaded region: f X (x) m P ( Z 1 ≤ x , acceptance) = P (acceptance) = x f X (t) b a P ( Z 1 ≤ x | acceptance) = ◮ Return x -coordinate of accepted point a x b t 3 / 21 4 / 21

Acceptance-Rejection, Continued Generalized Acceptance-Rejection: Infinite Support Find density g that majorizes f X ◮ There exists a constant c such that f X ( x ) / c ≤ g ( x ) for all x Acceptance-Rejection Algorithm (Finite Support) ◮ Smallest such constant is c = sup � � f X ( x ) / g ( x ) D 1. Generate U 1 , U 2 ∼ Uniform(0 , 1) ( U 1 and U 2 are independent) x 2. Set Z 1 = a + ( b − a ) U 1 and Z 2 = mU 2 (inversion method) 3. if Z 2 ≤ f X ( Z 1 ), return X = Z 1 , else go to step 1 How many ( U 1 , U 2 ) pairs must we generate? ◮ N (= number pairs generated) has geometric dist’n: Generalized Acceptance-Rejection Algorithm P ( N = k ) = p (1 − p ) k − 1 where p = 1 / (area of R ) 1. Generate Z D ∼ g and U D ∼ Uniform(0 , 1) ( Z , U independent) ◮ So E [ N ] = 1 / p = (area of R ) = ( b − a ) m 2. if U g ( Z ) ≤ f X ( Z ) / c , return X = Z , else go to step 1 ◮ So make m as small as possible f X (x) m Expected number of ( Z , U ) pairs generated: E [ N ] = c x a b 5 / 21 6 / 21 Convolution Method Composition Method Goal: Generate X where X = Y 1 + · · · + Y m [ Y 1 , . . . , Y m i.i.d.] ◮ f X = f Y 1 ∗ f Y 2 ∗ · · · ∗ f Y m Suppose that we can write ◮ Where the convolution f ∗ g is defined by ◮ F X ( x ) = p 1 F Y 1 ( x ) + p 2 F Y 2 ( x ) + · · · + p m F Y m ( x ) or � ∞ ( f ∗ g )( x ) = −∞ f ( x − y ) g ( y ) dy ◮ f X ( x ) = p 1 f Y 1 ( x ) + p 2 f Y 2 ( x ) + · · · + p m f Y m ( x ) where p i ’s are nonnegative and � m i =1 p i = 1 Convolution Algorithm ◮ Generate Y 1 , . . . , Y m Composition Method ◮ Return X = Y 1 + · · · + Y m 1. Generate a discrete RV J where P ( J = j ) = p j for 1 ≤ j ≤ m 2. Generate Y J from F Y J or f Y J Example: Binomial Distribution 3. Return X = Y J ◮ Suppose X D ∼ Binom( m , p ) D ◮ Then X = Y 1 + · · · + Y m where Y i ∼ Bernoulli( p ) Can lead to very fast generation algorithms ◮ Often part of a more complex algorithm (e.g., do something else if m large) 7 / 21 8 / 21

Composition Method: Example Alias Method for Discrete Random Variables R 3 Goal: Generate X with P ( X = x i ) = p i for 1 ≤ i ≤ n f X (x) Easy case: p 1 = p 2 = · · · = p n R 1 R 2 1/4 a b c ◮ Let p i = area of R i for 1 ≤ i ≤ 3 1 2 3 4 ◮ Then f X = p 1 f Y 1 + p 2 f Y 2 + p 3 f Y 3 , where � 2( x − a ) � 2( c − x ) if a ≤ x ≤ b ; if b ≤ x ≤ c ; 0 4 ( b − a ) 2 ( c − b ) 2 f Y 1 ( x ) = f Y 2 ( x ) = 0 otherwise 0 otherwise Algorithm 1. Generate U D ∼ Uniform(0 , 1) � � f Y 3 ( x ) = (1 / p 3 ) f X ( x ) − p 1 f Y 1 ( x ) − p 2 f Y 2 ( x ) 2. Return x J , where J = ⌈ nU ⌉ ◮ Easy to generate Y 1 and Y 2 (the “usual case”): ◮ Y 1 = max( U 1 , U 2 ) and Y 2 = min( U 1 , U 2 ) or use inversion ⌈ x ⌉ = smallest integer ≥ x (ceiling function) 9 / 21 10 / 21 Alias Method: General Case Alias Method: Another Example 3/7 9/21 1/2 1/2 i x i p i a i r i 7/21 1 x 1 1/6 x 2 0.5 2/7 1/3 1/3 5/21 x 2 2 x 2 1/2 x 2 1.0 x 2 1/6 1/6 x 3 3 x 3 1/3 x 3 1.0 1/7 3/21 x 1 x 1 x 2 x 3 x 1 x 2 x 3 Alias Algorithm D 1. Generate U 1 , U 2 ∼ Uniform(0 , 1) i x i p i a i r i 1 3/7 x 1 2. Set I = ⌈ nU 1 ⌉ 2 3/7 x 2 3. If U 2 ≤ r I return X = x I else return X = a I 3 x 3 1/7 11 / 21 12 / 21

Random Permutations: Fisher-Yates Shuffle Goal: Create random permutation of array x of length n Fisher-Yates Algorithm 1. Set i ← n Generation of Non-Uniform Random Numbers 2. Generate random integer N between 1 and i (e.g., as ⌈ iU ⌉ ) Acceptance-Rejection Convolution Method 3. Swap x [ N ] and x [ i ] Composition Method 4. Set i ← i − 1 Alias Method 5. If i = 1 then exit Random Permutations and Samples Non-Homogeneous Poisson Processes a b c d a d c b a d c b d a c b Q: What about this algorithm: For i = 1 to n : swap x [ i ] with random entry Other random objects: graphs, matrices, random vectors, ... 13 / 21 14 / 21 Sequential Bernoulli Sampling Reservoir Sampling ◮ Stream of items x 1 , x 2 , . . . , ◮ Stream of items x 1 , x 2 , . . . , ◮ Insert each item into sample with probability p ◮ Maintain a uniform random sample of size N w.o.replacement ◮ Expected sample size after n th item = np ◮ Fast implementation: generate skips directly (geometrically distributed) Reservoir Sampling: Upon arrival of x i : Bernoulli Sampling: ◮ if i ≤ N , then include x i in sample Generate U D ∼ Uniform(0 , 1) ◮ if i > N , then Set ∆ ← 1 + ⌊ ln U / ln(1 − p ) ⌋ [Geometric on { 1 , 2 , . . . } , HW #4] D ◮ Generate U ∼ Uniform(0 , 1) Set m ← ∆ ◮ If U ≤ N / i , then include x i in sample, Upon arrival of x i : replacing randomly chosen victim ◮ if i = m , then ◮ Include x i in sample ◮ Can generate skips directly using acceptance-rejection D ◮ Generate U ∼ Uniform(0 , 1) ◮ Set ∆ ← 1 + ⌊ ln U / ln(1 − p ) ⌋ [JS Vitter, ACM Trans. Math. Softw., 11(1): 37–57, 1985] ◮ set m ← m + ∆ 15 / 21 16 / 21

Reservoir Sampling: Simple Example ◮ Sample size = 1 ◮ S i = sample state after processing j th item (called i j ) ◮ accept item i 1 into S 1 with probability 1 Generation of Non-Uniform Random Numbers P ( i 1 ∈ S 1 ) = 1 Acceptance-Rejection Convolution Method ◮ accept item i 2 into S 2 with probability 1 / 2 Composition Method Alias Method P ( i 1 ∈ S 2 ) = P ( i 1 ∈ S 1 ) × P ( i 2 �∈ S 2 ) = (1)(1 / 2) = 1 / 2 Random Permutations and Samples P ( i 2 ∈ S 2 ) = 1 / 2 Non-Homogeneous Poisson Processes ◮ accept item i 3 into S 3 with probability 1 / 3 P ( i 1 ∈ S 3 ) = P ( i 1 ∈ S 2 ) × P ( i 3 �∈ S 3 ) = (1 / 2)(2 / 3) = 1 / 3 P ( i 2 ∈ S 3 ) = P ( i 2 ∈ S 2 ) × P ( i 3 �∈ S 3 ) = (1 / 2)(2 / 3) = 1 / 3 P ( i 3 ∈ S 2 ) = 1 / 3 17 / 21 18 / 21 Non-Homogeneous Poisson Processes: Thinning Thinning, Continued Suppose that λ ( t ) ≤ λ max Ordinary (Homogeneous) Poisson process for t ∈ [0 , τ ] ◮ Times between successive events are i.i.d. Exp( λ ) ◮ Idea: Generate “too many” ◮ Event probabilities for disjoint time intervals events according to a Poisson( λ max ) process, then are independent (Markov property) reject some of the events ◮ P (event in t + ∆ t ) ≈ λ ∆ t for ∆ t very small ◮ P ( T n > y + z | T n − 1 = y ) = e − λ z P ( n events in [ t , t + ∆ t ]) = Thinning Algorithm: ( λ ∆ t ) n e − λ ∆ t / n ! Non-Homogeneous Poisson process 1. Set T 0 = 0 , V = 0, and n = 0 ◮ Event probabilities for disjoint time intervals are independent 2. Set n ← n + 1 [Generate T n ] ◮ P (event in t + ∆ t ) ≈ λ ( t )∆ t for ∆ very small 3. Generate E D ∼ Exp( λ max ) and U D ∼ Uniform(0 , 1) [ λ ( t ) is sometimes called a hazard function] 4. Set V ← V + E [Proposed event time] � y + z λ ( u ) du ◮ P ( T n > y + z | T n − 1 = y ) = e − y 5. If U ≤ λ ( V ) /λ max then set T n = V else go to Step 3 ◮ Can capture, e.g., time-of-day effects 6. If T n < τ then go to Step 2 else exit 19 / 21 20 / 21

Thinning, Continued Ross’s Improvement ◮ Piecewise-constant upper-bounding to reduce rejections ◮ Correction for event times that span segments Many other approaches ◮ Inversion (see HW #4) ◮ Idiosyncratic methods: Exploit special properties of Poisson process 21 / 21

Recommend

More recommend