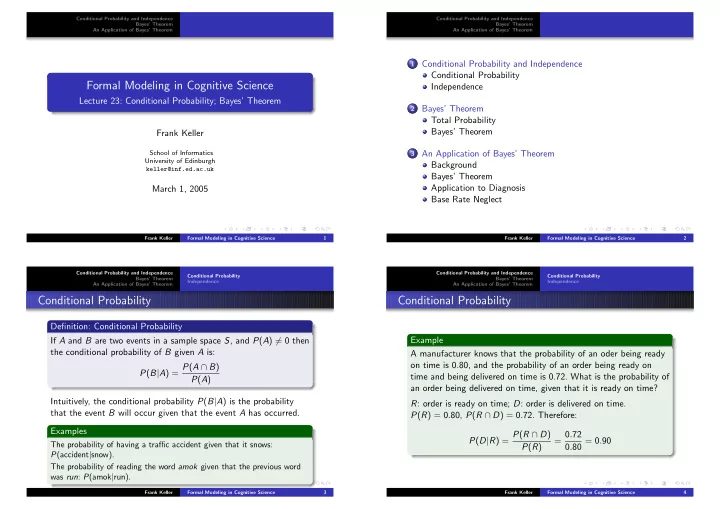

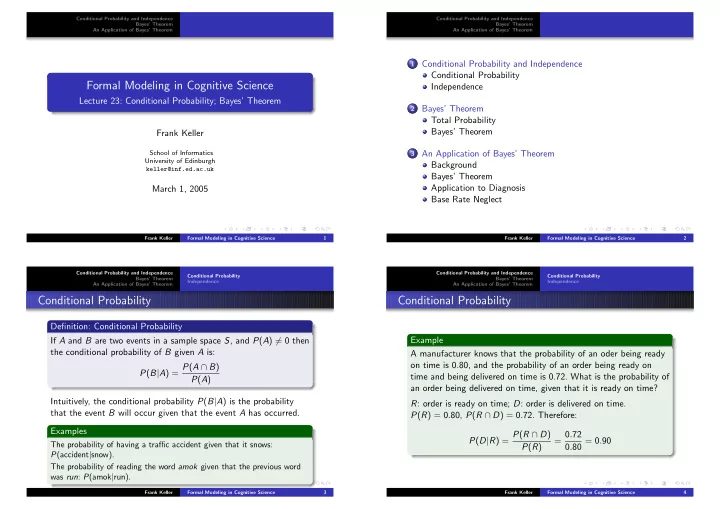

Formal Modeling in Cognitive Science

Lecture 23: Conditional Probability; Bayes’ Theorem

Frank Keller

School of Informatics, University of Edinburgh

keller@inf.ed.ac.uk

March 1, 2005

1. Conditional Probability and Independence
   - Conditional Probability
   - Independence
2. Bayes’ Theorem
   - Total Probability
   - Bayes’ Theorem
3. An Application of Bayes’ Theorem
   - Background
   - Application to Diagnosis
   - Base Rate Neglect

Conditional Probability

Definition: Conditional Probability

If $A$ and $B$ are two events in a sample space $S$, and $P(A) \neq 0$, then the conditional probability of $B$ given $A$ is:

$$P(B \mid A) = \frac{P(A \cap B)}{P(A)}$$

Intuitively, the conditional probability $P(B \mid A)$ is the probability that the event $B$ will occur given that the event $A$ has occurred.

Examples

The probability of having a traffic accident given that it snows: $P(\text{accident} \mid \text{snow})$.

The probability of reading the word "amok" given that the previous word was "run": $P(\text{amok} \mid \text{run})$.

Example

A manufacturer knows that the probability of an order being ready on time is 0.80, and the probability of an order being ready on time and being delivered on time is 0.72. What is the probability of an order being delivered on time, given that it is ready on time?

$R$: order is ready on time; $D$: order is delivered on time. $P(R) = 0.80$, $P(R \cap D) = 0.72$. Therefore:

$$P(D \mid R) = \frac{P(R \cap D)}{P(R)} = \frac{0.72}{0.80} = 0.90$$
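The delivery example can be checked numerically. The following is a minimal sketch, not part of the original slides; the function name `conditional_probability` and the variable names are illustrative assumptions.

```python
def conditional_probability(p_joint, p_condition):
    """Return P(B | A) = P(A and B) / P(A).

    p_joint is P(A and B); p_condition is P(A), which must be non-zero.
    (Hypothetical helper, introduced only for illustration.)
    """
    if p_condition == 0:
        raise ValueError("P(A) must be non-zero for P(B | A) to be defined")
    return p_joint / p_condition


# Values from the manufacturer example.
p_ready = 0.80                # P(R)
p_ready_and_delivered = 0.72  # P(R and D)

p_delivered_given_ready = conditional_probability(p_ready_and_delivered, p_ready)
print(round(p_delivered_given_ready, 2))
```

The guard on `p_condition` mirrors the requirement $P(A) \neq 0$ in the definition.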

Conditional Probability

From the definition of conditional probability, we obtain:

Theorem: Multiplication Rule

If $A$ and $B$ are two events in a sample space $S$, and $P(A) \neq 0$, then:

$$P(A \cap B) = P(A)\,P(B \mid A)$$

As $A \cap B = B \cap A$, it follows also that (provided $P(B) \neq 0$):

$$P(A \cap B) = P(B)\,P(A \mid B)$$

Example

Back to lateralization of language (see last lecture). Let $P(A) = 0.15$ be the probability of being left-handed, $P(B) = 0.05$ be the probability of language being right-lateralized, and $P(A \cap B) = 0.04$.

The probability of language being right-lateralized given that a person is left-handed:

$$P(B \mid A) = \frac{P(A \cap B)}{P(A)} = \frac{0.04}{0.15} = 0.267$$

The probability of being left-handed given that language is right-lateralized:

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{0.04}{0.05} = 0.80$$

Independence

Definition: Independent Events

Two events $A$ and $B$ are independent if and only if:

$$P(B \cap A) = P(A)\,P(B)$$

Intuitively, two events are independent if the occurrence or non-occurrence of either one does not affect the probability of the occurrence of the other.

Theorem: Complement of Independent Events

If $A$ and $B$ are independent, then $A$ and $\bar{B}$ are also independent.

This follows straightforwardly from set theory.

Example

A coin is flipped three times. Each of the eight outcomes is equally likely. $A$: head occurs on each of the first two flips, $B$: tail occurs on the third flip, $C$: exactly two tails occur in the three flips. Show that $A$ and $B$ are independent, $B$ and $C$ dependent.

$A = \{HHH, HHT\}$, so $P(A) = \frac{1}{4}$

$B = \{HHT, HTT, THT, TTT\}$, so $P(B) = \frac{1}{2}$

$C = \{HTT, THT, TTH\}$, so $P(C) = \frac{3}{8}$

$A \cap B = \{HHT\}$, so $P(A \cap B) = \frac{1}{8}$

$B \cap C = \{HTT, THT\}$, so $P(B \cap C) = \frac{1}{4}$

$P(A)\,P(B) = \frac{1}{4} \cdot \frac{1}{2} = \frac{1}{8} = P(A \cap B)$, hence $A$ and $B$ are independent.

$P(B)\,P(C) = \frac{1}{2} \cdot \frac{3}{8} = \frac{3}{16} \neq P(B \cap C)$, hence $B$ and $C$ are dependent.
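The coin-flip example can also be verified by enumerating the sample space. This is a minimal sketch under the example's assumption of eight equally likely outcomes; the helper names `prob` and `independent` are illustrative and not from the slides. Exact fractions are used so that the equality test in the definition of independence is not disturbed by floating-point rounding.

```python
from fractions import Fraction
from itertools import product

# All eight equally likely outcomes of three coin flips, e.g. "HHT".
outcomes = ["".join(flips) for flips in product("HT", repeat=3)]


def prob(event):
    """Probability of an event (a set of outcomes) under equal likelihood."""
    return Fraction(len(event), len(outcomes))


def independent(x, y):
    """Check the definition: P(x and y) == P(x) * P(y)."""
    return prob(x & y) == prob(x) * prob(y)


A = {o for o in outcomes if o[:2] == "HH"}      # heads on the first two flips
B = {o for o in outcomes if o[2] == "T"}        # tail on the third flip
C = {o for o in outcomes if o.count("T") == 2}  # exactly two tails

a_b_independent = independent(A, B)
b_c_independent = independent(B, C)

# Complement theorem: A and not-B should be independent as well.
not_B = set(outcomes) - B
a_notb_independent = independent(A, not_B)

print(a_b_independent, b_c_independent, a_notb_independent)
```

The last check illustrates the complement theorem on this one example; it is not a proof of it.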

Total Probability

Theorem: Rule of Total Probability

If events $B_1, B_2, \ldots, B_k$ constitute a partition of the sample space $S$ and $P(B_i) \neq 0$ for $i = 1, 2, \ldots, k$, then for any event $A$ in $S$:

$$P(A) = \sum_{i=1}^{k} P(B_i)\,P(A \mid B_i)$$

$B_1, B_2, \ldots, B_k$ form a partition of $S$ if they are pairwise mutually exclusive and if $B_1 \cup B_2 \cup \ldots \cup B_k = S$.

[Figure: a sample space partitioned into regions $B_1, \ldots, B_7$]

Example

In an experiment on human memory, participants have to memorize a set of words ($B_1$), numbers ($B_2$), and pictures ($B_3$). These occur in the experiment with the probabilities $P(B_1) = 0.5$, $P(B_2) = 0.4$, $P(B_3) = 0.1$.

Then participants have to recall the items (where $A$ is the recall event). The results show that $P(A \mid B_1) = 0.4$, $P(A \mid B_2) = 0.2$, $P(A \mid B_3) = 0.1$. Compute $P(A)$, the probability of recalling an item.

By the theorem of total probability:

$$
\begin{aligned}
P(A) &= \sum_{i=1}^{k} P(B_i)\,P(A \mid B_i) \\
     &= P(B_1)\,P(A \mid B_1) + P(B_2)\,P(A \mid B_2) + P(B_3)\,P(A \mid B_3) \\
     &= 0.5 \cdot 0.4 + 0.4 \cdot 0.2 + 0.1 \cdot 0.1 = 0.29
\end{aligned}
$$

Bayes’ Theorem

Bayes’ Theorem

If $B_1, B_2, \ldots, B_k$ are a partition of $S$ and $P(B_i) \neq 0$ for $i = 1, 2, \ldots, k$, then for any $A$ in $S$ such that $P(A) \neq 0$:

$$P(B_r \mid A) = \frac{P(B_r)\,P(A \mid B_r)}{\sum_{i=1}^{k} P(B_i)\,P(A \mid B_i)}$$

This can be simplified by renaming $B_r = B$ and by substituting $P(A) = \sum_{i=1}^{k} P(B_i)\,P(A \mid B_i)$ (theorem of total probability):

Bayes’ Theorem (simplified)

$$P(B \mid A) = \frac{P(B)\,P(A \mid B)}{P(A)}$$

Example

Reconsider the memory example. What is the probability that an item that is correctly recalled ($A$) is a picture ($B_3$)?

By Bayes’ theorem:

$$P(B_3 \mid A) = \frac{P(B_3)\,P(A \mid B_3)}{\sum_{i=1}^{k} P(B_i)\,P(A \mid B_i)} = \frac{0.1 \cdot 0.1}{0.29} = 0.0345$$

The process of computing $P(B \mid A)$ from $P(A \mid B)$ is sometimes called Bayesian inversion.
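The memory example combines the rule of total probability with Bayes’ theorem, and both steps can be reproduced in a few lines. This is a minimal sketch; the function names `total_probability` and `bayes_posterior`, and the list-based representation of the partition, are assumptions made for illustration.

```python
def total_probability(priors, likelihoods):
    """P(A) = sum over i of P(B_i) * P(A | B_i) for a partition B_1..B_k."""
    return sum(p * l for p, l in zip(priors, likelihoods))


def bayes_posterior(priors, likelihoods, r):
    """P(B_r | A) by Bayes' theorem; r is a zero-based index into the partition."""
    evidence = total_probability(priors, likelihoods)
    if evidence == 0:
        raise ValueError("P(A) must be non-zero")
    return priors[r] * likelihoods[r] / evidence


# Memory experiment: words (B1), numbers (B2), pictures (B3).
priors = [0.5, 0.4, 0.1]       # P(B1), P(B2), P(B3)
likelihoods = [0.4, 0.2, 0.1]  # P(A | B1), P(A | B2), P(A | B3)

p_recall = total_probability(priors, likelihoods)
p_picture_given_recall = bayes_posterior(priors, likelihoods, 2)

print(round(p_recall, 2), round(p_picture_given_recall, 4))
```

Summing `bayes_posterior` over all three indices should give 1 (up to floating-point rounding), which is a quick sanity check that the priors describe a partition.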
