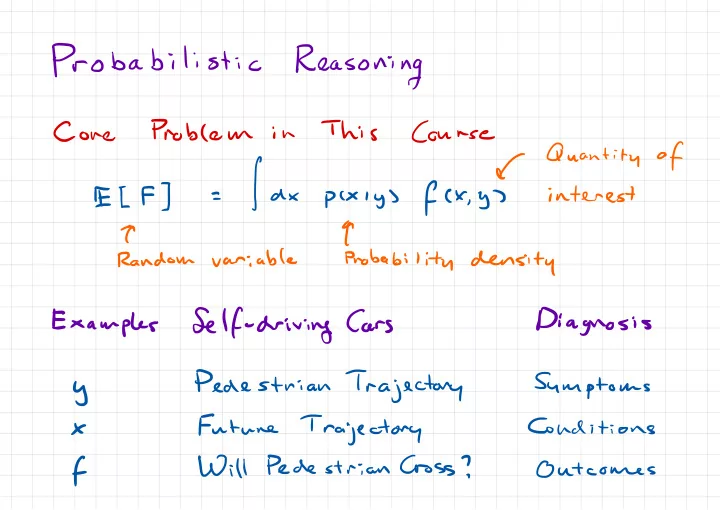

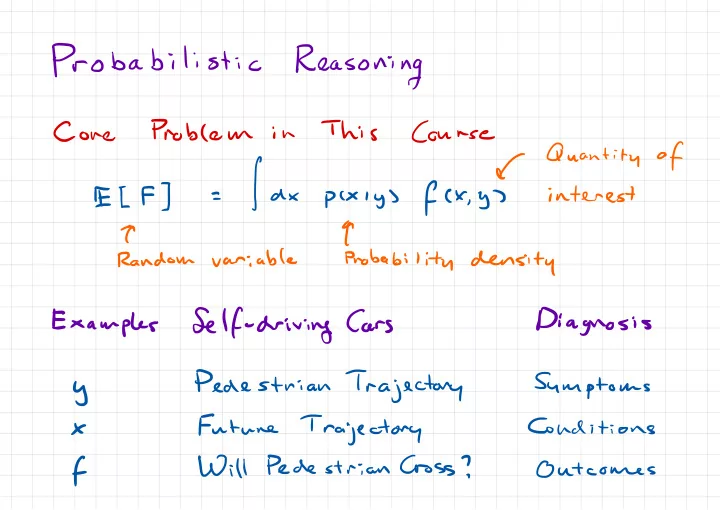

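Probabilistic Reasoning: Core Problem in This Course

Quantity of interest:

$$\mathbb{E}_{p(x \mid y)}[f(x, y)] = \int dx \, p(x \mid y) \, f(x, y)$$

Here $x$ and $y$ are random variables and $p(x \mid y)$ is a probability density.

Examples:

| | Self-driving cars | Diagnosis |
|---|---|---|
| $y$ | Pedestrian trajectory | Symptoms |
| $x$ | Future trajectory | Conditions |
| $f$ | Will the pedestrian cross? | Outcomes |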

Random Variables

Random variable: a variable $X$ with a stochastic outcome $X = x$. Outcomes are mutually exclusive, e.g. $x \in \{1, 2, 3, 4, 5, 6\}$.

Event: a set of outcomes, e.g. $(X = 4) \to \{4\}$ and $(X \ge 3) \to \{3, 4, 5, 6\}$.

Probability: the chance that an event occurs, e.g. $P(X = 4) = 1/6$ and $P(X \ge 3) = 4/6$.

Distributions

A distribution maps outcomes to probabilities:

$$P(X = x) = 1/6, \quad x \in \{1, 2, 3, 4, 5, 6\}$$

Commonly used (or abused) shorthand: $P(x) = P(X = x)$.

Joint and Conditional Probabilities

Events: $A : X \ge 4$ with $P(A) = 3/6$, and $B : X \le 5$ with $P(B) = 5/6$.

Joint probability:

$$P(A, B) = P(A \cap B) = 2/6$$

Conditional probability:

$$P(A \mid B) = \frac{P(A, B)}{P(B)} = \frac{2}{5}, \qquad P(B \mid A) = \frac{P(A, B)}{P(A)} = \frac{2}{3}$$
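The numbers on this slide can be checked by enumerating the outcomes of a fair six-sided die. The sketch below assumes the events are A: X ≥ 4 and B: X ≤ 5, which is the reading that matches the slide's values of 3/6, 5/6, 2/6, 2/5 and 2/3. The helper name `prob` is illustrative.

```python
from fractions import Fraction

OUTCOMES = range(1, 7)  # fair six-sided die, each outcome has probability 1/6


def prob(event):
    """Probability of an event, given as a predicate on outcomes."""
    favourable = sum(1 for x in OUTCOMES if event(x))
    return Fraction(favourable, len(OUTCOMES))


def event_a(x):
    return x >= 4  # assumed event A


def event_b(x):
    return x <= 5  # assumed event B


p_a = prob(event_a)
p_b = prob(event_b)
p_ab = prob(lambda x: event_a(x) and event_b(x))  # joint P(A, B)

p_a_given_b = p_ab / p_b  # conditional P(A | B)
p_b_given_a = p_ab / p_a  # conditional P(B | A)

print(p_a_given_b, p_b_given_a)
```

Exact fractions avoid floating-point noise, so the results compare directly with the values on the slide.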

Sum Rule

General case:

$$P(A \cup B) = P(A) + P(B) - P(A, B)$$

Example: men with short hair. With $A$: is a man, $P(A) = 0.5$; $B$: short hair, $P(B) = 0.6$; and $P(A, B) = 0.4$, the probability of either a man or short hair is $0.5 + 0.6 - 0.4 = 0.7$.

Corollaries:

- Event form: $P(A) = \sum_{x} P(A, X = x)$
- Random variable form (most common): $P(Y = y) = \sum_{x} P(Y = y, X = x)$
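A small numeric sketch of the sum rule and of marginalization, using the short-hair example. It assumes the labelling P(man) = 0.5, P(short hair) = 0.6 and P(man, short hair) = 0.4. The remaining three entries of the joint table are not on the slide; they are the values those three numbers force.

```python
from fractions import Fraction

# Joint distribution over (is_man, short_hair).
# Only the (True, True) entry and the two marginals come from the example;
# the other entries are derived so that the marginals are 0.5 and 0.6.
joint = {
    (True, True): Fraction(4, 10),
    (True, False): Fraction(1, 10),
    (False, True): Fraction(2, 10),
    (False, False): Fraction(3, 10),
}

# Marginalization: P(A) = sum over b of P(A, B = b), and likewise for B.
p_man = sum(p for (man, _), p in joint.items() if man)
p_short = sum(p for (_, short), p in joint.items() if short)

# General sum rule: P(A or B) = P(A) + P(B) - P(A, B).
p_both = joint[(True, True)]
p_either = p_man + p_short - p_both

print(p_man, p_short, p_either)
```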

Bayes' Rule

Product rule (from the definition of conditional probability):

$$P(A, B) = P(A \mid B) \, P(B) = P(B \mid A) \, P(A)$$

Bayes' rule:

$$P(A \mid B) = \frac{P(A, B)}{P(B)} \;\; \text{(definition)} \;\; = \frac{P(B \mid A) \, P(A)}{P(B)} \;\; \text{(product rule)}$$

Example

$A$: you have a rare disease. $P(A) = 0.0001$, $P(\neg A) = 0.9999$.

$B$: the test for the disease is positive. $P(B \mid A) = 0.99$, $P(B \mid \neg A) = 0.01$.

Question: what is $P(A \mid B)$?

$$P(B \mid A) \, P(A) = 0.99 \cdot 0.0001 = 0.000099$$

$$P(B) = P(B \mid A) \, P(A) + P(B \mid \neg A) \, P(\neg A) = 0.000099 + 0.01 \cdot 0.9999 \approx 0.0101$$

$$P(A \mid B) = \frac{P(B \mid A) \, P(A)}{P(B)} = \frac{0.000099}{0.0101} \approx 0.01$$
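The same calculation as a short script, using the values from the example: prior 0.0001, true positive rate 0.99, false positive rate 0.01. Variable names are illustrative.

```python
p_a = 0.0001            # P(A): prior probability of having the disease
p_b_given_a = 0.99      # P(B | A): test is positive given disease
p_b_given_not_a = 0.01  # P(B | not A): test is positive given no disease

# Sum rule over the two cases A and not A gives the evidence P(B).
p_b = p_b_given_a * p_a + p_b_given_not_a * (1.0 - p_a)

# Bayes' rule.
p_a_given_b = p_b_given_a * p_a / p_b

print(f"P(B) = {p_b:.6f}, P(A | B) = {p_a_given_b:.4f}")
```

Even with a 99% accurate test, the posterior is only about 1%, because the false positives from the large healthy population dominate the evidence term.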

Probability Densities

Suppose that $X$ is a continuous variable; then $P(X = x)$ is $0$ for any outcome $x$. For example, with $X \sim \text{Normal}(0, 1)$:

$$P(X = 1) = 0, \qquad P(-1 \le X \le 3) > 0$$

Define the density function (informal):

$$p_X(x) = \lim_{\delta \to 0} \frac{P(x - \delta \le X < x + \delta)}{2 \delta}$$
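A numeric sketch of the informal limit definition for a standard normal variable. It compares the ratio P(x − δ ≤ X < x + δ) / (2δ) with the closed-form normal density as δ shrinks. The function names are illustrative, and the CDF is written in terms of `math.erf`.

```python
import math


def normal_cdf(x):
    """CDF of Normal(0, 1)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def normal_pdf(x):
    """Closed-form density of Normal(0, 1)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def density_estimate(x, delta):
    """Probability of the interval [x - delta, x + delta) divided by its width."""
    return (normal_cdf(x + delta) - normal_cdf(x - delta)) / (2.0 * delta)


x = 1.0
for delta in (1.0, 0.1, 0.001):
    print(delta, density_estimate(x, delta), normal_pdf(x))
```

The probability of the interval goes to zero as δ shrinks, but the ratio converges to the density.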

Probability Space $(\Omega, \mathcal{F}, P)$

- $\Omega$: sample space (set of outcomes)
- $\mathcal{F}$: set of events (set of possible subsets of outcomes)
- $P$: probability measure (maps events to probabilities), $P : \mathcal{F} \to [0, 1]$

$P$ satisfies:

- $P(\emptyset) = 0$ (the empty set has probability 0)
- $P(\Omega) = 1$ (the probabilities of all outcomes in the sample space sum to 1)
- $P\left(\bigcup_i E_i\right) = \sum_i P(E_i)$ when the $E_i$ are disjoint (sum rule)

Measures

Examples (of measures that are not probability measures):

- Lebesgue measure: $\mu([a, b]) = b - a$ (width of the interval)
- Counting measure: $\mu(\{x_i\}_{i=1}^{n}) = n$ (number of elements)
- Product measure: $\mu(E_1 \times E_2) := \mu_1(E_1) \, \mu_2(E_2)$ (on the Cartesian product)

Definition of Probability Measure

$$P(A) = \int_{x \in A} \mu(dx) \, p_X(x)$$

Here $\mu(dx)$ is the differential of the reference measure, and $p_X$ is the density of $X$ w.r.t. $\mu$ (the Radon-Nikodym derivative).

Machine learning notation:

$$P(A) = \int_A dx \, p(x)$$

This implicitly assumes a reference measure $\mu$, and $p(x)$ implicitly refers to the density of $X$ w.r.t. $\mu$.

Expected Values

Let $X$ be a random variable with density $p(x)$, written $X \sim p(x)$.

$$\mathbb{E}[X] := \int dx \, p(x) \, x$$

Conditional expectation:

$$\mathbb{E}[f(X, Y) \mid Y = y] := \int dx \, p(x \mid y) \, f(x, y)$$

Expectation w.r.t. a different distribution (machine learning notation):

$$\mathbb{E}_{q(x)}[f(x)] = \int dx \, q(x) \, f(x)$$

Central Problem in This Course

Quantity of interest: $\mathbb{E}_{p(x \mid y)}[f(x, y)]$, where $y$ collects the things we know and $x$ the things we don't know.

Examples:

| | Self-driving cars | Diagnosis |
|---|---|---|
| $y$ | Past trajectory | Symptoms |
| $x$ | Future trajectory | Condition |
| $f$ | Will the pedestrian cross? | Treatment outcome |

Probabilistic Models as Stochastic Simulators

1. Assumptions about likely destinations: $z_{\text{goal}} \sim p(z_{\text{goal}})$
2. Pedestrian simulation: $z_{1:t} \sim p(z_{1:t} \mid z_0, z_{\text{goal}})$
3. Inference about trajectories: $z_{t+1:T} \sim p(z_{t+1:T} \mid z_{0:t})$

But: General Bayesian Inference is Hard

The predictive distribution is

$$p(z_{t+1:T} \mid z_{0:t}) = \frac{p(z_{1:T} \mid z_0)}{p(z_{1:t} \mid z_0)}$$

where the denominator is

$$p(z_{1:t} \mid z_0) = \int dz_g \, p(z_{1:t} \mid z_0, z_g) \, p(z_g)$$

We need to integrate over all possible goal locations $z_g$, which is almost always intractable.

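Making Inference Tractable

1. Conditional Independence
2. Conjugacy
3. Approximate Inference

Monte Carlo methods:

$$\mathbb{E}_{p(x \mid y)}[f(x, y)] \approx \frac{1}{S} \sum_{s=1}^{S} f(x^s, y), \qquad x^s \sim p(x \mid y)$$

Variational methods:

$$\phi^* = \operatorname*{argmin}_{\phi} \, D\big(q(x; \phi) \,\|\, p(x \mid y)\big)$$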
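A minimal sketch of the Monte Carlo estimator: draw samples from the conditional distribution and average the function values. The sampler here is a hypothetical stand-in, a Beta(3, 2) distribution chosen only because its mean (0.6) is known in closed form. In practice, drawing samples from p(x | y) is itself the hard part.

```python
import random


def monte_carlo_expectation(f, sampler, num_samples):
    """Estimate E[f(x)] as the average of f over samples drawn by sampler()."""
    total = 0.0
    for _ in range(num_samples):
        total += f(sampler())
    return total / num_samples


rng = random.Random(0)  # fixed seed so the run is repeatable


def sample_posterior():
    # Hypothetical stand-in for a draw x ~ p(x | y): Beta(3, 2), mean 3 / 5.
    return rng.betavariate(3.0, 2.0)


estimate = monte_carlo_expectation(lambda x: x, sample_posterior, 100_000)
print(estimate)  # close to the exact mean 0.6
```

The error of the estimate shrinks like 1 / sqrt(S), independent of the dimension of x.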

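Conditional Independence

Example: Clustering

$$\mu_k, \Sigma_k \sim p(\mu, \Sigma), \quad k = 1, \ldots, K$$

$$z_n \sim p(z), \quad n = 1, \ldots, N$$

$$y_n \mid z_n = k \sim \mathcal{N}(\mu_k, \Sigma_k)$$

Intractable:

$$p(y) = \int d\mu \, d\Sigma \, dz \; p(y, z, \mu, \Sigma)$$

with $\mu$ of size $K \times 2$, $\Sigma$ of size $K \times 2 \times 2$, and $z$ of size $K \times N$.

Tractable:

$$p(z \mid y, \mu, \Sigma) = \prod_{n=1}^{N} p(z_n \mid y_n, \mu, \Sigma)$$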

Conjugacy

Example: Biased Coins

$$x \sim \text{Beta}(\alpha, \beta), \qquad y_n \sim \text{Bernoulli}(x)$$

Bayes' rule, writing $y_{1:N}$ for $y_1, \ldots, y_N$:

$$p(x \mid y_{1:N}) = \frac{p(y_{1:N} \mid x) \, p(x)}{p(y_{1:N})}$$

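Prior:

$$p(x) = \text{Beta}(x; \alpha, \beta) = \frac{x^{\alpha - 1} (1 - x)^{\beta - 1}}{B(\alpha, \beta)}, \qquad B(\alpha, \beta) = \frac{\Gamma(\alpha) \, \Gamma(\beta)}{\Gamma(\alpha + \beta)}$$

Likelihood (the $y_n$ are i.i.d.):

$$p(y_{1:N} \mid x) = \prod_{n=1}^{N} p(y_n \mid x), \qquad p(y_n \mid x) = x^{y_n} (1 - x)^{1 - y_n} = \begin{cases} x & y_n = 1 \\ 1 - x & y_n = 0 \end{cases}$$

The prior and the likelihood have the same dependence on $x$.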

Conjugacy

$$p(x \mid y_{1:N}) = \frac{p(y_{1:N}, x)}{p(y_{1:N})} \propto p(y_{1:N}, x)$$

$$p(y_{1:N}, x) = p(y_{1:N} \mid x) \, p(x) = \left[ \prod_{n=1}^{N} x^{y_n} (1 - x)^{1 - y_n} \right] \frac{x^{\alpha - 1} (1 - x)^{\beta - 1}}{B(\alpha, \beta)}$$

$$= \frac{x^{\left(\sum_n y_n\right) + \alpha - 1} \, (1 - x)^{\left(\sum_n (1 - y_n)\right) + \beta - 1}}{B(\alpha, \beta)}$$

Here $\sum_n y_n$ is the number of heads (out of $N$) and $\sum_n (1 - y_n)$ is the number of tails (out of $N$). Defining $\tilde{\alpha} = \sum_n y_n + \alpha$ and $\tilde{\beta} = \sum_n (1 - y_n) + \beta$:

$$p(y_{1:N}, x) = \frac{x^{\tilde{\alpha} - 1} (1 - x)^{\tilde{\beta} - 1}}{B(\alpha, \beta)} = \text{Beta}(x; \tilde{\alpha}, \tilde{\beta}) \, \frac{B(\tilde{\alpha}, \tilde{\beta})}{B(\alpha, \beta)}$$

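Conjugacy

$$p(y_{1:N}, x) = \underbrace{\text{Beta}(x; \tilde{\alpha}, \tilde{\beta})}_{\text{depends on } x} \; \underbrace{\frac{B(\tilde{\alpha}, \tilde{\beta})}{B(\alpha, \beta)}}_{\text{does not depend on } x} = p(x \mid y_{1:N}) \, p(y_{1:N})$$

Posterior:

$$p(x \mid y_{1:N}) = \text{Beta}(x; \tilde{\alpha}, \tilde{\beta}), \qquad \tilde{\alpha} = \alpha + N_{\text{heads}}, \quad \tilde{\beta} = \beta + N_{\text{tails}}$$

Marginal likelihood:

$$p(y_{1:N}) = \frac{B(\tilde{\alpha}, \tilde{\beta})}{B(\alpha, \beta)}$$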
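The conjugate update reduces to counting heads and tails. The sketch below computes the posterior parameters and the marginal likelihood B(α̃, β̃) / B(α, β) for the Beta-Bernoulli model above, working in log space via `math.lgamma`. The function names and the example data (a uniform Beta(1, 1) prior and four flips) are illustrative assumptions.

```python
import math


def log_beta(a, b):
    """log B(a, b) = log Gamma(a) + log Gamma(b) - log Gamma(a + b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def beta_bernoulli_update(alpha, beta, ys):
    """Return posterior (alpha, beta) and the marginal likelihood of ys.

    ys is a sequence of coin flips encoded as 1 (heads) or 0 (tails).
    """
    heads = sum(ys)
    tails = len(ys) - heads
    alpha_post = alpha + heads
    beta_post = beta + tails
    log_marginal = log_beta(alpha_post, beta_post) - log_beta(alpha, beta)
    return alpha_post, beta_post, math.exp(log_marginal)


# Illustrative data: uniform prior, three heads and one tail.
alpha_post, beta_post, marginal = beta_bernoulli_update(1.0, 1.0, [1, 1, 0, 1])
print(alpha_post, beta_post, marginal)
```

For this data the posterior is Beta(4, 2), and the marginal likelihood equals the integral of x³(1 − x) over [0, 1], which is 1/20.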

Predictive Distribution

$$p(y_{N+1} \mid y_{1:N}) = \int dx \, p(y_{N+1}, x \mid y_{1:N}) = \int dx \, p(y_{N+1} \mid x) \, p(x \mid y_{1:N}) = \mathbb{E}_{p(x \mid y_{1:N})}\big[p(y_{N+1} \mid x)\big]$$

Example: Weighted Coin (Exercise)
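As a worked instance, assume the biased-coin model with posterior $\text{Beta}(x; \tilde{\alpha}, \tilde{\beta})$, where $\tilde{\alpha}$ is $\alpha$ plus the number of heads and $\tilde{\beta}$ is $\beta$ plus the number of tails. Since $p(y_{N+1} = 1 \mid x) = x$, the predictive probability of heads is the posterior mean:

$$p(y_{N+1} = 1 \mid y_{1:N}) = \int dx \; x \; \text{Beta}(x; \tilde{\alpha}, \tilde{\beta}) = \frac{B(\tilde{\alpha} + 1, \tilde{\beta})}{B(\tilde{\alpha}, \tilde{\beta})} = \frac{\tilde{\alpha}}{\tilde{\alpha} + \tilde{\beta}}$$

The last step uses $\Gamma(a + 1) = a \, \Gamma(a)$. With a uniform $\text{Beta}(1, 1)$ prior and three heads out of four flips, this gives $4 / 6 \approx 0.67$ rather than the raw frequency $0.75$.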

Approximate Inference
