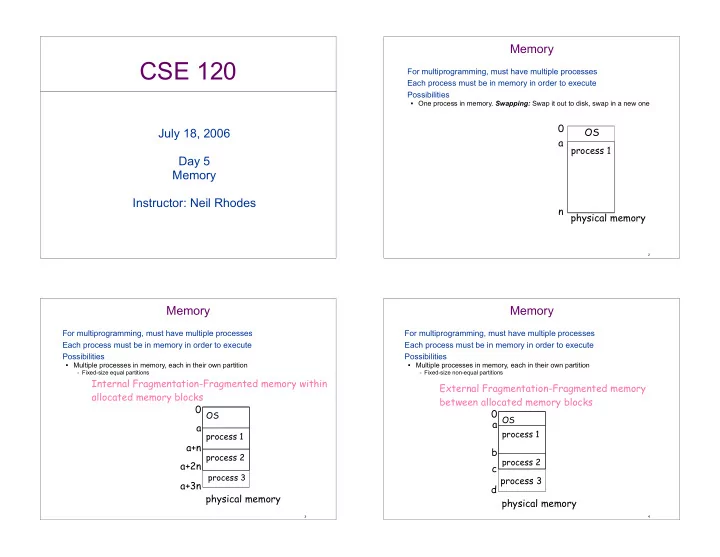

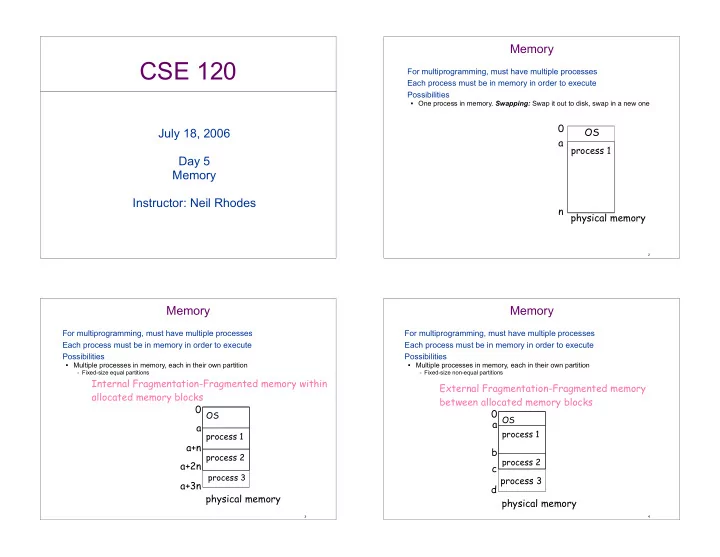

CSE 120, Day 5: Memory
Instructor: Neil Rhodes
July 18, 2006

Memory

- For multiprogramming, must have multiple processes
- Each process must be in memory in order to execute
- Possibilities
  - One process in memory. Swapping: swap it out to disk, swap in a new one
  - Multiple processes in memory, each in their own partition
    - Fixed-size equal partitions
    - Fixed-size non-equal partitions

[Figure: physical memory with the OS at address 0. One process: a single process starts at address a; memory ends at n. Equal partitions: processes 1, 2, and 3 start at a, a+n, and a+2n (the last partition ends at a+3n). Non-equal partitions: processes 1, 2, and 3 start at a, b, and c (ending at d).]

- Internal fragmentation: fragmented memory within allocated memory blocks
- External fragmentation: fragmented memory between allocated memory blocks

Placement Algorithms

Need to allocate a memory block of size n.

- First-fit
  - Find the first block ≥ n
- Best-fit
  - Find the smallest open memory block (≥ n)
- Worst-fit
  - Find the largest open memory block (≥ n)
- Next-fit
  - Starting with the last open block considered, find the next block ≥ n
- Buddy system
  - Round n up to a power of 2. Free memory is kept in blocks whose sizes are powers of 2. If there is no block of size n, split a larger chunk in half repeatedly until a block of size n is found.
  - When a block is freed, if its buddy (the other same-size half of the chunk it was split from) is free, merge them together (recursively)

Address Binding

When to map instructions and data references to actual memory locations:

- Link time
  - Absolute addressing. Works if there is a well-known address at which user programs are loaded
- Load time
  - The linker-loader relocates code as it loads it into memory
    - Change all data references to take into account where the code was loaded
  - Position-independent code
    - On Mac OS 9, subroutine calls are PC-relative; data references are relative to the A5 register. The linker sets the A5 register at load time
- Execution time
  - Code uses logical addresses, which are converted to physical addresses
  - Hardware: relocation register

[Figure: the relocation register holds a; each logical address (0 to n) from process 1 is added to a, so process 1 occupies physical addresses a to a+n in memory.]

Process Protection

How to protect the memory of a process (or the OS) from other running processes?

- Hardware solution: base (aka relocation) and limit registers
  - Disadvantage: can't easily share memory between processes
- Software:
  - Tagged data (e.g., Smalltalk, Lisp). No raw pointers
  - Virtual machine

[Figure: each logical address is compared against the limit register (n). If it is less than n, the relocation register (a) is added to form the physical address in memory; otherwise an exception is raised.]

Within a Process's Address Space

[Figure: a process's address space from 0 to n, containing text (code), data, heap, and stack.]

Fragmentation

- External fragmentation
  - Space wasted between allocated memory blocks
- Internal fragmentation
  - Space wasted within allocated memory blocks
- Solutions to external fragmentation
  - Compaction
    - Move blocks around dynamically to make free space contiguous
    - Only possible if relocation is dynamic at execution time
  - Non-contiguous allocation
    - Don't require all of the memory of a process to be contiguous
      - Segmentation
      - Paging
- Solutions to internal fragmentation
  - Don't use fixed-size blocks

Segmentation

Provides multiple address spaces:

- Handy for separate data/stack/code
- Good for sharing code/data between processes
- Easy way to specify protection
  - No execute on stack!
- Address is (segment_number, offset within segment)
- Need segment base/segment limit registers (an array of registers)
- Programmer (or compiler) must specify different segments

[Figure: a process with a single segment (text, data, heap, and stack in one address space from 0 to n) compared with a process with 4 segments (Segments 0 to 3), each starting at address 0 and holding one of text, data, heap, or stack.]

Pros
- Easy to share segments between processes
- Segment-specific protection

Cons
- Programmer/compiler/linker must be aware of different segments
- Still must worry about external fragmentation: each segment must have contiguous physical memory

Segmentation Example

8-bit address:
- 2 bits segment
- 6 bits offset

[Figure: segment table and the placement of the four segments (text, data, heap, stack) in physical memory.]

Example code:
- 0x04: load 0x19, r0
- 0x06: push r0, sp
- 0x08: add sp, #2

Swapping

Swapping moves the memory of a segment (or an entire process) out to disk.
- A process can't run without its memory
- On context switch in, the memory is loaded back from disk
- Slow, since there can be lots of data

Paging

Map a contiguous virtual address space to a non-contiguous physical address space.
- Idea
  - Break the virtual address space into fixed-size pages (aka virtual pages)
  - Map those fixed-size pages into same-sized physical frames (aka physical pages)

[Figure: a process's virtual pages a, b, c, and d (at 0, k, 2k, 3k) mapped to non-contiguous frames in a physical memory of size 8k.]

Implementing Paging

- Page table
  - Index into the table is the page number
  - Each entry is a page table entry:
    - Frame number
    - Valid/invalid bit
    - Permissions
    - …
- Usually done in hardware (Memory Management Unit, MMU)
- Page-Table Base Register and Page-Table Limit Register
  - Must be saved/restored on a context switch
- Free list of frames
- Page table per process (or per segment)

[Figure: a process with a single segment (text, data, heap, stack), its page table (each entry holds a frame number and permissions such as rx or rw; entries for unused pages are marked invalid), and physical memory in which the text, heap, and stack pages sit in scattered frames.]

Address translation:

    convertToPhysicalAddress(virtualAddress) {
        pageNumber = upper bits of virtualAddress
        if pageNumber out of range, generate exception (aka fault)
        if pageTable[pageNumber].invalid, generate page fault
        offset = lower bits of virtualAddress
        upper bits of physicalAddress = pageTable[pageNumber].frameNumber
        lower bits of physicalAddress = offset
        return physicalAddress
    }

Advantages
- No external fragmentation
  - Unallocated memory can be allocated to any process
- Transparent to programmer/linker/compiler
- Can put individual frames out to disk (virtual memory)

Disadvantages
- Translating from a virtual to a physical address requires an additional memory access
- Unused pages in a process still require page table entries
- Internal fragmentation
  - On average, 1/2 frame size per segment

Relationship between physical and virtual addresses

- Virtual address same size as physical address
- Virtual address smaller than physical address
- Virtual address bigger than physical address

[Figure: in each case, a virtual address (page # | offset) translates to a physical address (frame # | offset). The offset is copied unchanged, while the page # and frame # fields differ in width when the address sizes differ.]

Dealing with holes in virtual address space

With a large address space (and small pages), the page table can be very large.
- 32-bit virtual addresses, 4KB pages: 2^20 page table entries per process

Multilevel page table
- Virtual address broken up into multiple page numbers: PT1 and PT2
- PT1 is used as an index into the top-level page table to find the second-level page table
- PT2 is used as an index into the second-level page table to find the page table entry
- If parts of the address space are unused, the top-level page table can mark their second-level page tables as not present

[Figure: a process with pages a, b, and d and large unused regions; top-level entries for the unused regions point to no second-level table.]

Combination of segmentation/paging
- Top n bits are the segment number
  - Except on some machines, where the current segment is stored in a separate register, not in the address itself
- Next m bits are the page number
- Remainder is the offset

[Figure: Segment 0 (size 500) and Segment 1 (size 200), each with its own page table mapping its pages to frames in physical memory.]

Speed

Imagine a memory access takes 100ns. How long does a memory access take using paging?
- 100ns to access the page table entry
- 100ns to access the physical memory address

Using virtual addresses slows memory access down by a factor of 2.

Solution
- Use a cache
  - Definition: a copy, on a faster medium, of data that resides elsewhere
  - Examples:
    - Caching contents of files in memory
    - Caching contents of memory in an off-chip (Level 2) cache
    - Caching contents of the off-chip cache in an on-chip (Level 1) cache
    - Caching contents of page-table entries
  - Kinds of cache:
    - Write-back: changes to data are made in the cache and written to permanent storage later
    - Write-through: changes to data are made in both the cache and permanent storage
