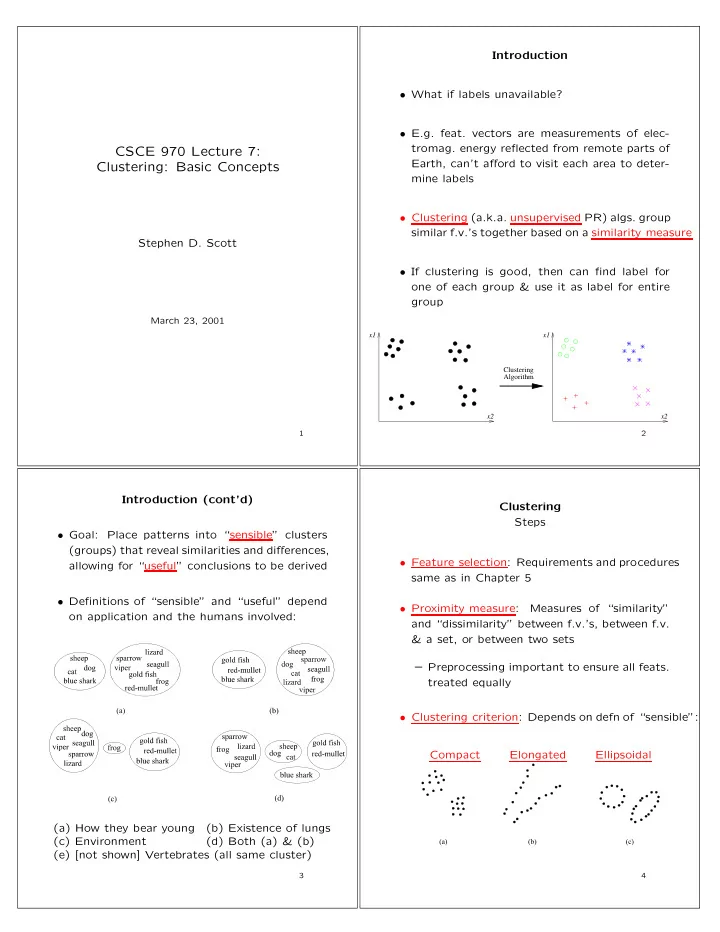

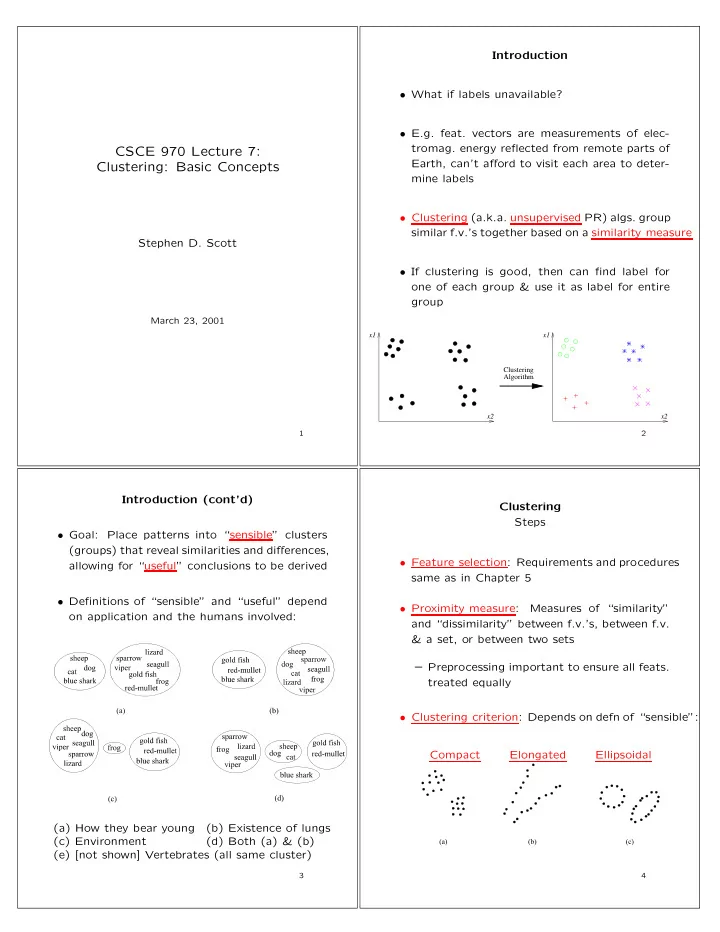

Introduction • What if labels unavailable? • E.g. feat. vectors are measurements of elec- tromag. energy reflected from remote parts of CSCE 970 Lecture 7: Earth, can’t afford to visit each area to deter- Clustering: Basic Concepts mine labels • Clustering (a.k.a. unsupervised PR) algs. group similar f.v.’s together based on a similarity measure Stephen D. Scott • If clustering is good, then can find label for one of each group & use it as label for entire group March 23, 2001 x1 x1 Clustering Algorithm x2 x2 1 2 Introduction (cont’d) Clustering Steps • Goal: Place patterns into “sensible” clusters (groups) that reveal similarities and differences, • Feature selection: Requirements and procedures allowing for “useful” conclusions to be derived same as in Chapter 5 • Definitions of “sensible” and “useful” depend • Proximity measure: Measures of “similarity” on application and the humans involved: and “dissimilarity” between f.v.’s, between f.v. & a set, or between two sets – Preprocessing important to ensure all feats. treated equally • Clustering criterion: Depends on defn of “sensible”: Compact Elongated Ellipsoidal (a) How they bear young (b) Existence of lungs (c) Environment (d) Both (a) & (b) (e) [not shown] Vertebrates (all same cluster) 3 4

Clustering Steps (cont’d) • Verify clustering tendency (Sec. 16.6) Clustering • Clustering algorithm: Chapters 12–15 Applications • Cluster validation: Verify that choices of alg. • Data reduction (compression): Represent each params. & cluster shape match data’s cluster- cluster with single item ing structure (Chapt. 16) • Interpretation: The expert interprets results • Suggest hypotheses about nature of data with other information • Warning: Each step is subjective and depends • Test hypotheses about data, e.g. that certain on expert’s biases! feats. are correlated while others are indepen- dent • Prediction based on groups: e.g. Slide 7.2 5 6 Clustering Clustering Cluster Types Types of Features • Start with X = { x 1 , . . . , x N } and place into m • Nominal: Name only, no quantitative compar- clusters C 1 , . . . , C m isons possible, e.g. { male, female } • Type 1: Hard (crisp) • Ordinal: Can be meaningfully ordered, but no quantitative meaning on the differences, e.g. m � C i � = ∅ , i = 1 , . . . , m C i = X { 4, 3, 2, 1 } to represent { excellent, very good, i =1 good, poor } C i ∩ C j = ∅ , i � = j, i, j ∈ { 1 , . . . , m } – F.v.’s in C i “more similar” to others in C i • Interval-scaled: Difference is meaningful, ratio than those in C j , j � = i is not, e.g. temperature measures on Celsius scale • Type 2: Fuzzy: C j has membership function µ j : X → [0 , 1] s.t. • Ratio-scaled: Difference and ratio both mean- m ingful, e.g. weight � µ j ( x i ) = 1 , i ∈ { 1 , . . . , N } j =1 N • Each type possesses the properties of the pre- � 0 < µ j ( x i ) < N, j ∈ { 1 , . . . , m } ceding types i =1 7 8

Proximity Measures Proximity Measures Definitions Definitions (cont’d) • Dissimilarity measure is func. d : X × X → ℜ s.t. • Can also define proximity measures between ∃ d 0 ∈ ℜ : −∞ < d 0 ≤ d ( x , y ) < + ∞ , ∀ x , y ∈ X sets of f.v.’s d ( x , x ) = d 0 ∀ x ∈ X d ( x , y ) = d ( y , x ) ∀ x , y ∈ X • Let U = { D 1 , . . . , D k } , D i ⊂ X , PM α : U × U → ℜ • d is a metric DM if d ( x , y ) = d 0 ⇔ x = y and d ( x , z ) ≤ d ( x , y ) + d ( y , z ) ∀ x , y , z ∈ X • E.g. X = { x 1 , x 2 , x 3 , x 4 , x 5 , x 6 } , U = {{ x 1 , x 2 } , – E.g. d 2 ( · , · ) = Euclidean distance, d 0 = 0 { x 1 , x 4 } , { x 3 , x 4 , x 5 } , { x 1 , x 2 , x 3 , x 4 , x 5 }} , d ss � � D i , D j = min d 2 ( x , y ) min x ∈ D i , y ∈ D j • Similarity measure is func. s : X × X → ℜ s.t. ∃ s 0 ∈ ℜ : −∞ < s ( x , y ) ≤ s 0 < + ∞ , ∀ x , y ∈ X • Min. value is d ss min, 0 = 0, d ss min ( D i , D i ) = d ss min, 0 , s ( x , x ) = s 0 ∀ x ∈ X � � � � and d ss = d ss , so d ss D i , D j D j , D i min ( · , · ) min min s ( x , y ) = s ( y , x ) ∀ x , y ∈ X is a DM • s is a metric SM if s ( x , y ) = s 0 ⇔ x = y • However, d ss min ( { x 1 , x 2 } , { x 1 , x 4 } ) = d ss min, 0 and and s ( x , y ) s ( y , z ) ≤ [ s ( x , y ) + s ( y , z )] s ( x , z ) { x 1 , x 2 } � = { x 1 , x 4 } , so not a metric DM ∀ x , y , z ∈ X 9 10 Proximity Measures Between Points Real-Valued Vectors Example Dissimilarity Measures (pp. 361–362) Proximity Measures Between Points Real-Valued Vectors • Common, general-purpose metric DM is weighted Example Similarity Measures (pp. 362–363) L p norm: 1 /p ℓ • Inner product: w i | x i − y i | p � d p ( x , y ) = i =1 ℓ s inner ( x , y ) = x T y = � x i y i i =1 • Special cases include weighted Euclidian dis- tance ( p = 2), weighted Manhattan distance • If � x � 2 , � y � 2 ≤ a , then − a 2 ≤ s inner ( x , y ) ≤ a 2 ℓ � d 1 ( x , y ) = w i | x i − y i | , i =1 • Tanimoto distance: and weighted L ∞ norm x T y 1 s T ( x , y ) = 2 − x T y = , d ∞ ( x , y ) = max 1 ≤ i ≤ ℓ { w i | x i − y i |} 1 + ( x − y ) T ( x − y ) � x � 2 2 + � y � 2 x T y which is inversely prop. to • Generalization of weighted L 2 norm is (squared Euclid. dist.)/(correlation measure) � ( x − y ) T B ( x − y ) , d ( x , y ) = e.g. Mahalanobis distance 11 12

Proximity Measures Between Points Fuzzy Measures Proximity Measures Between Points • Let x i ∈ [0 , 1] be measure of how much x pos- Discrete-Valued Vectors sesses i th feature • If x i , y i ∈ { 0 , 1 } , then • If the coordinates of f.v.’s come from { 0 , . . . , k − 1 } , can use SMs and DMs defined for real- ( x i ≡ y i ) = (( ¬ x i ∧ ¬ y i ) ∨ ( x i ∧ y i )) valued f.v.’s, (e.g. weighted L p norm) plus: • Generalize to fuzzy values: – Hamming distance: DM measuring number of places where x and y differ s ( x i , y i ) = max { min { 1 − x i , 1 − y i } , min { x i , y i }} – Tanimoto measure: SM measuring number of places where x and y are same, divided • To measure similarity between vectors: by total number of places 1 /p ℓ s p s ( x i , y i ) p � F ( x , y ) = ∗ Ignore places i where x i = y i = 0 i =1 / 2 ≤ s q � ℓ 1 /p � F ( · , · ) ≤ ℓ 1 /p · Useful for ordinal features where x i is degree to which x possesses i th feature • So s ∞ F = max 1 ≤ i ≤ ℓ s ( x i , y i ) and s 1 F = � ℓ i =1 s ( x i , y i ) = generalization of Ham- ming distance 13 14 Prox. Measures Between a Point and a Set Prox. Measures Between a Point and a Set Representatives • Might want to measure proximity of point x to existing cluster C • Alternative: Measure distance between point x and a representative of the set C • Can measure proximity α by using all points of C or by using a representative of C • Appropriate choice of representative depends on type of cluster • If all points of C used, common choices: Compact Elongated Hyperspherical Point Hyperplane Hypersphere α ps max ( x , C ) = max y ∈ C { α ( x , y ) } α ps min ( x , C ) = min y ∈ C { α ( x , y ) } avg ( x , C ) = 1 α ps � α ( x , y ) , | C | y ∈ C where α ( x , y ) is any measure between x and y 15 16

Prox. Measures Between a Point and a Set Prox. Measures Between a Point and a Set Examples of Point Representatives Hyperplane & Hyperspherical Representatives • Mean vector: m p = 1 � y | C | • Definition of hyperplane H and dist. function: y ∈ C a T x + a 0 = 0 d ( x , H ) = min z ∈ H d ( x , z ) • Works well in ℜ ℓ , but might not exist in dis- crete space • Definition of hypersphere Q and dist. function: • Mean center m c ∈ C : ( x − c ) T ( x − c ) = r 2 d ( x , Q ) = min z ∈ Q d ( x , z ) � � d ( m c , y ) ≤ d ( z , y ) ∀ z ∈ C , y ∈ C y ∈ C Hyperplane Hypersphere where d ( · , · ) is DM (if SM used, reverse ineq.) • Median center: For each point y ∈ C , find me- dian dissimilarity from y to all other points of C , then take min; so m med ∈ C is defined as med y ∈ C { d ( m med , y ) } ≤ med y ∈ C { d ( z , y ) } ∀ z ∈ C • Examples p. 375 • Given set of points, can find representative via regression techniques, minimizing sum of dis- • Now can measure proximity between C ’s rep tances between points and representative and x with standard measures 17 18 Prox. Measures Between Two Sets Prox. Measures Between Two Sets • Given sets of f.v.’s D i and D j and prox. meas. (cont’d) α ( · , · ) • Min: α ss min ( D i , D j ) = min { α ( x , y ) } • Max: α ss max ( D i , D j ) = max { α ( x , y ) } is a x ∈ D i , y ∈ D j x ∈ D i , y ∈ D j is a measure (but not a metric) iff α is a DM measure (but not necessarily a metric) iff α is a SM 1 • Average: α ss � � avg ( D i , D j ) = α ( x , y ) – E.g. α is Euclid. dist. (a DM), ℓ = 1, D 1 = | D i | | D j | x ∈ D i y ∈ D j { (1) , (10) } , D 2 = { (4) , (7) } : is not necessarily a measure even if α is α ss max ( D 1 , D 1 ) = 9 � = 3 = α ss max ( D 2 , D 2 ) – α is SM ⇒ α ( x , y ) ≤ s 0 ∀ x , y and • Representative (mean): α ss α ( x , x ) = s 0 ∀ x , so rep ( D i , D j ) = α ( m D i , m D j ), ( m D i is point rep. of D i ) is a measure whenever α ss max ( D i , D j ) ≤ s 0 ∀ D i , D j , and ∀ D α is α ss x ∈ D, y ∈ D { α ( x , y ) } = max x ∈ D { α ( x , x ) } = s 0 max ( D, D ) = max α ss max ( D i , D j ) = α ss max ( D j , D i ) 19 20

Recommend

More recommend