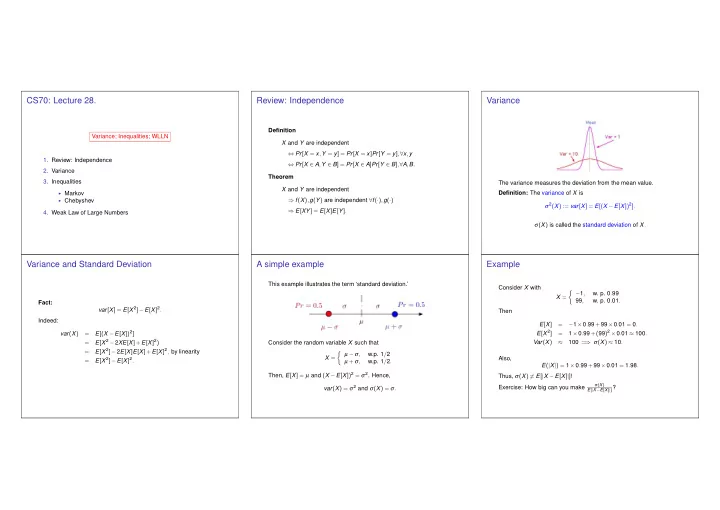

CS70: Lecture 28. Variance; Inequalities; WLLN

1. Review: Independence
2. Variance
3. Inequalities
   - Markov
   - Chebyshev
4. Weak Law of Large Numbers

Review: Independence

Definition: $X$ and $Y$ are independent

$$\Leftrightarrow \Pr[X = x, Y = y] = \Pr[X = x]\Pr[Y = y], \ \forall x, y$$

$$\Leftrightarrow \Pr[X \in A, Y \in B] = \Pr[X \in A]\Pr[Y \in B], \ \forall A, B.$$

Theorem: $X$ and $Y$ are independent

$\Rightarrow f(X), g(Y)$ are independent $\forall f(\cdot), g(\cdot)$

$\Rightarrow E[XY] = E[X]E[Y]$.

Variance

The variance measures the deviation from the mean value.

Definition: The variance of $X$ is

$$\sigma^2(X) := var[X] = E[(X - E[X])^2].$$

$\sigma(X)$ is called the standard deviation of $X$.

Variance and Standard Deviation

Fact: $var[X] = E[X^2] - E[X]^2$.

Indeed:

$$\begin{aligned}
var(X) &= E[(X - E[X])^2] \\
&= E[X^2 - 2XE[X] + E[X]^2] \\
&= E[X^2] - 2E[X]E[X] + E[X]^2, \text{ by linearity} \\
&= E[X^2] - E[X]^2.
\end{aligned}$$

A simple example

This example illustrates the term 'standard deviation.'

Consider the random variable $X$ such that

$$X = \begin{cases} \mu - \sigma, & \text{w.p. } 1/2 \\ \mu + \sigma, & \text{w.p. } 1/2. \end{cases}$$

Then, $E[X] = \mu$ and $(X - E[X])^2 = \sigma^2$. Hence, $var(X) = \sigma^2$ and $\sigma(X) = \sigma$.

Example

Consider $X$ with

$$X = \begin{cases} -1, & \text{w.p. } 0.99 \\ 99, & \text{w.p. } 0.01. \end{cases}$$

Then

$$E[X] = -1 \times 0.99 + 99 \times 0.01 = 0.$$

$$E[X^2] = 1 \times 0.99 + (99)^2 \times 0.01 \approx 100.$$

$$Var(X) \approx 100 \Rightarrow \sigma(X) \approx 10.$$

Also,

$$E(|X|) = 1 \times 0.99 + 99 \times 0.01 = 1.98.$$

Thus, $\sigma(X) \neq E[|X - E[X]|]$!

Exercise: How big can you make $\dfrac{\sigma(X)}{E[|X - E[X]|]}$?
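The two-point example above ($X = -1$ w.p. $0.99$, $X = 99$ w.p. $0.01$) can be checked by direct enumeration. The following is a minimal sketch, not part of the lecture: the helper names (`mean`, `second_moment`, `variance`, `mean_abs_dev`) are made up for illustration, and the distribution is represented as a plain dictionary from values to exact probabilities.

```python
from fractions import Fraction
from math import sqrt

def mean(pmf):
    """E[X] for a finite distribution given as {value: probability}."""
    return sum(x * p for x, p in pmf.items())

def second_moment(pmf):
    """E[X^2]."""
    return sum(x * x * p for x, p in pmf.items())

def variance(pmf):
    """var[X] computed from the definition E[(X - E[X])^2]."""
    m = mean(pmf)
    return sum((x - m) ** 2 * p for x, p in pmf.items())

def mean_abs_dev(pmf):
    """E[|X - E[X]|], the mean absolute deviation."""
    m = mean(pmf)
    return sum(abs(x - m) * p for x, p in pmf.items())

# The example from the slides, with exact rational probabilities.
pmf = {-1: Fraction(99, 100), 99: Fraction(1, 100)}

ex = mean(pmf)
ex2 = second_moment(pmf)
var = variance(pmf)
sigma = sqrt(float(var))
mad = mean_abs_dev(pmf)

print("E[X] =", ex)
print("E[X^2] =", ex2)
print("var[X] =", var, "and E[X^2] - E[X]^2 =", ex2 - ex ** 2)
print("sigma(X) =", sigma)
print("E|X - E[X]| =", float(mad))
```

The exact variance is 99, which the slides round to 100. The standard deviation is roughly five times the mean absolute deviation here, which is the gap the exercise asks about.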

Uniform

Assume that $\Pr[X = i] = 1/n$ for $i \in \{1, \ldots, n\}$. Then

$$E[X] = \sum_{i=1}^{n} i \times \Pr[X = i] = \frac{1}{n}\sum_{i=1}^{n} i = \frac{1}{n}\,\frac{n(n+1)}{2} = \frac{n+1}{2}.$$

Also,

$$E[X^2] = \sum_{i=1}^{n} i^2 \Pr[X = i] = \frac{1}{n}\sum_{i=1}^{n} i^2 = \frac{1 + 3n + 2n^2}{6}, \text{ as you can verify.}$$

This gives

$$var(X) = \frac{1 + 3n + 2n^2}{6} - \frac{(n+1)^2}{4} = \frac{n^2 - 1}{12}.$$

Variance of geometric distribution.

$X$ is a geometrically distributed RV with parameter $p$. Thus, $\Pr[X = n] = (1-p)^{n-1}p$ for $n \geq 1$. Recall $E[X] = 1/p$.

$$\begin{aligned}
E[X^2] &= p + 4p(1-p) + 9p(1-p)^2 + \cdots \\
-(1-p)E[X^2] &= -[p(1-p) + 4p(1-p)^2 + \cdots] \\
pE[X^2] &= p + 3p(1-p) + 5p(1-p)^2 + \cdots \\
&= 2(p + 2p(1-p) + 3p(1-p)^2 + \cdots) && \text{($E[X]$!)} \\
&\quad - (p + p(1-p) + p(1-p)^2 + \cdots) && \text{(Distribution.)} \\
pE[X^2] &= 2E[X] - 1 = 2\left(\frac{1}{p}\right) - 1 = \frac{2-p}{p}
\end{aligned}$$

$\Rightarrow E[X^2] = (2-p)/p^2$ and

$$var[X] = E[X^2] - E[X]^2 = \frac{2-p}{p^2} - \frac{1}{p^2} = \frac{1-p}{p^2}.$$

$$\sigma(X) = \frac{\sqrt{1-p}}{p} \approx E[X] \text{ when } p \text{ is small(ish).}$$

Fixed points.

Number of fixed points in a random permutation of $n$ items. "Number of students that get their homework back."

$$X = X_1 + X_2 + \cdots + X_n$$

where $X_i$ is the indicator variable for the $i$th student getting their homework back.

$$E(X^2) = \sum_i E(X_i^2) + \sum_{i \neq j} E(X_i X_j) = n \times \frac{1}{n} + n(n-1) \times \frac{1}{n(n-1)} = 1 + 1 = 2.$$

$$E(X_i^2) = 1 \times \Pr[X_i = 1] + 0 \times \Pr[X_i = 0] = \frac{1}{n}$$

$$E(X_i X_j) = 1 \times \Pr[X_i = 1 \cap X_j = 1] + 0 \times \Pr[\text{"anything else"}] = \frac{1 \times 1 \times (n-2)!}{n!} = \frac{1}{n(n-1)}$$

$$Var(X) = E(X^2) - (E(X))^2 = 2 - 1 = 1.$$

Variance: binomial.

$$E[X^2] = \sum_{i=0}^{n} i^2 \binom{n}{i} p^i (1-p)^{n-i} = \text{Really???!!\#\#...}$$

Too hard!

Ok.. fine. Let's do something else. Maybe not much easier...but there is a payoff.

Properties of variance.

1. $Var(cX) = c^2 Var(X)$, where $c$ is a constant. Scales by $c^2$.
2. $Var(X + c) = Var(X)$, where $c$ is a constant. Shifts center.

Proof:

$$\begin{aligned}
Var(cX) &= E((cX)^2) - (E(cX))^2 \\
&= c^2 E(X^2) - c^2 (E(X))^2 = c^2 (E(X^2) - E(X)^2) \\
&= c^2 Var(X)
\end{aligned}$$

$$\begin{aligned}
Var(X + c) &= E((X + c - E(X + c))^2) \\
&= E((X + c - E(X) - c)^2) \\
&= E((X - E(X))^2) = Var(X)
\end{aligned}$$

Variance of sum of two independent random variables

Theorem: If $X$ and $Y$ are independent, then

$$Var(X + Y) = Var(X) + Var(Y).$$

Proof: Since shifting the random variables does not change their variance, let us subtract their means. That is, we assume that $E(X) = 0$ and $E(Y) = 0$.

Then, by independence,

$$E(XY) = E(X)E(Y) = 0.$$

Hence,

$$\begin{aligned}
var(X + Y) &= E((X + Y)^2) = E(X^2 + 2XY + Y^2) \\
&= E(X^2) + 2E(XY) + E(Y^2) = E(X^2) + E(Y^2) \\
&= var(X) + var(Y).
\end{aligned}$$
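The closed forms derived above for the uniform distribution ($(n^2-1)/12$), the geometric distribution ($(1-p)/p^2$), and the number of fixed points of a random permutation (variance 1) can be sanity-checked numerically. The sketch below is an illustration only: the function names are hypothetical, the fixed-point check enumerates all permutations by brute force (so it is only practical for small $n$), and the geometric series is truncated after a fixed number of terms, which is an approximation.

```python
from fractions import Fraction
from itertools import permutations

def var_from_pmf(pmf):
    """var[X] = E[X^2] - E[X]^2 for a finite pmf {value: probability}."""
    m = sum(x * p for x, p in pmf.items())
    m2 = sum(x * x * p for x, p in pmf.items())
    return m2 - m * m

def uniform_var(n):
    """Exact variance of the uniform distribution on {1, ..., n}."""
    return var_from_pmf({i: Fraction(1, n) for i in range(1, n + 1)})

def fixed_point_var(n):
    """Exact variance of the number of fixed points, by enumerating all n! permutations."""
    counts = [sum(1 for i, v in enumerate(s) if i == v)
              for s in permutations(range(n))]
    total = len(counts)
    m = Fraction(sum(counts), total)
    m2 = Fraction(sum(c * c for c in counts), total)
    return m2 - m * m

def geometric_var_truncated(p, terms=2000):
    """Approximate variance of G(p) from the first `terms` terms of the series (floats)."""
    ex = sum(k * (1 - p) ** (k - 1) * p for k in range(1, terms + 1))
    ex2 = sum(k * k * (1 - p) ** (k - 1) * p for k in range(1, terms + 1))
    return ex2 - ex * ex

print("uniform, n = 6:", uniform_var(6), "vs", Fraction(6 ** 2 - 1, 12))
print("fixed points, n = 5:", fixed_point_var(5))
print("geometric, p = 0.25:", geometric_var_truncated(0.25), "vs", (1 - 0.25) / 0.25 ** 2)
```

The uniform and fixed-point checks use exact rational arithmetic, so they must match the formulas exactly. The geometric check agrees only up to floating-point and truncation error.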

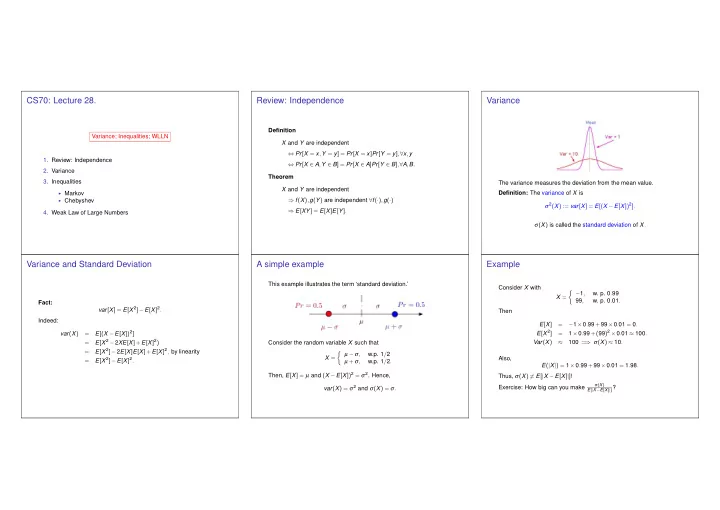

Variance of sum of independent random variables

Theorem: If $X, Y, Z, \ldots$ are pairwise independent, then

$$var(X + Y + Z + \cdots) = var(X) + var(Y) + var(Z) + \cdots.$$

Proof: Since shifting the random variables does not change their variance, let us subtract their means. That is, we assume that $E[X] = E[Y] = \cdots = 0$.

Then, by independence,

$$E[XY] = E[X]E[Y] = 0. \text{ Also, } E[XZ] = E[YZ] = \cdots = 0.$$

Hence,

$$\begin{aligned}
var(X + Y + Z + \cdots) &= E((X + Y + Z + \cdots)^2) \\
&= E(X^2 + Y^2 + Z^2 + \cdots + 2XY + 2XZ + 2YZ + \cdots) \\
&= E(X^2) + E(Y^2) + E(Z^2) + \cdots + 0 + \cdots + 0 \\
&= var(X) + var(Y) + var(Z) + \cdots.
\end{aligned}$$

Variance of Binomial Distribution.

Flip coin with heads probability $p$. $X$: how many heads?

$$X_i = \begin{cases} 1 & \text{if the } i\text{th flip is heads} \\ 0 & \text{otherwise} \end{cases}$$

$$E(X_i^2) = 1^2 \times p + 0^2 \times (1-p) = p.$$

$$Var(X_i) = p - (E(X_i))^2 = p - p^2 = p(1-p).$$

$p = 0 \Rightarrow Var(X_i) = 0$

$p = 1 \Rightarrow Var(X_i) = 0$

$$X = X_1 + X_2 + \cdots + X_n.$$

$X_i$ and $X_j$ are independent: $\Pr[X_i = 1 \mid X_j = 1] = \Pr[X_i = 1]$.

$$Var(X) = Var(X_1 + \cdots + X_n) = np(1-p).$$

Inequalities: An Overview

[Figure: three panels titled Distribution, Markov, and Chebyshev, illustrating $\Pr[X > a]$ and $\Pr[|X - \mu| > \epsilon]$.]

Andrey Markov

Andrey Markov is best known for his work on stochastic processes. A primary subject of his research later became known as Markov chains and Markov processes.

Pafnuty Chebyshev was one of his teachers.

Markov was an atheist. In 1912 he protested Leo Tolstoy's excommunication from the Russian Orthodox Church by requesting his own excommunication. The Church complied with his request.

Markov's inequality

The inequality is named after Andrey Markov, although it appeared earlier in the work of Pafnuty Chebyshev. It should be (and is sometimes) called Chebyshev's first inequality.

Theorem (Markov's Inequality): Assume $f : \Re \to [0, \infty)$ is nondecreasing. Then,

$$\Pr[X \geq a] \leq \frac{E[f(X)]}{f(a)}, \text{ for all } a \text{ such that } f(a) > 0.$$

Proof: Observe that

$$1\{X \geq a\} \leq \frac{f(X)}{f(a)}.$$

Indeed, if $X < a$, the inequality reads $0 \leq f(X)/f(a)$, which holds since $f(\cdot) \geq 0$. Also, if $X \geq a$, it reads $1 \leq f(X)/f(a)$, which holds since $f(\cdot)$ is nondecreasing.

Taking the expectation yields the inequality, because expectation is monotone.

A picture

[Figure accompanying Markov's inequality.]
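Both the binomial variance $np(1-p)$ and Markov's inequality can be checked on a concrete distribution. The sketch below is illustrative and not from the lecture: it builds an exact binomial pmf with `math.comb`, and the parameters `n = 20`, `p = 1/4` and the helper names are arbitrary choices. Since a binomial variable is nonnegative, $f(x) = x^2$ is nondecreasing on the values that matter, which is the assumption behind using it here.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(n, p):
    """Exact pmf of B(n, p) as {k: Pr[X = k]}."""
    return {k: comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)}

def expect(pmf, f):
    """E[f(X)] for a finite pmf."""
    return sum(f(x) * q for x, q in pmf.items())

def tail(pmf, a):
    """Pr[X >= a]."""
    return sum(q for x, q in pmf.items() if x >= a)

def markov_bound(pmf, f, a):
    """The bound E[f(X)] / f(a); requires f(a) > 0."""
    return expect(pmf, f) / f(a)

n, p = 20, Fraction(1, 4)
pmf = binomial_pmf(n, p)

mean = expect(pmf, lambda x: x)
var = expect(pmf, lambda x: x * x) - mean ** 2
print("E[X] =", mean, " var[X] =", var, " np(1-p) =", n * p * (1 - p))

for a in (6, 10, 15):
    exact = tail(pmf, a)
    linear = markov_bound(pmf, lambda x: x, a)
    square = markov_bound(pmf, lambda x: x * x, a)
    print(f"a = {a}: Pr[X >= a] = {float(exact):.5f}, "
          f"bound with f(x) = x: {float(linear):.5f}, "
          f"bound with f(x) = x^2: {float(square):.5f}")
```

Neither choice of $f$ is uniformly better, so in practice one picks the $f$ that gives the smaller bound at the threshold $a$ of interest.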

Markov Inequality Example: G(p)

Let $X = G(p)$. Recall that $E[X] = \frac{1}{p}$ and $E[X^2] = \frac{2-p}{p^2}$.

Choosing $f(x) = x$, we get

$$\Pr[X \geq a] \leq \frac{E[X]}{a} = \frac{1}{ap}.$$

Choosing $f(x) = x^2$, we get

$$\Pr[X \geq a] \leq \frac{E[X^2]}{a^2} = \frac{2-p}{p^2 a^2}.$$

Markov Inequality Example: P(λ)

Let $X = P(\lambda)$. Recall that $E[X] = \lambda$ and $E[X^2] = \lambda + \lambda^2$.

Choosing $f(x) = x$, we get

$$\Pr[X \geq a] \leq \frac{E[X]}{a} = \frac{\lambda}{a}.$$

Choosing $f(x) = x^2$, we get

$$\Pr[X \geq a] \leq \frac{E[X^2]}{a^2} = \frac{\lambda + \lambda^2}{a^2}.$$

Chebyshev's Inequality

This is Pafnuty's inequality:

Theorem:

$$\Pr[|X - E[X]| > a] \leq \frac{var[X]}{a^2}, \text{ for all } a > 0.$$

Proof: Let $Y = |X - E[X]|$ and $f(y) = y^2$. Then,

$$\Pr[Y \geq a] \leq \frac{E[f(Y)]}{f(a)} = \frac{var[X]}{a^2}.$$

This result confirms that the variance measures the "deviations from the mean."

Chebyshev and Poisson

Let $X = P(\lambda)$. Then, $E[X] = \lambda$ and $var[X] = \lambda$. Thus,

$$\Pr[|X - \lambda| \geq n] \leq \frac{var[X]}{n^2} = \frac{\lambda}{n^2}.$$

Chebyshev and Poisson (continued)

Let $X = P(\lambda)$. Then, $E[X] = \lambda$ and $var[X] = \lambda$. By Markov's inequality,

$$\Pr[X \geq a] \leq \frac{E[X^2]}{a^2} = \frac{\lambda + \lambda^2}{a^2}.$$

Also, if $a > \lambda$, then $X \geq a \Rightarrow X - \lambda \geq a - \lambda > 0 \Rightarrow |X - \lambda| \geq a - \lambda$.

Hence, for $a > \lambda$,

$$\Pr[X \geq a] \leq \Pr[|X - \lambda| \geq a - \lambda] \leq \frac{\lambda}{(a - \lambda)^2}.$$

Fraction of H's

Here is a classical application of Chebyshev's inequality.

How likely is it that the fraction of H's differs from 50%?

Let $X_m = 1$ if the $m$-th flip of a fair coin is H and $X_m = 0$ otherwise. Define

$$Y_n = \frac{X_1 + \cdots + X_n}{n}, \text{ for } n \geq 1.$$

We want to estimate

$$\Pr[|Y_n - 0.5| \geq 0.1] = \Pr[Y_n \leq 0.4 \text{ or } Y_n \geq 0.6].$$

By Chebyshev,

$$\Pr[|Y_n - 0.5| \geq 0.1] \leq \frac{var[Y_n]}{(0.1)^2} = 100\, var[Y_n].$$

Now,

$$var[Y_n] = \frac{1}{n^2}(var[X_1] + \cdots + var[X_n]) = \frac{1}{n} var[X_1] \leq \frac{1}{4n},$$

since $Var(X_i) = p(1-p) \leq (.5)(.5) = \frac{1}{4}$.
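Combining the last two displays gives $\Pr[|Y_n - 0.5| \geq 0.1] \leq 100 \cdot \frac{1}{4n} = \frac{25}{n}$. For a fair coin the left-hand side can also be computed exactly from binomial coefficients, which shows how loose the Chebyshev bound is. This is a minimal sketch under those assumptions; the function names are hypothetical and the default deviation `eps = 1/10` mirrors the 0.1 used in the slides.

```python
from fractions import Fraction
from math import comb

def exact_deviation_prob(n, eps=Fraction(1, 10)):
    """Exact Pr[|Y_n - 1/2| >= eps] for n fair coin flips, where Y_n is the fraction of heads."""
    half = Fraction(1, 2)
    favorable = sum(comb(n, k) for k in range(n + 1)
                    if abs(Fraction(k, n) - half) >= eps)
    return Fraction(favorable, 2 ** n)

def chebyshev_bound(n, eps=Fraction(1, 10)):
    """Chebyshev bound var[Y_n] / eps^2, using var[Y_n] = 1 / (4n) for a fair coin."""
    return Fraction(1, 4 * n) / eps ** 2

for n in (10, 100, 1000):
    exact = exact_deviation_prob(n)
    bound = chebyshev_bound(n)
    print(f"n = {n}: exact = {float(exact):.3e}, Chebyshev bound = {float(bound):.3e}")
```

The bound is vacuous until $n > 25$ (it exceeds 1), but it does go to 0 as $n$ grows, which is all the Weak Law of Large Numbers needs.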
