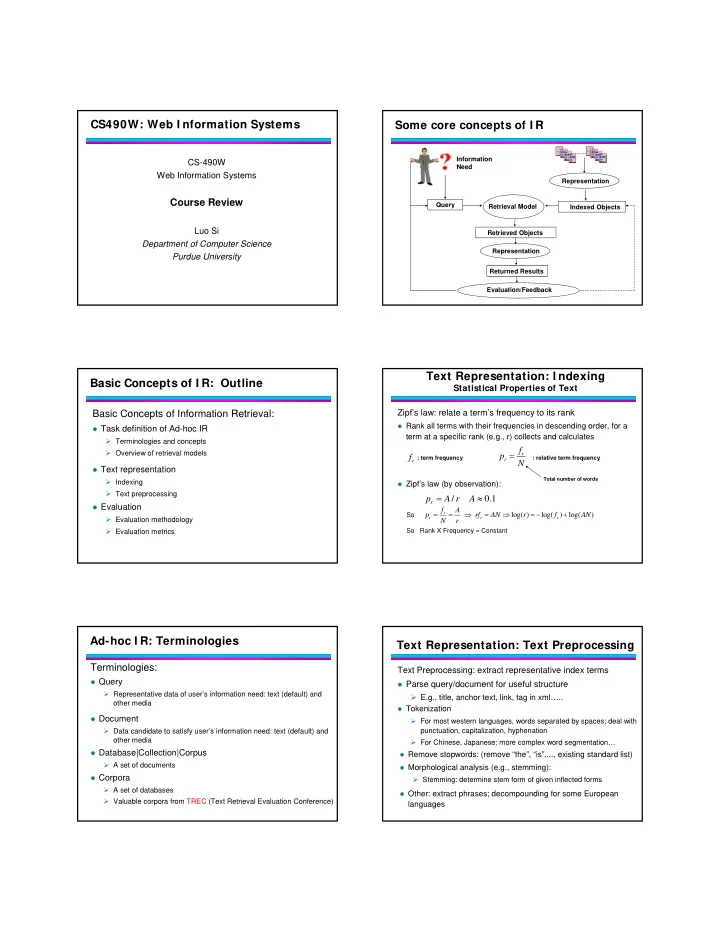

CS490W: Web Information Systems
Course Review
Luo Si
Department of Computer Science
Purdue University

Basic Concepts of IR: Outline

- Basic concepts of information retrieval
  - Task definition of ad-hoc IR
  - Terminologies and concepts
  - Overview of retrieval models
- Text representation
  - Indexing
  - Text preprocessing
- Evaluation
  - Evaluation methodology
  - Evaluation metrics

Ad-hoc IR: Terminologies

- Query
  - Representative data of the user's information need: text (default) and other media
- Document
  - Candidate data to satisfy the user's information need: text (default) and other media
- Database | Collection | Corpus
  - A set of documents
- Corpora
  - A set of databases
  - Valuable corpora from TREC (Text REtrieval Conference)

Some core concepts of IR

[Figure: Some core concepts of IR: Information Need, Representation, Query, Retrieval Model, Indexed Objects, Retrieved Objects, Representation, Returned Results, Evaluation/Feedback]

Text Representation: Indexing

Statistical properties of text. Zipf's law relates a term's frequency to its rank.

- Rank all terms by frequency in descending order. For the term at a specific rank r, collect and calculate:
  - $f_r$: term frequency
  - $p_r = f_r / N$: relative term frequency, where $N$ is the total number of words
- Zipf's law (by observation):

$$p_r = \frac{f_r}{N} \approx \frac{A}{r}, \qquad A \approx 0.1$$

$$\Rightarrow\; r f_r = AN \;\Rightarrow\; \log(f_r) = \log(AN) - \log(r)$$

So rank × frequency ≈ constant (namely $AN$).

Text Representation: Text Preprocessing

Text preprocessing extracts representative index terms:

- Parse the query/document for useful structure
  - E.g., title, anchor text, links, tags in XML...
- Tokenization
  - For most Western languages, words are separated by spaces; deal with punctuation, capitalization, hyphenation
  - For Chinese and Japanese: more complex word segmentation...
- Remove stopwords (e.g., "the", "is", ...; standard lists exist)
- Morphological analysis (e.g., stemming)
  - Stemming: determine the stem form of given inflected forms
- Other: extract phrases; decompounding for some European languages
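The preprocessing steps above can be sketched for a space-delimited language as follows. This is a minimal sketch under stated assumptions: `STOPWORDS` is a hypothetical toy list and `crude_stem` is a hypothetical suffix stripper, not the Porter stemmer or any standard list. Structure parsing, phrase extraction and decompounding are omitted.

```python
import re

# Hypothetical toy stopword list; real systems use an existing standard list.
STOPWORDS = {"the", "is", "a", "an", "of", "and", "in", "to"}


def crude_stem(word: str) -> str:
    """Hypothetical toy stemmer (NOT Porter): strip one common inflectional
    suffix when at least three characters of the word remain."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text: str) -> list[str]:
    # Tokenization: lowercase (capitalization), then keep alphanumeric runs,
    # which drops punctuation and splits hyphenated words ("XML-tagged" -> "xml", "tagged").
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    # Stopword removal, then morphological normalization with the toy stemmer.
    return [crude_stem(t) for t in tokens if t not in STOPWORDS]


print(preprocess("Searching the indexed documents is fast."))
# ['search', 'index', 'document', 'fast']
```

Rules this crude over-strip easily (e.g., "pages" becomes "pag"). Real stemmers add conditions on the remaining stem for this reason.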

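Zipf's law from the indexing slide can be checked empirically. Rank the terms by frequency and compute $r \cdot p_r$, which the law predicts stays roughly constant (about $A$). This is a minimal sketch. The token list is a hand-made toy, built so that the product is exactly 0.5 for the top three ranks. It shows the computation only and is not a measurement; the $A \approx 0.1$ value is observed on large real corpora.

```python
from collections import Counter


def zipf_table(tokens: list[str]) -> list[tuple[int, str, int, float]]:
    """For each rank r: (r, term, f_r, r * p_r), where p_r = f_r / N."""
    n = len(tokens)  # N: total number of words
    counts = Counter(tokens)
    # Descending frequency; ties broken alphabetically so the ranking is deterministic.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(r, term, f, r * f / n) for r, (term, f) in enumerate(ranked, start=1)]


for row in zipf_table("a a a a a a b b b c c d".split()):
    print(row)
```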
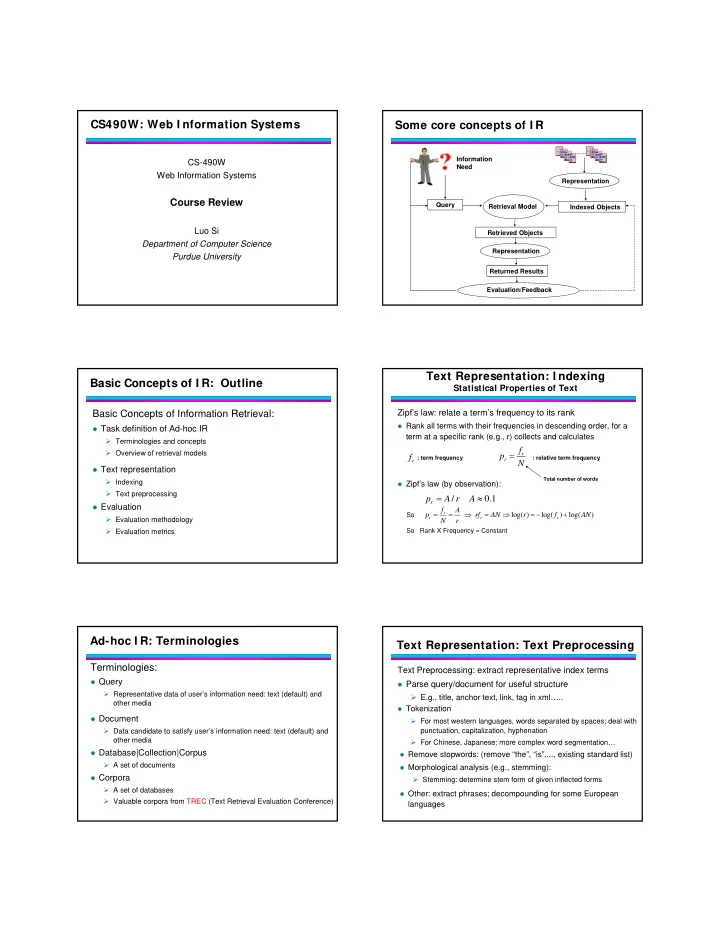

Evaluation

Evaluation criteria:

- Effectiveness
  - Favor returned ranked lists with more relevant documents at the top
- Objective measures
  - Recall and precision
  - Mean average precision
  - Rank-based precision

Evaluation

For the documents in a subset of a ranked list, if we know the truth (which documents are relevant):

$$\text{Precision} = \frac{|\text{relevant docs retrieved}|}{|\text{retrieved docs}|} \qquad \text{Recall} = \frac{|\text{relevant docs retrieved}|}{|\text{relevant docs}|}$$

[Figure: Sample results]

Evaluation

Pooling strategy:

- Retrieve documents using multiple methods
- Judge the top n documents from each method
- The whole retrieved set is the union of the top retrieved documents from all methods
- Problem: the judged relevant documents may not be complete
  - It is possible to estimate the size of the true relevant set by random sampling

Evaluation

Single-value metrics:

- Mean average precision
  - Calculate precision at each relevant document and average over all these precision values (average precision for one query); then take the mean over all queries
- 11-point interpolated average precision
  - Calculate precision at the standard recall points (0%, 10%, 20%, ..., 100%); smooth the values; estimate precision at 0% by interpolation
  - Average the results
- Rank-based precision
  - Calculate precision at top-ranked cutoffs (e.g., 5, 10, 15, ... documents)
  - Desirable when users care more about the top-ranked documents

Retrieval Models: Outline

Retrieval models:

- Exact-match retrieval methods
  - Unranked Boolean retrieval method
  - Ranked Boolean retrieval method
- Best-match retrieval methods
  - Vector space retrieval method
  - Latent semantic indexing

Retrieval Models: Unranked Boolean

Unranked Boolean: exact-match method

- Selection model
  - Retrieve a document iff it matches the precise query
  - Often returns unranked documents (or documents in chronological order)
- Operators
  - Logical operators: AND, OR, NOT
  - Proximity operators: #1(white house) (i.e., within one word distance, a phrase), #sen(Iraq weapon) (i.e., within a sentence)
  - String matching operators: wildcard (e.g., ind* for india and indonesia)
  - Field operators: title(information and retrieval)...
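The evaluation metrics above can be sketched in a few functions. This is a minimal sketch that assumes, for each query, a ranked list of document IDs and a non-empty set of relevant IDs (the "truth"). Average precision divides by the total number of relevant documents, so relevant documents that are never retrieved contribute zero. That is the common TREC-style convention.

```python
def precision(retrieved: list[str], relevant: set[str]) -> float:
    """|relevant docs retrieved| / |retrieved docs|."""
    if not retrieved:
        return 0.0
    return sum(1 for d in retrieved if d in relevant) / len(retrieved)


def recall(retrieved: list[str], relevant: set[str]) -> float:
    """|relevant docs retrieved| / |relevant docs|."""
    if not relevant:
        return 0.0
    return sum(1 for d in retrieved if d in relevant) / len(relevant)


def precision_at_k(ranking: list[str], relevant: set[str], k: int) -> float:
    """Rank-based precision; divides by k even if fewer than k docs were returned."""
    return sum(1 for d in ranking[:k] if d in relevant) / k


def average_precision(ranking: list[str], relevant: set[str]) -> float:
    """Precision at the rank of each relevant document, averaged over all
    relevant documents (unretrieved relevant documents contribute 0)."""
    hits, total = 0, 0.0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant)


def mean_average_precision(runs: list[tuple[list[str], set[str]]]) -> float:
    """MAP: mean of average precision over all queries."""
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)


def eleven_point_interpolated(ranking: list[str], relevant: set[str]) -> list[float]:
    """Interpolated precision at recall 0.0, 0.1, ..., 1.0: the maximum precision
    at any rank whose recall is >= that level (0.0 if no rank reaches it)."""
    points, hits = [], 0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
        points.append((hits / len(relevant), hits / rank))  # (recall, precision)
    levels = [i / 10 for i in range(11)]
    return [max((p for r, p in points if r >= lvl), default=0.0) for lvl in levels]


ranking = ["d1", "d2", "d3", "d4", "d5"]
relevant = {"d1", "d3", "d6"}
print(precision(ranking, relevant))          # 2/5
print(recall(ranking, relevant))             # 2/3
print(precision_at_k(ranking, relevant, 2))  # 1/2
print(average_precision(ranking, relevant))  # (1/1 + 2/3) / 3, about 0.556
print(sum(eleven_point_interpolated(ranking, relevant)) / 11)  # about 6/11
```

Interpolation takes the maximum precision at any recall at or above each level. This is what makes the 0% point well defined, even though no ranked list actually has zero recall.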

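Unranked Boolean retrieval can be sketched over an inverted index (term → set of document IDs). This is a minimal sketch under stated assumptions. The nested-tuple query format, such as `("AND", "stock", "market")`, is a hypothetical representation chosen for illustration. Only AND, OR, unary NOT and a trailing-`*` wildcard are handled. Proximity (#1, #sen) and field operators are omitted because they need word positions and field information in the index.

```python
def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Inverted index: lowercased term -> set of IDs of documents containing it."""
    index: dict[str, set[str]] = {}
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index.setdefault(term, set()).add(doc_id)
    return index


def evaluate(query, index: dict[str, set[str]], all_docs: set[str]) -> set[str]:
    """Return the (unranked) set of documents that exactly match the query.
    Query terms are expected in lowercase, matching the index."""
    if isinstance(query, str):
        if query.endswith("*"):  # wildcard: union over indexed terms with this prefix
            prefix = query[:-1]
            return set().union(*(ids for term, ids in index.items() if term.startswith(prefix)))
        return set(index.get(query, set()))
    op, *args = query
    results = [evaluate(arg, index, all_docs) for arg in args]
    if op == "AND":
        return set.intersection(*results)
    if op == "OR":
        return set.union(*results)
    if op == "NOT":  # unary: complement with respect to the whole collection
        return all_docs - results[0]
    raise ValueError(f"unknown operator: {op}")


docs = {
    "d1": "Thailand stock market crash",
    "d2": "stock market news",
    "d3": "India economy report",
    "d4": "Indonesia stock exchange",
}
index = build_index(docs)
all_docs = set(docs)
print(sorted(evaluate(("AND", "stock", "market"), index, all_docs)))           # ['d1', 'd2']
print(sorted(evaluate(("AND", "stock", ("NOT", "market")), index, all_docs)))  # ['d4']
print(sorted(evaluate("ind*", index, all_docs)))                               # ['d3', 'd4']
```

The result is a set, so sorting by document ID is only for display. Nothing ranks the matches, which is exactly the "results are unordered" weakness of this model.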
Retrieval Models: Unranked Boolean

Advantages:

- Works well if the user knows exactly what to retrieve
- Predictable; easy to explain
- Very efficient

Disadvantages:

- It is difficult to design the query: high recall and low precision for a loose query; low recall and high precision for a strict query
- Results are unordered; hard to find the useful ones
- Users may be too optimistic about strict queries: a few very relevant documents are returned, but many more are missing

Retrieval Models: Ranked Boolean

Advantages:

- All advantages of the unranked Boolean algorithm
  - Works well when the query is precise; predictable; efficient
- Results in a ranked list (not an unordered full list); easier to browse and find the most relevant documents than with unranked Boolean
- The ranking criterion is flexible: e.g., different variants of term evidence

Disadvantages:

- Still an exact-match (document selection) model: recall and precision remain inversely correlated across strict and loose queries
- Predictability makes users overestimate retrieval quality

Retrieval Models: Ranked Boolean

Ranked Boolean: exact match

- Similar to unranked Boolean, but documents are ordered by some criterion

Example: retrieve documents from the Wall Street Journal collection with the query (Thailand AND stock AND market). Which word is more important? Reflect the importance of a document by its words. Many documents contain "stock" and "market", but fewer contain "Thailand"; the rarer term may be more indicative.

- Term Frequency (TF): number of occurrences in the query/document; a larger number means more important
- Inverse Document Frequency (IDF): (total number of docs) / (number of docs that contain the term); larger means more important
- There are many variants of TF and IDF: e.g., ones that consider document length

Retrieval Models: Ranked Boolean

Ranked Boolean: calculating the document score

- Term evidence: evidence from term i occurring in document j: $tf_{ij}$ or $tf_{ij} \cdot idf_i$
- AND weight: minimum of the argument weights
- OR weight: maximum of the argument weights

[Figure: scoring for Query: (Thailand AND stock AND market). With term evidence 0.2, 0.6 and 0.4, AND gives Min = 0.2 and OR gives Max = 0.6.]

Retrieval Models: Vector Space Model

Vector space model:

- Any text object can be represented by a term vector
  - Documents, queries, passages, sentences
  - A query can be seen as a short document
- Similarity is determined by distance in the vector space
  - Example: the cosine of the angle between two vectors
- The SMART system
  - Developed at Cornell University: 1960-1999
  - Still quite popular

Retrieval Models: Vector Space Model

Vector representation:

[Figure: documents D1, D2, D3 and a query drawn as vectors in a term space with axes Java, Sun and Starbucks]
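Ranked Boolean scoring can be sketched as follows. Term evidence $tf_{ij} \cdot idf_i$ is computed at the leaves of the query, then combined with min for AND and max for OR. This is a minimal sketch under stated assumptions:

- The per-document term counts are made-up toy data standing in for Wall Street Journal documents.
- IDF uses the ratio form from the TF/IDF slide (total docs / docs containing the term). Every term present in a document therefore gets positive evidence, so keeping only documents with a positive score preserves exact-match selection.
- NOT is not handled.

```python
from collections import Counter


def idf(term: str, docs: dict[str, Counter]) -> float:
    """IDF in ratio form: total number of docs / number of docs containing the term."""
    df = sum(1 for tf in docs.values() if tf[term] > 0)
    return len(docs) / df if df else 0.0


def score(query, doc_tf: Counter, docs: dict[str, Counter]) -> float:
    """Leaves: term evidence tf_ij * idf_i; AND -> min, OR -> max of argument weights."""
    if isinstance(query, str):
        return doc_tf[query] * idf(query, docs)  # Counter returns 0 for absent terms
    op, *args = query
    weights = [score(arg, doc_tf, docs) for arg in args]
    if op == "AND":
        return min(weights)
    if op == "OR":
        return max(weights)
    raise ValueError(f"unsupported operator: {op}")


def rank(query, docs: dict[str, Counter]) -> list[tuple[float, str]]:
    """Exact-match selection (score > 0), then order by score, highest first."""
    scored = ((score(query, tf, docs), doc_id) for doc_id, tf in docs.items())
    return sorted((sd for sd in scored if sd[0] > 0), key=lambda sd: (-sd[0], sd[1]))


docs = {  # hypothetical term counts
    "wsj1": Counter(thailand=1, stock=3, market=2),
    "wsj2": Counter(thailand=2, stock=1, market=1),
    "wsj3": Counter(stock=4, market=5),
    "wsj4": Counter(market=1, bonds=2),
}
print(rank(("AND", "thailand", "stock", "market"), docs))  # [(2.0, 'wsj1'), (1.0, 'wsj2')]
```

With term evidence 0.2, 0.6 and 0.4 as in the slide's figure, `min` gives 0.2 for AND and `max` gives 0.6 for OR. Document wsj3 has plenty of "stock" and "market" but no "Thailand", so the AND query still excludes it. Ranking only reorders the exact-match set.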

Retrieval Models: Vector Space Model

Advantages:

- Best-match method; it does not need a precise query
- Generates ranked lists; easy to explore the results
- Simplicity: easy to implement
- Effectiveness: often works well
- Flexibility: can utilize different types of term weighting methods
- Used in a wide range of IR tasks: retrieval, classification, summarization, content-based filtering...

Disadvantages:

- Hard to choose the dimensions of the vector ("basic concepts"); terms may not be the best choice
- Assumes terms are independent of one another
- Heuristic choices of vector operations
  - Choice of term weights
  - Choice of similarity function
- Assumes a query and a document can be treated in the same way

Retrieval Models: Vector Space Model

Given two vectors, a query and a document:

- query as $\vec{q} = (q_1, q_2, \ldots, q_n)$
- document as $\vec{d}_j = (d_{j1}, d_{j2}, \ldots, d_{jn})$
- calculate the similarity from the angle $\theta(\vec{q}, \vec{d}_j)$

Cosine similarity: the angle between the vectors

$$sim(\vec{q}, \vec{d}_j) = \cos(\theta(\vec{q}, \vec{d}_j)) = \frac{\vec{q} \cdot \vec{d}_j}{\|\vec{q}\|\,\|\vec{d}_j\|} = \frac{q_1 d_{j1} + q_2 d_{j2} + \cdots + q_n d_{jn}}{\sqrt{q_1^2 + \cdots + q_n^2}\,\sqrt{d_{j1}^2 + \cdots + d_{jn}^2}}$$

Retrieval Models: Vector Space Model

Vector coefficients:

- The coefficients (vector elements) represent term evidence/term importance
- They are derived from several elements:
  - Document term weight: evidence of the term in the document/query
  - Collection term weight: importance of the term from observation of the collection
  - Length normalization: reduce document length bias
- Naming convention for coefficients: $q_k \cdot d_{j,k}$ is written DCL.DCL; the first triple represents the query term, the second the document term

Retrieval Models: Vector Space Model

Common vector weight components:

- lnc.ltc: a widely used term weighting
  - "l": log(tf) + 1
  - "n": no weight/normalization
  - "t": log(N/df)
  - "c": cosine normalization

$$\frac{q_1 d_{j1} + q_2 d_{j2} + \cdots + q_n d_{jn}}{\|\vec{q}\|\,\|\vec{d}_j\|} = \frac{\sum_k \big(\log tf_q(k) + 1\big)\big(\log tf_j(k) + 1\big)\log\frac{N}{df(k)}}{\sqrt{\sum_k \big[\log tf_q(k) + 1\big]^2}\;\sqrt{\sum_k \Big[\big(\log tf_j(k) + 1\big)\log\frac{N}{df(k)}\Big]^2}}$$

Retrieval Models: Latent Semantic Indexing

Latent Semantic Indexing (LSI): explore the correlation between terms and documents

- Two terms are correlated (may share similar semantic concepts) if they often co-occur
- Two documents are correlated (share similar topics) if they have many common words

LSI associates each term and document with a small number of semantic concepts/topics.
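Cosine similarity and the lnc.ltc weighting can be sketched over sparse term → weight dictionaries. This follows the slide's convention that the first triple (lnc) describes the query and the second (ltc) the document. It is a minimal sketch under stated assumptions:

- The natural logarithm is used; the slide does not fix a base, and the base changes the "l" values.
- The document frequencies and counts in the example are made up.
- Every document term must appear in `df`.

```python
import math
from collections import Counter


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """cos(theta) = (u . v) / (|u| |v|) for sparse term -> weight vectors."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def l_weight(tf: int) -> float:
    """'l' component: log(tf) + 1 for tf > 0 (natural log assumed), else 0."""
    return math.log(tf) + 1.0 if tf > 0 else 0.0


def lnc_ltc(query_tf: Counter, doc_tf: Counter, df: dict[str, int], n_docs: int) -> float:
    """Query weighted lnc (l, no idf); document weighted ltc (l times log(N/df)).
    The 'c' (cosine normalization) of both vectors is the division inside cosine()."""
    q = {t: l_weight(tf) for t, tf in query_tf.items()}
    d = {t: l_weight(tf) * math.log(n_docs / df[t]) for t, tf in doc_tf.items()}
    return cosine(q, d)


print(cosine({"java": 1.0, "sun": 1.0}, {"java": 2.0, "sun": 2.0}))  # about 1.0: same direction
print(cosine({"java": 1.0}, {"starbucks": 1.0}))                    # 0.0: no shared terms
df = {"thailand": 1, "stock": 2, "market": 2}  # hypothetical document frequencies
print(lnc_ltc(Counter(thailand=1, stock=1), Counter(thailand=1, market=1), df, n_docs=4))
# 2 / sqrt(10), about 0.632
```

Dividing by both norms inside `cosine` gives the same result as cosine-normalizing each vector first and then taking a plain dot product. A term that occurs in every document gets an ltc weight of log(1) = 0 and drops out of the score.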

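The co-occurrence intuition behind LSI can be illustrated with the term-document matrix $A$ (terms × documents). Entry $(i, k)$ of $A A^T$ is large when terms $i$ and $k$ co-occur. Entry $(j, l)$ of $A^T A$ is large when documents $j$ and $l$ share many words. LSI itself goes further: it applies a truncated singular value decomposition to $A$ (whose left singular vectors are eigenvectors of $A A^T$) to map terms and documents into a few concept dimensions. This pure-Python sketch stops before that SVD step. The toy documents are made up and reuse the Java, Sun and Starbucks terms from the vector-representation slide.

```python
def term_doc_matrix(docs: list[str]) -> tuple[list[str], list[list[int]]]:
    """Rows = terms (sorted vocabulary), columns = documents, entries = raw counts."""
    tokenized = [d.lower().split() for d in docs]
    vocab = sorted({t for toks in tokenized for t in toks})
    matrix = [[toks.count(term) for toks in tokenized] for term in vocab]
    return vocab, matrix


def gram(rows: list[list[int]]) -> list[list[int]]:
    """rows times rows^T: entry (i, k) is the dot product of row i and row k."""
    return [[sum(a * b for a, b in zip(r1, r2)) for r2 in rows] for r1 in rows]


def transpose(m: list[list[int]]) -> list[list[int]]:
    return [list(col) for col in zip(*m)]


docs = ["java sun", "java sun coffee", "starbucks coffee"]
vocab, A = term_doc_matrix(docs)
term_corr = gram(A)            # A A^T: term-term co-occurrence
doc_corr = gram(transpose(A))  # A^T A: document-document word overlap
i, j, s = vocab.index("java"), vocab.index("sun"), vocab.index("starbucks")
print(term_corr[i][j], term_corr[i][s])  # 2 0
print(doc_corr)                          # [[2, 2, 0], [2, 3, 1], [0, 1, 2]]
```

"java" and "sun" co-occur in two documents, while "java" and "starbucks" never do. These are the correlations that the SVD in LSI compresses into shared concept dimensions.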