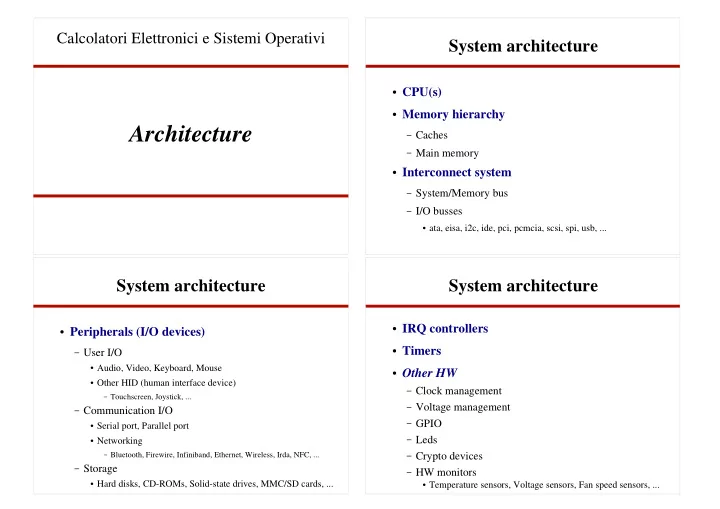

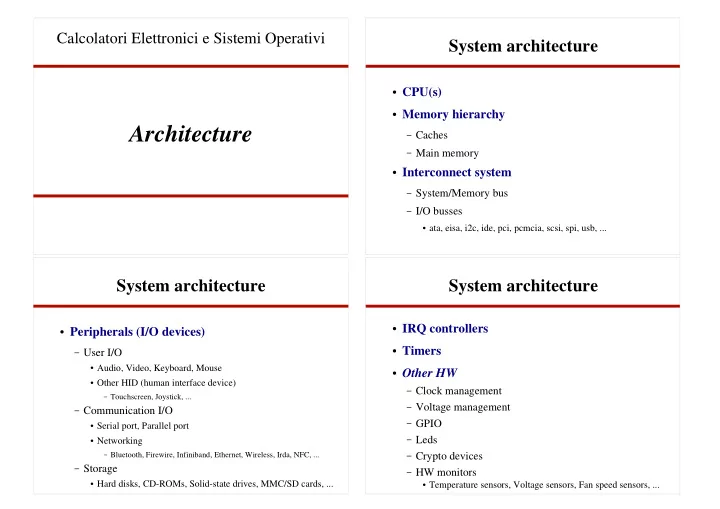

Calcolatori Elettronici e Sistemi Operativi

Architecture

System architecture

- CPU(s)
- Memory hierarchy
  - Caches
  - Main memory
- Interconnect system
  - System/Memory bus
  - I/O buses
    - ata, eisa, i2c, ide, pci, pcmcia, scsi, spi, usb, ...
- IRQ controllers
- Timers
- Other HW
  - Clock management
  - Voltage management
  - GPIO
  - LEDs
  - Crypto devices
  - HW monitors
    - Temperature sensors, Voltage sensors, Fan speed sensors, ...
- Peripherals (I/O devices)
  - User I/O
    - Audio, Video, Keyboard, Mouse
    - Other HID (human interface device)
      - Touchscreen, Joystick, ...
  - Communication I/O
    - Serial port, Parallel port
    - Networking
      - Bluetooth, FireWire, InfiniBand, Ethernet, Wireless, IrDA, NFC, ...
  - Storage
    - Hard disks, CD-ROMs, Solid-state drives, MMC/SD cards, ...

Architecture: Memory hierarchy

System architecture (example)

[Figure: example system. Labels: µP, cache, Memory, System bus, HW, I/O, I/O bus, with several I/O devices attached to the I/O bus.]

Memory Hierarchy

- CPU / Memory speed mismatch
  - Memory access time
    - 1-2 cycles → high cost (area/energy/$)
    - 5-10 cycles
    - 50-500 cycles
  - Many accesses for small areas
- Program characteristics:
  - Predictability / Structure / Linear data structures / Sequential flow
- Principle of locality / Locality of reference
  - Temporal locality
    - an accessed memory location is likely to be accessed again in the near future
  - Spatial locality
    - after accessing memory location X, it is probable that a program will access locations X±1, X±2, ..., X±n (n small)

Memory Hierarchy

From small, expensive and fast (top) to big, cheap and slow (bottom):

- CPU registers
- Cache (one or more levels, on- and off-chip)
- RAM
- Mass storage (HDD, Flash)
- Backups (Tape)

Memory structure

[Figure: memory block diagram. Labels: Address, Row Decoder, Memory Array, Line precharge, Data in (write), Sense amplifiers (read), MUX, Data out.]

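SRAM cell

[Figure: SRAM cell schematic. Labels: V_DD, GND, WL, BL, BL_b, transistors P1, P2, N1, N2, N3, N4.]

1. precharge bitlines
   - read: V_DD/2
   - write: V_DD/2 plus a small offset on one bitline, V_DD/2 minus a small offset on the other
2. address wordlines
   - write: keep bitlines driven

DRAM cell

[Figure: DRAM cell schematic. Labels: BL, WL.]

- Small
- Destructive read → restore data after each read
- Need refresh

DRAM access

- usually the address is sent in two phases (ROW, then COL)

[Figure: DRAM access timing diagram. Signals: ADDR (ROW, COL), -RAS, -CAS, DATA. Marked intervals: RAS time, CAS time, Column Address hold time, RAS access time.]

Memory Hierarchy

[Figure: hierarchy levels from the CPU outwards: CPU REGS, L1, L2, L3, DRAM, HDD, TAPE.]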

Cache

[Figure: a cache drawn as a table of N entries (0 ... N-1) with ADDRESS and DATA columns. Cache-Hit: ADDRESS = i is present and the cache returns DATA = 123. Cache-Miss: ADDRESS = i is not present; fetching DATA = 123 from the next level costs the Miss Penalty (time/energy).]

- Read
  - Hit
  - Miss
    - Read data from next levels
    - Read a whole line (exploits spatial locality)
- Write
  - Hit
    - Write-through
    - Write-back
  - Miss
    - Write-allocate
    - Write-no-allocate

Average access cost (time/energy), with MR the miss rate and MP the miss penalty:

$\langle Access \rangle = Access_{cache} \cdot (1 + MR \cdot MP)$
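As a rough illustration of the average-access formula above, the sketch below evaluates it for one set of made-up numbers. It assumes MP is expressed as a multiple of the cache access cost, which is what the product form suggests; the function name and the sample values are hypothetical.

```python
def average_access(access_cache: float, miss_rate: float, miss_penalty: float) -> float:
    """Return <Access> = Access_cache * (1 + MR * MP).

    Assumption: miss_penalty (MP) is a multiple of the cache access cost,
    so the result has the same unit (cycles, ns, energy) as access_cache.
    """
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be in [0, 1]")
    return access_cache * (1.0 + miss_rate * miss_penalty)


# Hypothetical numbers: 2-cycle cache, 5% miss rate, a miss costs 50 cache accesses.
avg_cycles = average_access(2.0, 0.05, 50.0)
print(avg_cycles)
```

With these numbers the miss term (MR·MP = 2.5) dominates the hit cost, which is why even small miss-rate reductions matter.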

Cache

- Associative
  - addressed by content
    - CAM (content addressable memories)
    - standard memories + control

[Figure: associative cache. Each line stores V, ADDRESS and DATA; the requested ADDRESS is compared (COMP) against every stored address to produce HIT/MISS. V: Valid.]

Cache

- Direct-Mapped

[Figure: direct-mapped cache. The ADDRESS is split into {TAG, IDX, DSP}; IDX selects a LINE holding V, TAG and DATA; COMP compares the stored TAG with the address TAG to produce HIT/MISS; DSP drives a MUX that selects one of the data words in the cache line.]

- $Lsize = 2^{\#DSP}$ (or block size): more data words in a cache line
- $Lines = 2^{\#IDX}$ (or blocks)
- Cache Size = Lines · Lsize (DATA Size)
- Actual size = Cache Size + (TAG+V) · Lines
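The TAG/IDX/DSP split and the two size formulas for a direct-mapped cache can be sketched as follows. This is a minimal illustration, assuming a byte-addressed memory (so Lsize is in bytes) and one valid bit per line; the class name and the 32-bit example geometry are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DirectMappedGeometry:
    address_bits: int  # #ADDRESS
    idx_bits: int      # #IDX, Lines = 2**idx_bits
    dsp_bits: int      # #DSP, Lsize = 2**dsp_bits

    @property
    def lines(self) -> int:
        return 1 << self.idx_bits

    @property
    def lsize(self) -> int:
        return 1 << self.dsp_bits

    @property
    def tag_bits(self) -> int:
        return self.address_bits - self.idx_bits - self.dsp_bits

    def split(self, address: int) -> tuple[int, int, int]:
        """Return (TAG, IDX, DSP) for an address."""
        dsp = address & (self.lsize - 1)
        idx = (address >> self.dsp_bits) & (self.lines - 1)
        tag = address >> (self.dsp_bits + self.idx_bits)
        return tag, idx, dsp

    def data_size_bytes(self) -> int:
        # Cache Size = Lines * Lsize (assumes byte addressing)
        return self.lines * self.lsize

    def actual_size_bits(self) -> int:
        # Actual size = data bits + (TAG + V) * Lines, with V = 1 bit
        return 8 * self.data_size_bytes() + (self.tag_bits + 1) * self.lines


# Hypothetical geometry: 32-bit addresses, 256 lines of 16 bytes.
geo = DirectMappedGeometry(address_bits=32, idx_bits=8, dsp_bits=4)
print(geo.split(0x12345678), geo.data_size_bytes(), geo.actual_size_bits())
```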

Cache

- Direct-Mapped
  - More memory addresses are (statically) mapped to the same cache line

[Figure: memory addresses 0000 0001, 0001 0001, 0010 0001, 0011 0001 (fields TAG, IDX, DSP) all mapping to the same LINE of the DM-Cache.]

Cache

- Set-Associative

[Figure: set-associative cache. The ADDRESS is split into TAG, IDX, DSP; IDX selects one line in each of the n ways (V, TAG, DATA); one COMP per way produces H1, H2, ..., Hn; a MUX selects the DATA of the matching way. HIT = H1 + H2 + ... + Hn.]

- $\#TAG = \#ADDRESS - \#IDX - \#DSP$
- $Lines = 2^{\#IDX}$
- $Lsize = 2^{\#DSP}$
- Cache Size = nWays · Lines · Lsize (DATA Size)
- Actual size = Cache Size + [ (TAG+V) · Lines · nWays ]
- nWays = 1 → Direct-Mapped
- Lines = 1 → Associative

Cache

- Replacement
  - LRU
    - counters or shift registers (nWays x Lines)
    - pseudo LRU
  - FIFO
  - Random
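The set-associative formulas and the HIT = H1 + H2 + ... + Hn logic can be sketched as below. This is an illustrative model only: it assumes byte addressing and one valid bit per line, and the function names and sample values are hypothetical.

```python
def set_assoc_geometry(address_bits: int, idx_bits: int, dsp_bits: int, n_ways: int) -> dict:
    """Apply the slide formulas for a set-associative cache."""
    lines = 1 << idx_bits                               # Lines = 2**#IDX
    lsize = 1 << dsp_bits                               # Lsize = 2**#DSP
    tag_bits = address_bits - idx_bits - dsp_bits       # #TAG
    cache_size = n_ways * lines * lsize                 # bytes (assumes byte addressing)
    overhead_bits = (tag_bits + 1) * lines * n_ways     # (TAG + V) per line and way
    return {
        "lines": lines,
        "lsize": lsize,
        "tag_bits": tag_bits,
        "cache_size_bytes": cache_size,
        "overhead_bits": overhead_bits,
    }


def lookup(ways, tag):
    """ways: one (valid, stored_tag, data) tuple per way for the selected line.

    Returns (hit, data); data is None on a miss.
    """
    hits = [valid and stored_tag == tag for valid, stored_tag, _data in ways]
    hit = any(hits)                                                # HIT = H1 + ... + Hn
    data = next((w[2] for h, w in zip(hits, ways) if h), None)     # output MUX
    return hit, data


# Hypothetical 2-way cache: 32-bit addresses, 128 lines per way, 16-byte lines.
geometry = set_assoc_geometry(32, 7, 4, 2)
example_set = [(True, 0x1A, "x"), (True, 0x2B, "y")]
print(geometry, lookup(example_set, 0x2B))
```

Setting `n_ways=1` reproduces the direct-mapped sizes, and `idx_bits=0` (a single line per way) gives the fully associative case.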

Cache

- Replacement
  - LRU
    - counters or shift registers (nWays x Lines)
    - pseudo LRU

LRU stack for line i: insert the last accessed way, shift other values.

| access | reg-0 | reg-1 | reg-2 | reg-3 |
|---|---|---|---|---|
| initial | 0 | 1 | 2 | 3 |
| 3 | 3 | 0 | 1 | 2 |
| 1 | 1 | 3 | 0 | 2 |
| 3 | 3 | 1 | 0 | 2 |
| 3 | 3 | 1 | 0 | 2 |

Counters for line i: reset the last accessed way's counter, increment counters below the modified one.

| access | way-0 | way-1 | way-2 | way-3 |
|---|---|---|---|---|
| initial | 0 | 1 | 2 | 3 |
| 3 | 1 | 2 | 3 | 0 |
| 1 | 2 | 0 | 3 | 1 |
| 3 | 2 | 1 | 3 | 0 |
| 3 | 2 | 1 | 3 | 0 |

Pseudo LRU: 4 ways, 3 bits (B0, B1, B2) for each line.

| (B0,B1,B2) | Action | Update | New (B0,B1,B2) |
|---|---|---|---|
| 00x | replace way 0 | B0 = not B0, B1 = not B1 | 11x |
| 01x | replace way 1 | B0 = not B0, B1 = not B1 | 10x |
| 1x0 | replace way 2 | B0 = not B0, B2 = not B2 | 0x1 |
| 1x1 | replace way 3 | B0 = not B0, B2 = not B2 | 0x0 |

[Figure: decision tree. B0 = 0 selects B1, B0 = 1 selects B2; B1 = 0/1 selects way 0/way 1; B2 = 0/1 selects way 2/way 3.]

Cache

- Misses (3-C's model)
  - Compulsory
    - cold-start miss
  - Capacity
    - miss in a fully associative cache
  - Conflict (Collision)
    - miss that would not have happened in a fully associative cache
  - associative caches do not have conflict misses
  - too many conflicts: thrashing
  - conflict misses can avoid capacity misses

Cache

Conflict misses can avoid capacity misses: repeated, sequential accesses from 0 to B (B+1 bytes).

1. Associative cache, size B, LS=4
   - access to 0: miss (compulsory) → insert the whole line (addresses 0,1,2,3)
   - access to 1,2,3: hit
   - access to 4: miss (compulsory) → insert the whole line (addresses 4,5,6,7)
   - ...
   - access to B: miss (capacity) → replace reference to addresses 0,1,2,3
   - access to 0: miss (capacity) → replace reference to addresses 4,5,6,7
   - access to 1,2,3: hit
   - access to 4: miss (capacity)
   - ...
   - MR ~ 0.25 ( MR = (B/4 + 1)/(B+1) )
2. DM cache, size B, LS=4
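The LRU stack and the 4-way tree pseudo-LRU described above can be sketched in a few lines. The update rule in `touch` follows the replacement table (the bits on the path end up pointing away from the way just used); applying the same update on hits is an assumption, since the slides only show the replacement case. The class and function names are hypothetical.

```python
def lru_stack_access(stack, way):
    """True LRU as a stack: put `way` on top (index 0), shift the others down.

    The last entry is the least recently used way.
    """
    return [way] + [w for w in stack if w != way]


class TreePLRU4:
    """Tree pseudo-LRU for one line of a 4-way cache, bits (B0, B1, B2)."""

    def __init__(self):
        self.b = [0, 0, 0]

    def victim(self) -> int:
        b0, b1, b2 = self.b
        if b0 == 0:
            return 0 if b1 == 0 else 1   # 00x -> way 0, 01x -> way 1
        return 2 if b2 == 0 else 3       # 1x0 -> way 2, 1x1 -> way 3

    def touch(self, way: int) -> None:
        """Make the bits point away from `way` (assumed for hits as well)."""
        if way < 2:
            self.b[0] = 1
            self.b[1] = 1 - way          # way 0 -> B1 = 1, way 1 -> B1 = 0
        else:
            self.b[0] = 0
            self.b[2] = 3 - way          # way 2 -> B2 = 1, way 3 -> B2 = 0


# Reproduce the LRU stack table: accesses 3, 1, 3, 3 starting from 0 1 2 3.
stack = [0, 1, 2, 3]
for accessed in (3, 1, 3, 3):
    stack = lru_stack_access(stack, accessed)

# Four consecutive replacements starting from (B0, B1, B2) = 000.
plru = TreePLRU4()
victims = []
for _ in range(4):
    v = plru.victim()
    victims.append(v)
    plru.touch(v)
print(stack, victims)
```

Pseudo-LRU needs only 3 bits per line instead of a full ordering of the 4 ways, at the price of occasionally evicting a way that is not the true LRU one.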

Cache

- Rules of thumb
  - MR(DM, size N) ~ MR(2-ways, size N/2)
  - 4 x size → ½ miss rate
  - Enlarging Lsize:
    - decreases MR
    - increases MP

[Figure: SPEC92 miss rate (MR%, axis 0 to 10) versus line size LS (16, 32, 64, 128, 256), one curve per cache size: 4K, 16K, 64K, 256K.]

Cache

Conflict misses can avoid capacity misses: repeated, sequential accesses from 0 to B (B+1 bytes).

1. Associative cache, size B, LS=4
   - MR = 0.25
2. DM cache, size B, LS=4
   - access to 0: miss (compulsory) → insert the whole line (addresses 0,1,2,3)
   - access to 1,2,3: hit
   - access to 4: miss (compulsory) → insert the whole line (addresses 4,5,6,7)
   - ...
   - access to B: miss (conflict) → replace reference to addresses 0,1,2,3
   - access to 0: miss (conflict) → replace the line holding B, which shares the cache line of addresses 0,1,2,3
   - access to 1,2,3: hit
   - access to 4: hit
   - ...
   - after the first pass, each pass has 2 misses → miss rate 2/(B+1)
   - after N further passes: MR = [0.25 + N · 2/(B+1)] / (N+1)

Cache

- Stack Distance
  - program memory references
    - addr_1, addr_2, addr_3, ..., addr_n
  - push references in a stack
    - (removing from stack if already present)
  - stack distance of reference R
    - position in stack (if present)
    - ∞ (if not present)

Example: for the references 100, 104, 108, 100, 108 the last reference finds 108 at position 1, so SD(108) = 1. The stack afterwards is:

| position | address |
|---|---|
| 0 | 108 |
| 1 | 100 |
| 2 | 104 |
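The sequential-access example (fully associative versus direct-mapped, size B, LS=4) can be checked with a small simulation. This is a sketch under stated assumptions: the fully associative cache uses LRU replacement, B = 64 and 10 passes are arbitrary choices, and the function names are hypothetical.

```python
from collections import OrderedDict


def misses_fully_associative(trace, lines, lsize):
    """Count misses in a fully associative cache with LRU replacement."""
    cache = OrderedDict()  # keys are line numbers, ordered from LRU to MRU
    misses = 0
    for addr in trace:
        line = addr // lsize
        if line in cache:
            cache.move_to_end(line)        # mark as most recently used
        else:
            misses += 1
            if len(cache) == lines:
                cache.popitem(last=False)  # evict the LRU line
            cache[line] = True
    return misses


def misses_direct_mapped(trace, lines, lsize):
    """Count misses in a direct-mapped cache."""
    slots = [None] * lines
    misses = 0
    for addr in trace:
        line = addr // lsize
        idx = line % lines                 # static mapping to one cache line
        if slots[idx] != line:
            misses += 1
            slots[idx] = line
    return misses


B, LS, PASSES = 64, 4, 10
trace = [a for _ in range(PASSES) for a in range(B + 1)]
fa_misses = misses_fully_associative(trace, B // LS, LS)
dm_misses = misses_direct_mapped(trace, B // LS, LS)
print(fa_misses / len(trace), dm_misses / len(trace))
```

In this run the associative cache misses on every new line in every pass (B/4 + 1 misses per pass), while the direct-mapped cache misses only twice per pass after the first one, because the conflict on line 0 protects the other lines from eviction.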

Cache

- Stack Distance

D: stack distance, L: Lines, W: nWays.

$$P_{HIT} = \sum_{a=0}^{W-1} \binom{D}{a} \left(\frac{1}{L}\right)^{a} \left(\frac{L-1}{L}\right)^{D-a}$$

DM cache:

$$P_{HIT} = \left(\frac{L-1}{L}\right)^{D}$$

- D=0 → $P_{HIT} = 1$ (two consecutive refs)
- D=1 → access sequence: addr, other, addr
  - miss if other replaced addr
  - Hyp: uniform distribution of cache line accesses
  - $P_{MISS} = 1/L$
  - $P_{HIT} = 1 - 1/L = (L-1)/L$
- D: → probability that other_1, other_2, ..., other_D did not evict addr
  - $P_{HIT} = P_{HIT}(D=1)^{D} = [(L-1)/L]^{D}$

Cache

- Multi-level cache
  - Inclusive: data in L1 are in L2, in ... too
  - Exclusive: data are in L1, or in L2, or ... (only one)
  - Mainly inclusive (intermediate)
  - Victim cache
  - L0 cache
  - Loop cache
  - ...
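Returning to the stack-distance model, the sketch below computes stack distances for a reference trace and evaluates the direct-mapped hit probability [(L-1)/L]^D. The set-associative function assumes a binomial model (a hit occurs when fewer than W of the D intervening references fall in the same set, each with probability 1/L); treat that part as an assumption rather than as the slides' exact formula. All function names are hypothetical.

```python
import math


def stack_distances(trace):
    """Return the stack distance of each reference (math.inf if not present)."""
    stack = []  # index 0 is the top, i.e. the most recent reference
    distances = []
    for addr in trace:
        if addr in stack:
            d = stack.index(addr)   # position in stack
            stack.remove(addr)      # remove before pushing again
        else:
            d = math.inf
        distances.append(d)
        stack.insert(0, addr)
    return distances


def p_hit_direct_mapped(d: int, lines: int) -> float:
    """P_HIT = ((L-1)/L)**D, assuming uniformly distributed line accesses."""
    return ((lines - 1) / lines) ** d


def p_hit_set_associative(d: int, lines: int, ways: int) -> float:
    """Assumed binomial model: fewer than `ways` of the d references conflict."""
    p = 1.0 / lines
    return sum(math.comb(d, a) * p**a * (1.0 - p) ** (d - a) for a in range(ways))


distances = stack_distances([100, 104, 108, 100, 108])
print(distances, p_hit_direct_mapped(1, 256), p_hit_set_associative(4, 256, 2))
```

With one way the binomial sum collapses to its a = 0 term, which is the direct-mapped expression, so the two functions agree for `ways=1`.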

Architecture: Memory hierarchy, Cache coherence

Cache coherence

- Several caches
  - parallel architectures
- Same data stored in more than one cache
- Writes on a cache: what to do with other copies?

[Figure: four µP, each with its own cache ($) and memory (mem), holding private copies of the same datum. Annotation: enforce data coherence on private changes.]

Cache coherence

- Software based
  - compiler or OS support
  - with or without HW assistance
  - tough problem
    - need perfect information
- Hardware based
