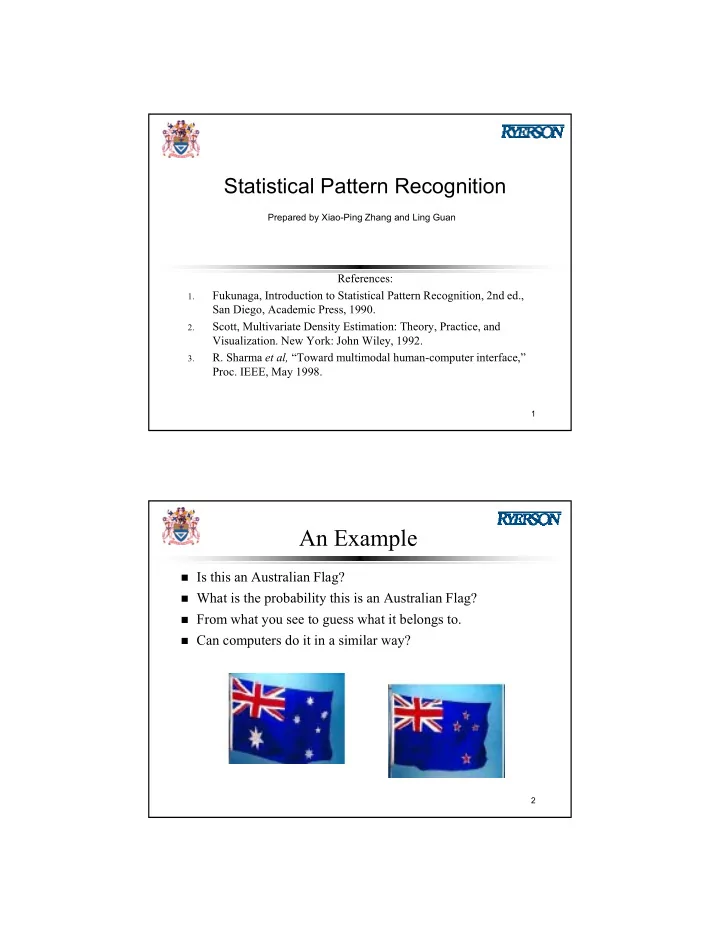

Statistical Pattern Recognition

Prepared by Xiao-Ping Zhang and Ling Guan

References:
1. Fukunaga, Introduction to Statistical Pattern Recognition, 2nd ed. San Diego: Academic Press, 1990.
2. Scott, Multivariate Density Estimation: Theory, Practice, and Visualization. New York: John Wiley, 1992.
3. R. Sharma et al., “Toward multimodal human-computer interface,” Proc. IEEE, May 1998.

An Example
● Is this an Australian flag?
● What is the probability that this is an Australian flag?
● From what we see, we guess what it belongs to.
● Can computers do it in a similar way?

Bayesian Decision Theory
● In a nutshell...
● Use a training set of samples of the data to derive some basic probabilistic relationships
● Use these probabilities to make a decision about how to categorise the next (unseen) data sample

Classification Example
● Salmon vs. sea bass

Simple approach
● Consider a world comprised of states of nature $\omega_i$
● State of nature $\omega_i$ = category/class
● Assume only 2 types of fish (salmon and sea bass, as in the classification example)
● Basic way of deciding which category an unknown fish belongs to: use PRIOR knowledge

Simple approach [2]

Better Approach?
● Recap: observations aid decisions

Bayesian Decision Theory (BDT)
● Use observations to condition our decisions rather than rely on fixed thresholds based on prior knowledge

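Conditional Probability

$$P(x \mid \omega_1) = \frac{P(x, \omega_1)}{P(\omega_1)}, \qquad P(\omega_1 \mid x) = \frac{P(x, \omega_1)}{P(x)}$$

[Figure: Venn diagram of the events $\omega_1$ and $x$]

Conditional Probability [2]
● More generally, for classes $\omega_1, \dots, \omega_c$:

[Figure: sample space partitioned into $\omega_1, \omega_2, \omega_3, \omega_4, \dots, \omega_c$, with the observation $x$ overlapping the classes]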

Bayes Rule

$$P(\omega_i \mid x) = \frac{p(x \mid \omega_i)\, P(\omega_i)}{p(x)}, \qquad \text{where } p(x) = \sum_{j=1}^{c} p(x \mid \omega_j)\, P(\omega_j)$$

BDT
● Model likelihoods from an initial sample set
● Use likelihood models + BAYES rule to estimate posteriors for a given sample
● Use posteriors to make a decision about which class the sample belongs to
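The three BDT steps can be sketched in a few lines. This is a minimal illustration, not part of the original slides: the function name `posteriors` and the likelihood and prior values are hypothetical numbers chosen for the salmon / sea bass example.

```python
def posteriors(likelihoods, priors):
    """Return P(w_i | x) for every class using Bayes rule.

    likelihoods[i] is p(x | w_i) evaluated at the observed x,
    priors[i] is P(w_i).
    """
    joint = [lik * pri for lik, pri in zip(likelihoods, priors)]
    evidence = sum(joint)  # p(x) = sum_j p(x | w_j) P(w_j)
    return [j / evidence for j in joint]


# Hypothetical values: p(x | salmon) = 0.6, p(x | sea bass) = 0.2,
# with priors P(salmon) = 0.3 and P(sea bass) = 0.7.
post = posteriors([0.6, 0.2], [0.3, 0.7])
print(post)  # posteriors for (salmon, sea bass); they sum to 1
```

Although sea bass has the larger prior here, the observation shifts the posterior towards salmon, which is the point of conditioning the decision on what is observed.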

Decision Theory
● Concerned with the relationship between the choice of decision boundary and associated cost
– Threshold = decision boundary
● Decision boundary
– Chosen to minimise some notion of cost
● Cost ↔ Error / Loss

Cost, Error & Loss
● In general, we want to reduce the chances of making bad decisions!
● What constitutes a bad decision?

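Bayes Decision Rule
● Decide $\omega_1$ if $P(\omega_1 \mid x) > P(\omega_2 \mid x)$; otherwise decide $\omega_2$
● What is the error incurred for each decision we make?

$$P(\text{error} \mid x) = \begin{cases} P(\omega_1 \mid x) & \text{if we decide } \omega_2 \\ P(\omega_2 \mid x) & \text{if we decide } \omega_1 \end{cases}$$

● How to minimise error?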
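A minimal sketch of the rule, assuming the posteriors have already been computed: picking the class with the largest posterior leaves the smallest possible conditional error, which is one minus that posterior. The function name `bayes_decide` and the numbers are illustrative assumptions.

```python
def bayes_decide(post):
    """Pick the class with the largest posterior.

    Returns (class index, P(error | x)), where the conditional error
    is the total posterior mass of the classes that were not chosen.
    """
    k = max(range(len(post)), key=lambda i: post[i])
    return k, 1.0 - post[k]


# Hypothetical posteriors P(w_1 | x) = 0.5625 and P(w_2 | x) = 0.4375.
decision, p_error = bayes_decide([0.5625, 0.4375])
print(decision, p_error)
```

Any other choice at this x would incur the larger posterior as its error, so no rule can do better pointwise.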

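Average probability of error
● Why is the Bayes decision rule good in this case?

Bayes Rule
Posterior probabilities (notation change: $\omega_i \to C_k$)

$$P(C_k \mid \mathbf{x}) = \frac{p_X(\mathbf{x} \mid C_k)\, P(C_k)}{p_X(\mathbf{x})}$$

$$p_X(\mathbf{x}) = \sum_{k=1}^{M} p_X(\mathbf{x} \mid C_k)\, P(C_k), \qquad \sum_{k=1}^{M} P(C_k \mid \mathbf{x}) = 1$$

● $P(C_k \mid \mathbf{x})$: posterior
● $p_X(\mathbf{x} \mid C_k)$: likelihood
● $P(C_k)$: prior
● $p_X(\mathbf{x})$: normalization factor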

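Definitions
● $P(C_k \mid \mathbf{x})$ – posterior: given the observed value $\mathbf{x}$, the probability that $\mathbf{x}$ belongs to class $C_k$
● $p_X(\mathbf{x} \mid C_k)$ – likelihood: given that the sample is from class $C_k$, the probability that $\mathbf{x}$ is observed
● $P(C_k)$ – prior: the probability that samples from class $C_k$ are observed (discrete values)
● $p_X(\mathbf{x})$ – normalization factor: the probability that $\mathbf{x}$ is observed

Minimum Error Probability Decision
Decision rule:

$$R_k:\ \mathbf{x} \in C_k \ \text{ if } \ P(C_k \mid \mathbf{x}) > P(C_j \mid \mathbf{x}), \quad \forall j \neq k$$

Error probability of misclassification:

$$P_e = \sum_{k} \sum_{j \neq k} P(\mathbf{x} \in R_k, C_j) = \sum_{k} \sum_{j \neq k} P(\mathbf{x} \in R_k \mid C_j)\, P(C_j) = \sum_{k} \sum_{j \neq k} \int_{R_k} p_X(\mathbf{x} \mid C_j)\, P(C_j)\, d\mathbf{x}$$

Given $\mathbf{x}$, the above decision rule minimizes $\sum_{j \neq k} p_X(\mathbf{x} \mid C_j)\, P(C_j)$: assigning $\mathbf{x}$ to the class with the largest $p_X(\mathbf{x} \mid C_k)\, P(C_k)$ kicks the largest term out of the error sum.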

Discriminant Functions
Define a set of discriminant functions $y_1(\mathbf{x}), \dots, y_M(\mathbf{x})$ such that

$$R_k:\ \mathbf{x} \in C_k \ \text{ if } \ y_k(\mathbf{x}) > y_j(\mathbf{x}), \quad \forall j \neq k$$

Choose

$$y_k(\mathbf{x}) = P(C_k \mid \mathbf{x}) \quad \text{or} \quad y_k(\mathbf{x}) = p_X(\mathbf{x} \mid C_k)\, P(C_k)$$

We can also write

$$y_k(\mathbf{x}) = \ln p_X(\mathbf{x} \mid C_k) + \ln P(C_k)$$

Maximum Likelihood (ML) Decision
If the a priori probabilities $P(C_k)$ of the classes are not known, we assume that they are uniformly distributed (maximum entropy), $P(C_1) = P(C_2) = \dots$, then

$$R_k:\ \mathbf{x} \in C_k \ \text{ if } \ p_X(\mathbf{x} \mid C_k) > p_X(\mathbf{x} \mid C_j), \quad \forall j \neq k$$

Log-likelihood function (maximum likelihood):

$$L(\mathbf{x}) = \ln p_X(\mathbf{x} \mid C_k)$$
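The difference between the log-discriminant decision and the ML decision can be seen on a small example. This is a sketch under assumed numbers: the function names and the likelihood and prior values are hypothetical.

```python
import math


def discriminant_decision(likelihoods, priors):
    """Decide with y_k(x) = ln p(x | C_k) + ln P(C_k)."""
    scores = [math.log(lik) + math.log(pri)
              for lik, pri in zip(likelihoods, priors)]
    return max(range(len(scores)), key=lambda k: scores[k])


def ml_decision(likelihoods):
    """Decide with L(x) = ln p(x | C_k) only (priors assumed uniform)."""
    scores = [math.log(lik) for lik in likelihoods]
    return max(range(len(scores)), key=lambda k: scores[k])


# Hypothetical values: class 0 explains x slightly better,
# but class 1 is four times more common a priori.
liks = [0.6, 0.4]
with_priors = discriminant_decision(liks, [0.2, 0.8])
uniform = discriminant_decision(liks, [0.5, 0.5])
ml = ml_decision(liks)
print(with_priors, uniform, ml)
```

With uniform priors the term ln P(C_k) is the same for every class, so the two rules agree; with unequal priors they can disagree, as in this example.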

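The Gaussian Distribution (1)
PDF

$$p_X(\mathbf{x} \mid C_k) = \frac{1}{(2\pi)^{d/2}\, |\Sigma_k|^{1/2}} \exp\left\{ -\frac{1}{2} (\mathbf{x} - \mathbf{m}_k)^T \Sigma_k^{-1} (\mathbf{x} - \mathbf{m}_k) \right\}$$

$$\mathbf{m}_k = E[\mathbf{x}], \qquad \Sigma_k = E[(\mathbf{x} - \mathbf{m}_k)(\mathbf{x} - \mathbf{m}_k)^T] \quad \text{(expectations over samples of class } C_k\text{)}$$

Discriminant function (dropping the constant $-\frac{d}{2}\ln 2\pi$, which is the same for every class):

$$y_k(\mathbf{x}) = \ln p_X(\mathbf{x} \mid C_k) + \ln P(C_k) = -\frac{1}{2} (\mathbf{x} - \mathbf{m}_k)^T \Sigma_k^{-1} (\mathbf{x} - \mathbf{m}_k) - \frac{1}{2} \ln |\Sigma_k| + \ln P(C_k)$$

The quadratic form $(\mathbf{x} - \mathbf{m}_k)^T \Sigma_k^{-1} (\mathbf{x} - \mathbf{m}_k)$ is the (squared) Mahalanobis distance.

The Gaussian Distribution (2)
Assume the components of $\mathbf{x}$ are iid (independent & identically distributed) with unit variance, then $\Sigma_k$ becomes an identity matrix $I$.
And the discriminant function becomes

$$y_k(\mathbf{x}) = \ln p_X(\mathbf{x} \mid C_k) + \ln P(C_k) = -\frac{1}{2} (\mathbf{x} - \mathbf{m}_k)^T (\mathbf{x} - \mathbf{m}_k) + \ln P(C_k)$$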
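A minimal sketch of the Gaussian discriminant for two-dimensional data, written with the standard library only. The 2x2 restriction, the function names, and the example mean, covariance, and prior are assumptions made for illustration; the class-independent constant is dropped.

```python
import math


def mahalanobis_sq(x, mean, cov):
    """Squared Mahalanobis distance (x - m)^T cov^{-1} (x - m) for 2x2 cov."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    dx0, dx1 = x[0] - mean[0], x[1] - mean[1]
    # Inverse of [[a, b], [c, d]] is [[d, -b], [-c, a]] / det.
    return (d * dx0 * dx0 - (b + c) * dx0 * dx1 + a * dx1 * dx1) / det


def gaussian_discriminant(x, mean, cov, prior):
    """y_k(x) = -1/2 * Mahalanobis^2 - 1/2 * ln|cov| + ln P(C_k)."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    return (-0.5 * mahalanobis_sq(x, mean, cov)
            - 0.5 * math.log(det)
            + math.log(prior))


# Hypothetical class: zero mean, diagonal covariance, prior 0.5.
cov = ((2.0, 0.0), (0.0, 1.0))
d2 = mahalanobis_sq((1.0, 0.0), (0.0, 0.0), cov)
y = gaussian_discriminant((1.0, 0.0), (0.0, 0.0), cov, 0.5)
print(d2, y)
```

With the identity matrix as covariance, `mahalanobis_sq` reduces to the squared Euclidean distance and the log-determinant term vanishes, which matches the simplified discriminant.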

The Gaussian Distribution (3)
Since $P(C_k)$ is assumed uniformly distributed (maximum entropy), $\ln P(C_k)$ is a constant. Then what is left is to maximize

$$-(\mathbf{x} - \mathbf{m}_k)^T (\mathbf{x} - \mathbf{m}_k) = -\|\mathbf{x} - \mathbf{m}_k\|^2$$

This is equivalent to minimizing the Euclidean distance between $\mathbf{x}$ and $\mathbf{m}_k$:

$$(\mathbf{x} - \mathbf{m}_k)^T (\mathbf{x} - \mathbf{m}_k) = \|\mathbf{x} - \mathbf{m}_k\|^2$$

The Gaussian Distribution (4)
So the ML decision is equivalent to the minimum Euclidean distance decision under the following conditions:
● $P(C_k)$ is assumed uniformly distributed
● The distribution of $\mathbf{x}$ is Gaussian and iid
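Under these two conditions the classifier reduces to a nearest-mean rule. The sketch below assumes the class means are already known; the function name `nearest_mean` and the example means are hypothetical.

```python
def nearest_mean(x, means):
    """Return the index k that minimizes ||x - m_k||^2."""
    def dist_sq(m):
        return sum((xi - mi) ** 2 for xi, mi in zip(x, m))
    return min(range(len(means)), key=lambda k: dist_sq(means[k]))


# Hypothetical class means in a 2-D feature space.
means = [(0.0, 0.0), (3.0, 3.0)]
first = nearest_mean((1.0, 1.0), means)   # closer to (0, 0)
second = nearest_mean((2.0, 2.5), means)  # closer to (3, 3)
print(first, second)
```

The square root is not needed because it does not change which distance is smallest.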

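Bayes Rule (revisit)
Posterior probabilities

$$P(C_k \mid \mathbf{x}) = \frac{p_X(\mathbf{x} \mid C_k)\, P(C_k)}{p_X(\mathbf{x})}$$

$$p_X(\mathbf{x}) = \sum_{k=1}^{M} p_X(\mathbf{x} \mid C_k)\, P(C_k), \qquad \sum_{k=1}^{M} P(C_k \mid \mathbf{x}) = 1$$

● $P(C_k \mid \mathbf{x})$: posterior
● $p_X(\mathbf{x} \mid C_k)$: likelihood
● $P(C_k)$: prior
● $p_X(\mathbf{x})$: normalization factor

FINITE MIXTURE MODEL
The general model:

$$p(\mathbf{x} \mid \Theta) = \sum_{m=1}^{k} \alpha_m\, p_m(\mathbf{x} \mid \theta_m)$$

When $k = 2$ (two components):

$$p = \alpha_1 p_1 + \alpha_2 p_2$$

Why is the Gaussian mixture so important?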
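A two-component mixture density can be evaluated as a weighted sum of component densities. The sketch below assumes one-dimensional Gaussian components; the function names, the mixing weights, and the component parameters are hypothetical values chosen for illustration.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of a 1-D Gaussian with the given mean and standard deviation."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def mixture_pdf(x, weights, components):
    """Weighted sum of component densities; the weights must sum to 1."""
    return sum(w * gaussian_pdf(x, mean, std)
               for w, (mean, std) in zip(weights, components))


# Hypothetical two-component mixture.
weights = [0.3, 0.7]
components = [(0.0, 1.0), (4.0, 1.0)]  # (mean, std) per component
density_at_zero = mixture_pdf(0.0, weights, components)

# Crude Riemann-sum check that the mixture integrates to about 1.
step = 0.01
total = sum(mixture_pdf(-10.0 + i * step, weights, components) * step
            for i in range(2400))
print(density_at_zero, total)
```

Because the weights are non-negative and sum to one, the mixture is itself a valid density, which the numerical check confirms approximately.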

LMM vs GMM (2 COMPONENTS)
T. Amin, M. Zeytinoglu and L. Guan, “Application of Laplacian mixture model for image and video retrieval,” IEEE Transactions on Multimedia, vol. 9, no. 7, pp. 1416-1429, November 2007.

Content-based Multimedia Processing
● Content-based (object-based) description
– Segmentation and recognition
● Content-based information retrieval
– Indexing and retrieval
– One popular ranking criterion: $(\mathbf{x} - \mathbf{m}_k)^T (\mathbf{x} - \mathbf{m}_k) = \|\mathbf{x} - \mathbf{m}_k\|^2$
– e.g. retrieve a song from the music database by humming a small piece of it
● Multimedia information fusion
– Multi-modality: multi-dimensional space
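The ranking criterion can be sketched as sorting database items by squared Euclidean distance to the query's feature vector. The feature vectors, item names, and the function `rank_by_distance` are hypothetical; how features are extracted from a hummed query is outside this sketch.

```python
def rank_by_distance(query, database):
    """Return item names ordered from nearest to farthest from the query."""
    def dist_sq(vec):
        return sum((q - v) ** 2 for q, v in zip(query, vec))
    return sorted(database, key=lambda name: dist_sq(database[name]))


# Hypothetical 2-D feature vectors for three songs.
database = {
    "song_a": (0.1, 0.9),
    "song_b": (0.8, 0.2),
    "song_c": (0.3, 0.6),
}
ranking = rank_by_distance((0.15, 0.8), database)
print(ranking)
```

The first entry of the ranking is the nearest-mean decision; the remaining entries give the ordered retrieval list.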
