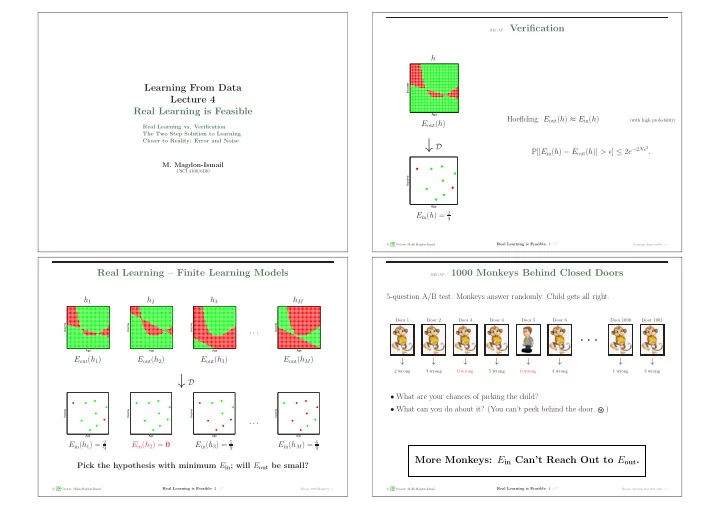

recap: Verification h Income Learning From Data Lecture 4 Real Learning is Feasible Age Hoeffding: E out ( h ) ≈ E in ( h ) (with high probability) E out ( h ) Real Learning vs. Verification The Two Step Solution to Learning ↓ D Closer to Reality: Error and Noise P [ | E in ( h ) − E out ( h ) | > ǫ ] ≤ 2 e − 2 Nǫ 2 . M. Magdon-Ismail CSCI 4100/6100 Income Age E in ( h ) = 2 9 � A M Real Learning is Feasible : 2 /17 c L Creator: Malik Magdon-Ismail Learning: finite model − → Real Learning – Finite Learning Models recap: 1000 Monkeys Behind Closed Doors 5-question A/B test. Monkeys answer randomly. Child gets all right. h 1 h 2 h 3 h M Door 1 Door 2 Door 3 Door 4 Door 5 Door 6 Door 1000 Door 1001 Income Income Income Income · · · · · · Age Age Age Age E out ( h 1 ) E out ( h 2 ) E out ( h 3 ) E out ( h M ) ↓ ↓ ↓ ↓ ↓ ↓ ↓ ↓ 2 wrong 3 wrong 0 wrong 5 wrong 0 wrong 4 wrong 1 wrong 3 wrong ↓ D • What are your chances of picking the child? • What can you do about it? (You can’t peek behind the door. ) Income Income Income Income · · · Age Age Age Age E in ( h 1 ) = 2 E in ( h 3 ) = 5 E in ( h M ) = 6 E in ( h 2 ) = 0 9 9 9 More Monkeys: E in Can’t Reach Out to E out . Pick the hypothesis with minimum E in ; will E out be small? � A c M Real Learning is Feasible : 3 /17 � A c M Real Learning is Feasible : 4 /17 L Creator: Malik Magdon-Ismail Recap: 1000 Monkeys − → L Creator: Malik Magdon-Ismail Recap: selection bias and coins − →

recap: Selection Bias Illustrated with Coins Hoeffding says that E in ( g ) ≈ E out ( g ) for Finite H Coin tossing example: P [ | E in ( g ) − E out ( g ) | > ǫ ] ≤ 2 |H| e − 2 ǫ 2 N , for any ǫ > 0 . • If we toss one coin and get no heads , its very surprising. P = 1 2 N P [ | E in ( g ) − E out ( g ) | ≤ ǫ ] ≥ 1 − 2 |H| e − 2 ǫ 2 N , for any ǫ > 0 . We expect it is biased: P [heads] ≈ 0. • Tossing 70 coins, and find one with no heads. Is it surprising? � 70 � 1 − 1 P = 1 − 2 N Do we expect P [heads] ≈ 0 for the selected coin? We don’t care how g was obtained, as long as it is from H Similar to the “birthday problem”: among 30 people, two will likely share the same birthday. • This is called selection bias . Some Basic Probability Proof: Let M = |H| . Selection bias is a very serious trap. For example medical screening. Events A, B The event “ | E in ( g ) − E out ( g ) | > ǫ ” implies Implication “ | E in ( h 1 ) − E out ( h 1 ) | > ǫ ” OR . . . OR “ | E in ( h M ) − E out ( h M ) | > ǫ ” If A = ⇒ B ( A ⊆ B ) then P [ A ] ≤ P [ B ]. So, by the implication and union bounds: Union Bound � M � P [ A or B ] = P [ A ∪ B ] ≤ P [ A ] + P [ B ]. P [ | E in ( g ) − E out ( g ) | > ǫ ] ≤ P OR m =1 | E in ( h M ) − E out ( h M ) | > ǫ Bayes’ Rule Search Causes Selection Bias M P [ A | B ] = P [ B | A ] · P [ A ] � ≤ P [ | E in ( h m ) − E out ( h m ) | > ǫ ] , P [ B ] m =1 2 Me − 2 ǫ 2 N . ≤ (The last inequality is because we can apply the Hoeffding bound to each summand) � A M Real Learning is Feasible : 5 /17 � A M Real Learning is Feasible : 6 /17 c L Creator: Malik Magdon-Ismail Hoeffding: finite model − → c L Creator: Malik Magdon-Ismail Hoeffding as error bar − → Interpreting the Hoeffding Bound for Finite |H| E in Reaches Outside to E out when |H| is Small P [ | E in ( g ) − E out ( g ) | > ǫ ] ≤ 2 |H| e − 2 ǫ 2 N , for any ǫ > 0 . � 2 N log 2 |H| 1 E out ( g ) ≤ E in ( g ) + δ . P [ | E in ( g ) − E out ( g ) | ≤ ǫ ] ≥ 1 − 2 |H| e − 2 ǫ 2 N , for any ǫ > 0 . If N ≫ ln |H| , then E out ( g ) ≈ E in ( g ). Theorem. With probability at least 1 − δ , Proof: Let δ = 2 |H| e − 2 ǫ 2 N . Then • Does not depend on X , P ( x ) , f or how g is found. � P [ | E in ( g ) − E out ( g ) | ≤ ǫ ] ≥ 1 − δ. 2 N log 2 |H| 1 • Only requires P ( x ) to generate the data points independently and also the test point. E out ( g ) ≤ E in ( g ) + δ . In words, with probability at least 1 − δ , | E in ( g ) − E out ( g ) | ≤ ǫ. This implies E out ( g ) ≤ E in ( g ) + ǫ. We don’t care how g was obtained, as long as g ∈ H From the definition of δ , solve for ǫ : What about E out ≈ 0 ? � 2 N log 2 |H| 1 ǫ = . δ � A c M Real Learning is Feasible : 7 /17 � A c M Real Learning is Feasible : 8 /17 L Creator: Malik Magdon-Ismail E in is close to E out for small H − → L Creator: Malik Magdon-Ismail 2 step approach − →

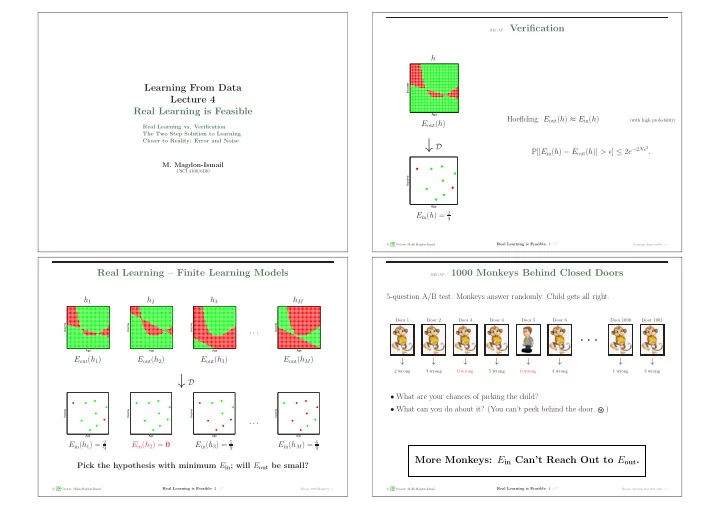

The 2 Step Approach to Getting E out ≈ 0 : Feasibility of Learning (Finite Models) (1) E out ( g ) ≈ E in ( g ). (2) E in ( g ) ≈ 0. • No Free Lunch: can’t know anything outside D , for sure . Together, these ensure E out ≈ 0. • Can “learn” with high probability if D is i.i.d. from P ( x ). E out ≈ E in ( E in can reach outside the data set to E out ). How to verify (1) since we do not know E out – must ensure it theoretically - Hoeffding. • We want E out ≈ 0 . We can ensure (2) (for example PLA) – modulo that we can guarantee (1) • The two step solution. We trade E out ≈ 0 for 2 goals: (i) E out ≈ E in ; out-of-sample error (ii) E in ≈ 0. We know E in , not E out , but we can ensure ( i ) if |H| is small. There is a tradeoff: model complexity Error This is a big step! • Small |H| = ⇒ E in ≈ E out � 2 N log 2 |H| 1 δ • Large |H| = ⇒ E in ≈ 0 is more likely. • What about infinite H - the perceptron? in-sample error |H| |H| ∗ � A M Real Learning is Feasible : 9 /17 � A M Real Learning is Feasible : 10 /17 c L Creator: Malik Magdon-Ismail Summary: feasibility of learning − → c L Creator: Malik Magdon-Ismail Complex f are harder to learn − → “Complex” Target Functions are Harder to Learn Revising the Learning Problem – Adding in Probability UNKNOWN TARGET FUNCTION f : X �→ Y UNKNOWN y n = f ( x n ) INPUT DISTRIBUTION What happened to the “difficulty” (complexity) of f ? P ( x ) TRAINING EXAMPLES x 1 , x 2 , . . . , x N ( x 1 , y 1 ) , ( x 2 , y 2 ) , . . . , ( x N , y N ) x g ( x ) ≈ f ( x ) FINAL LEARNING HYPOTHESIS ALGORITHM • Simple f = ⇒ can use small H to get E in ≈ 0 (need smaller N ). g A • Complex f = ⇒ need large H to get E in ≈ 0 (need larger N ). HYPOTHESIS SET H � A c M Real Learning is Feasible : 11 /17 � A c M Real Learning is Feasible : 12 /17 L Creator: Malik Magdon-Ismail Learning setup with probability − → L Creator: Malik Magdon-Ismail Error and Noise − →

Error and Noise Finger Print Recognition Two types of error. +1 you f f +1 − 1 − 1 intruder h +1 no error false accept − 1 false reject no error Error Measure: How to quantify that h ≈ f . In any application you need to think about how to penalize each type of error. Noise: y n � = f ( x n ). f f Take Away +1 − 1 +1 − 1 Error measure is specified by the user. h +1 0 1 h +1 0 1000 − 1 10 0 − 1 1 0 If not, choose one that is – plausible (conceptually appealing) – friendly (practically appealing) Supermarket CIA � A M Real Learning is Feasible : 13 /17 � A M Real Learning is Feasible : 14 /17 c L Creator: Malik Magdon-Ismail Finger print example − → c L Creator: Malik Magdon-Ismail Pointwise errors − → Almost All Error Mearures are Pointwise Noisy Targets Compare h and f on individual points x using a pointwise error e ( h ( x ) , f ( x )): age 32 years gender male Binary error: e ( h ( x ) , f ( x )) = � h ( x ) � = f ( x ) � (classification) salary 40,000 debt 26,000 Consider two customers with the same credit data. years in job 1 year e ( h ( x ) , f ( x )) = ( h ( x ) − f ( x )) 2 Squared error: (regression) They can have different behaviors. years at home 3 years . . . . . . Approve for credit? In-sample error: N E in ( h ) = 1 � e ( h ( x n ) , f ( x n )) . N The target ‘function’ is not a deterministic function but a stochastic function. n =1 Out-of-sample error: ‘ f ( x )’ = P ( y | x ) E out ( h ) = E x [ e ( h ( x ) , f ( x ))] . � A c M Real Learning is Feasible : 15 /17 � A c M Real Learning is Feasible : 16 /17 L Creator: Malik Magdon-Ismail Noisy targets − → L Creator: Malik Magdon-Ismail Learning setup with error and noise − →

Learning Setup with Error Measure and Noisy Targets UNKNOWN TARGET DISTRIBUTION (target function f plus noise) P ( y | x ) UNKNOWN y n ∼ P ( y | x n ) INPUT DISTRIBUTION P ( x ) TRAINING EXAMPLES ( x 1 , y 1 ) , ( x 2 , y 2 ) , . . . , ( x N , y N ) x 1 , x 2 , . . . , x N x ERROR MEASURE g ( x ) ≈ f ( x ) FINAL LEARNING HYPOTHESIS ALGORITHM g A HYPOTHESIS SET H � A M Real Learning is Feasible : 17 /17 c L Creator: Malik Magdon-Ismail

Recommend

More recommend