Results 8.1-8.3: Sample principal components (cf. sections 8.3-8.5) Assume that Σ have eigenvalue-eigenvector pairs We start out by recapitulating the results for the population λ e λ e λ e λ ≥ λ ≥ ≥ λ > ⋯ principal components … 0 ( , ), ( , ), , ( , ) where 1 2 p 1 1 2 2 p p We then consider a p -variate random vector X (not necessarily The i- th principal component is given by multivariate normal) with covariance matrix Σ e X ′ = = + + + = ⋯ … Y e X e X e X ( i 1,2, , ) p a X ′ i i i 1 1 i 2 2 ip p First principal component: The linear combination that 1 ′ ′ ′ ′ ′ ′ a X a X = = a Σ a a Σ a a a a a = = = = λ λ Var( Var( ) ) 1 1 maximizes subject to and we have that Var( ) Y 1 1 1 1 1 i i a X ′ p p p p Second principal component: The linear combination ∑ ∑ ∑ ∑ = σ = λ = 2 Further Var( X ) Var( ) Y ′ ′ a a ′ a X = a Σ a = i ii i i Var( ) 1 that maximizes subject to and = = = = 2 2 2 2 2 i 1 i 1 i 1 i 1 ′ ′ ′ a X a X = a Σ a = Cov( , ) 0 1 2 1 2 λ e = ik i and corr( , Y X ) ETC. i k σ kk 1 2 We consider the observations x 1 , x 2 , .... , x n as a random We then turn to the situation where we want to summarize sample from a population with mean vector � , covariance the variation in n observations of p variables in a few linear matrix Σ, and correlation matrix ρ combinations We may then estimate the population quantities by the x As one illustration we will corresponding sample quantities , S , and R look at the measurements of length, width and height The linear combinations of the carapace (shell) of of the carapace (shell) of ′ a x = + + + = ⋯ a x a x a x j 1,2,...., n painted turtles 1 j 11 j 1 12 j 2 1 p jp a Sa ′ a x ′ have sample mean and sample variance 1 1 1 (cf. section 3.6) a x ′ a x ′ ( , ) Also the pairs for two linear combinations 1 j 2 j a Sa ′ have sample covariance 1 2 3 4

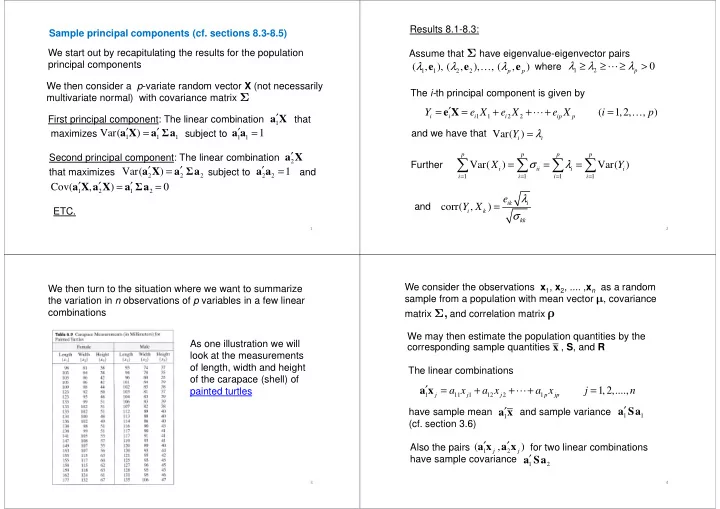

Result: The sample principal components are the linear Assume that S have eigenvalue-eigenvector pairs combinations which have maximum sample variance and e e e λ ≥ λ ≥ ≥ λ > λ ˆ λ ˆ λ ˆ ˆ ˆ ⋯ ˆ … ˆ ˆ ˆ where 0 ( , ), ( , ), , ( , ) 1 2 p 1 1 2 2 p p sample covariance zero: The i- th sample principal component is given by First sample principal component: ′ = e x = + + + = ⋯ … a x ′ y ˆ ˆ e x ˆ e x ˆ e x ˆ ( i 1,2, , ) p The linear combination that maximizes the i i i 1 1 i 2 2 ip p 1 j ′ a x a a ′ = 1 sample variance of subject to sample variance of subject to = = λ λ ˆ ˆ 1 1 j j 1 1 1 1 also and the sample covariance also and the sample covariance sample variance( sample variance( y y ˆ ˆ ) ) i i ≠ ˆ ˆ of the pairs is zero ( y , y ), i k , Second principal component: i k ′ a x The linear combination that maximizes the p p 2 j ∑ ∑ = = λ ˆ a x ′ a a ′ = Further total sample variance s 1 sample variance of subject to ii i 2 2 2 j a x ′ a x ′ = = i 1 i 1 and zero sample covariance for the pairs ( , ) 1 j 2 j λ ˆ ˆ e = ik i sample correlation( y x ˆ , ) and ETC. i k s kk 5 5 6 Example 8.4 x x The observations are often “centered” by subtracting Measurements of length, width and height of the j carapace (shell) of male painted turtles This has no effect on S , and hence no effect on the eigenvalues/eigenvectors The “centered” principal components become e x e x ′ ′ x x = = − − = = … … ˆ ˆ ˆ ˆ y y ( ( ) ) ( ( i i 1,2, 1,2, , ) , ) p p i i i i The values of the i- th “centered” principal component are ′ = e x − x = … ˆ y ˆ ( ) ( j 1,2, , ) n ji i j Note that n n 1 1 ˆ ∑ ∑ ′ = = e x − x = y ˆ y ˆ ( ) 0 ji ji j n n = = j 1 j 1 7 8

Example 8.4 Socioeconomic variables in census tracts for the Observations that are on different scales are often Madison, Wisconsin, area standardized: ′ − − − x x x x x x z = = j 1 1 j 2 2 … jp p … , , , ( j 1,2, , ) n j s s s 11 22 pp Note that the standardized observations are “centered” and that their covariance matrix is the correlation matrix R 9 10 Result for standardized observations: Example 8.5 Assume that R have eigenvalue-eigenvector pairs Weekly rates of return for five stocks on New York e e e λ ≥ λ ≥ ≥ λ > Stock Exchange Jan 2004 through Dec 2005 λ ˆ λ ˆ λ ˆ ˆ ˆ ⋯ ˆ … where ˆ ˆ ˆ 0 ( , ), ( , ), , ( , ) 1 2 p 1 1 2 2 p p The i- th sample principal component is given by ′ = e z = + + + = ⋯ … ˆ y ˆ e z ˆ e z ˆ e z ˆ ( i 1,2, , ) p i i i 1 1 i 2 2 ip p = = λ λ ˆ ˆ also and the sample covariance also and the sample covariance sample variance( sample variance( y y ˆ ˆ ) ) i i ≠ ˆ ˆ of the pairs is zero ( y , y ), i k , i k p = ∑ = λ ˆ Further total sample variance p i = i 1 = λ ˆ ˆ ˆ and sample correlation( y z , ) e i k ik i 11 12

Assume now that the observations x 1 , x 2 , .... , x n Plots of principal components may help to detect suspect observations and provide check for independent realizations from a multivariate normal distribution with mean vector � , covariance matrix Σ multivariate normality The principal components are linear combinations of Then the sample principal components the original variables, so they should be ′ = e x − x = = … … y ˆ ˆ ( ) ( i 1,2, , ; p j 1,2, , ) n (approximately) independent and normally distributed ji i j if the original observations are multivariate normal are realizations of the population principal components This may be checked by making normal probability e X ′ = − = � … Y ( ) ( i 1,2, , ) p i i plots and scatter plots of the observed values of the principal components Also these population principal components are independent and normally distributed with means zero and variances λ given by the eigenvalues of the covariance matrix Σ i 13 14 λ e ˆ ' i s ˆ ' i s What are the properties of the and the considered λ e i s ' i s ' as estimators of the and the ? Assume now that our observations are independent realizations from a multivariate normal distribution one may prove the following results for large samples (i.e. large n ) The sample eigenvalues are approximately independent and λ λ ˆ ˆ λ λ λ λ ∼ ∼ 2 2 N N ( ,2 ( ,2 / ) approximately / ) approximately n n i i i e e E ∼ ˆ N ( , / ) approximately n where i p i i λ p ∑ ′ E = λ e e k λ − λ i i k k 2 1 ( ) = k k i ≠ k ì 15

Recommend

More recommend