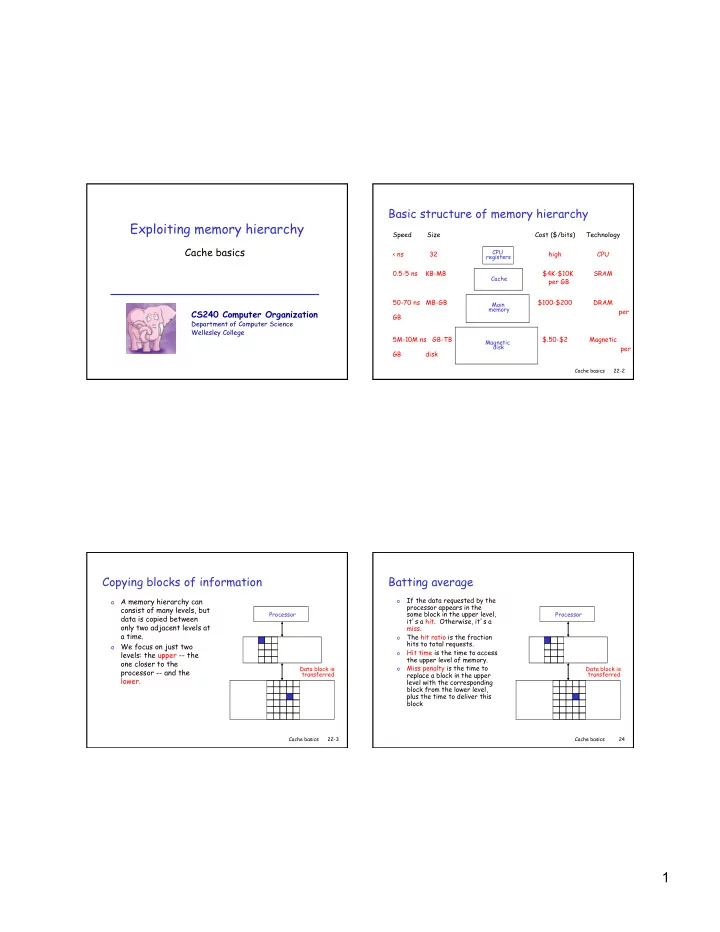

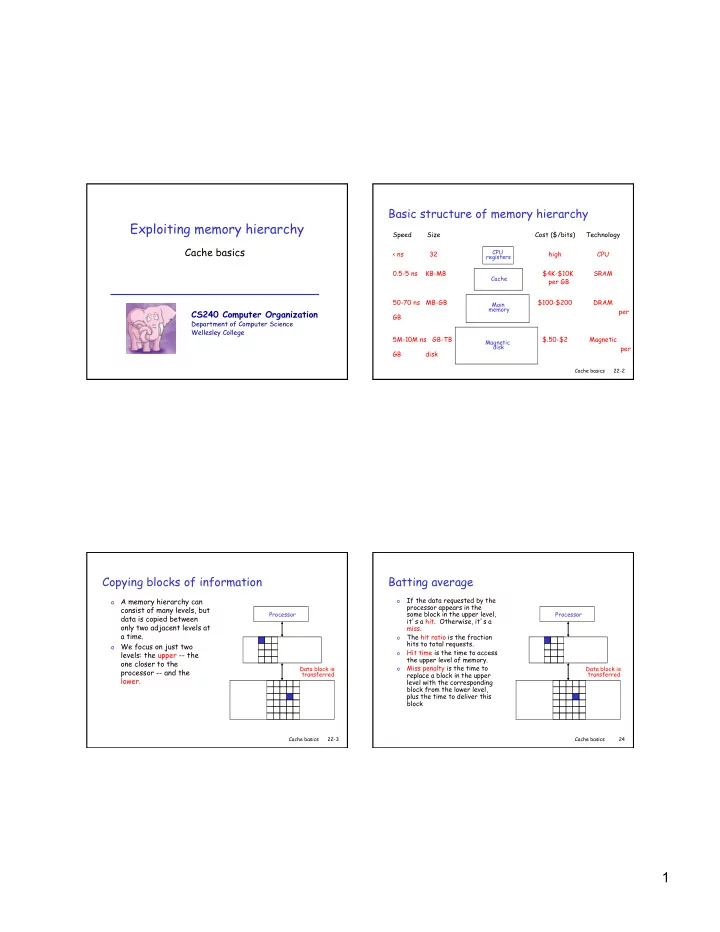

Cache basics

CS240 Computer Organization
Department of Computer Science
Wellesley College

Exploiting memory hierarchy

Basic structure of memory hierarchy (the CPU is at the top; each level below it is larger, slower, and cheaper):

| Technology | Speed | Size | Cost (per GB) |
|---|---|---|---|
| CPU registers | < 1 ns | 32 registers | high |
| SRAM (cache) | 0.5-5 ns | KB-MB | $4K-$10K |
| DRAM (main memory) | 50-70 ns | MB-GB | $100-$200 |
| Magnetic disk | 5M-10M ns | GB-TB | $0.50-$2 |

Copying blocks of information

- A memory hierarchy can consist of many levels, but data is copied between only two adjacent levels at a time.
- We focus on just two levels: the upper one (the one closer to the processor) and the lower one. Data is transferred between them in blocks.

Batting average

- If the data requested by the processor appears in some block in the upper level, it's a hit. Otherwise, it's a miss.
- The hit ratio is the fraction of hits to total requests.
- Hit time is the time to access the upper level of memory.
- Miss penalty is the time to replace a block in the upper level with the corresponding block from the lower level, plus the time to deliver this block to the processor.
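As a small illustration of these definitions, the sketch below computes the hit ratio from hit and miss counts and then combines hit time and miss penalty into an average access time. The combining formula (hit time + miss ratio × miss penalty) is a common textbook estimate but does not appear on these slides, so treat it as an assumption; the function names and the sample numbers are hypothetical.

```python
def hit_ratio(hits: int, misses: int) -> float:
    """Fraction of requests that were found in the upper level."""
    total = hits + misses
    if total == 0:
        raise ValueError("no requests recorded")
    return hits / total


def average_access_time(hit_time: float, miss_ratio: float, miss_penalty: float) -> float:
    """Assumed model: every access pays the hit time; the fraction that
    misses also pays the miss penalty."""
    return hit_time + miss_ratio * miss_penalty


# Hypothetical numbers: 95 hits, 5 misses, 1 ns hit time, 60 ns miss penalty.
ratio = hit_ratio(95, 5)
print(ratio)  # 0.95
print(average_access_time(hit_time=1.0, miss_ratio=1 - ratio, miss_penalty=60.0))
```

Even a small miss ratio matters here: with these made-up numbers, 5% misses make the average access about four times longer than a hit.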

Direct-mapped cache

[Figure: an 8-block direct-mapped cache (indices 000 to 111) and memory. Memory addresses 00001, 00101, 01001, 01101, 10001, 10101, 11001, and 11101 map to cache blocks 001 and 101 according to their three low-order bits.]

Tags and validation stickers

[Figure: a 1024-entry direct-mapped cache. The 32-bit address is split into a 20-bit tag (bits 31-12), a 10-bit index (bits 11-2), and a 2-bit byte offset (bits 1-0). Each entry (index 0 to 1023) holds a valid bit, a 20-bit tag, and 32 bits of data; the stored tag is compared with the address tag, and a hit requires a match with the valid bit set.]

*MIPS words are aligned to multiples of 4 bytes, so the least significant 2 bits of every address are ignored.

Miss rate versus block size

- Data are moved between memory units in blocks of words.
- Larger blocks exploit spatial locality to lower the miss rate.
- However, the miss rate may increase if the block size becomes a significant fraction of the cache size. Why?
- Increased block size also increases the miss penalty.

[Figure: miss rate (0% to 40%) versus block size (4, 16, 64, 256 bytes) for cache sizes of 1 KB, 8 KB, 16 KB, 64 KB, and 256 KB.]

Handling cache misses

1. Send the original PC value (current PC - 4) to the memory.
2. Instruct main memory to perform a read and wait for the memory to complete its access.
3. Write the cache entry, putting the data from memory in the data portion, writing the upper bits of the address into the tag field, and turning the valid bit on.
4. Restart the instruction execution at the first step, which will refetch the instruction.
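The address split in the 1024-entry cache can be expressed with shifts and masks. This is a minimal sketch, assuming 32-bit byte addresses and the 20/10/2-bit layout described above; the function names and the example address are hypothetical. It also fills an entry the way step 3 of miss handling describes (set the tag, turn the valid bit on) and checks the 8-block mapping from the first figure.

```python
def split_address(addr: int, index_bits: int = 10, offset_bits: int = 2) -> tuple[int, int, int]:
    """Split a 32-bit byte address into (tag, index, byte offset).

    The defaults match the 1024-entry cache: 20-bit tag, 10-bit index,
    2-bit byte offset.
    """
    offset = addr & ((1 << offset_bits) - 1)                 # low bits: byte within the word
    index = (addr >> offset_bits) & ((1 << index_bits) - 1)  # next bits: which cache entry
    tag = addr >> (offset_bits + index_bits)                 # remaining upper bits: the tag
    return tag, index, offset


def is_hit(valid: list[bool], tags: list[int], addr: int) -> bool:
    """A hit needs the selected entry to be valid and its stored tag to match."""
    tag, index, _ = split_address(addr)
    return valid[index] and tags[index] == tag


def block_index(block_addr: int, num_blocks: int = 8) -> int:
    """Direct mapping for the 8-block figure: keep the low-order 3 bits."""
    return block_addr % num_blocks


valid = [False] * 1024   # cache starts with every entry invalid
tags = [0] * 1024
addr = 0x12345678        # hypothetical address
print(split_address(addr))        # (74565, 414, 0), i.e. tag 0x12345, index 414
print(is_hit(valid, tags, addr))  # False: the cache starts empty
tag, index, _ = split_address(addr)
valid[index], tags[index] = True, tag  # fill the entry as on a miss
print(is_hit(valid, tags, addr))  # True
print([block_index(a) for a in (0b00001, 0b00101, 0b01001, 0b11101)])  # [1, 5, 1, 5]
```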

What's wrong with this picture?

[Figure: the processor executes a store instruction that writes data into the cache.]

Write-through

A store instruction writes data into the cache and memory at the same time.

Write buffer

A store instruction writes data into the cache . . . and into a write buffer . . . where it can cool its heels until it is written to main memory.

Write-back

A store instruction writes data into the cache ONLY. The modified block is written into main memory only when it is replaced.
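To compare the two policies, the sketch below counts how many main-memory writes each one performs for the same sequence of stores. It assumes a tiny direct-mapped cache of one-word blocks, write-allocate on a store miss, and a per-entry "dirty" flag to remember which blocks were modified; none of these details come from the slides, and the function name is hypothetical. The write buffer is not modeled: it changes when write-through stores reach memory, not how many there are.

```python
def count_memory_writes(stores: list[int], num_blocks: int, policy: str) -> int:
    """Count writes to main memory for a sequence of store word addresses.

    policy is "write-through" or "write-back". Assumes write-allocate:
    a store miss first brings the block into the cache.
    """
    if policy not in ("write-through", "write-back"):
        raise ValueError("unknown policy")
    tags = [None] * num_blocks     # block held by each entry (None = invalid)
    dirty = [False] * num_blocks   # write-back only: modified since it was loaded
    writes = 0
    for addr in stores:
        index, tag = addr % num_blocks, addr // num_blocks
        if tags[index] != tag:                        # miss: this entry gets replaced
            if policy == "write-back" and dirty[index]:
                writes += 1                           # modified block written when replaced
            tags[index], dirty[index] = tag, False
        if policy == "write-through":
            writes += 1                               # cache and memory updated together
        else:
            dirty[index] = True                       # memory updated later, on replacement
    # Blocks still dirty at the end have not been written back yet.
    return writes


stores = [0, 0, 0, 0, 4]   # four stores to address 0, then one that evicts it
print(count_memory_writes(stores, 4, "write-through"))  # 5
print(count_memory_writes(stores, 4, "write-back"))     # 1
```

Repeated stores to the same block are where write-back saves traffic: memory sees one write when the block is evicted instead of one per store.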

An example: Intrinsity FastMATH

- MIPS architecture with a 12-stage pipeline and a simple cache implementation.
- To pipeline without stalling, separate instruction and data caches are used.
- Each cache is 16 KB, or 4K words, with 16-word blocks.

[Figure: 256-block cache with 16 words per block. The 32-bit address is split into an 18-bit tag (bits 31-14), an 8-bit index (bits 13-6), a 4-bit block offset (bits 5-2), and a 2-bit byte offset (bits 1-0). Each of the 256 entries holds a valid bit, an 18-bit tag, and 512 bits of data; on a hit, a multiplexor driven by the block offset selects one 32-bit word out of the 16.]

Approximate instruction and data misses for SPEC2000 benchmarks

| Instruction miss rate | Data miss rate | Effective combined miss rate |
|---|---|---|
| 0.4% | 11.4% | 3.2% |

*This isn't the whole story; the ultimate measure is the effect of memory on program execution time. More soon . . .

Increasing physical or logical memory width

[Figure: three CPU, cache, bus, and memory organizations: a. one-word-wide memory organization; b. wide memory organization, with a multiplexor; c. interleaved memory organization, with four memory banks (bank 0 to bank 3).]

*Assume a four-word cache block; 1 bus cycle to send the address; 15 bus cycles for each DRAM access initiated; 1 bus cycle to send a word.
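Under the stated timing assumptions, the miss penalty of each memory organization can be worked out directly. This sketch additionally assumes that the wide organization's memory and bus are as wide as the whole four-word block, and that in the interleaved organization the four banks start their DRAM accesses at the same time while the words still cross a one-word bus one at a time. The constant and function names are hypothetical.

```python
BLOCK_WORDS = 4    # four-word cache block
SEND_ADDRESS = 1   # bus cycles to send the address
DRAM_ACCESS = 15   # bus cycles for each DRAM access initiated
SEND_WORD = 1      # bus cycles to send one bus-width transfer


def one_word_wide() -> int:
    # Each of the four words needs its own DRAM access and its own transfer.
    return SEND_ADDRESS + BLOCK_WORDS * DRAM_ACCESS + BLOCK_WORDS * SEND_WORD


def wide() -> int:
    # Assumption: a four-word-wide memory and bus fetch and send the block at once.
    return SEND_ADDRESS + DRAM_ACCESS + SEND_WORD


def interleaved() -> int:
    # Assumption: one word per bank, all banks accessed in parallel,
    # then the words are sent one at a time over the one-word bus.
    return SEND_ADDRESS + DRAM_ACCESS + BLOCK_WORDS * SEND_WORD


for name, penalty in [("one-word-wide", one_word_wide()),
                      ("wide", wide()),
                      ("interleaved", interleaved())]:
    print(name, penalty)   # 65, 17, and 20 bus cycles respectively
```

Interleaving gets most of the benefit of a wide memory (20 versus 17 cycles) while keeping a one-word bus, because the expensive part, the DRAM access, is overlapped across banks.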

Improving cache

Memory references 0 1 2 3 4 3 4 15

*Start with an empty cache; all blocks are initially marked as not valid.

[Figure: a four-block direct-mapped cache. References 0, 1, 2, and 3 miss and fill blocks 0 to 3 with Mem(0) to Mem(3) (tag 00). Reference 4 misses and replaces Mem(0) with Mem(4) (tag 01). References 3 and 4 hit. Reference 15 misses and replaces Mem(3) with Mem(15) (tag 11).]

Memory references 0 4 0 4 0 4 0 4

*Start with an empty cache; all blocks are initially marked as not valid.

[Figure: every reference misses. Addresses 0 and 4 both map to block 0, so each reference evicts the other (tags 00 and 01 alternate).]

We seek to decrease miss rate, while not increasing hit time

- We may be able to reduce cache misses by more flexible placement of blocks.
- In a direct-mapped cache, a block can go in exactly one place.
- That makes it easy to find, but . . .

Fully associative

- At the other extreme is a scheme where a block can be placed anywhere in the cache.
- But then how do we find it?
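The two reference traces above can be replayed in a few lines. This sketch assumes, as the figures do, a four-block direct-mapped cache with one-word blocks, addressed by word number, starting with every entry invalid; the function name is hypothetical.

```python
def simulate_direct_mapped(refs: list[int], num_blocks: int = 4) -> list[str]:
    """Return 'hit' or 'miss' for each word address in refs.

    Each address maps to index = address mod num_blocks and is identified
    within that entry by tag = address // num_blocks.
    """
    valid = [False] * num_blocks   # empty cache: all blocks not valid
    tags = [0] * num_blocks
    outcome = []
    for addr in refs:
        index, tag = addr % num_blocks, addr // num_blocks
        if valid[index] and tags[index] == tag:
            outcome.append("hit")
        else:
            outcome.append("miss")
            valid[index], tags[index] = True, tag  # replace whatever was there
    return outcome


print(simulate_direct_mapped([0, 1, 2, 3, 4, 3, 4, 15]))
# ['miss', 'miss', 'miss', 'miss', 'miss', 'hit', 'hit', 'miss']
print(simulate_direct_mapped([0, 4, 0, 4, 0, 4, 0, 4]).count("miss"))  # 8
```

The second trace misses every time even though only two distinct blocks are used, which is exactly the conflict that more flexible placement is meant to remove.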

Middle ground: n-way set-associative cache

[Figure: an eight-block cache organized four ways. One-way set associative (direct mapped): blocks 0 to 7, each with one tag and data field. Two-way set associative: sets 0 to 3, each with two tag/data pairs. Four-way set associative: sets 0 and 1, each with four tag/data pairs. Eight-way set associative (fully associative): a single set with eight tag/data pairs.]

*The set containing a memory block is given by (Block number) modulo (Number of sets in the cache).

Memory references 0 4 0 4 0 4 0 4

*Start with an empty cache; all blocks are initially marked as not valid.

[Figure: a two-way set-associative cache with two sets. References 0 and 4 miss and load Mem(0) and Mem(4) into the two ways of set 0; the remaining six references all hit.]

Performance of set-associative caches

[Figure: miss rate (0% to 15%) versus associativity (one-way, two-way, four-way, eight-way) for cache sizes from 1 KB to 128 KB.]

Implementing a 4-way set-associative cache

[Figure: a 256-set, four-way set-associative cache. The 32-bit address is split into a 22-bit tag (bits 31-10), an 8-bit index (bits 9-2), and a 2-bit byte offset (bits 1-0). Each set (index 0 to 255) holds four valid/tag/data entries; four comparators check the tags in parallel, and a 4-to-1 multiplexor selects the 32-bit data word from the way that hits.]
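A set-associative cache can be simulated by choosing the set with (block number) modulo (number of sets) and then searching every way in that set. In this sketch the replacement policy in a full set is least recently used (LRU); the slides introduce LRU separately, so its use here is an assumption, and it does not affect the 0 4 0 4 trace because both blocks fit in one set. Storing the whole block number stands in for the tag comparison; the function name is hypothetical.

```python
def simulate_set_associative(refs: list[int], num_blocks: int = 4, ways: int = 2) -> list[str]:
    """Return 'hit' or 'miss' for each block address in refs.

    set = block number mod number of sets; within a set a block may occupy
    any way. On a miss in a full set, the least recently used block is evicted.
    """
    num_sets = num_blocks // ways
    sets = [[] for _ in range(num_sets)]   # each list is ordered LRU -> MRU
    outcome = []
    for block in refs:
        s = sets[block % num_sets]
        if block in s:                     # compare against every way in the set
            outcome.append("hit")
            s.remove(block)                # re-inserted below as most recently used
        else:
            outcome.append("miss")
            if len(s) == ways:
                s.pop(0)                   # evict the least recently used block
        s.append(block)
    return outcome


trace = [0, 4, 0, 4, 0, 4, 0, 4]
for ways in (1, 2, 4):                     # 1 way = direct mapped, 4 ways = fully associative here
    print(ways, simulate_set_associative(trace, num_blocks=4, ways=ways).count("miss"))
```

With the same four blocks of storage, going from one way to two cuts this trace from eight misses to two.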

Choosing which block to replace

- When a miss occurs in a direct-mapped cache, the requested block can only go in one place.
- In an associative cache, we have a choice.
- The most commonly used scheme is least recently used (LRU).

Reducing miss penalty using multiple caches

- A two-level cache allows the primary cache to focus on minimizing the hit time to yield a shorter clock cycle,
- . . . while the secondary cache focuses on miss rate to reduce the penalty of long memory access times.

Balancing cache accounts

- Suppose we have a 5 GHz processor with a base CPI of 1.0, assuming all references hit in the primary cache.
- Assume a main memory access time of 100 ns, including all miss handling.
- Suppose the miss rate per instruction at the primary cache is 2%.
- How much faster would the processor be if we add a secondary cache with a 5 ns access time that is large enough to reduce the miss rate to main memory to 0.5%?

If only things were that simple

[Figure: two panels plotted against size (K items to sort), from 4 to 4096: theoretical behavior of Radix sort vs. Quicksort, and observed behavior of Radix sort vs. Quicksort.]
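The "Balancing cache accounts" question can be answered by converting each access time into clock cycles and adding the stall cycles per instruction to the base CPI. This sketch assumes that the 0.5% of instructions that still go to main memory pay the main-memory penalty in addition to the secondary-cache access, and that both miss rates are per instruction; the variable names are hypothetical.

```python
CLOCK_GHZ = 5.0               # 5 GHz = 5 cycles per ns
BASE_CPI = 1.0                # all references hit in the primary cache
MAIN_MEMORY_NS = 100.0        # includes all miss handling
L2_NS = 5.0                   # secondary-cache access time
L1_MISSES_PER_INSTR = 0.02    # primary-cache misses per instruction
L2_MISSES_PER_INSTR = 0.005   # misses that still go to main memory

# Convert access times to clock cycles: time (ns) x cycles per ns.
main_penalty = MAIN_MEMORY_NS * CLOCK_GHZ   # 500 cycles
l2_penalty = L2_NS * CLOCK_GHZ              # 25 cycles

# Without a secondary cache, every primary miss pays the main-memory penalty.
cpi_one_level = BASE_CPI + L1_MISSES_PER_INSTR * main_penalty
# With one, every primary miss pays the L2 access; only 0.5% also pay main memory.
cpi_two_level = (BASE_CPI
                 + L1_MISSES_PER_INSTR * l2_penalty
                 + L2_MISSES_PER_INSTR * main_penalty)

speedup = cpi_one_level / cpi_two_level
print(f"CPI without L2: {cpi_one_level:.1f}")
print(f"CPI with L2:    {cpi_two_level:.1f}")
print(f"Speedup:        {speedup:.2f}")
```

With these numbers the CPI drops from 1.0 + 0.02 × 500 = 11.0 to 1.0 + 0.02 × 25 + 0.005 × 500 = 4.0, so the processor with the secondary cache is about 2.75 times faster.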

The real scoop

- Memory system performance is often a critical factor.

[Figure: Radix sort vs. Quicksort plotted against size (K items to sort), from 4 to 4096.]

- Multilevel caches and pipelined processors make it harder to predict outcomes.
