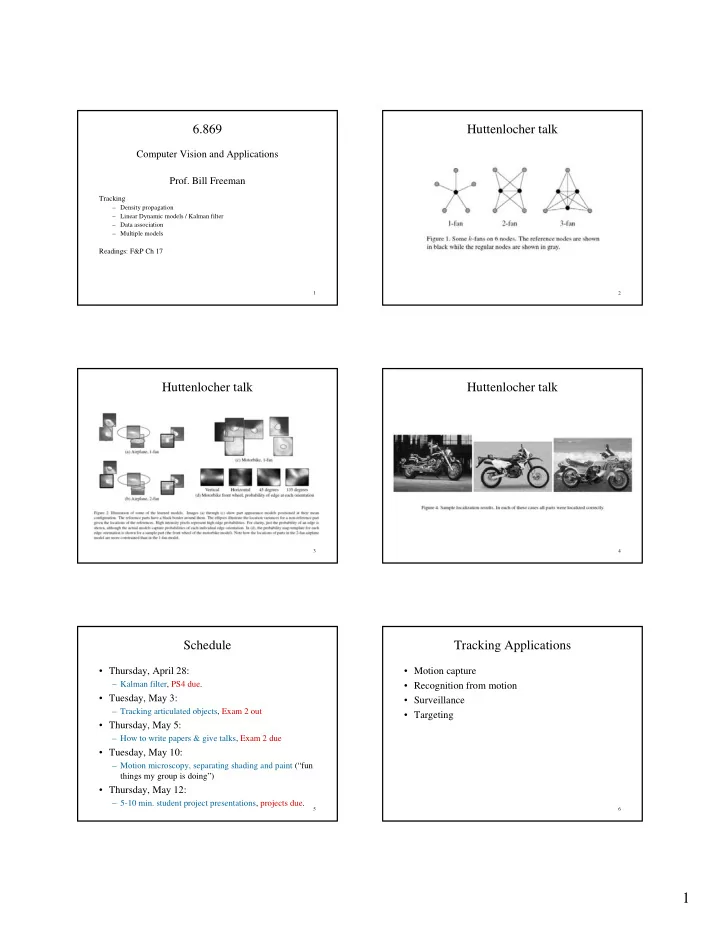

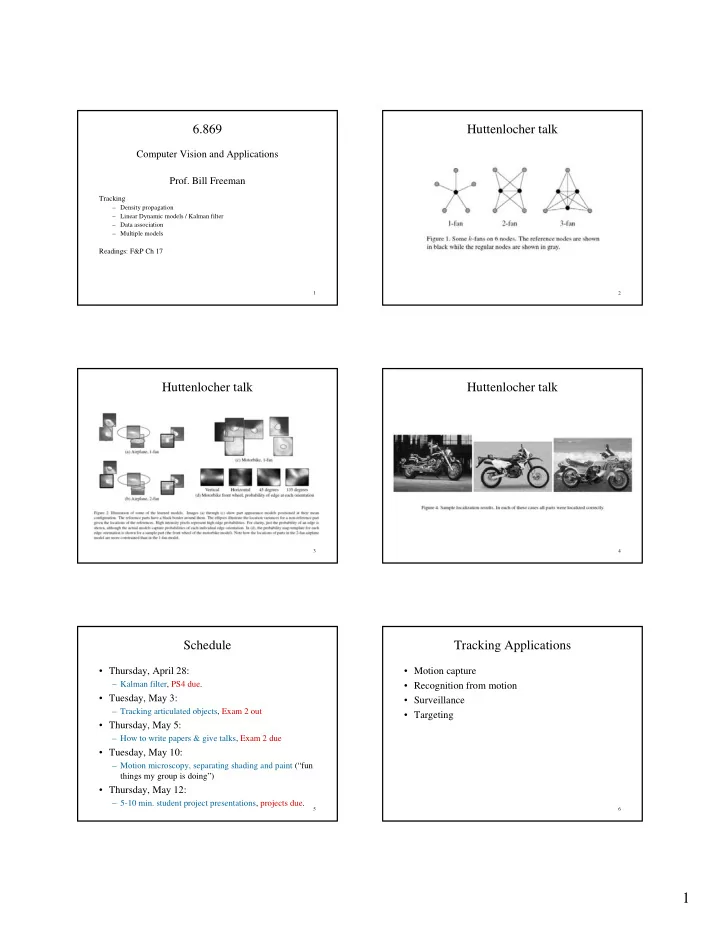

6.869 Computer Vision and Applications
Prof. Bill Freeman

Tracking
– Density propagation
– Linear Dynamic models / Kalman filter
– Data association
– Multiple models

Readings: F&P Ch 17

Huttenlocher talk

Schedule
• Thursday, April 28:
– Kalman filter, PS4 due.
• Tuesday, May 3:
– Tracking articulated objects, Exam 2 out
• Thursday, May 5:
– How to write papers & give talks, Exam 2 due
• Tuesday, May 10:
– Motion microscopy, separating shading and paint (“fun things my group is doing”)
• Thursday, May 12:
– 5-10 min. student project presentations, projects due.

Tracking Applications
• Motion capture
• Recognition from motion
• Surveillance
• Targeting

Things to consider in tracking
What are the
• Real world dynamics
• Approximate / assumed model
• Observation / measurement process

Density propagation
• Tracking == Inference over time
• Much simplification is possible with linear dynamics and Gaussian probability models

Outline
• Recursive filters
• State abstraction
• Density propagation
• Linear Dynamic models / Kalman filter
• Data association
• Multiple models

Tracking and Recursive estimation
• Real-time / interactive imperative.
• Task: At each time point, re-compute estimate of position or pose.
– At time n, fit model to data using time 0…n
– At time n+1, fit model to data using time 0…n+1
• Repeat batch fit every time?

Recursive estimation
• Decompose estimation problem
– part that depends on new observation
– part that can be computed from previous history
• E.g., running average:
$$a_t = \alpha\, a_{t-1} + (1 - \alpha)\, y_t$$
• Linear Gaussian models: Kalman Filter
• First, general framework…

Tracking
• Very general model:
– We assume there are moving objects, which have an underlying state X
– There are measurements Y, some of which are functions of this state
– There is a clock
• at each tick, the state changes
• at each tick, we get a new observation
• Examples
– object is ball, state is 3D position+velocity, measurements are stereo pairs
– object is person, state is body configuration, measurements are frames, clock is in camera (30 fps)
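
The running average on the "Recursive estimation" slide is a small instance of the decomposition it describes: the previous estimate summarizes the history, and each new observation is folded in without refitting to all past data. A minimal sketch follows; the function name `running_average` and the `initial` argument are illustrative choices, not from the slides.

```python
def running_average(observations, alpha, initial):
    """Recursive estimate a_t = alpha * a_{t-1} + (1 - alpha) * y_t.

    `initial` plays the role of a_0 (an assumption: the slide does not
    say how the recursion is started).
    """
    estimate = initial
    history = []
    for y in observations:
        # Part from previous history (alpha * estimate) plus
        # part from the new observation ((1 - alpha) * y).
        estimate = alpha * estimate + (1.0 - alpha) * y
        history.append(estimate)
    return history
```

Each update costs a constant amount of work, whereas repeating a batch fit over time 0…n grows with n.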

Three main issues in tracking

Simplifying Assumptions

Kalman filter graphical model
[figure: chain of states x_1, x_2, x_3, x_4, each with an attached measurement y_1, y_2, y_3, y_4]

Tracking as induction
• Assume data association is done
– we’ll talk about this later; a dangerous assumption
• Do correction for the 0’th frame
• Assume we have corrected estimate for i’th frame
– show we can do prediction for i+1, correction for i+1

Base case

Induction step

Update step

Linear dynamic models
• A linear dynamic model has the form
$$x_i \sim N\left(D_{i-1}\, x_{i-1};\ \Sigma_{d_i}\right)$$
$$y_i \sim N\left(M_i\, x_i;\ \Sigma_{m_i}\right)$$
• This is much, much more general than it looks, and extremely powerful

Examples
• Drifting points
– assume that the new position of the point is the old one, plus noise
$$D = \mathrm{Id}$$
[figures from cic.nist.gov/lipman/sciviz/images/random3.gif and http://www.grunch.net/synergetics/images/random3.jpg]

Constant velocity
• We have
$$u_i = u_{i-1} + \Delta t\, v_{i-1} + \varepsilon_i$$
$$v_i = v_{i-1} + \varsigma_i$$
– (the Greek letters denote noise terms)
• Stack (u, v) into a single state vector
$$\begin{pmatrix} u \\ v \end{pmatrix}_i = \begin{pmatrix} 1 & \Delta t \\ 0 & 1 \end{pmatrix} \begin{pmatrix} u \\ v \end{pmatrix}_{i-1} + \text{noise}$$
– which is the form we had above

[figure: Constant Velocity Model. Panels: velocity vs. position; position vs. time; measurement and position vs. time]

Constant acceleration
• We have
$$u_i = u_{i-1} + \Delta t\, v_{i-1} + \varepsilon_i$$
$$v_i = v_{i-1} + \Delta t\, a_{i-1} + \varsigma_i$$
$$a_i = a_{i-1} + \xi_i$$
– (the Greek letters denote noise terms)
• Stack (u, v, a) into a single state vector
$$\begin{pmatrix} u \\ v \\ a \end{pmatrix}_i = \begin{pmatrix} 1 & \Delta t & 0 \\ 0 & 1 & \Delta t \\ 0 & 0 & 1 \end{pmatrix} \begin{pmatrix} u \\ v \\ a \end{pmatrix}_{i-1} + \text{noise}$$
– which is the form we had above
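
The constant-velocity and constant-acceleration models differ only in the matrix D applied to the stacked state. The sketch below builds both matrices and applies one step of the dynamics. The helper names (`constant_velocity_D`, `constant_acceleration_D`, `step`) are hypothetical, and drawing the noise from a zero-mean Gaussian with covariance `noise_cov` follows the N(·; Σ_d) form above.

```python
import numpy as np

def constant_velocity_D(dt):
    """D for state (u, v): u += dt * v, v unchanged."""
    return np.array([[1.0, dt],
                     [0.0, 1.0]])

def constant_acceleration_D(dt):
    """D for state (u, v, a): u += dt * v, v += dt * a, a unchanged."""
    return np.array([[1.0, dt, 0.0],
                     [0.0, 1.0, dt],
                     [0.0, 0.0, 1.0]])

def step(D, x, noise_cov=None, rng=None):
    """One step x_i = D x_{i-1} + noise.

    If noise_cov is None the noise term is omitted, which gives the
    mean of the next state.
    """
    x_next = D @ x
    if noise_cov is not None:
        rng = np.random.default_rng() if rng is None else rng
        x_next = x_next + rng.multivariate_normal(np.zeros(len(x)), noise_cov)
    return x_next
```

For example, `step(constant_velocity_D(0.5), np.array([1.0, 2.0]))` moves the position by 0.5 × 2.0 and leaves the velocity unchanged.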

[figure: Constant Acceleration Model. Panels: velocity vs. position; position vs. time]

Periodic motion
Assume we have a point, moving on a line with a periodic movement defined with a differential eq:
can be defined as
with state defined as stacked position and velocity u=(p, v)

Periodic motion
Take discrete approximation… (e.g., forward Euler integration with ∆t stepsize.)

Higher order models
• Independence assumption
• Velocity and/or acceleration augmented position
• Constant velocity model equivalent to
– velocity ==
– acceleration ==
– could also use , etc.

The Kalman Filter
• Key ideas:
– Linear models interact uniquely well with Gaussian noise: make the prior Gaussian, everything else Gaussian and the calculations are easy
– Gaussians are really easy to represent: once you know the mean and covariance, you’re done

Recall the three main issues in tracking
(Ignore data association for now)
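
The periodic-motion slides lost their equations in extraction, so the following is only a sketch under a stated assumption: that the differential equation is the harmonic oscillator d²p/dt² = −p, written as du/dt = S u with u = (p, v). Forward Euler with step ∆t then gives u_i = u_{i−1} + ∆t S u_{i−1}, which is again of the form x_i = D x_{i−1}. The matrix `S` and the function name `periodic_D` are assumptions, not recovered slide content.

```python
import numpy as np

def periodic_D(dt):
    """Forward-Euler transition matrix D = I + dt * S.

    Assumes the ODE dp/dt = v, dv/dt = -p (harmonic oscillator);
    the slide's own equation was not recoverable.
    """
    S = np.array([[0.0, 1.0],
                  [-1.0, 0.0]])
    return np.eye(2) + dt * S
```

With this choice the eigenvalues of D have magnitude sqrt(1 + ∆t²), so the discrete trajectory slowly spirals outward; a smaller ∆t reduces the drift.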

The Kalman Filter
[figure from http://www.cs.unc.edu/~welch/kalman/kalmanIntro.html]

The Kalman Filter in 1D
• Dynamic Model
• Notation
– Predicted mean
– Corrected mean

Prediction for 1D Kalman filter
• The new state is obtained by
– multiplying old state by known constant
– adding zero-mean noise
• Therefore, predicted mean for new state is
– constant times mean for old state
• Old state is a normal random variable, so
– variance is multiplied by square of constant
– and variance of noise is added.
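
The 1D prediction rules above translate directly into two lines of arithmetic. In this sketch the names are illustrative: `d` is the known constant, `noise_var` the variance of the zero-mean process noise, and `mean`, `var` describe the corrected estimate at the previous step.

```python
def predict_1d(mean, var, d, noise_var):
    """Prediction for x_i = d * x_{i-1} + noise, noise ~ N(0, noise_var)."""
    pred_mean = d * mean               # constant times mean for old state
    pred_var = d * d * var + noise_var  # variance scaled by d^2, plus noise variance
    return pred_mean, pred_var
```

With no new measurement the predicted variance never falls below `noise_var`, which is why repeated prediction alone makes the estimate less certain over time.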

Correction for 1D Kalman filter
Notice:
– if measurement noise is small, we rely mainly on the measurement,
– if it’s large, mainly on the prediction
– σ does not depend on y

[figures: Constant Velocity Model. Panels: velocity vs. position; position vs. time. The o-s give state, x-s measurement.]
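
The correction equations themselves did not survive extraction, so this sketch uses the standard 1D Kalman correction for a measurement y = m·x + noise with noise variance `meas_var`; treat the exact parameterization as an assumption. It reproduces the three observations on the slide.

```python
def correct_1d(pred_mean, pred_var, y, m, meas_var):
    """Standard 1D Kalman correction (assumed form, see lead-in).

    Measurement model: y = m * x + noise, noise ~ N(0, meas_var).
    """
    denom = meas_var + m * m * pred_var
    # Weighted blend of the prediction and the measurement.
    mean = (pred_mean * meas_var + m * y * pred_var) / denom
    # Corrected variance: no y appears here.
    var = meas_var * pred_var / denom
    return mean, var
```

As `meas_var` goes to 0 the corrected mean tends to y / m (the measurement); as it grows the mean tends to `pred_mean` (the prediction).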

Smoothing
• Idea
– We don’t have the best estimate of state: what about the future?
– Run two filters, one moving forward, the other backward in time.
– Now combine state estimates
• The crucial point here is that we can obtain a smoothed estimate by viewing the backward filter’s prediction as yet another measurement for the forward filter

[figures: position vs. time, in three panels: Forward estimates; Backward estimates; Combined forward-backward estimates. The o-s give state, x-s measurement.]

n-D
Generalization to n-D is straightforward but more complex.
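
In 1D, treating the backward filter's prediction as one more direct measurement of the state reduces the combination step to an inverse-variance weighted average of two Gaussian estimates. This is a sketch under that assumption (a measurement with unit gain); the function name `combine_estimates` is illustrative.

```python
def combine_estimates(fwd_mean, fwd_var, bwd_mean, bwd_var):
    """Fuse forward and backward Gaussian estimates of the same state.

    Assumes the backward prediction acts as a direct measurement of
    the state, with variance bwd_var.
    """
    total = fwd_var + bwd_var
    mean = (fwd_mean * bwd_var + bwd_mean * fwd_var) / total
    var = fwd_var * bwd_var / total
    return mean, var
```

The fused variance is never larger than either input variance, which matches the smaller uncertainty of the combined forward-backward estimates.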

n-D Prediction
Prediction:
• Multiply estimate at prior time with forward model:
• Propagate covariance through model and add new noise:

n-D Correction
Correction:
• Update a priori estimate with measurement to form a posteriori

n-D correction
Find linear filter on innovations which minimizes a posteriori error covariance:
$$E\left[(x - x^{+})(x - x^{+})^{T}\right]$$
K is the Kalman Gain matrix. A solution is

Kalman Gain Matrix
As measurement becomes more reliable, K weights residual more heavily,
$$\lim_{\Sigma_m \to 0} K_i = M^{-1}$$
As prior covariance approaches 0, measurements are ignored:
$$\lim_{\Sigma_i^{-} \to 0} K_i = 0$$
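
The n-D equations were lost in extraction, so the sketch below uses the standard textbook Kalman prediction and correction steps, written with the slide's symbols D, M, Σ_d, Σ_m. The function names and the use of `P` for the state covariance are assumptions. It can be used to check the two limits of the gain matrix numerically.

```python
import numpy as np

def predict(x, P, D, Sigma_d):
    """Multiply estimate by the forward model; propagate covariance and add noise."""
    x_pred = D @ x
    P_pred = D @ P @ D.T + Sigma_d
    return x_pred, P_pred

def correct(x_pred, P_pred, y, M, Sigma_m):
    """Update the a priori estimate with measurement y (standard form, assumed)."""
    S = M @ P_pred @ M.T + Sigma_m            # innovation covariance
    K = P_pred @ M.T @ np.linalg.inv(S)       # Kalman gain
    x = x_pred + K @ (y - M @ x_pred)         # weight the residual by K
    P = (np.eye(len(x_pred)) - K @ M) @ P_pred
    return x, P, K
```

With an invertible M and Σ_m close to zero the returned K approaches M⁻¹; with `P_pred` close to zero it approaches the zero matrix, so the measurement is ignored.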

2-D constant velocity example from Kevin Murphy’s Matlab toolbox
• MSE of filtered estimate is 4.9; of smoothed estimate, 3.2.
• Not only is the smoothed estimate better, but we know that it is better, as illustrated by the smaller uncertainty ellipses
• Note how the smoothed ellipses are larger at the ends, because these points have seen less data.
• Also, note how rapidly the filtered ellipses reach their steady-state (“Riccati”) values.
[figure from http://www.ai.mit.edu/~murphyk/Software/Kalman/kalman.html]

Data Association
In real world y_i have clutter as well as data…
E.g., match radar returns to set of aircraft trajectories.
Approaches:
• Nearest neighbours
– choose the measurement with highest probability given predicted state
– popular, but can lead to catastrophe
• Probabilistic Data Association
– combine measurements, weighting by probability given predicted state
– gate using predicted state

[figures: position vs. time. Red: tracks of 10 drifting points. Blue, black: point being tracked]
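
A minimal 1D sketch of the nearest-neighbour approach: for a Gaussian predicted measurement, the most probable candidate is the one closest to the predicted mean. The gate is borrowed from the Probabilistic Data Association bullet and expressed in standard deviations; the default of 3.0 and the function name `nearest_neighbour` are illustrative assumptions.

```python
import math

def nearest_neighbour(measurements, pred_mean, pred_var, gate=3.0):
    """Pick the measurement most probable under N(pred_mean, pred_var).

    Returns None when there are no measurements or when the best one
    lies more than `gate` standard deviations from the prediction.
    """
    if not measurements:
        return None
    # For a 1D Gaussian, highest likelihood means smallest distance to the mean.
    best = min(measurements, key=lambda y: abs(y - pred_mean))
    if abs(best - pred_mean) > gate * math.sqrt(pred_var):
        return None
    return best
```

Because it commits to a single measurement, one wrong pick from clutter can pull the track away, which is the catastrophe the slide warns about.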
