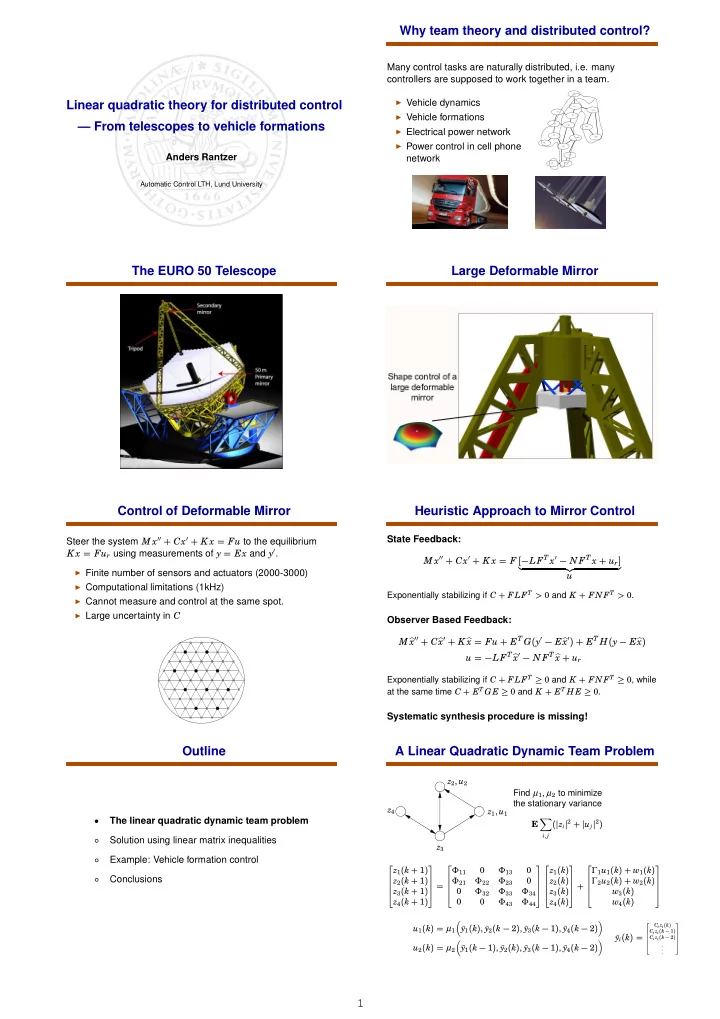

Linear quadratic theory for distributed control: From telescopes to vehicle formations

Anders Rantzer, Automatic Control LTH, Lund University

Why team theory and distributed control?

Many control tasks are naturally distributed, i.e. many controllers are supposed to work together in a team.

◮ Vehicle dynamics
◮ Vehicle formations
◮ Electrical power network
◮ Power control in cell phone network

[Figure: network graph with nodes G1 to G16]

The EURO 50 Telescope [figure]

Large Deformable Mirror [figure]

Control of Deformable Mirror

Steer the system

$$M x'' + C x' + K x = F u$$

to the equilibrium $K x = F u_r$ using measurements of $y = E x$ and $y'$.

◮ Finite number of sensors and actuators (2000-3000)
◮ Computational limitations (1 kHz)
◮ Cannot measure and control at the same spot.
◮ Large uncertainty in $C$

Heuristic Approach to Mirror Control

State Feedback:

$$M x'' + C x' + K x = F \underbrace{\left[ -L F^T x' - N F^T x + u_r \right]}_{u}$$

Exponentially stabilizing if $C + F L F^T > 0$ and $K + F N F^T > 0$.

Observer Based Feedback:

$$M \hat{x}'' + C \hat{x}' + K \hat{x} = F u + E^T G (y' - E \hat{x}') + E^T H (y - E \hat{x})$$

$$u = -L F^T \hat{x}' - N F^T \hat{x} + u_r$$

Exponentially stabilizing if $C + F L F^T \ge 0$ and $K + F N F^T \ge 0$, while at the same time $C + E^T G E \ge 0$ and $K + E^T H E \ge 0$.

Systematic synthesis procedure is missing!

Outline

• The linear quadratic dynamic team problem
○ Solution using linear matrix inequalities
○ Example: Vehicle formation control
○ Conclusions

A Linear Quadratic Dynamic Team Problem

[Figure: interconnection graph with nodes $(z_1, u_1)$, $(z_2, u_2)$, $z_3$, $z_4$]

Find $\mu_1, \mu_2$ to minimize the stationary variance

$$\mathbf{E} \sum_{i,j} \left( \lvert z_i \rvert^2 + \lvert u_j \rvert^2 \right)$$

$$\begin{bmatrix} z_1(k+1) \\ z_2(k+1) \\ z_3(k+1) \\ z_4(k+1) \end{bmatrix} = \begin{bmatrix} \Phi_{11} & 0 & \Phi_{13} & 0 \\ \Phi_{21} & \Phi_{22} & \Phi_{23} & 0 \\ 0 & \Phi_{32} & \Phi_{33} & \Phi_{34} \\ 0 & 0 & \Phi_{43} & \Phi_{44} \end{bmatrix} \begin{bmatrix} z_1(k) \\ z_2(k) \\ z_3(k) \\ z_4(k) \end{bmatrix} + \begin{bmatrix} \Gamma_1 u_1(k) + w_1(k) \\ \Gamma_2 u_2(k) + w_2(k) \\ w_3(k) \\ w_4(k) \end{bmatrix}$$

$$u_1(k) = \mu_1\big( \bar{y}_1(k), \bar{y}_2(k-2), \bar{y}_3(k-1), \bar{y}_4(k-2) \big)$$

$$u_2(k) = \mu_2\big( \bar{y}_1(k-1), \bar{y}_2(k), \bar{y}_3(k-1), \bar{y}_4(k-2) \big)$$

$$\bar{y}_i(k) = \begin{bmatrix} C_i z_i(k) \\ C_i z_i(k-1) \\ C_i z_i(k-2) \\ \vdots \end{bmatrix}$$
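The state-feedback condition from the mirror slides ($C + F L F^T > 0$ and $K + F N F^T > 0$) can be checked numerically. The sketch below is only an illustration under assumed data: the 3-mode matrices `M`, `C`, `K`, `F` and the gains `L`, `N` are hypothetical toy values, not telescope parameters, and the helper names are made up for this example. It tests the two definiteness conditions and, independently, the eigenvalues of the first-order closed-loop matrix.

```python
import numpy as np


def is_positive_definite(S):
    """True if the symmetric part of S has only positive eigenvalues."""
    return bool(np.all(np.linalg.eigvalsh((S + S.T) / 2) > 0))


def closed_loop_matrix(M, C, K, F, L, N):
    """First-order form of M x'' + (C + F L F^T) x' + (K + F N F^T) x = F u_r."""
    n = M.shape[0]
    C_cl = C + F @ L @ F.T  # damping after velocity feedback
    K_cl = K + F @ N @ F.T  # stiffness after position feedback
    A = np.block([
        [np.zeros((n, n)), np.eye(n)],
        [-np.linalg.solve(M, K_cl), -np.linalg.solve(M, C_cl)],
    ])
    return A, C_cl, K_cl


# Hypothetical toy model: 3 modes, 2 actuators (assumed values).
M = np.eye(3)
K = np.array([[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]])
C = 0.01 * np.eye(3)  # small, poorly known natural damping
F = np.array([[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]])
L = np.eye(2)
N = np.eye(2)

A_cl, C_cl, K_cl = closed_loop_matrix(M, C, K, F, L, N)
condition_holds = is_positive_definite(C_cl) and is_positive_definite(K_cl)
max_real_part = float(np.max(np.linalg.eigvals(A_cl).real))
print("definiteness condition holds:", condition_holds)
print("largest closed-loop real part:", max_real_part)
```

With only two actuators for three modes, $F L F^T$ alone is rank deficient, so in this toy case the strict inequality relies on the small natural damping in `C`. That mirrors the slide's remark that large uncertainty in $C$ is a practical difficulty.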

The Witsenhausen counterexample

$$x_1 = x_0 + \mu_1(x_0)$$

$$x_2 = x_1 - \mu_2(x_1 + v)$$

Minimize $\mathbf{E}\left[ k^2 (\mu_1(x_0))^2 + (x_2)^2 \right]$ when $x_0$ and $v$ are given Gaussian variables.

The best controllers are not linear. The output of the first controller appears as input of the second. Hence there is an incentive for the first controller to "signal" its information to the second controller.

[Radner (1962) Team decision problems]
[Witsenhausen (1968) A counterexample in stochastic control]

Finite-time LQ optimal control

[Yu-Chi Ho and K'ai-Ching Chu (1972)]: "If a decision-maker's action affects our information, then knowing what he knows will yield linear optimal solutions"

[Figure: interconnection graph with nodes $(z_1, u_1)$, $(z_2, u_2)$, $z_3$, $z_4$]

Find $\mu_1, \mu_2$ to minimize

$$\mathbf{E} \sum_{k=0}^{T} \sum_{i,j} \left( \lvert z_i(k) \rvert^2 + \lvert u_j(k) \rvert^2 \right)$$

$$\begin{bmatrix} z_1(k+1) \\ z_2(k+1) \\ z_3(k+1) \\ z_4(k+1) \end{bmatrix} = \begin{bmatrix} \Phi_{11} & 0 & \Phi_{13} & 0 \\ \Phi_{21} & \Phi_{22} & \Phi_{23} & 0 \\ 0 & \Phi_{32} & \Phi_{33} & \Phi_{34} \\ 0 & 0 & \Phi_{43} & \Phi_{44} \end{bmatrix} \begin{bmatrix} z_1(k) \\ z_2(k) \\ z_3(k) \\ z_4(k) \end{bmatrix} + \begin{bmatrix} \Gamma_1 u_1(k) + w_1(k) \\ \Gamma_2 u_2(k) + w_2(k) \\ w_3(k) \\ w_4(k) \end{bmatrix}$$

$$u_1(k) = \mu_1\big( \bar{y}_1(k), \bar{y}_2(k-2), \bar{y}_3(k-1), \bar{y}_4(k-2) \big)$$

$$u_2(k) = \mu_2\big( \bar{y}_1(k-1), \bar{y}_2(k), \bar{y}_3(k-1), \bar{y}_4(k-2) \big)$$

$$\bar{y}_i(k) = \begin{bmatrix} C_i z_i(k) \\ C_i z_i(k-1) \\ C_i z_i(k-2) \\ \vdots \end{bmatrix}$$

Convexity in distributed control

A recent observation by [Bamieh, Voulgaris (2002)] and [Rotkowitz, Lall (2002)]:

The signaling incentive disappears and the distributed control synthesis problem becomes convex provided that communication links propagate information faster than the process does.

Quadratic Invariance [Rotkowitz, Lall (2002)]

Let $S$ be a linear space.

Original problem:

$$\underset{K \in S}{\text{Minimize}} \; \left\lVert T_{11} + T_{12} K (I - T_{22} K)^{-1} T_{21} \right\rVert$$

Modified problem:

$$\underset{Q \in S}{\text{Minimize}} \; \left\lVert T_{11} + T_{12} Q T_{21} \right\rVert$$

Conditions for equivalence between the two: The two are equivalent if $S$ is quadratically invariant under $T_{22}$, i.e.

$$K T_{22} K \in S \quad \text{for all } K \in S$$

This holds even for nonlinear operators if

$$K_1 T_{22} K_2 \in S \quad \text{for all } K_1, K_2 \in S$$

Outline

○ The linear quadratic dynamic team problem
• Solution using linear matrix inequalities
○ Example: Vehicle formation control
○ Conclusions

Standard linear quadratic optimal control

Find $u = Lx$ to minimize $\mathbf{E}\left( x^T Q_{xx} x + u^T Q_{uu} u + 2 x^T Q_{xu} u \right)$ when

$$x^+ = A x + B u + w, \qquad \mathbf{E}\, w w^T = I$$

Solution by convex optimization:

$$\text{Minimize} \quad \operatorname{trace}\left( \begin{bmatrix} Q_{xx} & Q_{xu} \\ Q_{ux} & Q_{uu} \end{bmatrix} \begin{bmatrix} X_{xx} & X_{xu} \\ X_{ux} & X_{uu} \end{bmatrix} \right)$$

$$\text{subject to} \quad X_{xx} = \begin{bmatrix} A & B & I \end{bmatrix} \underbrace{\begin{bmatrix} X_{xx} & X_{xu} & 0 \\ X_{ux} & X_{uu} & 0 \\ 0 & 0 & I \end{bmatrix}}_{> 0} \begin{bmatrix} A^T \\ B^T \\ I \end{bmatrix}$$

Then put $u = X_{ux} X_{xx}^{-1} x$.

Control with disturbance measurements

Find $u = Lx + Mw$ to minimize $\mathbf{E}\left( x^T Q_{xx} x + u^T Q_{uu} u + 2 x^T Q_{xu} u \right)$ when

$$x^+ = A x + B u + w, \qquad \mathbf{E}\, w w^T = I$$

Solution by convex optimization:

$$\text{Minimize} \quad \operatorname{trace}\left( \begin{bmatrix} Q_{xx} & Q_{xu} \\ Q_{ux} & Q_{uu} \end{bmatrix} \begin{bmatrix} X_{xx} & X_{xu} \\ X_{ux} & X_{uu} \end{bmatrix} \right)$$

$$\text{subject to} \quad X_{xx} = \begin{bmatrix} A & B & I \end{bmatrix} \underbrace{\begin{bmatrix} X_{xx} & X_{xu} & 0 \\ X_{ux} & X_{uu} & X_{uw} \\ 0 & X_{wu} & I \end{bmatrix}}_{> 0} \begin{bmatrix} A^T \\ B^T \\ I \end{bmatrix}$$

Then put $u = X_{ux} X_{xx}^{-1} x + X_{uw} w$.

With one-step delay information pattern

Find $u = Lx + \begin{bmatrix} M_1 & 0 \\ 0 & M_2 \end{bmatrix} w$ to minimize $\mathbf{E}\left( x^T Q_{xx} x + u^T Q_{uu} u + 2 x^T Q_{xu} u \right)$ where

$$x^+ = A x + B u + w, \qquad \mathbf{E}\, w w^T = I$$

Solution by convex optimization:

$$\text{Minimize} \quad \operatorname{trace}\left( \begin{bmatrix} Q_{xx} & Q_{xu} \\ Q_{ux} & Q_{uu} \end{bmatrix} \begin{bmatrix} X_{xx} & X_{xu} \\ X_{ux} & X_{uu} \end{bmatrix} \right)$$

$$\text{subject to} \quad X_{xx} = \begin{bmatrix} A & B & I \end{bmatrix} \underbrace{\begin{bmatrix} X_{xx} & X_{xu} & 0 \\ X_{ux} & X_{uu} & X_{uw} \\ 0 & X_{wu} & I \end{bmatrix}}_{> 0} \begin{bmatrix} A^T \\ B^T \\ I \end{bmatrix}, \qquad X_{uw} = X_{wu}^T = \begin{bmatrix} M_1 & 0 \\ 0 & M_2 \end{bmatrix}$$

Then put $u = X_{ux} X_{xx}^{-1} x + X_{uw} w$.
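The covariance formulation of standard LQ control can be sanity-checked without an SDP solver. The sketch below does not solve the semidefinite program. Instead it computes the LQ gain by a plain Riccati iteration (a swapped-in classical technique), builds the stationary covariances $X_{xx}$, $X_{ux}$, $X_{uu}$ that this gain produces, and checks three things: the equality constraint holds, the trace objective equals the Riccati cost $\operatorname{trace} P$, and $X_{ux} X_{xx}^{-1}$ recovers the gain. The system matrices and function names are hypothetical example choices.

```python
import numpy as np


def lq_gain(A, B, Qxx, Qxu, Quu, iters=2000):
    """Riccati value iteration with cross term; returns gain L (u = L x) and P."""
    P = Qxx.copy()
    for _ in range(iters):
        S = Quu + B.T @ P @ B
        G = B.T @ P @ A + Qxu.T
        P = A.T @ P @ A + Qxx - G.T @ np.linalg.solve(S, G)
    L = -np.linalg.solve(Quu + B.T @ P @ B, B.T @ P @ A + Qxu.T)
    return L, P


def stationary_covariance(A, B, L):
    """Solve Xxx = Acl Xxx Acl^T + I via a Kronecker-product linear system."""
    n = A.shape[0]
    Acl = A + B @ L
    rhs = np.eye(n).reshape(-1)
    Xxx = np.linalg.solve(np.eye(n * n) - np.kron(Acl, Acl), rhs).reshape(n, n)
    return Xxx, L @ Xxx, L @ Xxx @ L.T


# Hypothetical example data (double-integrator-like system).
A = np.array([[1.0, 0.2], [0.0, 1.0]])
B = np.array([[0.0], [1.0]])
Qxx, Quu, Qxu = np.eye(2), np.eye(1), np.zeros((2, 1))

L, P = lq_gain(A, B, Qxx, Qxu, Quu)
Xxx, Xux, Xuu = stationary_covariance(A, B, L)

n, m = B.shape
X = np.block([[Xxx, Xux.T], [Xux, Xuu]])
Q = np.block([[Qxx, Qxu], [Qxu.T, Quu]])
ABI = np.hstack([A, B, np.eye(n)])
middle = np.block([
    [X, np.zeros((n + m, n))],
    [np.zeros((n, n + m)), np.eye(n)],
])

constraint_residual = float(np.max(np.abs(Xxx - ABI @ middle @ ABI.T)))
cost_trace = float(np.trace(Q @ X))
L_recovered = Xux @ np.linalg.inv(Xxx)
print("constraint residual:", constraint_residual)
print("trace(QX):", cost_trace, "trace(P):", float(np.trace(P)))
```

The point of the parametrization is that both the objective and the constraint are linear in $X$, which is what makes the structured variants (with $X_{uw}$ restricted to a block-diagonal pattern) tractable by the same machinery.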

Lagrange multiplier method works here!

Already in Yakubovich's SCL-92 paper, a new result by Megretski and Treil was used to prove that

$$\text{Minimize } \varphi_0(u) \quad \text{subject to } \varphi_1(u) = 0, \ldots, \varphi_n(u) = 0$$

is equivalent to

$$\text{Minimize } \varphi_0(u) + \lambda_1 \varphi_1(u) + \cdots + \lambda_n \varphi_n(u)$$

for some value of $\lambda_1, \ldots, \lambda_n$.

Hence covariance constraints can be used for distributed control.

Theorem 1

The following statements are equivalent for the system

$$z(t+1) = \Phi z(t) + \Gamma \left[ u(t) + w(t) \right] \tag{1}$$

(i) There exist feedback laws

$$u_i(t) = \mu_i\big( \bar{z}_1(t - d_{i1}), \ldots, \bar{z}_J(t - d_{iJ}) \big)$$

that together with (1) have a stationary zero mean solution satisfying $\mathbf{E} \lvert Cz + Du \rvert^2 \le \gamma$.

(ii) There exists a feedback law $u(k) = \mu(x)$ that together with (1) has a stationary zero mean solution satisfying

$$\mathbf{E} \lvert Cz + Du \rvert^2 \le \gamma$$

$$\mathbf{E}\, u_i(k) w_j(k - l) = 0 \quad \text{for } 1 \le j \le J \text{ and } 1 \le l \le d_{ij}.$$

Separation holds because the second problem is ordinary LQG!

Outline

○ The linear quadratic dynamic team problem
○ Solution using linear matrix inequalities
• Example: Vehicle formation control
○ Conclusions

A vehicle formation

[Figure: five vehicles in a row at positions $x_1, x_2, x_3, x_4, x_5$]

The objective is to minimize $\mathbf{E} \sum_{i=1}^{4} \lvert x_{i+1} - x_i \rvert^2$.

Each vehicle obeys the independent dynamics

$$z_i(k+1) = z_i(k) + u_i(k) + w_i(k)$$

Resulting spacing variances:

| Information pattern | $\mathbf{E}\lvert x_1 - x_2 \rvert^2$ | $\mathbf{E}\lvert x_2 - x_3 \rvert^2$ | $\mathbf{E}\lvert x_3 - x_4 \rvert^2$ | $\mathbf{E}\lvert x_4 - x_5 \rvert^2$ |
| --- | --- | --- | --- | --- |
| No communication | 3.40 | 3.40 | 3.40 | 3.40 |
| Full state information everywhere | 3.13 | 3.16 | 3.16 | 3.13 |
| (pattern label not in the extracted text) | 3.30 | 3.32 | 3.33 | 3.25 |

Outline

○ The linear quadratic dynamic team problem
○ Solution using linear matrix inequalities
○ Example: Vehicle formation control
• Conclusions
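For a given linear feedback law, the stationary spacing variances in the vehicle formation follow from a discrete Lyapunov equation. The sketch below assumes a hypothetical nearest-neighbor law $u = -g D^T D z$, where $D$ maps positions to spacings and the scalar gain $g$ is an arbitrary choice. It is not the optimized controller from the slides and is not expected to reproduce the tabulated values (the slides' full cost weights and information patterns are not given in the text). It only shows how $\mathbf{E}\lvert x_{i+1} - x_i \rvert^2$ can be computed for the dynamics $z_i(k+1) = z_i(k) + u_i(k) + w_i(k)$ with unit-variance, independent $w_i$.

```python
import numpy as np


def spacing_covariance(num_vehicles, gain):
    """Stationary covariance of d = D z under the assumed law u = -gain * D^T D z."""
    # Row i of D is e_{i+1} - e_i, so (D z)_i = z_{i+1} - z_i.
    D = np.diff(np.eye(num_vehicles), axis=0)
    m = num_vehicles - 1
    # Spacing dynamics: d(k+1) = (I - gain * D D^T) d(k) + D w(k).
    Ad = np.eye(m) - gain * (D @ D.T)
    if np.max(np.abs(np.linalg.eigvals(Ad))) >= 1.0:
        raise ValueError("gain does not stabilize the spacing dynamics")
    W = D @ D.T  # covariance of D w when E[w w^T] = I
    # Solve Sigma = Ad Sigma Ad^T + W as a linear system in vec(Sigma).
    vec_sigma = np.linalg.solve(np.eye(m * m) - np.kron(Ad, Ad), W.reshape(-1))
    return vec_sigma.reshape(m, m), Ad, W


# Five vehicles as on the slide; the gain 0.3 is a hypothetical choice.
Sigma, Ad, W = spacing_covariance(num_vehicles=5, gain=0.3)
spacing_variances = np.diag(Sigma)
lyapunov_residual = float(np.max(np.abs(Sigma - (Ad @ Sigma @ Ad.T + W))))
print("spacing variances:", spacing_variances)
```

Working in spacing coordinates avoids the common-mode drift of the whole formation, which no relative-position feedback can remove. Because the chain and the assumed law are symmetric under reversing the vehicle order, the computed variances are symmetric about the middle, as are the full-information values reported on the slide.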
