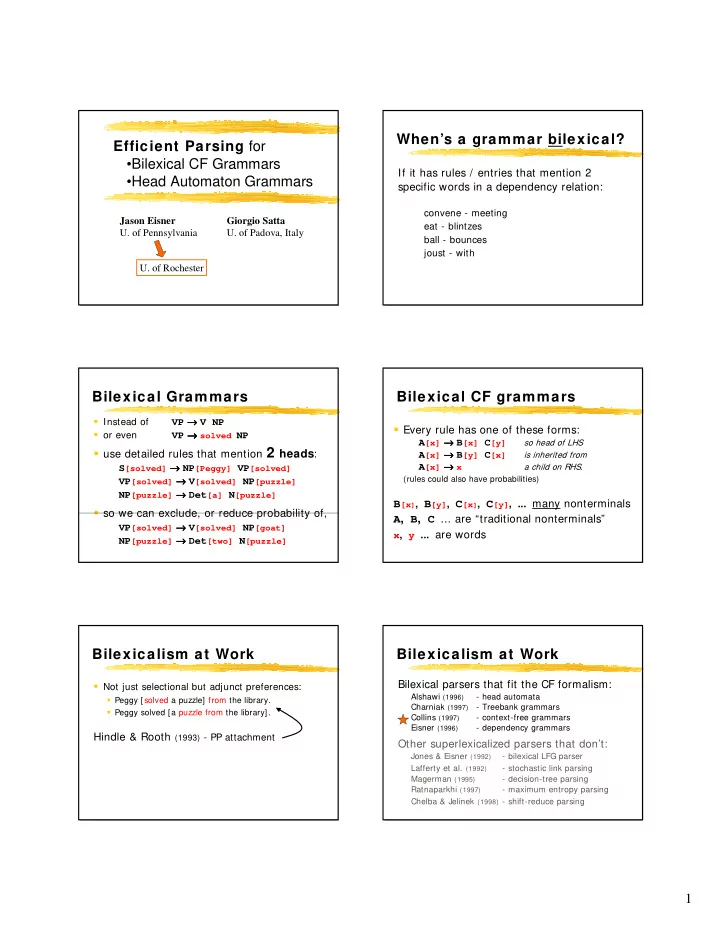

When’s a grammar bilexical? Efficient Parsing for •Bilexical CF Grammars If it has rules / entries that mention 2 •Head Automaton Grammars specific words in a dependency relation: convene - meeting Jason Eisner Giorgio Satta eat - blintzes U. of Pennsylvania U. of Padova, Italy ball - bounces joust - with U. of Rochester Bilexical Grammars Bilexical CF grammars VP → → → V NP → � Instead of � Every rule has one of these forms: VP → → solved NP → → � or even A [x] → → B [x] C [y] → → so head of LHS � use detailed rules that mention 2 heads : A [x] → → B [y] C [x] → → is inherited from A [x] → → x → → S [solved] → → → → NP [Peggy] VP [solved] a child on RHS. VP [solved] → → V [solved] NP [puzzle] → → (rules could also have probabilities) NP [puzzle] → → Det [a] N [puzzle] → → B [x ] , B [y] , C [x ] , C [y] , ... many nonterminals � so we can exclude, or reduce probability of, A , B , C ... are “traditional nonterminals” VP [solved] → → V [solved] NP [goat] → → x , y ... are words NP [puzzle] → → → → Det [two] N [puzzle] Bilexicalism at Work Bilexicalism at Work Bilexical parsers that fit the CF formalism: � Not just selectional but adjunct preferences: Alshawi (1996) - head automata � Peggy [solved a puzzle] from the library. Charniak (1997) - Treebank grammars � Peggy solved [a puzzle from the library]. Collins (1997) - context-free grammars Eisner (1996) - dependency grammars Hindle & Rooth (1993) - PP attachment Other superlexicalized parsers that don’t: Jones & Eisner (1992) - bilexical LFG parser Lafferty et al. (1992) - stochastic link parsing Magerman (1995) - decision-tree parsing Ratnaparkhi (1997) - maximum entropy parsing Chelba & Jelinek (1998) - shift-reduce parsing 1

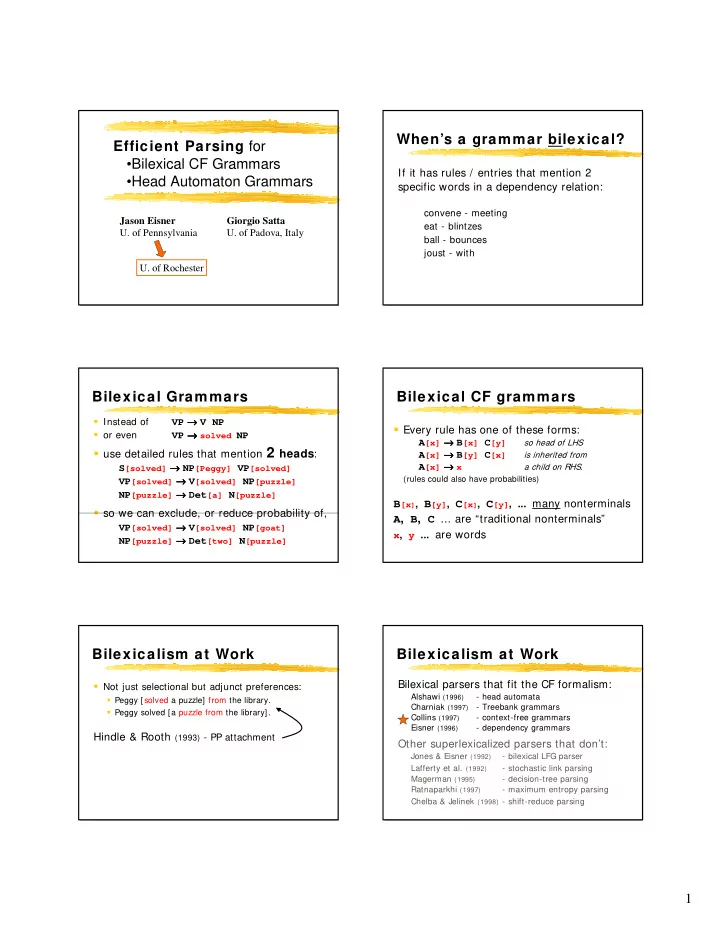

How bad is bilex CF parsing? The CKY-style algorithm A [x] → → → → B [x] C [y] [ Mary ] loves [ [ the ] girl [ outdoors ] ] � Grammar size = O(t 3 V 2 ) where t = | { A , B , ...} | V = | { x , y ...} | � So CKY takes O(t 3 V 2 n 3 ) � Reduce to O(t 3 n 5 ) since relevant V = n � This is terrible ... can we do better? � Recall: regular CKY is O(t 3 n 3 ) Why CKY is O(n 5 ) not O(n 3 ) Idea #1 ... advocate visiting relatives � Combine B with what C? ... hug visiting relatives B C � must try different-width C’s (vary k ) i h j j+1 h’ k O(n 3 combinations) B C � must try differently- headed C’s (vary h’ ) O(n 5 combinations) A i h j j+1 h’ k A � Separate these! i h k i h’ k Head Automaton Grammars Idea #1 (Alshawi 1996) [Good old Peggy] solved [the puzzle] [with her teeth] ! B C The head automaton for solved : h’ � a finite-state device i h j (the old CKY way) � can consume words adjacent to it on either side A � does so after they’ve consumed their dependents C B C C [Peggy] solved [puzzle] [with] (state = V) h’ [Peggy] solved [with] (state = VP) i j j+1 h’ k i h j j+1 h’ k [Peggy] solved (state = VP) A A solved (state = S; halt) i h’ k i h’ k 2

Formalisms too pow erful? Transform the grammar � So we have Bilex CFG and HAG in O(n 4 ). � Absent such cycles, � HAG is quite powerful - head c can require a n c b n : we can transform to a “split grammar”: ... [... a 3 ...] [... a 2 ...] [... a 1 ...] c [... b 1 ...] [... b 2 ...] [... b 3 ...] ... � Each head eats all its right dependents first not center-embedding, [a 3 [[a 2 [[a 1 ] b 1 ]] b 2 ]] b 3 � I.e., left dependents are more oblique. A A � Linguistically unattested and unlikely � Possible only if the HA has a left-right cycle � This allows � Absent such cycles, can we parse faster? i h h k � (for both HAG and equivalent Bilexical CFG) A i k Idea #2 Idea #2 � Combine what B and C? B C � must try different-width i h j j+1 h’ C’s (vary k ) (the old CKY way) A C B C B C C � must try different midpoints j h’ h’ k i h i h j j+1 h’ k i h j j+1 h’ k A A A � Separate these! i h’ k i h k i h k Idea #2 The O(n 3 ) half-tree algorithm [ Mary ] loves [ [ the ] girl [ outdoors ] ] B C h j j+1 h’ (the old CKY way) A C B C C h’ h’ k h i h j j+1 h’ k A A i h k h k 3

Theoretical Speedup Reality check � n = input length g = polysemy � Constant factor � t = traditional nonterms or automaton states � Pruning may do just as well � Naive: O(n 5 g 2 t) � “visiting relatives”: 2 plausible NP hypotheses � New: O(n 4 g 2 t) � i.e., both heads survive to compete - common?? � Even better for split grammars: � Amdahl’s law � Eisner (1997): O(n 3 g 3 t 2 ) � much of time spent smoothing probabilities � New: O(n 3 g 2 t) � fixed cost per parse if we cache probs for reuse all independent of vocabulary size! 3 parsers (pruned) Experimental Speedup (not in paper) 6000 5000 Used Eisner (1996) Treebank WSJ parser and its split bilexical grammar 4000 NAIVE IWPT-97 � Parsing with pruning: ACL-99 Time 3000 � Both old and new O(n 3 ) methods give 5x speedup over the O(n 5 ) - at 30 words 2000 � Exhaustive parsing (e.g., for EM): 1000 � Old O(n 3 ) method (Eisner 1997) gave 3x speedup over O(n 5 ) - at 30 words 0 0 10 20 30 40 50 60 � New O(n 3 ) method gives 19x speedup Sentence Length 3 parsers (pruned): log-log plot 3 parsers (exhaustive) 10000 80000 70000 y = cx 3.8 1000 60000 50000 NAIVE y = cx 2.7 IWPT-97 ACL-99 Time Time 100 40000 NAIVE IWPT-97 ACL-99 30000 10 20000 10000 1 0 10 100 0 10 20 30 40 50 60 Sentence Length Sentence Length 4

3 parsers (exhaustive): log-log plot 3 parsers 100000 80000 y = cx 5.2 70000 y = cx 4.2 10000 60000 NAIVE y = cx 3.3 50000 1000 IWPT-97 Time ACL-99 NAIVE Time IWPT-97 40000 ACL-99 NAIVE 100 IWPT-97 30000 ACL-99 20000 10 10000 1 0 10 100 0 10 20 30 40 50 60 Sentence Length Sentence Length 3 parsers: log-log plot 100000 Summary 10000 exhaustive � Simple bilexical CFG notion A [x] → → → → B [x] C [y] � Covers several existing stat NLP parsers 1000 pruned NAIVE Time IWPT-97 ACL-99 � Fully general O(n 4 ) algorithm - not O(n 5 ) NAIVE 100 IWPT-97 ACL-99 � Faster O(n 3 ) algorithm for the “split” case � Demonstrated practical speedup 10 � Extensions: TAGs and post-transductions 1 10 100 Sentence Length 5

Recommend

More recommend