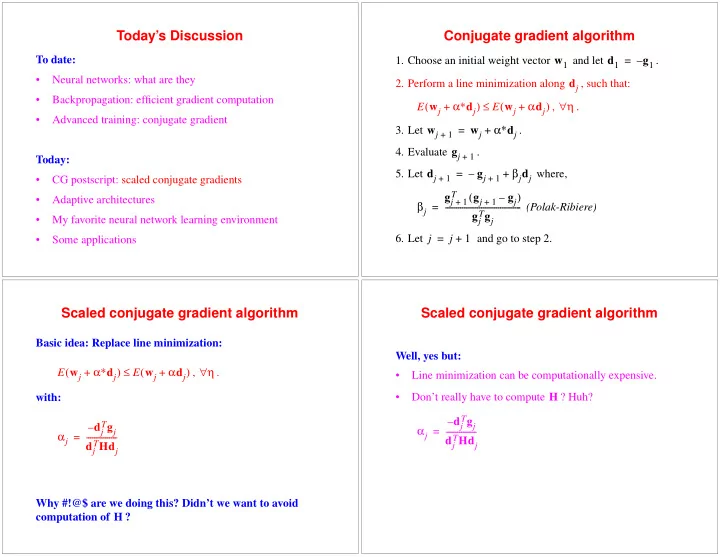

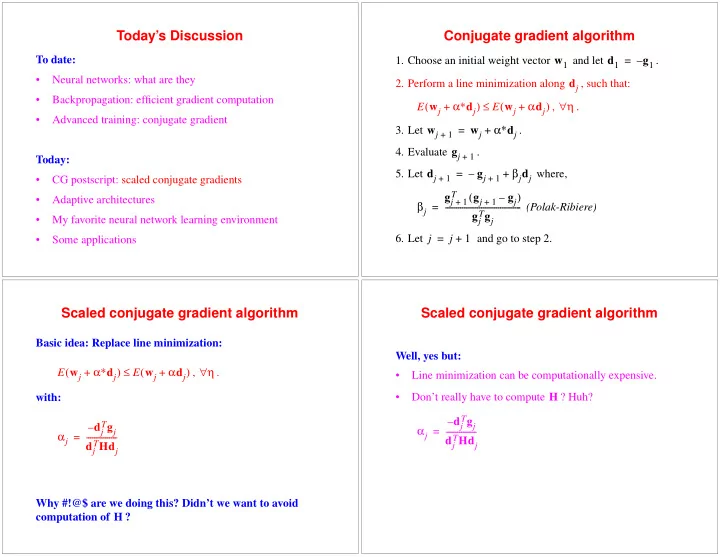

Today’s Discussion

To date:
• Neural networks: what are they
• Backpropagation: efficient gradient computation
• Advanced training: conjugate gradient

Today:
• CG postscript: scaled conjugate gradients
• Adaptive architectures
• My favorite neural network learning environment
• Some applications

Conjugate gradient algorithm

1. Choose an initial weight vector $w_1$ and let $d_1 = -g_1$.
2. Perform a line minimization along $d_j$, such that $E(w_j + \alpha^* d_j) \le E(w_j + \alpha d_j)$, $\forall \alpha$.
3. Let $w_{j+1} = w_j + \alpha^* d_j$.
4. Evaluate $g_{j+1}$.
5. Let $d_{j+1} = -g_{j+1} + \beta_j d_j$, where
$$\beta_j = \frac{g_{j+1}^T (g_{j+1} - g_j)}{g_j^T g_j} \quad \text{(Polak-Ribière)}$$
6. Let $j = j + 1$ and go to step 2.

Scaled conjugate gradient algorithm

Basic idea: replace the line minimization
$$E(w_j + \alpha^* d_j) \le E(w_j + \alpha d_j), \quad \forall \alpha,$$
with:
$$\alpha_j = \frac{-d_j^T g_j}{d_j^T H d_j}$$

Scaled conjugate gradient algorithm

Well, yes, but:
• Line minimization can be computationally expensive.
• We don't really have to compute $H$. Huh?

$$\alpha_j = \frac{-d_j^T g_j}{d_j^T H d_j}$$

Why #!@$ are we doing this? Didn't we want to avoid computing $H$?
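As a concrete illustration of the conjugate gradient steps above, here is a minimal Python/NumPy sketch. It assumes a quadratic error $E(w) = \frac{1}{2} w^T A w - b^T w$ (the matrix `A`, vector `b`, and the function name `cg_quadratic` are illustrative choices, not from the lecture). For this error $H = A$, so the line minimization of step 2 has exactly the closed form $\alpha_j = -d_j^T g_j / d_j^T H d_j$ that the scaled algorithm substitutes.

```python
import numpy as np


def cg_quadratic(A, b, w1, tol=1e-10):
    """Polak-Ribiere conjugate gradient on E(w) = 0.5 w^T A w - b^T w.

    For this quadratic, the line minimization of step 2 has the closed form
    alpha_j = -d_j^T g_j / (d_j^T A d_j), so no search is needed.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    w = np.asarray(w1, dtype=float)
    g = A @ w - b                          # g(w): gradient of E at w
    d = -g                                 # step 1: d_1 = -g_1
    for _ in range(len(b)):                # W steps suffice in exact arithmetic
        if np.linalg.norm(g) < tol:
            break
        alpha = -(d @ g) / (d @ A @ d)     # step 2: exact line minimum
        w = w + alpha * d                  # step 3
        g_new = A @ w - b                  # step 4
        beta = g_new @ (g_new - g) / (g @ g)   # step 5: Polak-Ribiere
        d = -g_new + beta * d
        g = g_new                          # step 6: j <- j + 1
    return w


A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
print(cg_quadratic(A, b, [0.0, 0.0]))     # close to the exact minimum [1/11, 7/11]
```

For a non-quadratic $E$ the closed-form step is only a local approximation, and it still needs $H$.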

A closer look at $\alpha_j$

We don't have to compute $H$, only $Hd_j$.

Theorem:
$$Hd = \lim_{\varepsilon \to 0} \frac{g(w_0 + \varepsilon d) - g(w_0 - \varepsilon d)}{2\varepsilon}$$
where:
• $w_0$ = current $W$-dimensional weight vector,
• $g(w) = \nabla E|_w$ (gradient of $E$ at some vector $w$),
• $H$ = Hessian of $E$ evaluated at $w_0$, and
• $d$ = arbitrary $W$-dimensional vector.

Computing $Hd_j$

First-order Taylor expansion of $g(w)$ about $w_0$:
$$g(w) \approx g(w_0) + H(w - w_0)$$
Therefore:
$$\frac{g(w_0 + \varepsilon d) - g(w_0 - \varepsilon d)}{2\varepsilon} \approx \frac{[g(w_0) + H\varepsilon d] - [g(w_0) - H\varepsilon d]}{2\varepsilon} = \frac{2\varepsilon Hd}{2\varepsilon} = Hd$$
So:
$$Hd = \lim_{\varepsilon \to 0} \frac{g(w_0 + \varepsilon d) - g(w_0 - \varepsilon d)}{2\varepsilon}$$
and
$$\alpha_j = \frac{-d_j^T g_j}{d_j^T H d_j}$$
now just requires two gradient evaluations... Any problems?

New conjugate gradient algorithm

1. Choose an initial weight vector $w_1$ and let $d_1 = -g_1$.
2. Compute $\alpha_j$: $\alpha_j = -d_j^T g_j / d_j^T H d_j$.
3. Let $w_{j+1} = w_j + \alpha_j d_j$.
4. Evaluate $g_{j+1}$.
5. Let $d_{j+1} = -g_{j+1} + \beta_j d_j$, where $\beta_j = g_{j+1}^T (g_{j+1} - g_j) / g_j^T g_j$.
6. Let $j = j + 1$ and go to step 2.
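The theorem can be checked numerically. This sketch estimates $Hd$ with the central difference (two gradient evaluations) and compares it with the exact Hessian-vector product; the step size then only additionally needs $g_j$, which the algorithm has already computed. The function names, the test error `E(w) = w[0]**4 + w[0]*w[1] + w[1]**2`, and `eps = 1e-5` are hypothetical choices for illustration.

```python
import numpy as np


def hessian_vector_product(grad, w0, d, eps=1e-5):
    """Central-difference estimate of H d from two gradient evaluations."""
    return (grad(w0 + eps * d) - grad(w0 - eps * d)) / (2.0 * eps)


def cg_step_size(grad, w, d, eps=1e-5):
    """alpha_j = -d_j^T g_j / d_j^T H d_j without ever forming H."""
    g = grad(w)
    Hd = hessian_vector_product(grad, w, d, eps)
    return -(d @ g) / (d @ Hd)


# Hypothetical test error E(w) = w[0]**4 + w[0]*w[1] + w[1]**2 and its gradient.
def grad(w):
    return np.array([4.0 * w[0] ** 3 + w[1], w[0] + 2.0 * w[1]])


w = np.array([1.0, 2.0])
d = -grad(w)                                          # d_1 = -g_1 = [-6, -5]
H = np.array([[12.0 * w[0] ** 2, 1.0], [1.0, 2.0]])   # exact Hessian, for comparison only
print(hessian_vector_product(grad, w, d), H @ d)      # both approximately [-77, -16]
print(cg_step_size(grad, w, d))                       # approximately 61/542
```

In floating point, `eps` is a trade-off: too large and the Taylor truncation error grows, too small and round-off in the gradient difference dominates.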

What about $H < 0$?

$$\alpha_j = \frac{-d_j^T g_j}{d_j^T H d_j}$$
might take uphill steps...

Idea:
• Replace $H$ with $H + \lambda I$.
• So:
$$\alpha_j = \frac{-d_j^T g_j}{d_j^T H d_j + \lambda \|d_j\|^2}$$

What the #$@! is this?

Examining $\lambda$

$$\alpha_j = \frac{-d_j^T g_j}{d_j^T H d_j + \lambda \|d_j\|^2}$$
• What is the meaning of $\lambda$ being very large?
• What is the meaning of $\lambda$ being very small (i.e. zero)?

Model trust regions

Question: When should we “trust”
$$\alpha_j = \frac{-d_j^T g_j}{d_j^T H d_j}\ ?$$
1. $H$ is positive definite (denominator > 0).
2. The local quadratic assumption is good.
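A small numerical sketch of the $\lambda$ questions, under assumed toy values: an indefinite `H`, `g = [1, 1]`, and `d = -g`, chosen only so that $d_j^T H d_j < 0$. The helper `alpha_lambda` is a hypothetical name.

```python
import numpy as np


def alpha_lambda(g, d, Hd, lam):
    """Step size with H replaced by H + lam * I."""
    return -(d @ g) / (d @ Hd + lam * (d @ d))


# Hypothetical example: H is indefinite and d^T H d < 0 along d = -g.
H = np.array([[1.0, 0.0], [0.0, -2.0]])
g = np.array([1.0, 1.0])
d = -g
Hd = H @ d                           # d^T H d = 1 - 2 = -1

print(alpha_lambda(g, d, Hd, 0.0))   # -2.0: w + alpha*d = w + 2g, an uphill step
print(alpha_lambda(g, d, Hd, 3.0))   # 0.4: positive denominator, downhill step
print(alpha_lambda(g, d, Hd, 1e6))   # about 1e-6: alpha*d is about -g/lam
```

With $\lambda = 0$ the step is the original $\alpha_j$, which here moves uphill. As $\lambda$ grows, $\alpha_j \approx -d_j^T g_j / (\lambda \|d_j\|^2)$, so for $d_j = -g_j$ the update $\alpha_j d_j \approx -g_j/\lambda$ is a small gradient-descent step with learning rate $1/\lambda$.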

Near a mountain, not a valley

How to increase $\lambda$?

Look at the denominator of
$$\alpha_j = \frac{-d_j^T g_j}{d_j^T H d_j + \lambda \|d_j\|^2}$$
so that:
$$\delta = d_j^T H d_j + \lambda \|d_j\|^2$$
If $\delta < 0$, increase $\lambda$ to make the denominator positive. How about:
$$\lambda' = 2\left(\lambda - \frac{\delta}{\|d_j\|^2}\right)$$
New effective denominator value:
$$\delta' = \delta + (\lambda' - \lambda)\|d_j\|^2 = \delta + \left(2\lambda - \frac{2\delta}{\|d_j\|^2} - \lambda\right)\|d_j\|^2 = \delta - 2\delta + \lambda\|d_j\|^2 = -\delta + \lambda\|d_j\|^2$$

Goin’ up? I’ll show you...

Since the new denominator is
$$\delta' = -\delta + \lambda\|d_j\|^2 = -(d_j^T H d_j + \lambda\|d_j\|^2) + \lambda\|d_j\|^2 = -d_j^T H d_j,$$
the new value of $\alpha_j$ is
$$\alpha_j' = \frac{-d_j^T g_j}{-d_j^T H d_j}.$$
So, with $\alpha_j = -d_j^T g_j / d_j^T H d_j$ the unregularized step, $\alpha_j' = -\alpha_j$, and $\alpha_j' > 0$ when $H < 0$ (what does this mean?).
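The $\lambda$-raising rule can be checked with made-up numbers: $d_j^T H d_j = -1$, $\|d_j\|^2 = 2$, $d_j^T g_j = -2$, and a starting $\lambda = 0.25$ (all assumed for illustration). The helper name `make_denominator_positive` is hypothetical.

```python
def make_denominator_positive(dHd, dd, lam):
    """If delta = d^T H d + lam*|d|^2 < 0, set lam' = 2(lam - delta/|d|^2)."""
    delta = dHd + lam * dd
    if delta < 0:
        lam = 2.0 * (lam - delta / dd)
        delta = dHd + lam * dd             # equals -d^T H d (the delta' derived above)
    return lam, delta


# Hypothetical numbers: d^T H d = -1, |d|^2 = 2, d^T g = -2.
dHd, dd, dg = -1.0, 2.0, -2.0
lam, delta = make_denominator_positive(dHd, dd, lam=0.25)
alpha_plain = -dg / dHd                    # unregularized step: -2.0 (uphill)
alpha_new = -dg / delta                    # step after raising lambda
print(lam, delta, alpha_new, -alpha_plain)   # 1.0 1.0 2.0 2.0
```

The output matches the derivation: $\delta' = -d_j^T H d_j = 1$ and $\alpha_j' = -\alpha_j = 2$.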

Model trust regions

How to test the local quadratic assumption? Check:
$$\Delta = \frac{E(w_j) - E(w_j + \alpha_j d_j)}{E(w_j) - E_Q(w_j + \alpha_j d_j)}$$
What’s $E_Q$?
$$E_Q(w) = E(w_0) + (w - w_0)^T b + \frac{1}{2}(w - w_0)^T H (w - w_0)$$
where $b$ is the gradient of $E$ at $w_0$ (so $b = g_j$ when $w_0 = w_j$). So:
$$E_Q(w_j + \alpha_j d_j) = E(w_j) + \alpha_j d_j^T g_j + \frac{1}{2}\alpha_j^2 d_j^T H d_j$$
What does $\Delta$ tell us?

Local quadratic test

Adjustment of the trust region, with $\Delta$ as defined above:
• If $\Delta > 0.75$, then decrease $\lambda$ (e.g. $\lambda = \lambda/2$).
• If $\Delta < 0.25$, then increase $\lambda$ (e.g. $\lambda = 4\lambda$).
• Otherwise, leave $\lambda$ unchanged.

Scaled conjugate gradient algorithm

1. Compute $\delta = d_j^T H d_j + \lambda \|d_j\|^2$.
2. If $\delta < 0$, set $\lambda = 2(\lambda - \delta/\|d_j\|^2)$.
3. Compute $\alpha_j = -d_j^T g_j / (d_j^T H d_j + \lambda \|d_j\|^2)$.
4. Compute $\Delta$:
5. $\Delta = \dfrac{E(w_j) - E(w_j + \alpha_j d_j)}{E(w_j) - E_Q(w_j + \alpha_j d_j)}$
6. If $\Delta > 0.75$, set $\lambda = \lambda/2$, else if $\Delta < 0.25$, set $\lambda = 4\lambda$.
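Putting the pieces together, here is a sketch of a full scaled conjugate gradient loop in Python/NumPy: $Hd_j$ from the central difference, the $\lambda$ steps 1 to 6 above, and the Polak-Ribière direction update. Two safeguards are assumptions not stated on the slides (rejecting steps with $\Delta \le 0$, and resetting $d_j$ to $-g_j$ when it is not a descent direction), and the defaults for `lam`, `eps`, `max_iters`, and `tol` are arbitrary illustrative values. The name `scg` and the quadratic test problem are hypothetical.

```python
import numpy as np


def scg(E, grad, w1, lam=1e-3, eps=1e-6, max_iters=500, tol=1e-6):
    """Scaled conjugate gradient sketch following the slides' steps.

    Assumptions beyond the slides: steps with Delta <= 0 are rejected, and
    the direction is reset to -g if it stops being a descent direction.
    """
    w = np.asarray(w1, dtype=float)
    g = grad(w)
    d = -g                                       # d_1 = -g_1
    for _ in range(max_iters):
        if np.linalg.norm(g) < tol:
            break
        if d @ g >= 0:                           # assumption: restart with steepest descent
            d = -g
        # H d_j from two gradient evaluations (central difference); H is never formed
        Hd = (grad(w + eps * d) - grad(w - eps * d)) / (2.0 * eps)
        dHd, dd, dg = d @ Hd, d @ d, d @ g
        delta = dHd + lam * dd                   # step 1
        if delta <= 0:                           # step 2 (<= also avoids dividing by 0)
            lam = 2.0 * (lam - delta / dd)
            delta = dHd + lam * dd               # now equals -d^T H d > 0
        alpha = -dg / delta                      # step 3
        w_new = w + alpha * d
        predicted = -(alpha * dg + 0.5 * alpha**2 * dHd)   # E(w) - E_Q(w + alpha d)
        Delta = (E(w) - E(w_new)) / predicted    # steps 4-5: comparison ratio
        if Delta > 0.75:                         # step 6: adjust the trust region
            lam = lam / 2.0
        elif Delta < 0.25:
            lam = 4.0 * lam
        if Delta <= 0:                           # assumption: reject steps that raise E
            continue
        g_new = grad(w_new)
        beta = g_new @ (g_new - g) / (g @ g)     # Polak-Ribiere
        d = -g_new + beta * d
        w, g = w_new, g_new
    return w


# Hypothetical test problem: a convex quadratic with known minimum [1/11, 7/11].
A = np.array([[4.0, 1.0], [1.0, 3.0]])
b = np.array([1.0, 2.0])
w_min = scg(lambda w: 0.5 * w @ A @ w - b @ w, lambda w: A @ w - b, [0.0, 0.0])
print(w_min)   # close to [0.0909, 0.6364]
```

In this sketch each accepted step costs three gradient evaluations (two for $Hd_j$, one for $g_{j+1}$) and two error evaluations, with no line search; on a quadratic, $\Delta \approx 1$, so $\lambda$ keeps shrinking and the steps approach the exact CG steps.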

Scaled conjugate gradient algorithm

1. Choose an initial weight vector $w_1$ and let $d_1 = -g_1$.
2. Compute $\alpha_j$:
$$\alpha_j = \frac{-d_j^T g_j}{d_j^T H d_j + \lambda \|d_j\|^2}$$
3. Let $w_{j+1} = w_j + \alpha_j d_j$.
4. Evaluate $g_{j+1}$.
5. Let $d_{j+1} = -g_{j+1} + \beta_j d_j$, where $\beta_j = g_{j+1}^T (g_{j+1} - g_j) / g_j^T g_j$.
6. Let $j = j + 1$ and go to step 2.

Adaptive architectures

Standard learning:
• Select neural network architecture.
• Train neural network.
• If failure, go back to first step.

Better approach:
• Adapt neural network architecture as a function of training.

Adaptive architectures

[Figure: diagrams of standard learning and of the adaptive approach, each labeled “training”]
