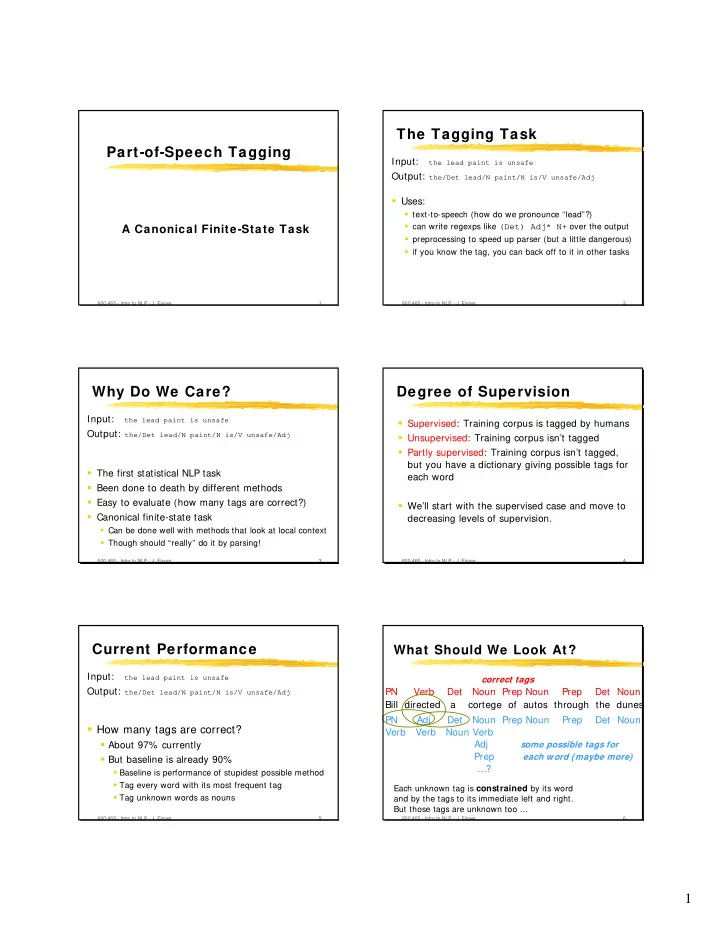

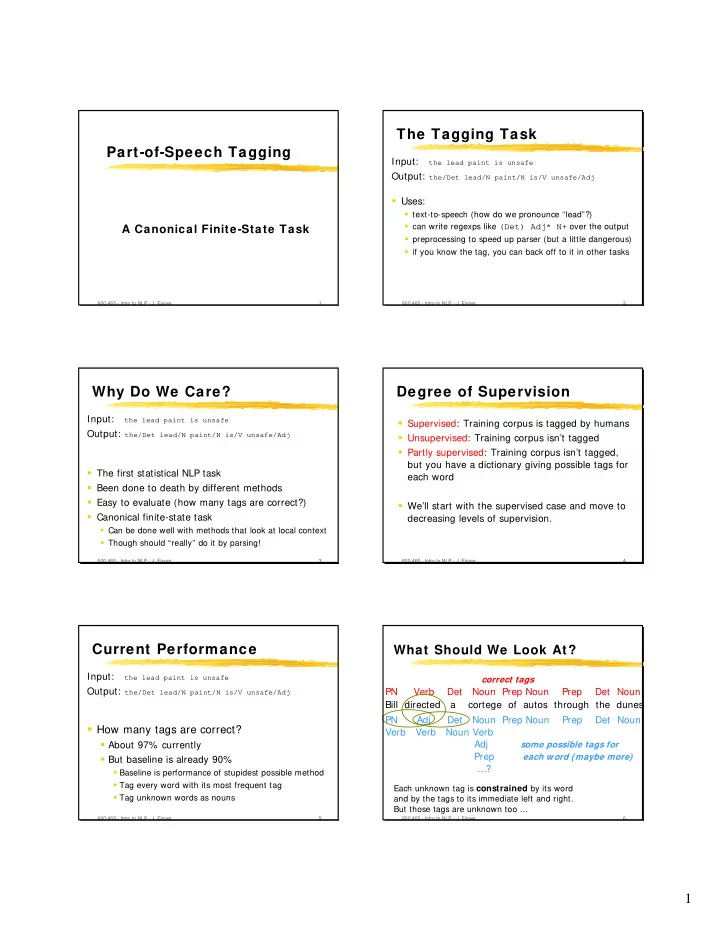

Part-of-Speech Tagging: A Canonical Finite-State Task

600.465 - Intro to NLP - J. Eisner

The Tagging Task

Input: the lead paint is unsafe

Output: the/Det lead/N paint/N is/V unsafe/Adj

Uses:
- text-to-speech (how do we pronounce “lead”?)
- can write regexps like (Det) Adj* N+ over the output
- preprocessing to speed up parser (but a little dangerous)
- if you know the tag, you can back off to it in other tasks

Why Do We Care?

- The first statistical NLP task
- Been done to death by different methods
- Easy to evaluate (how many tags are correct?)
- Canonical finite-state task
- Can be done well with methods that look at local context
- Though should “really” do it by parsing!

Degree of Supervision

- Supervised: Training corpus is tagged by humans
- Unsupervised: Training corpus isn’t tagged
- Partly supervised: Training corpus isn’t tagged, but you have a dictionary giving possible tags for each word

We’ll start with the supervised case and move to decreasing levels of supervision.

Current Performance

- How many tags are correct?
  - About 97% currently
  - But baseline is already 90%
- Baseline is performance of stupidest possible method
  - Tag every word with its most frequent tag
  - Tag unknown words as nouns

What Should We Look At?

Correct tags: Bill/PN directed/Verb a/Det cortege/Noun of/Prep autos/Noun through/Prep the/Det dunes/Noun

[Figure: each word with a column of some possible tags beneath it (maybe more). First row: PN Adj Det Noun Prep Noun Prep Det Noun. Further candidates below: Verb, Verb, Noun, Verb, Adj, Prep, …?]

Each unknown tag is constrained by its word and by the tags to its immediate left and right. But those tags are unknown too …
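The baseline described under Current Performance (tag every word with its most frequent tag, tag unknown words as nouns) can be sketched in a few lines. This is a minimal illustration only: the toy corpus and the function names `train_baseline` and `tag_baseline` are made up for the example and do not come from the course.

```python
from collections import Counter, defaultdict


def train_baseline(tagged_sentences):
    """Map each word to the tag it carries most often in the training data."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tag_counts.most_common(1)[0][0]
            for word, tag_counts in counts.items()}


def tag_baseline(words, most_frequent_tag, unknown_tag="N"):
    """Tag known words with their most frequent tag, unknown words as nouns."""
    return [(w, most_frequent_tag.get(w.lower(), unknown_tag)) for w in words]


# Hypothetical toy training corpus (not real data).
toy_corpus = [
    [("the", "Det"), ("lead", "N"), ("paint", "N"), ("is", "V"), ("unsafe", "Adj")],
    [("they", "PN"), ("lead", "V"), ("the", "Det"), ("team", "N")],
    [("the", "Det"), ("lead", "N"), ("is", "V"), ("heavy", "Adj")],
]

model = train_baseline(toy_corpus)
tagged = tag_baseline("the lead paint is toxic".split(), model)
print(" ".join(f"{w}/{t}" for w, t in tagged))
```

Here "lead" is tagged N because N outnumbers V in the toy corpus, and the unseen word "toxic" falls back to N. The baseline never looks at neighboring tags, which is exactly the local context the finite-state approaches add.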

Three Finite-State Approaches

1. Noisy Channel Model (statistical)
2. Deterministic baseline tagger composed with a cascade of fixup transducers
3. Nondeterministic tagger composed with a cascade of finite-state automata that act as filters

Noisy Channel Model (statistical):
- real language X: part-of-speech tags (n-gram model)
- noisy channel X → Y: insert terminals
- yucky language Y: text
- want to recover X from Y

Review: Noisy Channel

- real language X, with distribution p(X)
- noisy channel X → Y, with distribution p(Y | X)
- yucky language Y
- p(X) * p(Y | X) = p(X,Y)
- want to recover x ∈ X from y ∈ Y
- choose x that maximizes p(x | y) or equivalently p(x,y)

As weighted finite-state machines:
- p(X): arcs a:a/0.7, b:b/0.3
- .o. p(Y | X): arcs a:C/0.1, a:D/0.9, b:C/0.8, b:D/0.2
- = p(X,Y): arcs a:C/0.07, a:D/0.63, b:C/0.24, b:D/0.06

Note p(x,y) sums to 1.

Suppose y = “C”; what is best “x”?
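The toy noisy channel from the review (p(a) = 0.7, p(b) = 0.3, and a channel with p(C | a) = 0.1, p(D | a) = 0.9, p(C | b) = 0.8, p(D | b) = 0.2) is small enough to check by hand or in code. The sketch below uses plain dictionaries in place of weighted finite-state machines; the function names `joint` and `decode` are illustrative choices, not part of any FST toolkit.

```python
# Source model p(X) and channel model p(Y | X), as given in the toy example.
p_x = {"a": 0.7, "b": 0.3}
p_y_given_x = {
    "a": {"C": 0.1, "D": 0.9},
    "b": {"C": 0.8, "D": 0.2},
}


def joint(p_x, p_y_given_x):
    """Build p(x, y) = p(x) * p(y | x), the analogue of composing the two machines."""
    return {(x, y): p_x[x] * p
            for x, row in p_y_given_x.items()
            for y, p in row.items()}


def decode(y, p_xy):
    """Keep only pairs compatible with the observed y, then pick the best x."""
    candidates = {x: p for (x, seen), p in p_xy.items() if seen == y}
    return max(candidates, key=candidates.get)


p_xy = joint(p_x, p_y_given_x)
best_x = decode("C", p_xy)
print(p_xy)
print(best_x)
```

Because p(x | y) = p(x, y) / p(y) and p(y) does not depend on x, ranking candidates by the joint probability gives the same winner as ranking by the conditional.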

Review: Noisy Channel

Suppose y = “C”; what is best “x”?

- p(X): arcs a:a/0.7, b:b/0.3
- .o. p(Y | X): arcs a:C/0.1, a:D/0.9, b:C/0.8, b:D/0.2
- .o. p(y | Y): arc C:C/1 (restrict just to paths compatible with output “C”)
- = p(X, y): arcs a:C/0.07, b:C/0.24; the best path is b:C/0.24

Noisy Channel for Tagging

- p(X): automaton: p(tag sequence), the “Markov Model”
- .o. p(Y | X): transducer: tags → words, “Unigram Replacement”
- .o. p(y | Y): automaton: the observed words, a “straight line”
- = p(X, y): transducer: scores candidate tag seqs on their joint probability with obs words; pick best path

Markov Model (bigrams)

[Figure: state diagram with states Start, Det, Adj, Noun, Verb, Prep, Stop. Arc weights are filled in over successive slides: 0.8, 0.3, 0.7, 0.4, 0.5, 0.2, 0.1.]
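A bigram Markov model scores a tag sequence as a product of one transition probability per step, including the steps out of Start and into Stop. Written out, with $t_1 \dots t_n$ as notation introduced here for the tag sequence (the slides do not name the tags this way):

$$
p(t_1 \dots t_n) = p(t_1 \mid \text{Start}) \cdot \left[ \prod_{i=2}^{n} p(t_i \mid t_{i-1}) \right] \cdot p(\text{Stop} \mid t_n)
$$

Each factor corresponds to one arc of the state diagram, so the probability of a tag sequence is the product of the weights along its path from Start to Stop.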

Markov Model

p(tag seq)

Start Det Adj Adj Noun Stop = 0.8 * 0.3 * 0.4 * 0.5 * 0.2

Markov Model as an FSA

[Figure: the same machine with each arc labeled by a tag and a weight: Det 0.8, Adj 0.3, Noun 0.7, Adj 0.4, Noun 0.5, ε 0.2, ε 0.1.]

Markov Model (tag bigrams)

[Figure: the FSA above, restricted to the states Start, Det, Adj, Noun, Stop.]

Noisy Channel for Tagging

- p(X): automaton: p(tag sequence), the “Markov Model” FSA above
- .o. p(Y | X): transducer: tags → words, “Unigram Replacement”, with arcs …, Noun:cortege/0.000001, Noun:autos/0.001, Noun:Bill/0.002, Det:a/0.6, Det:the/0.4, Adj:cool/0.003, Adj:directed/0.0005, Adj:cortege/0.000001, …
- .o. p(y | Y): automaton: the observed words, a “straight line”: the cool directed autos
- = p(X, y): transducer: scores candidate tag seqs on their joint probability with obs words; we should pick best path
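The worked product for Start Det Adj Adj Noun Stop can be reproduced by walking the path and multiplying arc weights. The sketch below includes only the five transitions that the example path uses (read off the product 0.8 * 0.3 * 0.4 * 0.5 * 0.2); the other arcs of the diagram are left out, and the function name `tag_sequence_probability` is an illustrative choice.

```python
# Transition weights used by the example path; other arcs are omitted.
TRANSITIONS = {
    ("Start", "Det"): 0.8,
    ("Det", "Adj"): 0.3,
    ("Adj", "Adj"): 0.4,
    ("Adj", "Noun"): 0.5,
    ("Noun", "Stop"): 0.2,
}


def tag_sequence_probability(tags, transitions=TRANSITIONS):
    """Multiply bigram transition weights along Start -> tags -> Stop."""
    path = ["Start", *tags, "Stop"]
    prob = 1.0
    for prev, cur in zip(path, path[1:]):
        # A transition missing from the table is treated as probability 0.
        prob *= transitions.get((prev, cur), 0.0)
    return prob


p = tag_sequence_probability(["Det", "Adj", "Adj", "Noun"])
print(p)
```

The result is 0.8 * 0.3 * 0.4 * 0.5 * 0.2 = 0.0096, up to floating-point rounding. In practice these products are usually accumulated as sums of log probabilities to avoid underflow on long sentences.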

Unigram Replacement Model

p(word seq | tag seq)

Arcs: …, Noun:cortege/0.000001, Noun:autos/0.001, Noun:Bill/0.002, Det:a/0.6, Det:the/0.4, Adj:cool/0.003, Adj:directed/0.0005, Adj:cortege/0.000001, …

For each tag, the probabilities on its arcs sum to 1 (for example, Det:a/0.6 and Det:the/0.4).

Compose

p(tag seq) .o. p(word seq | tag seq)

p(word seq, tag seq) = p(tag seq) * p(word seq | tag seq)

Arcs of the composed machine, grouped by the tag transition they come from:
- Start to Det (0.8): Det:a 0.48, Det:the 0.32
- Det to Adj (0.3): Adj:cool 0.0009, Adj:directed 0.00015, Adj:cortege 0.0000003
- Adj to Adj (0.4): Adj:cool 0.0012, Adj:directed 0.00020, Adj:cortege 0.0000004
- into Noun: N:cortege, N:autos
- into Stop: ε

Observed Words as Straight-Line FSA

word seq: the cool directed autos

Compose with the cool directed autos

p(word seq, tag seq) = p(tag seq) * p(word seq | tag seq)

[Figure: after composing with the straight-line FSA, only the arcs compatible with the observed words remain: Det:the 0.32, Adj:cool 0.0009, Adj:directed 0.00020, N:autos, ε, passing through the states Start, Det, Adj, Adj, Noun, Stop.]

Why did this loop go away?
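The whole pipeline (tag bigram model, unigram replacement, observed words, best path) can be sketched without an FST library by running the Viterbi algorithm over the toy weights. This is an illustration under stated assumptions, not the course's implementation: the tables contain only the arcs listed in the slides, the arcs Det to Noun (0.7) and Adj to Stop (0.1) are one reading of the state diagram, the elided "…" entries are not filled in, and anything missing is treated as probability 0.

```python
# Tag bigram weights. ("Det", "Noun") and ("Adj", "Stop") are an assumed
# reading of the diagram; the rest follow from the worked path product.
TRANS = {
    ("Start", "Det"): 0.8,
    ("Det", "Adj"): 0.3,
    ("Det", "Noun"): 0.7,
    ("Adj", "Adj"): 0.4,
    ("Adj", "Noun"): 0.5,
    ("Adj", "Stop"): 0.1,
    ("Noun", "Stop"): 0.2,
}

# Unigram replacement weights p(word | tag), only the arcs shown in the slides.
EMIT = {
    ("Det", "a"): 0.6, ("Det", "the"): 0.4,
    ("Adj", "cool"): 0.003, ("Adj", "directed"): 0.0005,
    ("Adj", "cortege"): 0.000001,
    ("Noun", "cortege"): 0.000001, ("Noun", "autos"): 0.001,
    ("Noun", "Bill"): 0.002,
}


def viterbi(words):
    """Return (joint probability, tag list) of the best tag path for words."""
    tags = sorted({tag for tag, _ in EMIT})
    # best[tag] = (probability of the best path ending in tag, that path)
    best = {"Start": (1.0, [])}
    for word in words:
        nxt = {}
        for tag in tags:
            emit = EMIT.get((tag, word), 0.0)
            if emit == 0.0:
                continue  # this tag cannot produce the observed word
            for prev, (prob, path) in best.items():
                score = prob * TRANS.get((prev, tag), 0.0) * emit
                if score > nxt.get(tag, (0.0, None))[0]:
                    nxt[tag] = (score, path + [tag])
        best = nxt
    finals = [(prob * TRANS.get((prev, "Stop"), 0.0), path)
              for prev, (prob, path) in best.items()]
    return max(finals, default=(0.0, []), key=lambda item: item[0])


best_prob, best_tags = viterbi("the cool directed autos".split())
print(best_tags, best_prob)
```

Each position in the sentence gets its own layer of candidate states, just as the straight-line FSA has one state per word boundary. With these toy tables the only surviving path is Det Adj Adj Noun, and its score is the product 0.32 * 0.0009 * 0.0002 * 0.0005 * 0.2 of the composed arc weights.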
