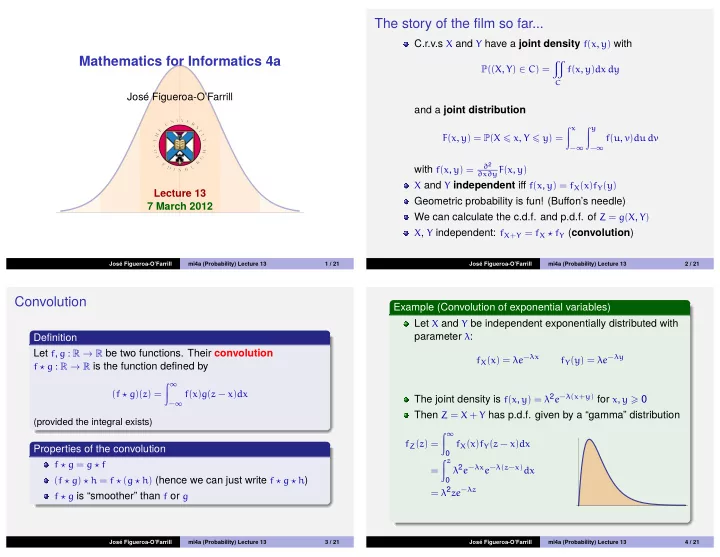

The story of the film so far... C.r.v.s X and Y have a joint density f ( x , y ) with Mathematics for Informatics 4a � P (( X , Y ) ∈ C ) = f ( x , y ) dx dy C Jos´ e Figueroa-O’Farrill and a joint distribution � x � y F ( x , y ) = P ( X � x , Y � y ) = f ( u , v ) du dv − ∞ − ∞ ∂ 2 with f ( x , y ) = ∂x∂y F ( x , y ) X and Y independent iff f ( x , y ) = f X ( x ) f Y ( y ) Lecture 13 Geometric probability is fun! (Buffon’s needle) 7 March 2012 We can calculate the c.d.f. and p.d.f. of Z = g ( X , Y ) X , Y independent: f X + Y = f X ⋆ f Y ( convolution ) Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 1 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 2 / 21 Convolution Example (Convolution of exponential variables) Let X and Y be independent exponentially distributed with parameter λ : Definition Let f , g : R → R be two functions. Their convolution f X ( x ) = λe − λx f Y ( y ) = λe − λy f ⋆ g : R → R is the function defined by � ∞ ( f ⋆ g )( z ) = f ( x ) g ( z − x ) dx The joint density is f ( x , y ) = λ 2 e − λ ( x + y ) for x , y � 0 − ∞ Then Z = X + Y has p.d.f. given by a “gamma” distribution (provided the integral exists) � ∞ f Z ( z ) = f X ( x ) f Y ( z − x ) dx Properties of the convolution 0 � z f ⋆ g = g ⋆ f λ 2 e − λx e − λ ( z − x ) dx = ( f ⋆ g ) ⋆ h = f ⋆ ( g ⋆ h ) (hence we can just write f ⋆ g ⋆ h ) 0 = λ 2 ze − λz f ⋆ g is “smoother” than f or g Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 3 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 4 / 21

Example (Independent standard normal random variables) Expectations of functions of random variables X , Y : independent, standard normally distributed. Their Let X and Y be c.r.v.s with joint density f ( x , y ) sum Z = X + Y has p.d.f. Let Z = g ( X , Y ) for some g : R 2 → R � ∞ 1 The expectation value of Z is defined by 2 πe − x 2 / 2 e −( z − x ) 2 / 2 dx f Z ( z ) = − ∞ � = e − z 2 / 4 � ∞ E ( Z ) = g ( x , y ) f ( x , y ) dx dy e −( x − z/ 2 ) 2 dx ( complete the square ) 2 π − ∞ (provided the integral exists) � ∞ = e − z 2 / 4 e − u 2 du ( u = x − 1 2 z ) We already saw that 2 π − ∞ 1 E ( X + Y ) = E ( X ) + E ( Y ) 2 √ πe − z 2 / 4 = even if X and Y are not independent so it is normally distributed with zero mean and variance 2. More generally, if X has mean µ X and variance σ 2 X and Y has mean µ Y and variance σ 2 Y , Z is normally distributed with mean µ X + µ Y and variance σ 2 X + σ 2 Y Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 5 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 6 / 21 Example (Normally distributed darts) Example (Normally distributed darts — continued) A dart hits a plane target at the point with coordinates ( X , Y ) What is E ( R 2 ) ? where X and Y have joint density E ( R 2 ) = E ( X 2 + Y 2 ) = E ( X 2 ) + E ( Y 2 ) = 1 + 1 = 2 f ( x , y ) = 1 2 πe −( x 2 + y 2 ) / 2 where we used linearity of E , and � X 2 + Y 2 be the distance from the bullseye. What is Let R = the fact that E ( X 2 ) = Var ( X ) = 1 and similarly for Y E ( R ) ? This shows that � 1 2 πre − r 2 / 2 rdr dθ E ( R ) = Var ( R ) = E ( R 2 ) − E ( R ) 2 = 2 − π 2 . � ∞ r 2 e − r 2 / 2 dr = 0 � ∞ r 2 e − r 2 / 2 dr = 1 2 − ∞ � ∞ 1 � � r 2 e − r 2 / 2 dr = π π = √ 2 2 2 π − ∞ Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 7 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 8 / 21

Independent random variables I Independent random variables II Theorem As with discrete random variables, we have the following Let X , Y be independent continuous random variables. Then Corollary Let X , Y be independent continuous random variables. Then E ( XY ) = E ( X ) E ( Y ) Var ( X + Y ) = Var ( X ) + Var ( Y ) Proof. Definition � E ( XY ) = xyf ( x , y ) dx dy The covariance and correlation of X and Y are � = xyf X ( x ) f Y ( y ) dx dy (independence) Cov ( X , Y ) = E ( XY ) − E ( X ) E ( Y ) � � � � � � = xf X ( x ) dx yf Y ( y ) dy Cov ( X , Y ) ρ ( X , Y ) = � Var ( X ) Var ( Y ) = E ( X ) E ( Y ) Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 9 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 10 / 21 Example Example (Continued) Consider X , Y uniformly distributed on the unit disk D , so that On the other hand, U = | X | and V = | Y | are correlated. f ( x , y ) = 1 | x | 1 y π � E ( U ) = πdx dy Then by symmetric integration, D � π � 1 2 r 2 cos θdr dθ = 2 x E ( XY ) = E ( X ) = E ( Y ) = 0 Cov ( X , Y ) = 0 ⇒ π = − π 0 2 � π � 1 2 Therefore X , Y are uncorrelated but not independent. = 2 r 2 dr cos θdθ π − π 0 2 = 2 π × 2 × 1 3 4 = 3 π 4 And by symmetry, also E ( V ) = 3 π . Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 11 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 12 / 21

Moment generating function of a sum Example (Continued) Finally, Let X , Y be independent continuous random variables and let Z = X + Y . Then | xy | 1 y � E ( UV ) = πdx dy M Z ( t ) = E ( e tZ ) D � � π � 1 e tz f Z ( z ) dz 2 = r 3 sin θ cos θdr dθ = 4 x π 0 0 � � � π � 1 e tz = f X ( x ) f Y ( z − x ) dx dz 2 = 4 r 3 dr sin θ cos θdθ π 0 0 � e t ( z − x ) e tx f X ( x ) f Y ( z − x ) dx dz = = 4 π × 1 2 × 1 1 4 = 2 π � � e tx f X ( x ) dx e ty f Y ( y ) dy = ( y = z − x ) Hence = M X ( t ) M Y ( t ) 2 π − 16 1 9 π 2 = 9 π − 32 E ( UV ) − E ( U ) E ( V ) = 18 π 2 < 0 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 13 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 14 / 21 Markov’s inequality Chebyshev’s inequality Theorem (Markov’s inequality) Theorem (Chebyshev’s inequality) Let X be a c.r.v. Then for all ε > 0 Let X be a c.r.v. with finite mean and variance. Then P ( | X | � ε ) � E ( | X | ) P ( | X | � ε ) � E ( X 2 ) . for all ε > 0 ε ε 2 Proof. Proof. � ∞ � ∞ E ( X 2 ) = x 2 f ( x ) dx E ( | X | ) = | x | f ( x ) dx − ∞ − ∞ � − ε � ε � ∞ � − ε � ε � ∞ x 2 f ( x ) dx + x 2 f ( x ) dx + x 2 f ( x ) dx = | x | f ( x ) dx + | x | f ( x ) dx + | x | f ( x ) dx = − ∞ − ε ε − ∞ − ε ε � − ε � ∞ � − ε � ∞ � ε 2 f ( x ) dx + ε 2 f ( x ) dx = ε 2 P ( | X | � ε ) � ε f ( x ) dx + ε f ( x ) dx = ε P ( | X | � ε ) − ∞ ε − ∞ ε Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 15 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 16 / 21

Two corollaries of Chebyshev’s inequality The Chernoff bound Corollary Corollary Let X be a c.r.v. with moment generating function M X ( t ) . Then Let X be a c.r.v. with mean µ and variance σ 2 . Then for any for any t > 0 , ε > 0 , P ( X � α ) � e − tα M X ( t ) P ( | X − µ | � ε ) � σ 2 ε 2 Proof. Corollary (The (weak) law of large numbers) 2 ) = P ( e tX/ 2 � e tα/ 2 ) P ( X � α ) = P ( tX 2 � tα Let X 1 , X 2 , . . . be i.i.d. continuous random variables with mean µ and variance σ 2 and let Z n = 1 and by Chebyshev’s inequality for e tX/ 2 , n ( X 1 + · · · + X n ) . Then P ( e tX/ 2 � e tα/ 2 ) � E ( e tX ) ∀ ε > 0 P ( | Z n − µ | < ε ) → 1 as n → ∞ = e − tα M X ( t ) . e tα Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 17 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 18 / 21 Waiting times and the exponential distribution Example (Radioactivity) If “rare” and “isolated” events can occur at random in the time The number of radioactive decays in [ 0, t ] is approximated by a interval [ 0, t ] , then the number of events N ( t ) in that time Poisson distribution, so decay times are exponentially interval can be approximated by a Poisson distribution distributed. The time t 1 / 2 in which one half of the particles have decayed is called the half-life . It is a sensible concept because P ( N ( t ) = n ) = e − λt ( λt ) n of the “lack of memory” of the exponential distribution. . n ! How are the half-life and the parameter in the exponential distribution related? By definition, P ( X � t 1 / 2 ) = 1 2 , whence Let us start at t = 0 and let X be the time of the first event; that ⇒ λ = log 2 is, the waiting time . Clearly, X > t if and only if N ( t ) = 0, e − λt 1 / 2 = 1 2 = whence t 1 / 2 P ( X > t ) = P ( N ( t ) = 0 ) = e − λt = ⇒ P ( X � t ) = 1 − e − λt The mean of the exponential distribution: 1 λ = t 1 / 2 / log 2 is called the mean lifetime . and differentiating, e.g., t 1 / 2 ( 235 U ) ≈ 700 × 10 6 yrs; t 1 / 2 ( 14 C ) = 5, 730 yrs; f X ( t ) = λe − λt t 1 / 2 ( 137 Cs ) ≈ 30 yrs whence X is exponentially distributed. Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 19 / 21 Jos´ e Figueroa-O’Farrill mi4a (Probability) Lecture 13 20 / 21

Recommend

More recommend