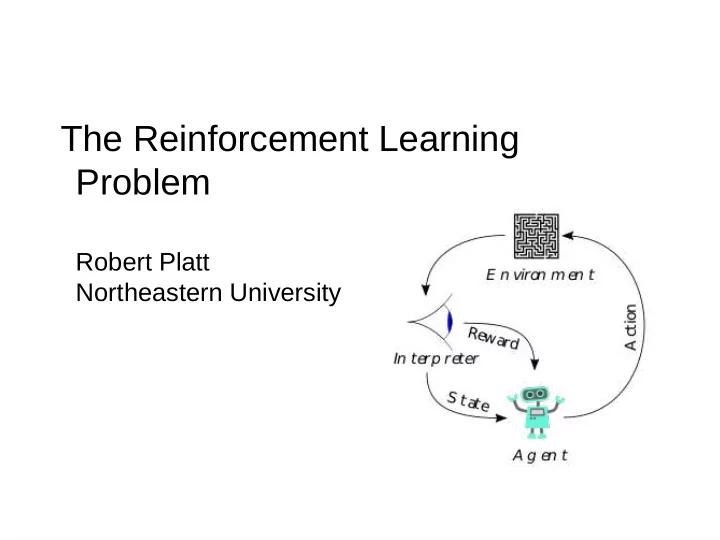

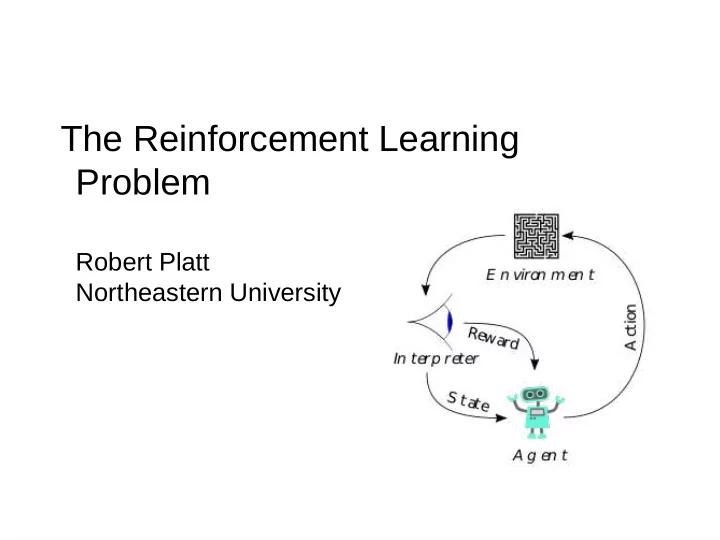

The Reinforcement Learning Problem

Robert Platt, Northeastern University

[Diagram: the agent sends an action to the world; the world returns an observation and a reward to the agent.]

On a single time step, the agent does the following:

1. observe some information
2. select an action to execute
3. take note of any reward

Goal of the agent: select actions that maximize the sum of expected future rewards.
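The three steps above can be sketched as a loop. This is only an illustration under assumed names: `CorridorWorld`, `run_episode`, and `always_right` are hypothetical and do not come from the slides, and the world is a toy one-dimensional corridor with cheese at the far end.

```python
class CorridorWorld:
    """Hypothetical toy world: positions 0..length-1, cheese at the last one."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def observe(self):
        # The observation is simply the current position.
        return self.position

    def step(self, action):
        # action is -1 (left) or +1 (right); the position is clipped to the corridor.
        self.position = min(max(self.position + action, 0), self.length - 1)
        # Reward is 1 whenever the agent is on the cheese square after moving.
        return 1 if self.position == self.length - 1 else 0


def run_episode(world, select_action, num_steps):
    """Run the observe / act / note-reward loop and return the summed reward."""
    total_reward = 0
    for _ in range(num_steps):
        observation = world.observe()        # 1. observe some information
        action = select_action(observation)  # 2. select an action to execute
        reward = world.step(action)          # 3. take note of any reward
        total_reward += reward
    return total_reward


def always_right(observation):
    # A fixed, hand-written rule for selecting actions (no learning here).
    return +1


if __name__ == "__main__":
    print(run_episode(CorridorWorld(), always_right, num_steps=4))
```

Here the rule for selecting actions is fixed by hand. Reinforcement learning is about finding a good rule automatically.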

Example: rat in a maze

– Action: move left/right/up/down
– Observation: position in maze
– Reward: +1 if the rat gets the cheese

Example: robot makes coffee

– Action: move robot joints
– Observation: camera image
– Reward: +1 if coffee is in the cup

Example: agent plays Pong

– Action: joystick command
– Observation: screen pixels
– Reward: game score

Reinforcement Learning

[Diagram: agent and world exchanging action, observation, and reward.]

Goal of the agent: select actions that maximize the sum of expected future rewards.

– the agent computes a rule for selecting actions to execute
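One way to write this goal down is sketched below. The notation is an assumption and does not appear in the slides: $\pi$ stands for the rule for selecting actions, $r_t$ for the reward received at time step $t$, and $T$ for a finite horizon.

$$
\pi^{*} = \arg\max_{\pi} \; \mathbb{E}\left[\, \sum_{t=0}^{T} r_t \;\middle|\; \pi \right]
$$

By linearity of expectation, the expected sum equals the sum of the expected rewards, which matches the wording of the goal.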

Reinforcement Learning

– Action: joystick command
– Observation: screen pixels
– Reward: game score

Goal of the agent: select actions that maximize the sum of expected future rewards.

– the agent computes a rule for selecting actions to execute

Model-Free Reinforcement Learning

Pong setup: action = joystick command, observation = screen pixels, reward = game score.

The agent learns a strategy for selecting actions based on experience:

– no prior model of system dynamics, i.e. no prior knowledge of “how the world works”
– no prior model of reward, i.e. no prior knowledge of which actions lead to reward
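To make "learning from experience" concrete, here is a minimal sketch using tabular Q-learning. Q-learning is one common model-free method, but the slides do not name it. The corridor world, the function names, the discount factor `gamma`, and the learning rate `alpha` are all assumptions made for illustration. The learner only calls `world_step` and sees its outputs; it never reads the rules inside it.

```python
import random

N_STATES = 5        # hypothetical corridor: positions 0..4, cheese at 4
ACTIONS = (-1, +1)  # move left, move right


def world_step(state, action):
    """The world. The learner calls this but never inspects how it works."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def learn_from_experience(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning with purely random exploration (assumed settings)."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(1000):  # step cap so an episode always ends
            action = rng.choice(ACTIONS)
            next_state, reward, done = world_step(state, action)
            # No bootstrapping from the terminal (cheese) state.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate toward the observed reward plus discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """The learned rule: in each non-terminal state, pick the highest-valued action."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}


if __name__ == "__main__":
    print(greedy_policy(learn_from_experience()))
```

The discount factor is an added assumption. The slides state the goal as an undiscounted sum of expected future rewards.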

Distinction Relative to Planning

Pong setup: action = joystick command, observation = screen pixels, reward = game score.

In planning, the agent works out a strategy for selecting actions from a prior model:

– the agent is given a model of system dynamics in advance
– the agent is “told” which states/actions are rewarding or not
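For contrast, a planner can read the model directly instead of gathering experience. The sketch below uses value iteration. It is one standard planning method, and the slides do not name it. The toy corridor, the function names, and the discount factor `gamma` are illustrative assumptions.

```python
N_STATES = 5        # hypothetical corridor: positions 0..4, cheese at 4
ACTIONS = (-1, +1)  # move left, move right


def model(state, action):
    """Dynamics and reward, handed to the planner in advance."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def backup(state, action, value, gamma):
    """One-step lookahead through the known model."""
    next_state, reward = model(state, action)
    return reward + gamma * value[next_state]


def plan(gamma=0.9, sweeps=50):
    """Value iteration over the known model; no interaction with the world."""
    value = [0.0] * N_STATES  # the cheese state is terminal and keeps value 0
    for _ in range(sweeps):
        for s in range(N_STATES - 1):
            value[s] = max(backup(s, a, value, gamma) for a in ACTIONS)
    policy = {
        s: max(ACTIONS, key=lambda a: backup(s, a, value, gamma))
        for s in range(N_STATES - 1)
    }
    return value, policy


if __name__ == "__main__":
    print(plan())
```

The planner never takes a step in the world. Everything it needs comes from calling `model`.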

RL vs Planning

When to use RL:

– systems that are hard to model
– stochastic systems

When to use planning:

– when the system is easily modeled
– when the system is deterministic

Ultimately, RL and planning are closely related...
